Published online Oct 28, 2025. doi: 10.3748/wjg.v31.i40.111389

Revised: August 14, 2025

Accepted: September 24, 2025

Processing time: 120 Days and 20.9 Hours

Radical gastrectomy for gastric cancer demands meticulous pre-operative staging and real-time intra-operative guidance to optimise oncologic margins and mini

Core Tip: Artificial-intelligence models now stage gastric tumours with near-radiologist accuracy, while multimodal three-dimensional navigation fuses computed tomography, magnetic resonance imaging, ultrasound and fluorescence data to guide sub-millimeter resections. Integrating these tools into radical gastrectomy reduces margin positivity and tissue trauma, and real-time analytics predict complications and survival, enabling personalised follow-up. We dissect the algorithms, navigation hardware and validation studies underpinning this leap, outline ethical and economic hurdles, and map a translational roadmap that could make data-driven, image-guided gastrectomy the new standard of care.

- Citation: Miao YR, Wang Y, Shi L, Lv JT, Yang XJ. Challenges in clinical translation of artificial intelligence and real-time imaging navigation in radical gastrectomy. World J Gastroenterol 2025; 31(40): 111389

- URL: https://www.wjgnet.com/1007-9327/full/v31/i40/111389.htm

- DOI: https://dx.doi.org/10.3748/wjg.v31.i40.111389

Gastric cancer remains a significant global health burden, ranking among the most common malignancies and leading causes of cancer-related mortality worldwide[1]. Radical gastrectomy, particularly when combined with lymphadenectomy, remains the cornerstone of curative treatment[2]. However, the complexity of gastric cancer surgery arises from the necessity to perform precise maneuvers within complex anatomical structures, where it is essential to preserve vital blood vessels while ensuring comprehensive lymph node dissection. This imposes rigorous demands on the surgeon’s technical expertise and specialized knowledge. To enhance surgical outcomes, reduce complications, and improve patient survival and quality of life, the medical community continuously explores innovative technological adjuncts[3].

Within this context, the integration of artificial intelligence (AI) and real-time imaging navigation technologies has emerged as a transformative force in the advancement of precision medicine[4,5]. AI excels at processing and analyzing vast volumes of medical data, including imaging, pathology, and clinical records, to enable more accurate diagnoses, individualized treatment planning, and reliable prognostic predictions[3]. Machine learning and deep learning algorithms, in particular, offer the capacity to detect subtle patterns and variations imperceptible to the human eye, thereby enhancing decision-making across the surgical continuum[5,6].

In contrast, real-time imaging navigation systems focus on generating precise three-dimensional (3D) anatomical reconstructions through advanced imaging modalities, fundamentally reshaping the intraoperative visual field[7,8]. By synchronizing with surgical instruments and intraoperative camera feeds, these systems provide augmented visual guidance, thereby enhancing the precision of tissue dissection, lymph node clearance, and vascular preservation[9,10]. Therefore, while AI in surgery primarily emphasizes data analysis and decision support, real-time imaging navigation systems concentrate on immediate visual guidance and 3D reconstruction to facilitate accurate surgical execution.

Nevertheless, clinical translation of these technologies faces notable challenges[11], including data privacy, algorithmic bias, image resolution and real-time processing limitations, as well as debates over cost-effectiveness and feasibility[12]. Establishing standardized protocols and fostering multicenter collaborations are essential for validation across diverse populations and surgical settings[13]. In sum, while AI holds substantial promise for gastric cancer management, its application still demands further research and standardization[14].

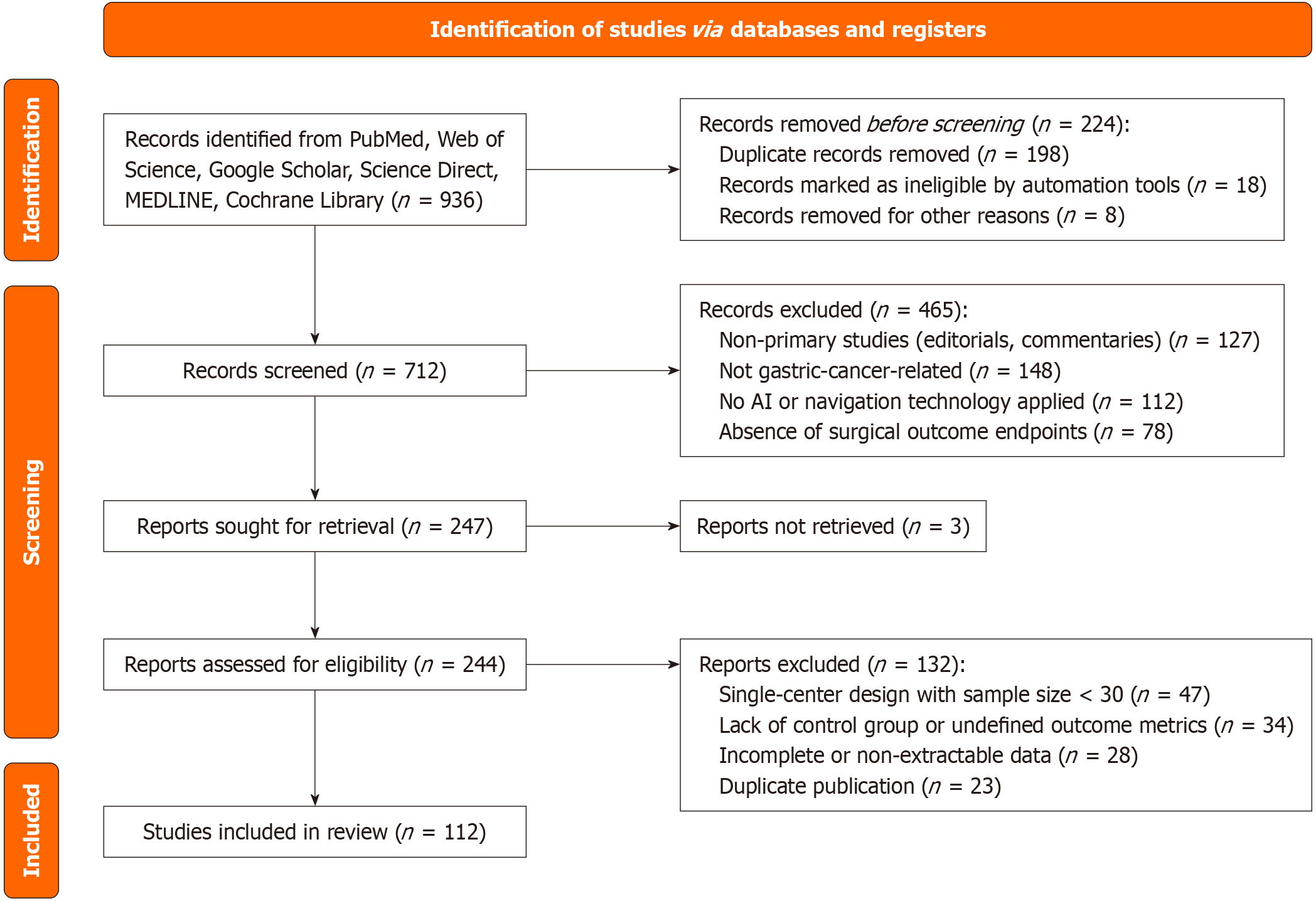

In accordance with the PRISMA 2020 guidelines, a systematic search was conducted in PubMed, Embase, Web of Science, CNKI, ClinicalTrials.gov, and ScienceDirect for relevant literature published up to May 2025. The search terms included “artificial intelligence”, “real-time imaging navigation”, “radical gastrectomy”, “gastric cancer”, “intraoperative guidance”, and “surgical outcomes”. No restrictions were applied regarding language or publication type. Original studies evaluating the clinical application of AI or navigation technologies in radical gastrectomy for gastric cancer were included, whereas editorials, letters, and non-clinical studies were excluded. The study selection process is presented in Figure 1.

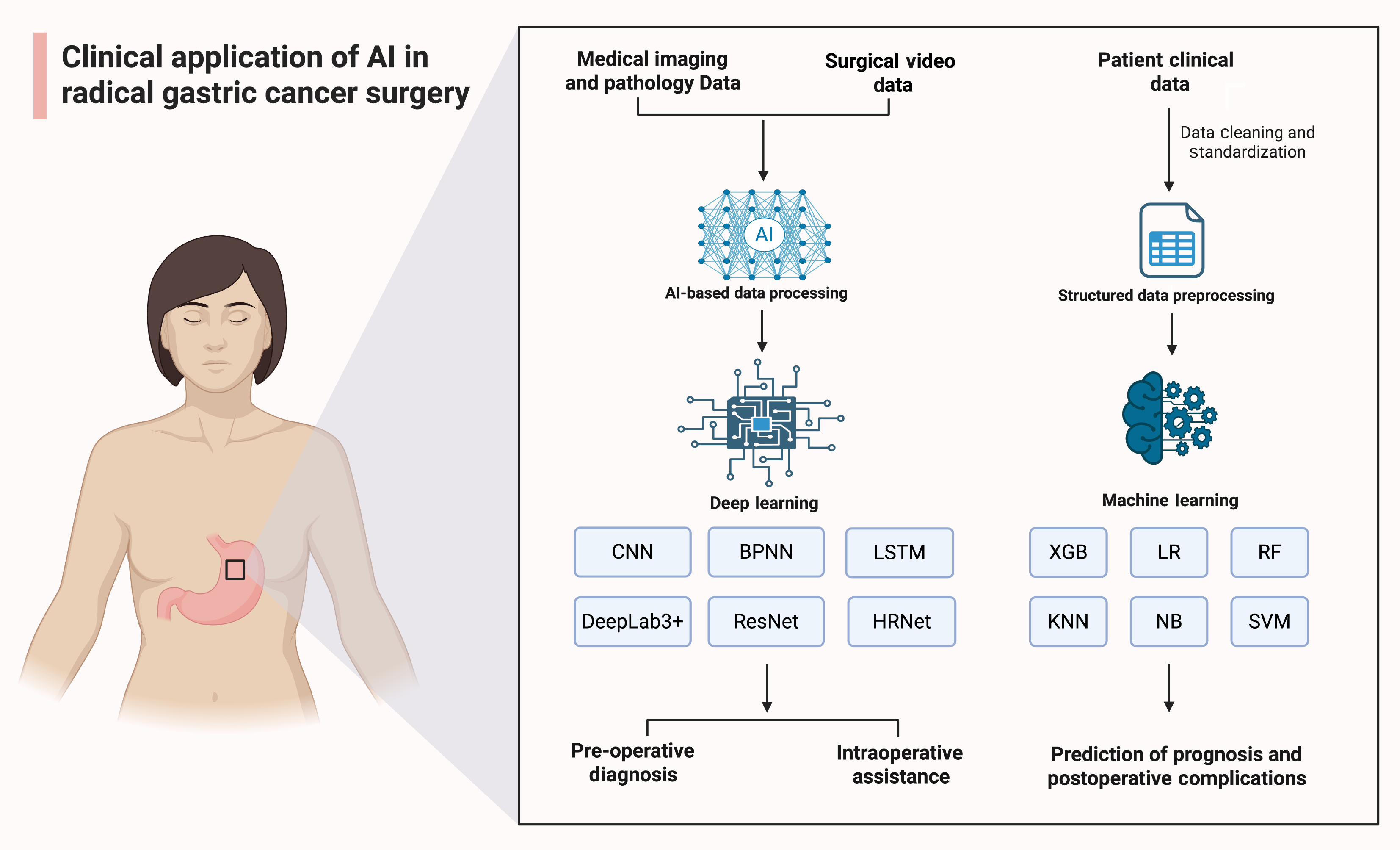

The concept of AI was first introduced at the Dartmouth Summer Research Project in 1956. Broadly defined, AI refers to the capacity of machines to learn from large datasets containing representative examples, identify patterns and relationships, and apply this knowledge to make decisions on unseen data[15]. The earliest explorations of AI in surgery date back to 1976, when Professor Gunn pioneered the use of computer analysis for diagnosing acute abdominal pain[15,16]. In recent years, the intersection of medicine and AI has witnessed rapid growth[17], coinciding with the increasing complexity of modern clinical problems that demand the acquisition, interpretation, and application of vast medical knowledge[18,19]. Radiomics, a vital subfield of medical AI, has rapidly evolved alongside advances in medical imaging and computational analysis. In oncology, radiomics enables the extraction of quantitative features from medical images, offering support for clinical decision-making and individualized therapy planning[20-22]. Moreover, machine learning, a pivotal subset of AI, has found extensive application within the medical domain. Algorithms such as random forest (RF), support vector machine, and artificial neural network (ANN) leverage clinical data to develop predictive models that facilitate disease diagnosis, differential diagnosis, and prognostic assessment (Figure 2)[23-26]. These emerging technologies present new opportunities for enhancing complex surgical procedures, such as radical gastrectomy. Specifically, AI-assisted real-time imaging navigation systems can assist surgeons in precisely identifying tumors while preserving vital vasculature and neural structures[14]. As AI continues to evolve, its applications in gastric cancer surgery are expected to broaden significantly[26].

Clinical practice of AI in preoperative diagnosis: Imaging diagnosis. Endoscopic examination remains the most commonly used method for early gastric cancer screening. However, it demands high technical expertise from the operator, requiring extended training periods and a steep learning curve. Recent studies have shown that AI can effectively address these challenges[27]. Initially, AI was primarily applied to endoscopic image recognition for gastric cancer diagnosis. As deep learning algorithms advanced, AI has moved beyond its initial exploratory phase, gradually maturing and expanding its application beyond medical image analysis to improve diagnostic accuracy[28].

In early gastric cancer diagnosis, Chen et al[28] analyzed 12 retrospective case-control studies (n = 11685) and found that AI demonstrated a combined sensitivity of 0.86 (95% confidence interval: 0.75-0.92) and specificity of 0.90 (95% confidence interval: 0.84-0.93) in diagnosing early gastric cancer, highlighting its substantial diagnostic value. Similarly, He et al[29] developed a deep learning system based on convolutional neural networks (CNN), named ENDOANGEL-ME, for diagnosing early gastric cancer in magnified image-enhanced endoscopic examination. Their multicenter diagnostic study revealed that ENDOANGEL-ME achieved an accuracy rate of 90.32%, significantly outperforming the diagnostic accuracy of experienced endoscopists (70.16% ± 8.78%). In 94 external videos, ENDOANGEL-ME enhanced the accuracy and sensitivity of endoscopists, with accuracy rising from 80.32% to 85.64% and sensitivity improving from 67.19% to 82.03%[29]. This not only improved the diagnostic efficiency of early gastric cancer but also helped alleviate the workload of endoscopists.

Digital pathology diagnosis. The definitive diagnosis of gastric cancer relies on pathological examination, which serves as the gold standard[30,31]. The tumor-related information and biomarkers contained in the pathological report are crucial for guiding subsequent patient treatment[32]. With advancements in AI, the integration of digital pathology and AI algorithms has significantly advanced gastric cancer pathological analysis[33]. Wang et al[34] applied a CNN algorithm to analyze hematoxylin and eosin-stained gastric cancer slides. Their CNN-based computer-aided diagnosis system achieved an area under the curve (AUC) of 0.89, sensitivity of 0.778, specificity of 0.995, and overall accuracy of 0.989. This system’s classifications were consistent with those of pathologists. Karakitsos et al[35] used backpropagation ANN to analyze gastric cancer slides stained with Papanicolaou and achieved an overall accuracy rate of 97.3%. They further compared two ANN approaches, backpropagation and learning vector quantization, based on nuclear mor

Overall, AI, through traditional machine learning and deep learning algorithms, particularly CNN, has become capable of processing multidimensional data, including clinical and imaging data (Figure 2). By reducing human error caused by individual experience discrepancies, AI improves the accuracy and consistency of both imaging and pathology diagnoses. Leveraging deep learning and machine learning technologies, AI has demonstrated high accuracy and diagnostic efficiency in both early gastric cancer imaging and pathology. This makes AI highly scalable in interactive diagnosis and pathology image analysis, providing objective evidence and assistance for personalized treatment decisions[3].

Clinical practice of AI in intraoperative assistance: In radical gastrectomy for gastric cancer, the application of AI is gradually becoming a key approach for improving surgical safety and enhancing long-term prognostic outcomes. Several studies have focused on developing AI-based intraoperative assistance systems to provide real-time guidance and decision support during surgery. Chen et al[39] developed a deep learning-based perigastric blood vessel recognition model. This model, built upon the DeepLabv3+ architecture with ResNet (residual network)-50 as the backbone network, was trained using surgical video data from laparoscopic radical gastrectomy with D2 lymphadenectomy in patients with locally advanced gastric cancer. The model demonstrated excellent real-time vascular recognition performance: for arteries, it achieved a precision of 0.9442, recall of 0.9099, intersection over union (IoU) of 0.8635, and F1 score of 0.9267; for veins, it achieved a precision of 0.9349, recall of 0.8491, IoU of 0.8015, and F1 score of 0.8897. Notably, the model maintained satisfactory performance in obese patients, with IoU and F1 scores both exceeding 0.5.
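
The four metrics quoted for the vessel recognition model are all derived from pixel-wise counts of true positives, false positives, and false negatives. The following is a minimal sketch of how they can be computed for one predicted mask against one annotated mask; it is not the evaluation code of Chen et al, and the function name `segmentation_metrics` and the toy masks are hypothetical illustrations.

```python
def segmentation_metrics(pred, truth):
    """Pixel-wise precision, recall, IoU and F1 for two binary masks.

    pred and truth are equally sized 2D lists of 0/1 values (hypothetical
    toy inputs; a real pipeline would use per-frame vessel masks).
    """
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1          # vessel pixel correctly predicted
            elif p and not t:
                fp += 1          # background predicted as vessel
            elif t and not p:
                fn += 1          # vessel pixel missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
    return {"precision": precision, "recall": recall, "iou": iou, "f1": f1}


# Toy example: 4 annotated vessel pixels, 4 predicted, 3 of them overlapping.
truth = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
pred = [
    [0, 1, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
metrics = segmentation_metrics(pred, truth)
print(metrics)
```

Because F1 is the harmonic mean of precision and recall, the reported arterial values can be cross-checked: 2 × 0.9442 × 0.9099 / (0.9442 + 0.9099) ≈ 0.9267, which matches the reported F1 score.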

In addition, Yoshida et al[40] combined CNN and long short-term memory networks to create an AI model for recognizing surgical steps during laparoscopic distal gastrectomy (LDG). This model demonstrated an overall accuracy rate of 88.8% in identifying surgical steps. Specifically, during the left gastric lymph node dissection and reconstruction phases, the high-score group showed significantly fewer frames than the low-score group (829 vs 1152, P < 0.01; 1208 vs 1586, P = 0.01). Additionally, the adequacy of the surgical field development score in the high-score group was significantly higher than that in the low-score group (0.975 vs 0.970, P = 0.04).

A critical challenge in gastric cancer treatment is reducing the incidence of postoperative pancreatic fistula (POPF) following radical gastrectomy. Recently, Nakamura et al[41] used the HRNet (high-resolution network) algorithm to develop an AI-based semantic segmentation system that addresses POPF by precisely identifying and highlighting the pancreas during robot-assisted radical gastrectomy. In the quantitative evaluation phase, based on 80 test images, the AI system achieved a precision of 0.70, recall of 0.59, IoU of 0.46, and a Dice coefficient of 0.61, indicating favorable performance in distinguishing the pancreas from surrounding adipose tissue. In the qualitative assessment, 10 surgeons rated the sensitivity of the AI system in recognizing pancreatic parenchyma, yielding an average score of 4.18 out of 5. These findings suggest that the surgical AI system can effectively highlight the pancreas, which may reduce misidentification and thereby lower the risk of POPF.

Clinical practice of AI in postoperative prognosis: Clinical practice of AI-driven complication prediction in radical gastrectomy for gastric cancer. In gastric cancer treatment, predicting postoperative complications, particularly those associated with laparoscopic radical gastrectomy, has been a major focus of clinical attention. AI algorithms have also shown significant potential in this area. In a study aimed at reducing gastric cancer morbidity and mortality, Huang et al[38] developed “MIL-GC”, a deep learning model based on multiple instance learning for predicting gastric cancer prognosis. The “MIL-GC” model achieved a concordance index for survival prediction of 0.671 in internal validation and 0.657 in external validation. Its risk scores were confirmed as strong predictors of overall survival in both univariate and multivariate analyses (hazard ratios of 2.414 and 1.803, respectively, both P < 0.05). This model can provide clinicians with accurate prognostic predictions to support the selection of appropriate treatment plans, thereby improving survival outcomes in gastric cancer patients. Similarly, Hong et al[42] employed AI algorithms including RF, extreme gradient boosting, and linear regression, integrating missing-data handling, variable selection, and hyperparameter tuning to optimize model performance (Figure 2).

The study’s findings demonstrate that the RF model attained an AUC of 0.8969 (P < 0.0001) in the training set and 0.7515 (P < 0.0001) in the validation set for predicting complications in laparoscopic total gastrectomy. For LDG, the model achieved an AUC of 0.8853 (P < 0.0001) in the training set and 0.9025 (P < 0.0001) in the validation set. In comparison, linear regression methods, such as LASSO regression, achieved validation-set AUC values of only 0.667 and 0.688 for laparoscopic total gastrectomy and LDG, respectively. This study highlights the advantage of machine learning models, particularly RF, in capturing complex clinical data features and predicting postoperative complications.
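
The AUC values reported above can be read as the probability that a randomly chosen patient who developed a complication receives a higher predicted risk than a randomly chosen patient who did not. The sketch below computes AUC directly from that pairwise definition; it is an illustration only, not the analysis pipeline of Hong et al, and the function name `roc_auc` and the toy labels and scores are hypothetical.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: 1 for a patient with a complication, 0 otherwise.
    scores: predicted risk from any model (higher means higher risk).
    Ties between a positive and a negative count as half a correct pair.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical toy cohort: 3 patients with complications, 4 without.
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.8, 0.3, 0.2, 0.4]
auc = roc_auc(labels, scores)
print(round(auc, 4))
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, which is why the training-set and validation-set values are usually reported side by side.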

Clinical practice of AI-driven lymph node metastasis (LNM) prediction in radical gastrectomy for gastric cancer. LNM also plays a critical role in the progression and prognosis of gastric cancer. The NCCN Clinical Practice Guidelines in Oncology emphasize the importance of imaging in postoperative follow-up for gastric cancer, particularly in assessing lymph node status during surveillance[43]. Currently, computed tomography (CT) and magnetic resonance imaging (MRI) are widely used in post-treatment evaluations for gastric cancer, and the integration of AI with radiomics has proven helpful in clinical decision-making. For patients with tubular gastric adenocarcinoma following radical gastrectomy, CT texture analysis can reveal potential features associated with lymphovascular invasion (LVI) and perineural invasion (PNI). Yardımcı et al[44] developed a machine learning-based CT texture analysis model to predict LVI and PNI in gastric cancer patients. In this study, 271 texture features were extracted from portal venous phase CT images, and dimensionality reduction combined with feature selection algorithms identified five features associated with LVI and PNI. The RF algorithm performed best in predicting LVI, achieving an AUC of 0.894 and an accuracy of 80.9%, while the Naive Bayes algorithm performed best in predicting PNI, with an AUC of 0.754 and an accuracy of 68.2%. These findings demonstrate the potential of CT texture analysis combined with machine learning in predicting LVI and PNI. Similarly, Li et al[45] built a nomogram model for predicting LNM in gastric cancer patients using dual-energy CT and integrated clinical data. The model achieved AUC values of 0.84 and 0.82 in the training and validation sets, respectively, highlighting dual-energy CT’s significant potential in predicting gastric cancer LNM.

Drawing from clinical case studies, AI application throughout the perioperative period of radical gastrectomy for gastric cancer has markedly advanced tumor resection precision and postoperative complication risk prediction[46], providing robust data-driven support for personalized treatment planning. These developments underscore the importance of understanding human-AI interaction and highlight the considerable potential of AI algorithms in this surgical domain. With ongoing technological progress and broader clinical integration, evidence strongly suggests that an AI-assisted era in gastric cancer treatment is rapidly emerging.

With continuous advancements in imaging technology, modalities such as CT and MRI have increasingly been applied in preoperative planning, providing more accurate anatomical information for radical gastrectomy. However, preoperative planning alone does not meet the demand for synchronous and precise intraoperative information, particularly in complex cases where precise surgical manipulation is challenging. The emergence of intraoperative real-time image-guided navigation technology effectively addresses these inherent limitations, significantly enhancing surgical precision and safety.

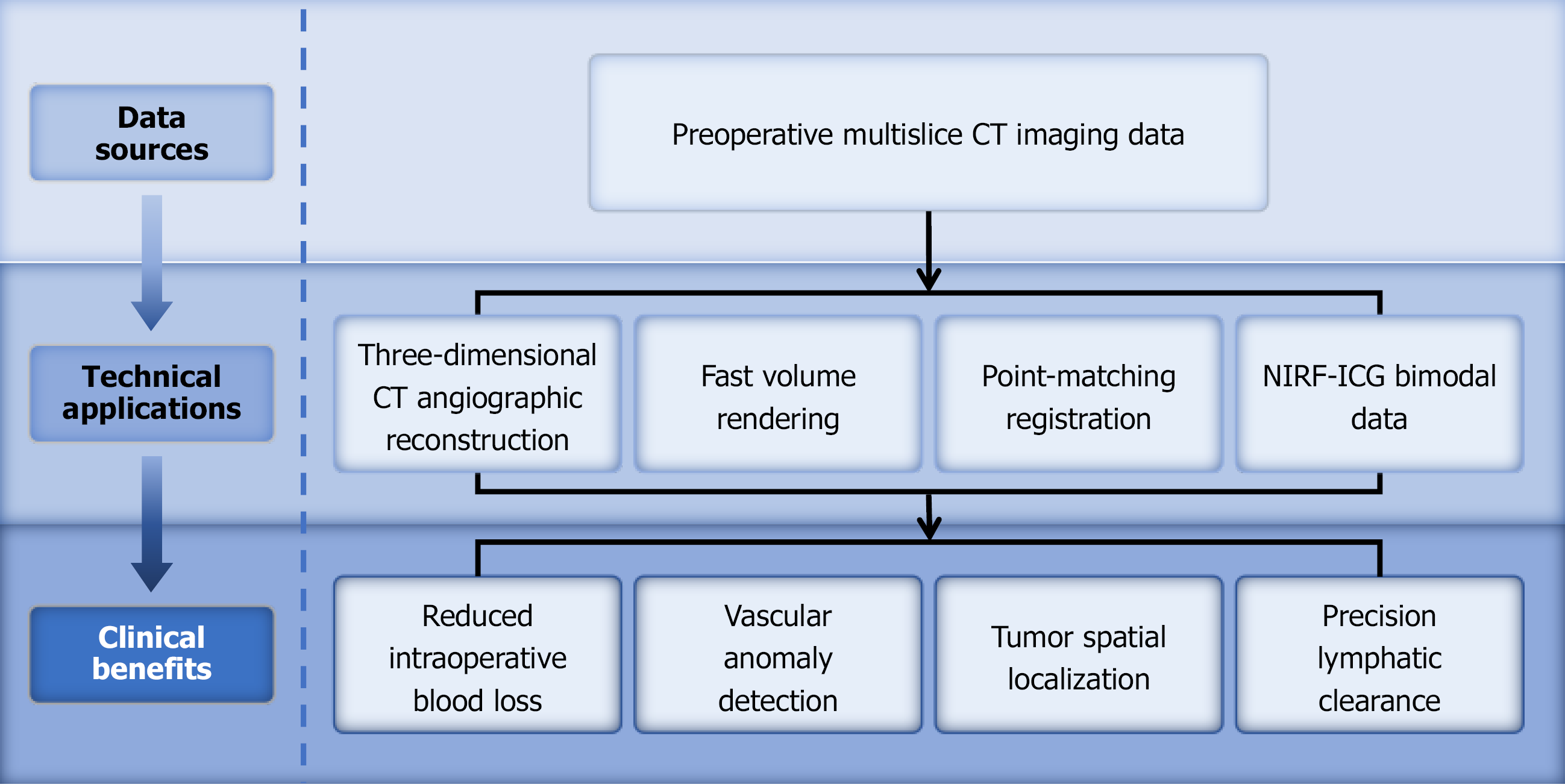

In the era of precise gastric cancer treatment, real-time image-guided surgery has gained increasing attention in the clinical practice of radical gastrectomy. The core principle of intraoperative real-time imaging navigation technology is based on the integration of various imaging techniques and sensors to obtain detailed anatomical data and to perform deep learning on preoperative medical imaging data (Figure 3). On one hand, through this multimodal fusion technology, the navigation system can construct a more accurate 3D model and register it with real-time intraoperative images, thereby integrating preoperative information with the intraoperative situation. This provides surgeons with a more intuitive surgical view[47], thereby enhancing the precision of the surgery[48]. On the other hand, the technology can display the relationship between surgical instruments and the anatomical structure in real time based on preoperative imaging[49]. By integrating modern molecular diagnostic techniques with imaging technology, it offers a more personalized surgical approach tailored to the patient’s individual condition, thereby further improving patient prognosis[50]. Simultaneously, the integration of real-time intraoperative imaging navigation technology with AI algorithms further enhances the surgeon’s ability to assess the patient’s anatomical structures, providing a more precise and personalized surgical plan for the patient[50]. In recent years, the integration and application of real-time imaging navigation technology in radical gastrectomy for gastric cancer have continually advanced. Multiple clinical validation studies related to real-time navigation have demonstrated that the system offers high feasibility and safety. The development of this technology will further propel radical gastrectomy for gastric cancer toward greater precision and safety.

Application value of 3D CT vascular reconstruction combined with real-time imaging navigation technology in laparoscopic radical gastrectomy: Although traditional laparoscopic radical gastrectomy has become the standard procedure, it still faces challenges such as vascular variations and difficulties in lymph node localization, especially in complex abdominal anatomical structures. These issues may lead to prolonged surgery time, increased blood loss, and higher postoperative complication risks. Jung et al[51] evaluated the clinical value of the RUS-GA navigation system in laparoscopic radical gastrectomy. During surgery, the system used an additional monitor to merge reconstructed vascular anatomy with real-time laparoscopic images, providing intuitive intraoperative navigation. The study included 38 patients in the navigation group and 114 in the non-navigation group, with propensity score matching applied to reduce bias. The results showed that the navigation group had a significantly lower overall complication rate (10.5% vs 26.3%, P = 0.043) and a lower incidence of Clavien-Dindo grade ≥ 2 complications (7.9% vs 24.6%, P = 0.027). No pancreas-related complications occurred in the navigation group, compared with 7.0% in the non-navigation group (P = 0.093).

Similarly, Takiguchi et al[52] synchronized real-time 3D CT reconstruction images with laparoscopic movements. By attaching a 3D motion sensor to the laparoscope to detect its angle, the system presented real-time 3D CT images synchronized with laparoscopic video. In a comparison of 10 laparoscopic surgeries with conventional procedures, the system significantly reduced intraoperative blood loss (84 ± 93 g vs 190 ± 248 g, P = 0.036), identified vascular anatomical variations (including four complex cases), and, through preoperative simulation, optimized laparoscope insertion site selection.

Hayashi et al[53] generated virtual laparoscopic views by segmenting preoperative arterial and portal venous phase enhanced CT images and applying rapid volume-rendering techniques. The team employed a Polaris Spectra optical tracking system to precisely capture laparoscope position and orientation, and used point-matching registration (Figure 3) to determine a coordinate transformation matrix based on body-surface markers and CT images. In a clinical application involving 23 laparoscopic gastrectomy patients, the navigation system achieved a mean fiducial registration error of 14.0 ± 5.6 mm (range: 6.1-29.8 mm). Despite this error, surgeons provided positive feedback, noting substantial advantages in identifying upper abdominal vascular branching patterns and anomalies, particularly for the left gastric artery, left gastric vein, right gastric artery, and left hepatic artery.
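
To make the fiducial registration error concrete, the sketch below maps CT marker coordinates into tracker space with a given 4 × 4 rigid transform and reports the root-mean-square residual against the tracked marker positions. Several points are assumptions rather than details from Hayashi et al: the root-mean-square definition of the error, the function names `apply_rigid` and `fiducial_registration_error`, and all coordinates. The sketch only evaluates a transform that is already known; estimating the transform from marker pairs (for example by a least-squares fit) is not shown.

```python
import math


def apply_rigid(transform, point):
    """Apply a 4x4 homogeneous transform (nested lists, row-major) to a 3D point."""
    x, y, z = point
    return tuple(
        transform[r][0] * x + transform[r][1] * y + transform[r][2] * z + transform[r][3]
        for r in range(3)
    )


def fiducial_registration_error(transform, ct_points, tracker_points):
    """RMS distance (same unit as the inputs) between tracked markers and
    the CT markers after mapping them with the transform."""
    if len(ct_points) != len(tracker_points) or not ct_points:
        raise ValueError("need matching, non-empty fiducial lists")
    squared = 0.0
    for ct, tracked in zip(ct_points, tracker_points):
        mapped = apply_rigid(transform, ct)
        squared += sum((m - t) ** 2 for m, t in zip(mapped, tracked))
    return math.sqrt(squared / len(ct_points))


# Hypothetical transform: 90-degree rotation about z plus a translation (mm).
ct_to_tracker = [
    [0.0, -1.0, 0.0, 10.0],
    [1.0, 0.0, 0.0, 20.0],
    [0.0, 0.0, 1.0, 30.0],
    [0.0, 0.0, 0.0, 1.0],
]
# Hypothetical body-surface markers in CT coordinates (mm).
ct_markers = [(0.0, 0.0, 0.0), (100.0, 0.0, 0.0), (0.0, 100.0, 0.0), (0.0, 0.0, 100.0)]
# Tracked positions: two markers are displaced by 3 mm and 4 mm respectively.
tracked_markers = [
    (10.0, 20.0, 30.0),
    (10.0, 123.0, 30.0),
    (-90.0, 20.0, 34.0),
    (10.0, 20.0, 130.0),
]
fre = fiducial_registration_error(ct_to_tracker, ct_markers, tracked_markers)
print(fre)
```

Body-surface markers move with respiration and pneumoperitoneum, so a residual measured at the markers does not by itself describe the error at deep targets such as the perigastric vessels.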

Likewise, Takiguchi et al[54] introduced two preoperatively reconstructed 3D CT vascular imaging approaches: Standard laparoscopic view 3D vascular imaging (LapView 3D CT vascular imaging) and a fusion of pancreatic body imaging with LapView 3D CT vascular imaging, offering more accurate anatomical references for intraoperative navigation. In a sample of 10 patients, LapView 3D CT vascular imaging successfully and clearly visualized all critical vessels involved in laparoscopic lymph node dissection, preventing misinterpretation of vascular anatomy. A retrospective comparative analysis between the navigation system and conventional methods in laparoscopic-assisted distal gastrectomy and laparoscopic-assisted pylorus-preserving gastrectomy showed that navigation reduced operative time (230.6 ± 40.2 minutes vs 263.3 ± 35.8 minutes) and intraoperative blood loss (112.9 ± 60.2 g vs 247.5 ± 113.1 g, P < 0.03)[54]. These findings support the efficacy of intraoperative navigation systems in enhancing surgical safety and minimizing blood loss (Figure 3), providing technical and clinical evidence for precision navigation in laparoscopic gastric cancer surgery.

Application value of optical molecular imaging combined with real-time imaging navigation technology in laparoscopic radical gastrectomy: Early gastric cancer typically has a shallow invasion depth and small size, making it difficult to determine the resection line during laparoscopic gastrectomy due to the lack of tactile feedback[55]. Therefore, intraoperative tumor localization is crucial for ensuring an adequate safety margin[56]. Advances in optical molecular imaging technology have significantly enhanced the capabilities of real-time imaging navigation systems, accelerating the realization of precision surgery. This technology combines personalized molecular data with traditional anatomical imaging data, providing more precise diagnostic and therapeutic options for radical gastrectomy.

Recent studies have shown that the use of indocyanine green (ICG) and near-infrared fluorescence (NIRF) imaging technologies can significantly improve tumor localization and lymph node detection during gastric cancer surgery. This method not only provides real-time visualization of the anatomical structure in the surgical area but also helps surgeons perform precise lymph node dissection and blood flow assessment during surgery (Figure 3). NIRF imaging, particularly when combined with the application of ICG fluorescent tracers, has become a highly promising adjunct in laparoscopic radical gastrectomy for gastric cancer[57,58]. This technique allows ICG to clearly highlight specific anatomical structures under near-infrared light, providing crucial real-time navigation information to surgeons, thereby enhancing the precision and safety of the procedure[59]. For tumor localization during radical gastrectomy, ICG solution is injected into the submucosal layer around the tumor during endoscopy, clearly displaying the tumor’s position and boundaries. This aids in determining the resection range[56,60], particularly for early gastric cancer surgeries, where unnecessary tissue removal can be avoided, leading to more precise, personalized operations[61]. Ushimaru et al[56] investigated the feasibility and safety of ICG fluorescence marking in laparoscopic gastric cancer surgery. Their findings showed that the ICG group had shorter operative times (P < 0.001), lower estimated blood loss (P = 0.005), and significantly reduced postoperative hospital stays (P < 0.001). Positive resection margins occurred in five cases (6.0%) in the non-ICG group, whereas none were observed in the ICG group (P = 0.008). This indicates that ICG fluorescence labeling is safe and feasible, and can assist surgeons in more accurately identifying tumor locations during laparoscopic surgery, serving as a valuable navigation tool.

Regarding sentinel lymph node detection, ICG fluorescence tracers can accurately identify sentinel lymph nodes, achieving a specificity of 100% and an accuracy of 98%. As the technology develops, its sensitivity is also improving, aiding in the assessment of LNM and providing guidance for subsequent lymph node dissection. This reduces unnecessary lymph node dissection, minimizing surgical trauma[62]. In determining the extent of lymph node dissection, preoperative or intraoperative injection of ICG allows for real-time assessment of lymphatic drainage pathways, enabling precise identification of the dissection boundaries. This approach increases the number of lymph nodes harvested while reducing the risk of residual lymph nodes. A prospective study involving 133 patients undergoing ICG NIRF-guided laparoscopic gastrectomy showed that the ICG NIRF technique increased the number of lymph nodes harvested and significantly reduced lymph node noncompliance (31.8% vs 57.4% in the non-ICG NIRF group) without increasing complications[62].

Fusing real-time imaging navigation with optical molecular imaging technologies, such as NIRF imaging combined with ICG fluorescence tracers, yields significant benefits in tumor localization and in defining resection margins during surgery, further improving the precision and safety of the procedure[63-66]. The superior tissue penetration of near-infrared light, coupled with high-brightness aggregation-induced emission luminogens (AIEgens), ensures precise visualization of deep tissue structures, further enhancing the accuracy of surgical navigation[67]. Fluorescence tracers like ICG have already been widely used to assess gastric tissue perfusion and lymphatic drainage, providing valuable guidance for intraoperative decision-making[64,68,69].

In summary, real-time imaging navigation technology has revolutionized radical gastrectomy for gastric cancer. Imaging techniques such as preoperative CT and MRI and intraoperative ultrasound, combined with deep learning algorithms, construct individualized 3D visualization models to achieve precise intraoperative registration[52,70]. This not only provides surgeons with augmented reality (AR) surgical views but also significantly enhances the identification of complex anatomical structures and facilitates the formulation of personalized surgical plans. On the clinical application front, systems like the RUS-GA navigation system, which utilizes AI-driven 3D abdominal model reconstruction for patient-specific navigation, have proven effective in reducing the incidence of complications, particularly postoperative pancreatic complications[63,70]. Additionally, the integration of 3D CT vascular reconstruction with real-time imaging navigation enhances the visualization of key anatomical landmarks, helping to reduce intraoperative blood loss and improve surgical safety[52]. The integrated application of these technologies has significantly enhanced the precision and safety of radical gastrectomy for gastric cancer, signaling a shift toward a more personalized and intelligent approach to gastric cancer surgery[71-74]. This advancement offers new therapeutic prospects and hope for improving the prognosis of gastric cancer patients.

The application of AI in the medical field is advancing rapidly, particularly in enhancing surgical precision and safety, which has had a significant impact on gastric cancer treatment. However, these advancements also raise substantial challenges beyond the technical domain, involving deeper issues of medical ethics, patient rights, and social equity. First, the progress of AI systems depends on training and learning from large volumes of patient data, which often contain sensitive personal information and health records[75,76]. Therefore, ensuring the security of such data during collection, storage, and utilization is a critical issue that must be addressed[75,76]. Data breaches or unauthorized access could seriously infringe upon patient privacy and adversely affect their lives[77].

To address this concern, some institutions have proposed solutions such as federated learning and blockchain technology[78,79], which enable data sharing and processing while preserving privacy. Federated learning distributes computation tasks across multiple devices, thereby avoiding centralized storage of patient data and greatly reducing the risk of privacy leaks. Blockchain technology, on the other hand, offers a transparent, secure, and tamper-proof platform for data exchange, strengthening data integrity and privacy protection[79].
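
The idea that only model parameters, not patient records, leave each institution can be sketched with federated averaging, one common federated learning scheme; the cited work may use a different protocol. In the toy example below, two hypothetical hospitals each fit a one-variable linear model on their own data, and a coordinator averages the resulting weights in proportion to sample size. The function names, the data, and the learning settings are all illustrative assumptions.

```python
def local_update(weights, data, lr=0.1, epochs=50):
    """Run gradient descent for y = w*x + b on one site's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(site_weights, site_sizes):
    """Average the site models, weighting each by its number of samples."""
    total = sum(site_sizes)
    w = sum(sw[0] * n for sw, n in zip(site_weights, site_sizes)) / total
    b = sum(sw[1] * n for sw, n in zip(site_weights, site_sizes)) / total
    return (w, b)


def train_federated(sites, rounds=20):
    """Coordinator loop: only weights travel; raw records stay at each site."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Hypothetical private datasets, both generated from y = 2x + 1.
hospital_a = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
hospital_b = [(0.5, 2.0), (1.5, 4.0)]
w, b = train_federated([hospital_a, hospital_b])
print(round(w, 6), round(b, 6))
```

Sharing weights instead of records reduces exposure but does not remove it entirely, since model updates can still leak information about the training data; this is one reason federated learning is often paired with additional safeguards.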

Data security and privacy must be prioritized as AI becomes integrated into healthcare[75]. Unauthorized access to sensitive training datasets can result in de-anonymization and re-identification of individuals[80]. Therefore, AI applications in healthcare must comply with established data protection and privacy laws[81]. For example, the European Union’s General Data Protection Regulation and other frameworks have set clear guidelines to address these challenges[82,83].

However, regulatory requirements for data privacy differ significantly across regions. For instance, the General Data Protection Regulation in the European Union and the Health Insurance Portability and Accountability Act in the United States stipulate different standards for informed consent[84]. This variability underscores the importance of international collaboration and technological innovation to achieve standardization across jurisdictions. The adoption of unified digital tools and platforms can help harmonize informed consent requirements across regulatory regions, ensuring that patients receive equal and transparent treatment worldwide. When AI systems process and analyze data, informed consent becomes particularly critical[85]. Patients should be fully informed about how their data will be used by AI, along with the potential risks and benefits, and should have the right to decide whether to provide consent[81]. Ensuring informed consent not only protects patient autonomy but also builds trust in AI applications in healthcare[86,87].

Furthermore, translating AI into clinical practice raises ethical questions about its clinical acceptance, particularly the trust barriers that surgeons may encounter with AI-driven decision-making. Despite the potential benefits of AI in diagnosis and treatment, surgeons remain cautious about its practical application. An online survey of German surgeons[88] found that while 71% of respondents believed AI could help manage workloads, only 32% thought it could prevent unnecessary treatments. In addition, 81% expressed concerns that AI might erode the clinical decision-making skills of doctors, 52% worried about AI’s transparency, 45% feared issues related to patient trust, and 44% were concerned about physician integrity. This lack of trust stems from the complexity and opacity of AI algorithms, which make it difficult for surgeons to understand how AI decisions are reached and raise concerns about reliability. Moreover, medical decision-making directly concerns patient life and health. Surgeons typically rely on their professional judgment and experience, yet when AI systems make errors, the absence of a clear accountability mechanism can trigger medical disputes, ultimately undermining the trust between patients and physicians. It is also noteworthy that, although AI can align with core medical ethical values, its reliance on existing human experience can introduce bias. Such bias may influence clinical decision-making at multiple stages of the AI lifecycle, potentially exacerbating healthcare disparities[89].

To avoid decision biases during AI training, training data should be neither too limited nor unrepresentative, because skewed data could lead to inaccurate diagnostic or treatment recommendations for certain populations and thus undermine fairness in healthcare. For instance, if an AI prediction model suggests different diagnoses or treatments based on gender, race, or other factors without clear justification, it may impede clinical decision-making[90]. Furthermore, the regulatory and policy framework for AI in healthcare remains at an early stage and lacks unified quality control and evaluation standards. Therefore, it is particularly important to establish an AI application framework grounded in ethical values to guide its responsible and sustainable development[90,91].
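One simple way to look for this kind of bias is to compare a model's sensitivity across patient subgroups. The sketch below is a minimal illustration under assumed inputs: the labels, predictions, and group codes are invented, and the helper names `sensitivity_by_group` and `sensitivity_gap` are hypothetical rather than part of any cited tool.

```python
from collections import defaultdict

def sensitivity_by_group(y_true, y_pred, groups):
    """Return the true-positive rate (sensitivity) of a binary classifier per subgroup."""
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}

def sensitivity_gap(rates):
    """Largest difference in sensitivity between any two subgroups."""
    return max(rates.values()) - min(rates.values())

# Hypothetical labels: 1 = disease present (y_true) or predicted (y_pred).
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = sensitivity_by_group(y_true, y_pred, groups)
gap = sensitivity_gap(rates)
```

Here group A has a sensitivity of 0.75 and group B of 0.5, a gap of 0.25. A gap of that size would be a prompt to examine how each subgroup is represented in the training data, not proof of bias on its own.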

The rapid development of AI often outpaces existing policy and ethical guidelines, making it crucial to address these ethical concerns early in the technology’s application. These discussions not only support the healthy development of the technology but also uphold medical ethics and social equity. As such, AI’s ethical framework must include considerations of transparency, accountability, and fairness[92], and healthcare professionals must receive specialized training to address the ethical challenges posed by the increasing use of AI[93].

Although real-time imaging navigation technology has shown increasing potential in clinical applications, it is important to recognize and objectively assess its current technical limitations, particularly in the context of radical gastrectomy. First, while real-time imaging navigation systems can provide images synchronized with laparoscopic movements, their image resolution and refresh rates may be constrained by hardware limitations[71]. In complex surgical environments under constrained conditions, real-time navigation systems may struggle to capture the dynamic changes of small lesions or lymph node metastases. For example, some fluorescence imaging systems may suffer from low signal-to-noise ratios in low-light environments, which can obscure the borders of early-stage cancerous lesions, thus affecting the thoroughness of lymph node dissection[67]. Furthermore, in radical gastrectomy, when tumors are closely connected to surrounding critical blood vessels, nerves, and other structures, navigation systems may have difficulty accurately identifying and synchronizing these structures[71]. Additionally, the use of navigation systems requires extra equipment and technical support, which may increase the complexity and cost of the surgery[53].

Another limitation is that most current real-time navigation systems rely on preoperative imaging data. However, physiological changes during surgery may result in discrepancies between the preoperative plan and the actual intraoperative situation[49]. Intraoperative changes, such as tissue deformation and bleeding, may affect the accuracy of navigation systems, leading to deviations between the navigation images and actual anatomical structures. Based on current technologies, if the data fusion algorithm of the navigation system fails to calibrate the displacement deviation between preoperative imaging and intraoperative anatomical structures in real-time, it may lead to marker misalignment, thereby increasing the risk of surgical errors. For instance, factors like pneumoperitoneum and organ displacement can cause the preoperatively constructed 3D model to differ from the actual anatomy, impacting navigation accuracy[49]. Therefore, real-time updating of navigation information to accommodate these changes remains a significant challenge in the current technology. Moreover, real-time navigation systems require surgeons to have a certain level of technical proficiency and experience. Surgeons unfamiliar with the system may need additional training to become proficient in operating these real-time navigation systems during surgery. Despite its potential advantages, real-time navigation technology still faces technical limitations that must be addressed in clinical practice[71].
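The deviation between a preoperative model and the intraoperative anatomy can be expressed as a residual distance after landmark-based rigid registration. The sketch below uses the Kabsch least-squares method as a stand-in; it is not the algorithm of any cited navigation system, and the fiducial coordinates, the 2 mm deformation, and the function names are assumptions for illustration.

```python
import numpy as np

def rigid_register(source, target):
    """Least-squares rigid transform (Kabsch method) mapping source landmarks onto target."""
    src_c, tgt_c = source.mean(axis=0), target.mean(axis=0)
    H = (source - src_c).T @ (target - tgt_c)      # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))         # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t

def registration_error(R, t, points_pre, points_intra):
    """Per-point distance between mapped preoperative points and intraoperative points."""
    mapped = points_pre @ R.T + t
    return np.linalg.norm(mapped - points_intra, axis=1)

# Hypothetical setup: the patient pose differs by a 10 degree rotation and a shift (mm).
theta = np.deg2rad(10.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -3.0, 2.0])
fiducials_pre = np.array([[0, 0, 0], [50, 0, 0], [0, 40, 0], [0, 0, 30]], dtype=float)
fiducials_intra = fiducials_pre @ R_true.T + t_true

R, t = rigid_register(fiducials_pre, fiducials_intra)

# A target that additionally moved 2 mm through local tissue deformation.
target_pre = np.array([[20.0, 15.0, 10.0]])
target_intra = target_pre @ R_true.T + t_true + np.array([0.0, 0.0, 2.0])
tre = registration_error(R, t, target_pre, target_intra)
```

The rigid fit recovers the overall pose from the fiducials, but the 2 mm local deformation remains as residual error at the target. This is the kind of mismatch that pneumoperitoneum and organ displacement can introduce, and it is why rigid alignment to preoperative images alone may not be enough.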

The cost-effectiveness of AI systems and real-time imaging navigation technologies in radical gastrectomy has been a topic of widespread debate. Integrating these technologies into various aspects of gastric cancer surgery entails significant financial investment for healthcare institutions[94]. This investment not only covers advanced equipment required for acquiring high-quality, real-time imaging data, but also includes the development of sophisticated software and the subsequent costs of data integration and maintenance. These software systems must undergo rigorous clinical validation to meet the high-precision requirements of surgical navigation. Additionally, staff training constitutes a significant component of the overall cost structure, as medical personnel must receive dedicated instruction to ensure they can accurately apply AI-based recommendations during surgical procedures. However, it remains unclear whether these high costs can be justified by the clinical benefits they offer[95]. Furthermore, disparities in technological capabilities and equipment between medical institutions exacerbate the complexity of AI implementation. In some primary healthcare institutions, the lack of specialized personnel to operate and maintain these systems directly limits the widespread adoption and application of AI technologies.

From the perspective of cost-related disputes, the high costs of AI and real-time imaging navigation technology may pose significant barriers to adoption for some medical institutions. On the one hand, the substantial expenses associated with equipment procurement, system licensing fees, and ongoing maintenance may impose a significant financial burden on small- and medium-sized medical institutions, thereby limiting the broader adoption of such technologies. On the other hand, from a long-term perspective, AI technologies have the potential to progressively reduce healthcare costs by improving surgical precision, lowering postoperative complication rates, and shortening hospital stays[96,97]. However, the key issue remains how to seamlessly integrate these technologies into existing healthcare workflows so that they improve efficiency rather than become an additional burden. This requires collaboration among medical technology developers, healthcare administrators, and clinicians to explore and develop effective solutions. The integration of AI with real-time imaging navigation systems necessitates not only the resolution of technical challenges but also a comprehensive consideration of the ethical, legal, and social implications associated with their implementation[81]. Only through collaborative efforts and the development of appropriate regulations and standards can these emerging technologies be successfully applied in healthcare to improve efficiency and service quality.

To better evaluate the cost-effectiveness of these technologies, it is advisable to conduct a more detailed cost-benefit analysis comparing traditional surgical methods with AI- and navigation-assisted surgeries, with particular attention to the potential long-term savings in healthcare expenditures, such as reductions in postoperative complications and shorter hospital stays. In addition, exploring potential reimbursement models or funding mechanisms could help medical institutions in resource-limited settings overcome the substantial initial investment. Only through well-designed policy support and standardized measures can AI and real-time imaging navigation technologies be successfully integrated into the healthcare system, thereby enhancing the quality and efficiency of medical services.
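A cost-benefit comparison of this kind is often summarized with an incremental cost-effectiveness ratio (the extra cost divided by the extra health effect) and a break-even case count for the upfront investment. The sketch below shows both calculations; every figure in it is a placeholder chosen for easy arithmetic, not data from the cited studies, and the function names are hypothetical.

```python
import math

def icer(cost_new, cost_std, effect_new, effect_std):
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect."""
    delta_effect = effect_new - effect_std
    if delta_effect == 0:
        raise ValueError("Effects are equal; the ratio is undefined.")
    return (cost_new - cost_std) / delta_effect

def break_even_cases(upfront_cost, saving_per_case):
    """Number of operations needed before per-case savings cover the upfront investment."""
    if saving_per_case <= 0:
        return math.inf
    return math.ceil(upfront_cost / saving_per_case)

# Placeholder figures for illustration only (arbitrary currency units; effect in
# quality-adjusted life years).
ratio = icer(cost_new=12000.0, cost_std=10000.0, effect_new=5.5, effect_std=5.0)
cases = break_even_cases(upfront_cost=500000.0, saving_per_case=800.0)
```

With these placeholders the ratio is 4000 currency units per additional unit of effect, and the investment is recovered after 625 cases. Real analyses would replace the inputs with trial or registry data and would normally add discounting and sensitivity analysis.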

Based on existing research, most clinical studies on gastrointestinal tumors have focused on the independent validation of either AI-assisted technologies or real-time navigation systems. However, with continuous technological advancements, AI’s capabilities in data integration and predictive modeling are expected to merge with real-time navigation systems. To enhance navigation performance and lower technical barriers, the integration of 5G edge computing with AI chips is emerging as a critical trend[98-100]. This combination significantly accelerates image processing speed, reduces data transmission latency, and optimizes real-time responsiveness, which is particularly important for latency-sensitive AR and virtual reality (VR) applications[101,102]. Meanwhile, AI chips, by providing powerful computing capacity, support the execution of complex image processing algorithms and AI models, thereby improving the accuracy and efficiency of intraoperative navigation[103].

Currently, navigation systems integrated with VR and AR technologies have demonstrated great potential in both preoperative simulation and intraoperative assistance for complex surgeries[104]. For example, VR simulators offer surgeons a highly immersive training environment, significantly shortening the learning curve and enhancing surgical skills and precision[105,106]. Existing studies have already optimized neurosurgical navigation systems through AI algorithms to improve precision and safety in brain tumor resections[107]. The integration of real-time navigation with AI-derived anatomical information is expected to further enhance surgical safety. For instance, in breast-conserving surgery, combining real-time 3D VR navigation with open MRI technology has yielded encouraging results in ensuring safe surgical margins[108].

Looking ahead, as these technologies evolve and integrate, they are expected to play an increasingly important role in all stages of gastric cancer treatment. Preoperatively, AI can accurately assess tumor location, size, shape, and its relationship with surrounding tissues based on multimodal imaging, while real-time navigation can generate detailed 3D models to support surgical planning. Intraoperatively, AI algorithms can process real-time images to identify anatomical structures and tumor boundaries, while the navigation system displays the position of surgical instruments, aiding precise tumor resection and preservation of critical tissues. Postoperatively, AI can analyze intraoperative imaging and data to optimize future surgical decisions and, together with navigation technology, monitor patient recovery, enabling early detection of abnormalities. The integration of preoperative imaging with intraoperative real-time data, coupled with deep learning optimization, holds the potential to automate steps in gastrectomy, thereby further improving surgical efficiency and quality.

It is worth noting that, to ensure the safe and effective adoption of these advanced technologies in radical gastrectomy, a unified certification framework for navigation system operational competence must be established. Therefore, it is recommended that industry associations take the lead in developing phased training protocols, incorporating eye-tracking and AI assessment tools[109] to objectively and quantitatively evaluate surgeons’ operational skills, ensuring both technology adoption and clinical safety. To address current technical limitations, such as image resolution and refresh rates, the integration of 5G and edge computing offers a promising solution. These emerging technologies can help flatten surgeons’ learning curves, while simulators can further standardize their proficiency in surgical navigation.

Multicenter collaboration has already made significant progress in the diagnosis and treatment of gastric cancer, but challenges remain. Clinical data sharing and integration serve as key driving forces, with centers actively collecting, organizing, and sharing clinical data to build comprehensive databases that encompass diverse regions, ethnicities, and disease characteristics[110]. This helps enhance the generalization and predictive accuracy of AI models. Additionally, multicenter collaboration promotes the standardization and normalization of technology, as centers jointly develop unified data collection, labeling, and analysis protocols and quality control systems to ensure the comparability and reliability of research results[111]. Despite the promising results of AI and real-time navigation technology in assisting radical gastrectomy, current research remains largely prospective and may suffer from sample size limitations, potentially leading to biased predictions. Consequently, the integration of AI technology with real-time imaging navi

The integration of AI and radiomics has markedly improved the preoperative diagnostic accuracy of gastrectomy. Deep learning enhances recognition of surgical steps, vascular structures, and adjacent organs, while machine learning supports postoperative outcome monitoring, survival prediction, and complication prevention. Real-time navigation, multimodal imaging fusion, AR, and fluorescence imaging strengthen intraoperative precision and safety. Clinical adoption should follow the principle of “empowerment rather than replacement”, fostering human-machine collaboration to combine clinical expertise with AI capabilities.

Future priorities include conducting randomized controlled trials to validate AI performance, standardizing algorithms for global use, and incorporating patient-reported outcomes to assess long-term impacts on quality of life. Challenges in data quality, transparency, and clinical adaptability require integrated AI-human decision-making frameworks to safeguard scientific rigor and ethics. Addressing global disparities in access, infrastructure, and training is essential for equitable implementation. Through interdisciplinary collaboration and innovation, these technologies can drive the intelligent transformation of gastrectomy, advancing precision medicine and delivering safer, more personalized care.

| 1. | Thrift AP, Wenker TN, El-Serag HB. Global burden of gastric cancer: epidemiological trends, risk factors, screening and prevention. Nat Rev Clin Oncol. 2023;20:338-349. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 484] [Reference Citation Analysis (1)] |

| 2. | Rosa F, Schena CA, Laterza V, Quero G, Fiorillo C, Strippoli A, Pozzo C, Papa V, Alfieri S. The Role of Surgery in the Management of Gastric Cancer: State of the Art. Cancers (Basel). 2022;14:5542. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 13] [Reference Citation Analysis (0)] |

| 3. | Wang Z, Liu Y, Niu X. Application of artificial intelligence for improving early detection and prediction of therapeutic outcomes for gastric cancer in the era of precision oncology. Semin Cancer Biol. 2023;93:83-96. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 8] [Cited by in RCA: 45] [Article Influence: 15.0] [Reference Citation Analysis (0)] |

| 4. | Li R, Li J, Wang Y, Liu X, Xu W, Sun R, Xue B, Zhang X, Ai Y, Du Y, Jiang J. The artificial intelligence revolution in gastric cancer management: clinical applications. Cancer Cell Int. 2025;25:111. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 7] [Reference Citation Analysis (0)] |

| 5. | Shastry KA, Sanjay HA. Cancer diagnosis using artificial intelligence: a review. Artif Intell Rev. 2022;55:2641-2673. [RCA] [DOI] [Full Text] [Cited by in Crossref: 3] [Cited by in RCA: 11] [Article Influence: 2.2] [Reference Citation Analysis (0)] |

| 6. | Jin P, Ji X, Kang W, Li Y, Liu H, Ma F, Ma S, Hu H, Li W, Tian Y. Artificial intelligence in gastric cancer: a systematic review. J Cancer Res Clin Oncol. 2020;146:2339-2350. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 85] [Cited by in RCA: 64] [Article Influence: 10.7] [Reference Citation Analysis (0)] |

| 7. | Vicini S, Bortolotto C, Rengo M, Ballerini D, Bellini D, Carbone I, Preda L, Laghi A, Coppola F, Faggioni L. A narrative review on current imaging applications of artificial intelligence and radiomics in oncology: focus on the three most common cancers. Radiol Med. 2022;127:819-836. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 63] [Reference Citation Analysis (0)] |

| 8. | Aromiwura AA, Settle T, Umer M, Joshi J, Shotwell M, Mattumpuram J, Vorla M, Sztukowska M, Contractor S, Amini A, Kalra DK. Artificial intelligence in cardiac computed tomography. Prog Cardiovasc Dis. 2023;81:54-77. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 14] [Cited by in RCA: 14] [Article Influence: 4.7] [Reference Citation Analysis (0)] |

| 9. | Knudsen JE, Ghaffar U, Ma R, Hung AJ. Clinical applications of artificial intelligence in robotic surgery. J Robot Surg. 2024;18:102. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 31] [Cited by in RCA: 92] [Article Influence: 46.0] [Reference Citation Analysis (0)] |

| 10. | Zhang C, Hallbeck MS, Salehinejad H, Thiels C. The integration of artificial intelligence in robotic surgery: A narrative review. Surgery. 2024;176:552-557. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 4] [Cited by in RCA: 15] [Article Influence: 7.5] [Reference Citation Analysis (0)] |

| 11. | Cao R, Tang L, Fang M, Zhong L, Wang S, Gong L, Li J, Dong D, Tian J. Artificial intelligence in gastric cancer: applications and challenges. Gastroenterol Rep (Oxf). 2022;10:goac064. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 2] [Cited by in RCA: 31] [Article Influence: 7.8] [Reference Citation Analysis (0)] |

| 12. | Shandhi MMH, Dunn JP. AI in medicine: Where are we now and where are we going? Cell Rep Med. 2022;3:100861. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in RCA: 19] [Reference Citation Analysis (0)] |

| 13. | Koh DM, Papanikolaou N, Bick U, Illing R, Kahn CE Jr, Kalpathi-Cramer J, Matos C, Martí-Bonmatí L, Miles A, Mun SK, Napel S, Rockall A, Sala E, Strickland N, Prior F. Artificial intelligence and machine learning in cancer imaging. Commun Med (Lond). 2022;2:133. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in RCA: 146] [Reference Citation Analysis (0)] |

| 14. | Yan SC, Peng W, Cheng M, Yang WR, Wu YY. [Artificial intelligence for lymph node metastasis prediction in gastric cancer: research progress]. Zhonghua Wei Chang Wai Ke Za Zhi. 2025;28:95-102. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 2] [Reference Citation Analysis (0)] |

| 15. | Sharma L, Garg PK. Artificial Intelligence. 1st ed. FL, United States: CRC Press, 2022. |

| 16. | Wilson DH, Wilson PD, Walmsley RG, Horrocks JC, De Dombal FT. Diagnosis of acute abdominal pain in the accident and emergency department. Br J Surg. 1977;64:250-254. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 43] [Cited by in RCA: 37] [Article Influence: 0.8] [Reference Citation Analysis (0)] |

| 17. | Yip M, Salcudean S, Goldberg K, Althoefer K, Menciassi A, Opfermann JD, Krieger A, Swaminathan K, Walsh CJ, Huang HH, Lee IC. Artificial intelligence meets medical robotics. Science. 2023;381:141-146. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 106] [Cited by in RCA: 56] [Article Influence: 18.7] [Reference Citation Analysis (0)] |

| 18. | Bhinder B, Gilvary C, Madhukar NS, Elemento O. Artificial Intelligence in Cancer Research and Precision Medicine. Cancer Discov. 2021;11:900-915. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 32] [Cited by in RCA: 417] [Article Influence: 83.4] [Reference Citation Analysis (0)] |

| 19. | Kaul V, Enslin S, Gross SA. History of artificial intelligence in medicine. Gastrointest Endosc. 2020;92:807-812. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 125] [Cited by in RCA: 382] [Article Influence: 63.7] [Reference Citation Analysis (4)] |

| 20. | McGivern KG, Drake TM, Knight SR, Lucocq J, Bernabeu MO, Clark N, Fairfield C, Pius R, Shaw CA, Seth S, Harrison EM. Applying artificial intelligence to big data in hepatopancreatic and biliary surgery: a scoping review. Art Int Surg. 2023;3:27-47. [DOI] [Full Text] |

| 21. | Bera K, Braman N, Gupta A, Velcheti V, Madabhushi A. Predicting cancer outcomes with radiomics and artificial intelligence in radiology. Nat Rev Clin Oncol. 2022;19:132-146. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 501] [Cited by in RCA: 543] [Article Influence: 135.8] [Reference Citation Analysis (1)] |

| 22. | Bi WL, Hosny A, Schabath MB, Giger ML, Birkbak NJ, Mehrtash A, Allison T, Arnaout O, Abbosh C, Dunn IF, Mak RH, Tamimi RM, Tempany CM, Swanton C, Hoffmann U, Schwartz LH, Gillies RJ, Huang RY, Aerts HJWL. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA Cancer J Clin. 2019;69:127-157. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 848] [Cited by in RCA: 904] [Article Influence: 129.1] [Reference Citation Analysis (6)] |

| 23. | Bellos T, Manolitsis I, Katsimperis S, Juliebø-Jones P, Feretzakis G, Mitsogiannis I, Varkarakis I, Somani BK, Tzelves L. Artificial Intelligence in Urologic Robotic Oncologic Surgery: A Narrative Review. Cancers (Basel). 2024;16:1775. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 19] [Reference Citation Analysis (0)] |

| 24. | Nanda I, Khatua L, Porwal S, Singhal D. Machine Learning and Computer Vision Driving Precision in Robotic Surgery. Commun Appl Nonlinear Anal. 2024;32:495-506. [DOI] [Full Text] |

| 25. | Charles YP, Lamas V, Ntilikina Y. Artificial intelligence and treatment algorithms in spine surgery. Orthop Traumatol Surg Res. 2023;109:103456. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 26] [Reference Citation Analysis (0)] |

| 26. | Kann BH, Hosny A, Aerts HJWL. Artificial intelligence for clinical oncology. Cancer Cell. 2021;39:916-927. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 170] [Cited by in RCA: 217] [Article Influence: 43.4] [Reference Citation Analysis (0)] |

| 27. | Yu L, Tao MM, Xie LL, Chen K. [Advances in artificial intelligence-based medical imaging and digital pathology for diagnosis, treatment and prognostic assessment of gastric cancer]. Zhonghua Xiaohua Wai Ke Zazhi. 2025;. [DOI] [Full Text] |

| 28. | Chen PC, Lu YR, Kang YN, Chang CC. The Accuracy of Artificial Intelligence in the Endoscopic Diagnosis of Early Gastric Cancer: Pooled Analysis Study. J Med Internet Res. 2022;24:e27694. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 24] [Reference Citation Analysis (0)] |

| 29. | He X, Wu L, Dong Z, Gong D, Jiang X, Zhang H, Ai Y, Tong Q, Lv P, Lu B, Wu Q, Yuan J, Xu M, Yu H. Real-time use of artificial intelligence for diagnosing early gastric cancer by magnifying image-enhanced endoscopy: a multicenter diagnostic study (with videos). Gastrointest Endosc. 2022;95:671-678.e4. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 38] [Cited by in RCA: 41] [Article Influence: 10.3] [Reference Citation Analysis (0)] |

| 30. | Ai S, Li C, Li X, Jiang T, Grzegorzek M, Sun C, Rahaman MM, Zhang J, Yao Y, Li H. A State-of-the-Art Review for Gastric Histopathology Image Analysis Approaches and Future Development. Biomed Res Int. 2021;2021:6671417. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 13] [Cited by in RCA: 20] [Article Influence: 4.0] [Reference Citation Analysis (0)] |

| 31. | Yan H, Li M, Cao L, Chen H, Lai H, Guan Q, Chen H, Zhou W, Zheng B, Guo Z, Zheng C. A robust qualitative transcriptional signature for the correct pathological diagnosis of gastric cancer. J Transl Med. 2019;17:63. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 8] [Cited by in RCA: 13] [Article Influence: 1.9] [Reference Citation Analysis (0)] |

| 32. | Bera K, Schalper KA, Rimm DL, Velcheti V, Madabhushi A. Artificial intelligence in digital pathology - new tools for diagnosis and precision oncology. Nat Rev Clin Oncol. 2019;16:703-715. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 858] [Cited by in RCA: 972] [Article Influence: 138.9] [Reference Citation Analysis (0)] |

| 33. | Chen S, Ding P, Guo H, Meng L, Zhao Q, Li C. Applications of artificial intelligence in digital pathology for gastric cancer. Front Oncol. 2024;14:1437252. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in RCA: 4] [Reference Citation Analysis (0)] |

| 34. | Wang SZ, Wang JG, Lu Y, Zhang YJ, Xin FJ, Liu SL, Zhang XX, Liu GW, Li S, Sui D, Wang DS. [Clinical application of convolutional neural network in pathological diagnosis of metastatic lymph nodes of gastric cancer]. Zhonghua Wai Ke Za Zhi. 2019;57:934-938. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 5] [Reference Citation Analysis (0)] |

| 35. | Karakitsos P, Stergiou EB, Pouliakis A, Tzivras M, Archimandritis A, Liossi AI, Kyrkou K. Potential of the back propagation neural network in the discrimination of benign from malignant gastric cells. Anal Quant Cytol Histol. 1996;18:245-250. [PubMed] |

| 36. | Karakitsos P, Stergiou EB, Pouliakis A, Tzivras M, Archimandritis A, Liossi A, Kyrkou K. Comparative study of artificial neural networks in the discrimination between benign from malignant gastric cells. Anal Quant Cytol Histol. 1997;19:145-152. [PubMed] |

| 37. | Karakitsos P, Pouliakis A, Koutroumbas K, Stergiou EB, Tzivras M, Archimandritis A, Liossi AI. Neural network application in the discrimination of benign from malignant gastric cells. Anal Quant Cytol Histol. 2000;22:63-69. [PubMed] |

| 38. | Huang B, Tian S, Zhan N, Ma J, Huang Z, Zhang C, Zhang H, Ming F, Liao F, Ji M, Zhang J, Liu Y, He P, Deng B, Hu J, Dong W. Accurate diagnosis and prognosis prediction of gastric cancer using deep learning on digital pathological images: A retrospective multicentre study. EBioMedicine. 2021;73:103631. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 43] [Cited by in RCA: 82] [Article Influence: 16.4] [Reference Citation Analysis (0)] |

| 39. | Chen G, Xie Y, Yang B, Tan J, Zhong G, Zhong L, Zhou S, Han F. Artificial intelligence model for perigastric blood vessel recognition during laparoscopic radical gastrectomy with D2 lymphadenectomy in locally advanced gastric cancer. BJS Open. 2024;9:zrae158. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in RCA: 5] [Reference Citation Analysis (0)] |

| 40. | Yoshida M, Kitaguchi D, Takeshita N, Matsuzaki H, Ishikawa Y, Yura M, Akimoto T, Kinoshita T, Ito M. Surgical step recognition in laparoscopic distal gastrectomy using artificial intelligence: a proof-of-concept study. Langenbecks Arch Surg. 2024;409:213. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 3] [Reference Citation Analysis (0)] |

| 41. | Nakamura T, Kobayashi N, Kumazu Y, Fukata K, Murakami M, Kohno S, Hojo Y, Nakao E, Kurahashi Y, Ishida Y, Shinohara H. Precise highlighting of the pancreas by semantic segmentation during robot-assisted gastrectomy: visual assistance with artificial intelligence for surgeons. Gastric Cancer. 2024;27:869-875. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 10] [Reference Citation Analysis (0)] |

| 42. | Hong QQ, Yan S, Zhao YL, Fan L, Yang L, Zhang WB, Liu H, Lin HX, Zhang J, Ye ZJ, Shen X, Cai LS, Zhang GW, Zhu JM, Ji G, Chen JP, Wang W, Li ZR, Zhu JT, Li GX, You J. Machine learning identifies the risk of complications after laparoscopic radical gastrectomy for gastric cancer. World J Gastroenterol. 2024;30:79-90. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 8] [Cited by in RCA: 15] [Article Influence: 7.5] [Reference Citation Analysis (5)] |

| 43. | Ajani JA, D'Amico TA, Bentrem DJ, Corvera CU, Das P, Enzinger PC, Enzler T, Gerdes H, Gibson MK, Grierson P, Gupta G, Hofstetter WL, Ilson DH, Jalal S, Kim S, Kleinberg LR, Klempner S, Lacy J, Lee B, Licciardi F, Lloyd S, Ly QP, Matsukuma K, McNamara M, Merkow RP, Miller AM, Mukherjee S, Mulcahy MF, Perry KA, Pimiento JM, Reddi DM, Reznik S, Roses RE, Strong VE, Su S, Uboha N, Wainberg ZA, Willett CG, Woo Y, Yoon HH, McMillian NR, Stein M. Gastric Cancer, Version 2.2025, NCCN Clinical Practice Guidelines In Oncology. J Natl Compr Canc Netw. 2025;23:169-191. [RCA] [PubMed] [DOI] [Full Text] [Cited by in RCA: 54] [Reference Citation Analysis (0)] |

| 44. | Yardımcı AH, Koçak B, Turan Bektaş C, Sel İ, Yarıkkaya E, Dursun N, Bektaş H, Usul Afşar Ç, Gürsu RU, Kılıçkesmez Ö. Tubular gastric adenocarcinoma: machine learning-based CT texture analysis for predicting lymphovascular and perineural invasion. Diagn Interv Radiol. 2020;26:515-522. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 6] [Cited by in RCA: 25] [Article Influence: 4.2] [Reference Citation Analysis (1)] |

| 45. | Li J, Dong D, Fang M, Wang R, Tian J, Li H, Gao J. Dual-energy CT-based deep learning radiomics can improve lymph node metastasis risk prediction for gastric cancer. Eur Radiol. 2020;30:2324-2333. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 44] [Cited by in RCA: 125] [Article Influence: 20.8] [Reference Citation Analysis (0)] |

| 46. | Wang HN, An JH, Zong L. Advances in artificial intelligence for predicting complication risks post-laparoscopic radical gastrectomy for gastric cancer: A significant leap forward. World J Gastroenterol. 2024;30:4669-4671. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in RCA: 2] [Reference Citation Analysis (0)] |

| 47. | Ward TM, Mascagni P, Ban Y, Rosman G, Padoy N, Meireles O, Hashimoto DA. Computer vision in surgery. Surgery. 2021;169:1253-1256. |

| 48. | Wang Y, Cao D, Chen SL, Li YM, Zheng YW, Ohkohchi N. Current trends in three-dimensional visualization and real-time navigation as well as robot-assisted technologies in hepatobiliary surgery. World J Gastrointest Surg. 2021;13:904-922. |

| 49. | Kok END, Eppenga R, Kuhlmann KFD, Groen HC, van Veen R, van Dieren JM, de Wijkerslooth TR, van Leerdam M, Lambregts DMJ, Heerink WJ, Hoetjes NJ, Ivashchenko O, Beets GL, Aalbers AGJ, Nijkamp J, Ruers TJM. Accurate surgical navigation with real-time tumor tracking in cancer surgery. NPJ Precis Oncol. 2020;4:8. |

| 50. | Wang Y, Zhang L, Yang Y, Lu S, Chen H. Progress of Gastric Cancer Surgery in the era of Precision Medicine. Int J Biol Sci. 2021;17:1041-1049. |

| 51. | Jung JE, Song JH, Oh S, Son SY, Hur H, Kwon IG, Han SU. Clinical Feasibility of Vascular Navigation System During Laparoscopic Gastrectomy for Gastric Cancer: A Retrospective Comparison With Propensity-Score Matching. J Gastric Cancer. 2024;24:356-366. |

| 52. | Takiguchi S, Fujiwara Y, Yamasaki M, Miyata H, Nakajima K, Nishida T, Sekimoto M, Hori M, Nakamura H, Mori M, Doki Y. Laparoscopic intraoperative navigation surgery for gastric cancer using real-time rendered 3D CT images. Surg Today. 2015;45:618-624. |

| 53. | Hayashi Y, Misawa K, Oda M, Hawkes DJ, Mori K. Clinical application of a surgical navigation system based on virtual laparoscopy in laparoscopic gastrectomy for gastric cancer. Int J Comput Assist Radiol Surg. 2016;11:827-836. |

| 54. | Takiguchi S, Sekimoto M, Fujiwara Y, Yasuda T, Yano M, Hori M, Murakami T, Nakamura H, Monden M. Laparoscopic lymph node dissection for gastric cancer with intraoperative navigation using three-dimensional angio computed tomography images reconstructed as laparoscopic view. Surg Endosc. 2004;18:106-110. |

| 55. | Kawakatsu S, Ohashi M, Hiki N, Nunobe S, Nagino M, Sano T. Use of endoscopy to determine the resection margin during laparoscopic gastrectomy for cancer. Br J Surg. 2017;104:1829-1836. |

| 56. | Ushimaru Y, Omori T, Fujiwara Y, Yanagimoto Y, Sugimura K, Yamamoto K, Moon JH, Miyata H, Ohue M, Yano M. The Feasibility and Safety of Preoperative Fluorescence Marking with Indocyanine Green (ICG) in Laparoscopic Gastrectomy for Gastric Cancer. J Gastrointest Surg. 2019;23:468-476. |

| 57. | Belia F, Biondi A, Agnes A, Santocchi P, Laurino A, Lorenzon L, Pezzuto R, Tirelli F, Ferri L, D'Ugo D, Persiani R. The Use of Indocyanine Green (ICG) and Near-Infrared (NIR) Fluorescence-Guided Imaging in Gastric Cancer Surgery: A Narrative Review. Front Surg. 2022;9:880773. |

| 58. | Fujimoto D, Taniguchi K, Takashima J, Kobayashi H. Indocyanine Green Tracer-Guided Radical Robotic Distal Gastrectomy Using the Firefly™ System Improves the Quality of Lymph Node Dissection in Patients with Gastric Cancer. J Gastrointest Surg. 2023;27:1804-1811. |

| 59. | Namikawa T, Sato T, Hanazaki K. Recent advances in near-infrared fluorescence-guided imaging surgery using indocyanine green. Surg Today. 2015;45:1467-1474. |

| 60. | Romanzi A, Mancini R, Ioni L, Picconi T, Pernazza G. ICG-NIR-guided lymph node dissection during robotic subtotal gastrectomy for gastric cancer. A single-centre experience. Int J Med Robot. 2021;17:e2213. |

| 61. | Schmidt T, Fuchs HF, Thomas MN, Müller DT, Lukomski L, Scholz M, Bruns CJ. Maßgeschneiderte Chirurgie in der Behandlung gastroösophagealer Tumoren. Chirurgie. 2024;95:261-267. |

| 62. | Sakamoto E, Safatle-Ribeiro AV, Ribeiro U Jr. Advances in surgical techniques for gastric cancer: Indocyanine green and near-infrared fluorescence imaging. Is it ready for prime time? Chin J Cancer Res. 2022;34:587-591. |

| 63. | Aoyama Y, Matsunobu Y, Etoh T, Suzuki K, Fujita S, Aiba T, Fujishima H, Empuku S, Kono Y, Endo Y, Ueda Y, Shiroshita H, Kamiyama T, Sugita T, Morishima K, Ebe K, Tokuyasu T, Inomata M. Artificial intelligence for surgical safety during laparoscopic gastrectomy for gastric cancer: Indication of anatomical landmarks related to postoperative pancreatic fistula using deep learning. Surg Endosc. 2024;38:5601-5612. |

| 64. | Knospe L, Gockel I, Jansen-Winkeln B, Thieme R, Niebisch S, Moulla Y, Stelzner S, Lyros O, Diana M, Marescaux J, Chalopin C, Köhler H, Pfahl A, Maktabi M, Park JH, Yang HK. New Intraoperative Imaging Tools and Image-Guided Surgery in Gastric Cancer Surgery. Diagnostics (Basel). 2022;12:507. |

| 65. | Pu C, Wu T, Wang Q, Yang Y, Zhang K. Feasibility of novel intraoperative navigation for anatomical liver resection using real-time virtual sonography combined with indocyanine green fluorescent imaging technology. Biosci Trends. 2024;17:484-490. |

| 66. | Natsugoe S, Arigami T, Uenosono Y, Yanagita S. Novel surgical approach based on the sentinel node concept in patients with early gastric cancer. Ann Gastroenterol Surg. 2017;1:180-185. |

| 67. | Dai J, Xue H, Chen D, Lou X, Xia F, Wang S. Aggregation-induced emission luminogens for assisted cancer surgery. Coord Chem Rev. 2022;464:214552. |

| 68. | Kitagawa H, Namikawa T, Iwabu J, Yokota K, Uemura S, Munekage M, Hanazaki K. Correlation between indocyanine green visualization time in the gastric tube and postoperative endoscopic assessment of the anastomosis after esophageal surgery. Surg Today. 2020;50:1375-1382. |

| 69. | Yamabuki T, Ohara M, Kaneko T, Fujiwara A, Takahashi R, Komuro K, Iwashiro N. PS01.206: Assessment of the Gastric Conduit Reconstruction Using Indocyanine Green Fluorescent Imaging During Esophagectomy. Dis Esophagus. 2018;31:108. |

| 70. | Park SH, Kim KY, Kim YM, Hyung WJ. Patient-specific virtual three-dimensional surgical navigation for gastric cancer surgery: A prospective study for preoperative planning and intraoperative guidance. Front Oncol. 2023;13:1140175. |

| 71. | Liu Z, Ali M, Sun Q, Zhang Q, Wei C, Wang Y, Tang D, Li X. Current status and future trends of real-time imaging in gastric cancer surgery: A literature review. Heliyon. 2024;10:e36143. |

| 72. | Ukeh IN, Kassin MT, Varble N, Saccenti L, Li M, Xu S, Wood BJ. Fusion Technologies for Image-Guided Robotic Interventions. Tech Vasc Interv Radiol. 2024;27:101009. |

| 73. | Chen X, Xie H, Tao X, Wang FL, Leng M, Lei B. Artificial intelligence and multimodal data fusion for smart healthcare: topic modeling and bibliometrics. Artif Intell Rev. 2024;57:91. |

| 74. | Zhou K, Li ZZ, Cai ZM, Zhong NN, Cao LM, Huo FY, Liu B, Wu QJ, Bu LL. Nanotheranostics in cancer lymph node metastasis: The long road ahead. Pharmacol Res. 2023;198:106989. |

| 75. | Peltz J, Street AC. Artificial Intelligence and Ethical Dilemmas Involving Privacy. In: Artificial Intelligence and Global Security. United Kingdom: Emerald Publishing Limited, 2020. |

| 76. | Bhatia M. An AI-enabled secure framework for enhanced elder healthcare. Eng Appl Artif Intell. 2024;131:107831. |

| 77. | Almagharbeh WT, Alfanash HA, Alnawafleh KA, Alasmari AA, Alsaraireh FA, Dreidi MM, Nashwan AJ. Application of artificial intelligence in nursing practice: a qualitative study of Jordanian nurses' perspectives. BMC Nurs. 2025;24:92. |

| 78. | Aich S, Sinai NK, Kumar S, Ali M, Choi YR, Joo M, Kim H. Protecting Personal Healthcare Record Using Blockchain & Federated Learning Technologies. In: 2021 23rd International Conference on Advanced Communication Technology (ICACT); 2021 Feb 7-10; PyeongChang, Korea (South). NY, United States: IEEE, 2021: 109-112. |

| 79. | Bathula A, Gupta SK, Merugu S, Saba L, Khanna NN, Laird JR, Sanagala SS, Singh R, Garg D, Fouda MM, Suri JS. Blockchain, artificial intelligence, and healthcare: the tripod of future—a narrative review. Artif Intell Rev. 2024;57:238. |

| 80. | Behrendt CA, Gombert A, Uhl C, Larena-Avellaneda A, Dorweiler B. Künstliche Intelligenz in der Gefäßchirurgie. Gefässchirurgie. 2024;29:150-156. |

| 81. | Maleki Varnosfaderani S, Forouzanfar M. The Role of AI in Hospitals and Clinics: Transforming Healthcare in the 21st Century. Bioengineering (Basel). 2024;11:337. |

| 82. | Romagnoli A, Ferrara F, Langella R, Zovi A. Healthcare Systems and Artificial Intelligence: Focus on Challenges and the International Regulatory Framework. Pharm Res. 2024;41:721-730. |

| 83. | Saheb T, Saheb T. Mapping Ethical Artificial Intelligence Policy Landscape: A Mixed Method Analysis. Sci Eng Ethics. 2024;30:9. |

| 84. | Shah WF. Preserving Privacy and Security: A Comparative Study of Health Data Regulations - GDPR vs. HIPAA. Int J Res Appl Sci Eng Technol. 2023;11:2189-2199. |

| 85. | Albalawi AF, Yassen MH, Almuraydhi KM, Althobaiti AD, Alzahrani HH, Alqahtani KM. Ethical Obligations and Patient Consent in the Integration of Artificial Intelligence in Clinical Decision-Making. J Healthc Sci. 2024;4:957-963. |

| 86. | Wang Y, Ma Z. Ethical and legal challenges of medical AI on informed consent: China as an example. Dev World Bioeth. 2025;25:46-54. |

| 87. | Astromskė K, Peičius E, Astromskis P. Ethical and legal challenges of informed consent applying artificial intelligence in medical diagnostic consultations. AI Soc. 2021;36:509-520. |

| 88. | Henn J, Vandemeulebroucke T, Hatterscheidt S, Dohmen J, Kalff JC, van Wynsberghe A, Matthaei H. German surgeons' perspective on the application of artificial intelligence in clinical decision-making. Int J Comput Assist Radiol Surg. 2025;20:825-835. |

| 89. | Cross JL, Choma MA, Onofrey JA. Bias in medical AI: Implications for clinical decision-making. PLOS Digit Health. 2024;3:e0000651. |

| 90. | Ning Y, Liu X, Collins GS, Moons KGM, McCradden M, Ting DSW, Ong JCL, Goldstein BA, Wagner SK, Keane PA, Topol EJ, Liu N. An ethics assessment tool for artificial intelligence implementation in healthcare: CARE-AI. Nat Med. 2024;30:3038-3039. |

| 91. | Vandemeulebroucke T. The ethics of artificial intelligence systems in healthcare and medicine: from a local to a global perspective, and back. Pflugers Arch. 2025;477:591-601. |