INTRODUCTION

Heart failure (HF) is a complex clinical syndrome characterized by an impaired ability of the heart to fill with or pump blood throughout the body. It is a major worldwide concern due to its high morbidity, mortality, and economic burden. The highest prevalence rates exist in Central Europe, North Africa, and the Middle East. The economic burden is also expected to rise and will consume > 10% of total health expenditures[1-3]. HF can be categorized by ejection fraction level. Patients with HF with reduced ejection fraction (HFrEF) account for the majority of cases compared to those with HF with preserved ejection fraction (HFpEF) and HF with mildly reduced ejection fraction. Despite the multifactorial nature of the disease, the most common underlying etiologies of HF are coronary artery disease, hypertension, valvular heart disease, cardiomyopathy, and chemo- and radiotherapy-induced cardiomyopathy[4]. Current guidelines from the European Society of Cardiology and the American Heart Association (AHA) have established solid recommendations on the diagnosis and management of patients with HF across ejection fraction levels[5-7]. Despite these well-adopted guidelines, mortality and rehospitalization rates in patients with HF remain high in many regions[8-10]. Predictors associated with an increased risk of death and/or rehospitalization include age, renal function, blood pressure, blood sodium level, ejection fraction, brain natriuretic peptide level, New York Heart Association functional class, and diabetes mellitus[11-13]. Today, clinicians do not rely solely on traditional auscultation of heart and lung sounds because of its high inter- and intra-listener variability[14] and because more reliable options are available for diagnosis and risk assessment in HF patients, such as NT-proBNP[15] and echocardiography parameters[16]. However, it is important to acknowledge that these examinations are invasive, which carries risk, and/or require expertise for interpretation.

Heart and lung sounds, which together comprise the thoracic cavity sound, provide informative details that reflect a patient's condition, particularly in HF. HF patients can present with systolic dysfunction, diastolic dysfunction, or both, which can be indicated by reduced myocardial contractility (S1 of heart sound) and/or increased left ventricular filling pressure (S3 of heart sound)[17]. Additionally, the presence of fluid in the lung interstitium (pulmonary edema) can be detected on auscultation as crackles or rales[18], which are well-known features of thoracic cavity sound. Due to the limitations of human hearing, only a small amount of information can be captured from thoracic cavity sounds. Recent advances in artificial intelligence have allowed for the retrieval of additional subtle features of thoracic cavity sounds, which could be associated with an increased risk of adverse outcomes in HF patients[19]. The implementation of artificial intelligence in analyzing thoracic cavity sounds in HF can potentially overcome inter- and intra-listener variability and identify previously unknown features of the thoracic cavity sound. In practice, this means using machine learning, a subset of artificial intelligence, to develop an algorithm or model that learns from data and makes a decision about a specific outcome. This review aims to summarize the currently available studies on the use of artificial intelligence to analyze thoracic cavity sounds in HF patients, with the goal of enhancing their care.

THORACIC CAVITY SOUND IN HF PATIENTS

Thoracic cavity sounds are generated from various sources but primarily originate from lung sounds and heart sounds[20]. Lung sounds are formed by airflow within the respiratory tract and can be physiologically classified into vesicular, bronchial, and tracheal lung sounds depending on the auscultation site. Each lung sound has a specific frequency range and characteristic lengths of the inspiration and expiration phases. However, when airflow is disrupted for any reason, adventitious lung sounds may be produced. Adventitious breath sounds can be further classified based on sound continuity and frequency. In patients with HF, increased left ventricular filling pressure leads to retrograde pressure elevation in the left atrium and pulmonary veins, eventually causing fluid leakage into the lung interstitium and subsequent pulmonary edema[21]. Fluid in the lungs generally creates crackle/rale types of adventitious breath sounds[22]. Auscultation is commonly used to detect crackles/rales and assess the congestive state of HF patients[23]. However, some patients have subclinical congestion that is not detectable by auscultation, although fluid in the lungs can be confirmed with more advanced imaging modalities, such as lung ultrasound. Subclinical congestion is associated with worse outcomes; therefore, early detection of this condition is crucial to prevent HF rehospitalization[24].

The other main component of thoracic cavity sound is the heart sound. Heart sounds are generated by the opening and closing of the atrioventricular and semilunar valves and are also influenced by the rush of blood into the chambers. Additional heart sounds can be generated when the structure or function of the heart chambers is abnormal. There are four main heart sounds. The first heart sound (S1) is generated by the closing of the mitral and tricuspid valves at the onset of systole, and the second heart sound (S2) is generated by the closing of the aortic and pulmonic valves at the end of systole. The third heart sound (S3) may occur when blood rushes rapidly into the ventricle during diastole, which happens with excessive blood volume in conditions like HF. Lastly, the fourth heart sound (S4) occurs when atrial contraction empties blood from the atrial chamber into the ventricle. Additional heart sounds generated by altered blood flow through the heart chambers or valves can be heard as murmurs. Murmurs can occur during either systole or diastole and often have specific characteristics that indicate an underlying cardiac condition[20,25].

Thoracic cavity sounds are typically auscultated using a stethoscope; however, this examination is highly subjective and requires significant experience[26]. To address this issue, digital stethoscopes have been designed to capture sound waves, which are then converted to electrical signals and transformed into waveforms. These waveforms provide a more objective view of thoracic cavity sounds and can be used for further study with the aid of artificial intelligence. A digital stethoscope primarily works by detecting thoracic cavity sounds using either piezoelectric sensors or a microphone, which then converts the sounds into electrical signals[27,28]. Currently available digital stethoscopes include the 3M Littmann 3200, the Thinklabs Digital Stethoscope, and the CliniCloud Digital Stethoscope, each with its own strengths and limitations[20].

In addition to using a stethoscope, thoracic cavity sounds, particularly heart sounds, can be collected by implanted devices such as the cardiac resynchronization therapy-defibrillator (CRT-D) and the implantable cardioverter-defibrillator (ICD). These multisensor devices use the HeartLogic algorithm and acquire various variables in the thoracic cavity, including heart sounds, thoracic impedance, respiration rate and the ratio of rate to volume, heart rate, and activity. Together, these variables form an index score that aids in the early detection of worsening HF in ambulatory patients. The HeartLogic index identifies worsening HF by monitoring a progressive increase in heart rate, decreased intensity of the first heart sound, and faster, shallower breathing with reduced inspiratory volume[29-31]. Several studies have demonstrated the potential of this approach for future HF monitoring programs in patients with CRT-D and ICD[32-35]. However, the variables used to generate the index score are predicated on clinician-selected features, which may overlook subtle indicators that could yield additional insights into the pathophysiological status of HF patients. Additionally, the index can only be used in HF patients with implanted devices.

THORACIC CAVITY SOUNDS AND MACHINE LEARNING IN HF PATIENTS

Artificial intelligence, specifically machine learning, can assist in analyzing thoracic cavity sound data, aiding in the diagnosis and management of HF patients. Previous studies have primarily examined either lung sounds or heart sounds separately, and only a limited number have evaluated them together. Machine learning can generally be divided into supervised learning, unsupervised learning, and reinforcement learning. Each serves its own purpose but has limitations. In brief, supervised learning requires labeled datasets to develop prediction models and maintains model interpretability, but it is limited by the number of labeled features a person can comprehend. On the other hand, unsupervised learning aims to discover unknown structure in a dataset and builds a model based on it. Reinforcement learning uses positive and negative reinforcement to arrive at the most accurate model. A more recent machine learning approach is deep learning. It is the most intriguing, as it uses complex algorithms designed to simulate the workings of the human brain. However, it is limited in interpretability and requires a vast amount of data[32,36-39]. One of the main benefits of deep learning is the avoidance of manual labeling of outputs and features, thus preventing the removal of essential hidden features.

WORKFLOW FOR ANALYZING THORACIC CAVITY SOUND DATA USING MACHINE LEARNING

Thoracic cavity sounds need to be processed before they can generate the desired output in an algorithm model. Given that thoracic cavity sound data comprise both heart and lung sounds and that previous studies have analyzed these sounds separately, this section provides a concise overview of the general workflow for processing both sound types. The processes described below focus primarily on supervised learning models. Deep learning differs from conventional supervised learning models in that data clustering and classification are performed entirely by the deep learning model; hence, there is no need for separate feature extraction and selection. It is also designed to capture and understand complex patterns in a way that mimics the networks of the human brain. Although it is particularly effective for such complex tasks, especially when knowledge of the relevant features is lacking, it only works well when a sufficiently large sample is available and is not advised for small datasets.

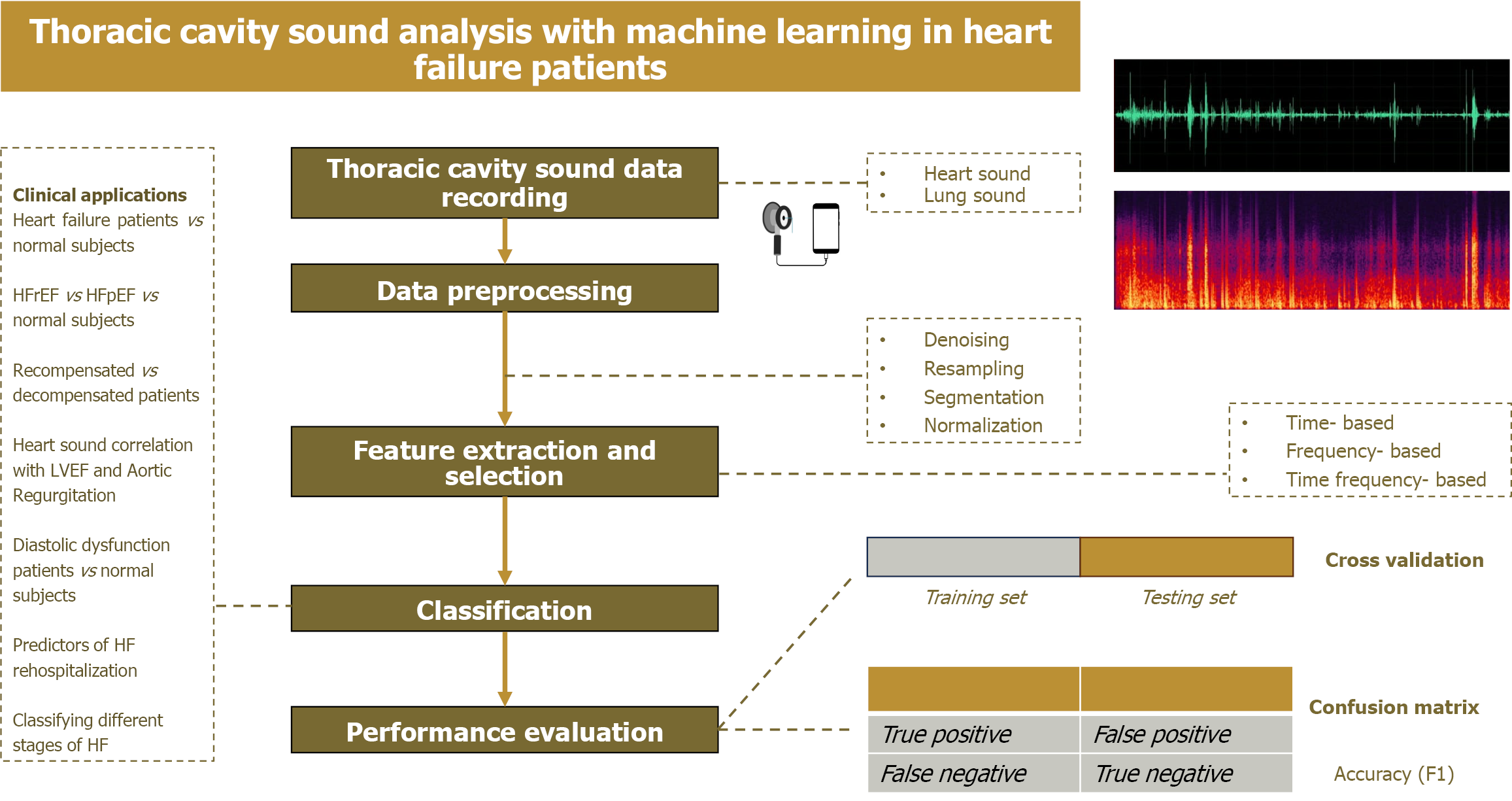

The following is the general workflow for analyzing thoracic cavity sound with machine learning models (Figure 1).

Figure 1 Workflow of thoracic cavity sound analysis with machine learning in heart failure patients.

HF: Heart failure; LVEF: Left ventricular ejection fraction; HFpEF: Heart failure with preserved ejection fraction.

Data preprocessing

Before thoracic cavity sound data can be processed through machine learning, the sound data must be preprocessed to ensure good quality. Preprocessing can include denoising, resampling, segmentation, and normalization. Additionally, for lung sounds, the process generally begins with conversion of the sound to a spectrogram; the gamma spectrogram is the most widely used method[40].

Thoracic sounds are usually recorded in environments that are not well suited to sound acquisition. Hence, denoising the sound data is important. One commonly used method is the Savitzky-Golay filter[41]. In lung sound data analysis, denoising can also be performed by filtering, wavelet-based methods operating on time-frequency data, and empirical mode decomposition[42].
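As an illustration of the denoising step, the following is a minimal sketch of Savitzky-Golay smoothing using only the Python standard library. It assumes a 5-point window with a quadratic fit, for which the standard convolution weights are (-3, 12, 17, 12, -3)/35; the window size, the edge handling, and the toy signal are illustrative assumptions and are not taken from the cited studies.

```python
# Savitzky-Golay smoothing, 5-point window, quadratic (order 2) fit.
# The weights below are the standard coefficients for this window/order.
SG5_WEIGHTS = (-3.0, 12.0, 17.0, 12.0, -3.0)
SG5_NORM = 35.0


def savgol5(signal):
    """Return a smoothed copy of `signal`.

    The first and last two samples are copied unchanged, because the
    5-point window does not fit there (a simplifying assumption).
    """
    out = list(signal)
    for i in range(2, len(signal) - 2):
        acc = 0.0
        for k, weight in enumerate(SG5_WEIGHTS):
            acc += weight * signal[i - 2 + k]
        out[i] = acc / SG5_NORM
    return out


# Hypothetical toy recording: a slow ramp plus alternating "noise".
noisy = [0.1 * i + (0.5 if i % 2 else -0.5) for i in range(12)]
smoothed = savgol5(noisy)
```

Because the filter fits a local polynomial rather than simply averaging, it tends to preserve peak shape better than a moving average of the same width, which is one reason it is attractive for transient signals. Real recordings would normally use a longer window chosen relative to the sampling rate.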

Resampling of sound data involves down-sampling to 600 Hz in accordance with the Nyquist sampling theorem[43]. A filter can also be applied with a threshold of 1 kHz because cardiovascular sound is less likely to occur beyond that cutoff[44].
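The relationship between sampling rate and representable frequency can be sketched as follows. By the Nyquist criterion, a signal sampled at a given rate can only represent frequencies up to half that rate, so a 600 Hz sampling rate covers content up to 300 Hz. The 4800 Hz source rate and the block-averaging step below are illustrative assumptions; block averaging is only a crude stand-in for a proper anti-aliasing filter.

```python
def nyquist_frequency(sample_rate_hz):
    """Highest frequency representable without aliasing at this rate."""
    return sample_rate_hz / 2.0


def decimate_by_averaging(signal, factor):
    """Downsample by an integer factor.

    Each block of `factor` samples is averaged (a crude low-pass step)
    and replaced by one output sample. Trailing samples that do not
    fill a complete block are dropped.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    n_blocks = len(signal) // factor
    return [
        sum(signal[b * factor:(b + 1) * factor]) / factor
        for b in range(n_blocks)
    ]


# Assumed example: one second recorded at 4800 Hz, reduced to 600 Hz.
original_rate = 4800
target_rate = 600
factor = original_rate // target_rate  # 8
one_second = [float(i % 16) for i in range(original_rate)]
resampled = decimate_by_averaging(one_second, factor)
```

Dedicated signal-processing libraries provide better-behaved resampling routines; the point here is only the arithmetic linking the target rate to the frequency band that survives.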

Normalization is performed to equalize differences in heart sound amplitude caused by varying chest wall thickness and other inter-individual variations[45]. It is commonly performed with root mean square normalization, in which the signal is scaled according to its average power level, or with peak normalization, in which the signal is scaled according to its maximum amplitude[46].
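The two normalization schemes can be sketched in a few lines. This is a minimal illustration, assuming the recording is already available as a list of floating-point samples; the target levels of 1.0 are arbitrary choices, not values from the cited studies.

```python
import math


def rms(signal):
    """Root mean square amplitude (square root of the average power)."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def rms_normalize(signal, target_rms=1.0):
    """Scale the signal so that its RMS equals `target_rms`."""
    current = rms(signal)
    if current == 0.0:
        return list(signal)  # a silent recording cannot be rescaled
    gain = target_rms / current
    return [x * gain for x in signal]


def peak_normalize(signal, target_peak=1.0):
    """Scale the signal so that its largest absolute sample equals `target_peak`."""
    peak = max(abs(x) for x in signal)
    if peak == 0.0:
        return list(signal)
    gain = target_peak / peak
    return [x * gain for x in signal]


# Hypothetical samples from a quiet recording.
quiet = [0.1, -0.2, 0.3, -0.4]
by_rms = rms_normalize(quiet)
by_peak = peak_normalize(quiet)
```

RMS normalization equalizes overall loudness and is less sensitive to a single spike, whereas peak normalization guarantees that no sample exceeds the target level.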

Segmentation standardizes the input for an artificial intelligence (AI) model with a fixed frame length (generally 0.16 seconds). Segmentation requires identification of the S1 and S2 components. It can be performed directly (Shannon energy, Hilbert transformation, complexity signatures, matching pursuit, etc) or indirectly by using the ECG signal as a reference. The Springer modification of the Schmidt segmentation method (Gjoreski) is also sometimes used[47,48]. In lung sound analysis, separation of lung and heart sounds can also be performed with methods such as independent component analysis and non-negative matrix factorization (NMF)[49].
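To make the direct approach more concrete, the sketch below computes a Shannon energy envelope and splits a signal into fixed-length frames. Shannon energy of a normalized sample x is -x² log(x²), which emphasizes medium-intensity components over both low-level noise and the very largest peaks. The 0.02 second envelope window, the 600 Hz rate, and the synthetic burst are assumptions made for illustration; locating S1 and S2 from the envelope would require additional peak-picking logic that is not shown.

```python
import math


def shannon_energy(sample):
    """Shannon energy of one sample normalized to [-1, 1]."""
    sq = sample * sample
    return 0.0 if sq == 0.0 else -sq * math.log(sq)


def frame_signal(signal, sample_rate_hz, frame_seconds=0.16):
    """Split a signal into consecutive, non-overlapping fixed-length frames."""
    frame_len = int(round(sample_rate_hz * frame_seconds))
    n_frames = len(signal) // frame_len
    return [signal[i * frame_len:(i + 1) * frame_len] for i in range(n_frames)]


def shannon_envelope(signal, sample_rate_hz, window_seconds=0.02):
    """Average Shannon energy per short window of the peak-normalized signal."""
    peak = max(abs(x) for x in signal) or 1.0
    normalized = [x / peak for x in signal]
    windows = frame_signal(normalized, sample_rate_hz, window_seconds)
    return [sum(shannon_energy(x) for x in w) / len(w) for w in windows]


# Synthetic one-second signal at an assumed 600 Hz with a single short burst.
rate = 600
signal = [0.0] * rate
for i in range(120, 132):
    signal[i] = 0.5
signal[125] = 1.0

envelope = shannon_envelope(signal, rate)
frames = frame_signal(signal, rate)  # 0.16 second frames for model input
```

The index of the largest envelope value marks the window containing the burst; in a real phonocardiogram, alternating envelope peaks would correspond to candidate S1 and S2 locations.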

Feature extraction

Feature extraction is a crucial step in AI analysis. It is the process of calculating the values of the features selected for the machine learning algorithm[50]. Feature extraction can be based on time-domain features (variation of lung sound over time), frequency-domain features (distribution of frequency bands), and time-frequency domain features (which provide information about the non-stationary nature of the sound). Time-frequency spectrogram plots characterize heart and lung sounds better than temporal waveforms and are therefore more commonly used[51]. Numerous methods have improved the feature extraction process. One main idea is to apply a wavelet transformation, which represents the signal as an orthonormal series and overcomes a limitation of the Fourier transformation, namely its assumption of a stationary signal[52]. Because heart and lung sounds are non-stationary and dynamic signals, wavelet transformation is more appropriate. This transformation can be performed using a discrete wavelet transform algorithm[53]. Additionally, it is important to consider that heart sounds exhibit multifractal characteristics, which may only be captured and studied with the aid of machine learning. These multifractal characteristics are usually processed by the multifractal detrended fluctuation analysis (MF-DFA) method[54]. Other commonly used feature extraction algorithms include energy entropy[55] and mel-frequency cepstral coefficients (MFCC)[56].
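As a small worked example of wavelet-based feature extraction, the sketch below applies a discrete wavelet transform with the Haar wavelet and summarizes each decomposition level by its energy. The Haar wavelet is chosen here only because it is the simplest orthonormal wavelet; the choice of wavelet, the number of levels, and the use of band energies as features are assumptions for illustration rather than the methods of any cited study.

```python
import math


def haar_dwt_level(signal):
    """One level of the Haar DWT: returns (approximation, detail) coefficients."""
    if len(signal) % 2:
        signal = list(signal) + [signal[-1]]  # pad odd lengths by repetition
    scale = 1.0 / math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) * scale for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * scale for i in range(0, len(signal), 2)]
    return approx, detail


def wavelet_band_energies(signal, levels=3):
    """Energy of the detail coefficients at each level, then of the final approximation.

    The first entry describes the finest (highest-frequency) band.
    """
    energies = []
    current = list(signal)
    for _ in range(levels):
        current, detail = haar_dwt_level(current)
        energies.append(sum(d * d for d in detail))
    energies.append(sum(a * a for a in current))
    return energies


# Hypothetical 8-sample segment.
segment = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
features = wavelet_band_energies(segment, levels=3)
```

Because the transform is orthonormal, the band energies of an even-length signal sum to the total energy of the original segment, so the feature vector describes how that energy is distributed across time scales.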

Feature selection

Feature selection is the process of managing high-dimensional data by identifying and retaining only the important and relevant features for training machine learning models. This process reduces dimensionality, selects the most relevant features for further machine learning processing, and removes redundant features found during feature extraction. Excessive feature extraction can compromise the interpretability of AI models, while insufficient feature extraction might prevent the generation of the most accurate models. Additionally, extracting too many features increases the chance of overfitting. Hence, selecting the most relevant features is one of the most critical elements in AI[50].

Feature selection can be performed using statistical, artificial intelligence-based, or meta-heuristic-based methods. Artificial intelligence-based feature selection can be performed with genetic algorithms, particle swarm optimization, simulated annealing, etc. An example of statistics-based feature selection is the least absolute shrinkage and selection operator algorithm. In addition to feature selection, dimensionality in an artificial intelligence model can be reduced through principal component analysis and linear discriminant analysis. These methods aim to prevent the curse of dimensionality, in which too many features eventually degrade model performance[57,58].
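The idea of removing redundant features can be illustrated with a very simple statistical filter. The sketch below is not the least absolute shrinkage and selection operator or any of the meta-heuristic methods named above; it is a plain correlation filter that drops a feature when it is almost perfectly correlated with one already kept. The feature names, values, and the 0.95 threshold are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0.0 or var_y == 0.0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def drop_redundant_features(columns, threshold=0.95):
    """Keep a feature only if |r| with every already-kept feature is below threshold.

    `columns` maps a feature name to its list of values (one per recording).
    Features are visited in insertion order, so earlier features win ties.
    """
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[k])) < threshold for k in kept):
            kept.append(name)
    return kept


# Hypothetical feature table for five recordings.
table = {
    "s1_amplitude": [1.0, 2.0, 3.0, 4.0, 5.0],
    "s1_amplitude_scaled": [2.0, 4.0, 6.0, 8.0, 10.0],  # duplicate information
    "zero_crossing_rate": [5.0, 1.0, 4.0, 2.0, 3.0],
}
selected = drop_redundant_features(table)
```

A filter of this kind considers only pairwise redundancy and ignores the outcome label, which is why embedded or wrapper methods are often preferred when the goal is predictive accuracy.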

Classification

The final step of artificial intelligence model generation is to classify the data into the required output. Numerous methods have been developed for this purpose. Examples include support vector machine (SVM), gradient boosting classifier, decision tree, logistic regression, random forest classifier, K-nearest neighbor (KNN), XGBoost (XGB), and naïve Bayes. A discussion of each classifier is beyond the scope of this review[59].
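As one concrete instance of the classification step, the sketch below implements a K-nearest neighbor classifier, which labels a new recording by majority vote among its closest training examples in feature space. The two-dimensional feature vectors and labels are synthetic values invented for illustration and do not come from any patient data.

```python
import math
from collections import Counter


def knn_predict(train_features, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest neighbors.

    Distance is Euclidean. Ties in the vote are resolved by
    Counter.most_common, which keeps first-encountered order.
    """
    neighbors = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in neighbors[:k])
    return votes.most_common(1)[0][0]


# Synthetic, already-normalized feature vectors (hypothetical values).
train_x = [
    (0.10, 0.20), (0.20, 0.10), (0.15, 0.15),  # labeled "normal"
    (0.90, 0.80), (0.80, 0.90), (0.85, 0.95),  # labeled "HF"
]
train_y = ["normal", "normal", "normal", "HF", "HF", "HF"]

prediction = knn_predict(train_x, train_y, (0.9, 0.9), k=3)
```

Because KNN relies on distances, features should be brought to comparable scales beforehand; otherwise a single large-valued feature dominates the vote.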

Performance evaluation

Machine learning model performance can be measured using accuracy, F1 score, sensitivity, specificity, and precision. The confusion matrix can be used to break down a model’s performance parameters. This matrix tabulates the true positives, true negatives, false positives, and false negatives. Additionally, cross-validation can be used to evaluate how robustly a model performs on independent data. Cross-validation can be performed with several methods, namely K-fold, stratified K-fold, time series, and leave-one-out cross-validation[60,61].
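The relationship between the confusion matrix and the derived metrics can be made explicit with a short sketch. It computes the four confusion counts for a binary problem, derives the metrics from them, and generates plain K-fold index splits. The labels below are made-up values, and the unshuffled contiguous folds are a simplification; stratified or shuffled variants would need extra logic.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary classification problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def _ratio(numerator, denominator):
    return numerator / denominator if denominator else 0.0


def evaluate(y_true, y_pred, positive=1):
    """Derive common performance metrics from the confusion counts."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    precision = _ratio(tp, tp + fp)
    sensitivity = _ratio(tp, tp + fn)  # also called recall
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": _ratio(tn, tn + fp),
        "precision": precision,
        "f1": _ratio(2 * precision * sensitivity, precision + sensitivity),
    }


def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous, unshuffled folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        stop = start + size
        test = list(range(start, stop))
        train = [i for i in range(n_samples) if i < start or i >= stop]
        yield train, test
        start = stop


# Made-up labels: 1 = HF, 0 = normal.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]
metrics = evaluate(y_true, y_pred)
folds = list(k_fold_indices(len(y_true), 3))
```

In this example accuracy and F1 score differ, which illustrates why the two should be reported separately, particularly when the classes are imbalanced.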

PREVIOUS STUDIES ON THE APPLICATION OF MACHINE LEARNING FOR ANALYZING HEART SOUNDS IN PATIENTS WITH HF

Most studies have collected heart sound recordings at Erb’s point for up to 30 seconds. Machine learning has been applied to heart sound data to differentiate HF patients from normal subjects; to differentiate HFrEF patients, HFpEF patients, and normal subjects; to assess the prognosis of HF patients; and to correlate heart sounds with ejection fraction, among other uses.

Heart sounds to classify HF patients vs normal subjects

In a study by Zeinali et al[58], the authors aimed to differentiate heart sounds into normal, abnormal S3, and abnormal S4, which indirectly indicates an HF condition. The authors applied a supervised machine learning algorithm to 650 samples from 104 abnormal and 52 normal subjects. Gradient boosting classification combined with a feature reduction algorithm performed best with 0.91 accuracy (F1 score), followed by random forest and SVM with 0.83 accuracy.

Zheng et al[62] extracted heart sound features with maximum entropy spectra estimation, empirical mode decomposition, and MF-DFA to account for the non-stationary and multifractal nature of heart sounds. In this study, only interpretable features were selected to classify patients with HF (labeled as subjects with low cardiac reserve) and normal subjects. An SVM classifier achieved high accuracy (95.36%). The selected interpretable features include higher diastolic/systolic amplitude (D/S), indicating myocardial perfusion state; S1 and S2 amplitudes, reflecting cardiac contractility and resistance; and delta alpha, representing the multifractal nature of heart sounds, which are diminished in HF patients.

In another study, Jain et al[63] studied 303 patients and extracted 14 features to compare different types of classifiers in a supervised learning model. They found that the XGB classifier performed better than logistic regression and SVM in classifying patients with heart disease.

Additionally, Liu et al[64] studied the classification of a more specific population, HFpEF patients vs healthy subjects. The authors used MF-DFA feature extraction methods to account for the multifractality of heart sounds. Furthermore, they used an extreme learning machine (ELM) classifier, which is assumed to be faster and more effective than SVM. Twelve features were significantly different between the two populations and were input into the ELM classifier. The ELM classifier was more accurate than the SVM classifier (96.32% vs 94.01%). This study further elaborated and explained the extracted features in the discussion, where the D/S value reflected the blood perfusion during diastole; h (q minimum), h (q maximum) displayed the murmur generated by the heart sounds; and alpha maximum and minimum displayed signal fluctuations, which indicated the multifractality of heart sounds[65].

With a more advanced method, Gjoreski et al[44] proposed the use of a stacking module of different machine learning classifiers (SVM, J48, naïve Bayes, KNN, random forest, bagging, and boosting) to distinguish chronic HF patients from healthy subjects based on heart sounds. In this study, the authors used statistical functions (extremes, statistical moments, percentiles, durations) to extract features for the machine learning process. The stacking model of five classifiers had the highest accuracy (F1 0.87).

One study that used a deep learning model to classify HF patients vs normal subjects was by Gjoreski et al[44]. In this study, the authors proposed the combination of deep learning and feature-based learning in identifying chronic HF patients based on heart sound. The generated model was tested on different sound datasets and performed better than logistic regression, random forest, and segment-based classifiers. Additionally, the authors found that the model can differentiate between recompensated and decompensated patients based on 15 selected features. Similarly, in a study by Susič et al[46], the authors aimed to differentiate recompensated and decompensated HF patients. In this study, the authors compared ten models and selected the extracted features to maintain transparency. As expected, most selected features were derived from diastolic parameters, which underscores the prevalence of diastolic dysfunction in HF patients. From the ten selected models, logistic regression had the highest accuracy (0.72).

Another study that used a deep learning model was that by Gao et al[45]. The authors proposed the use of an advanced deep learning algorithm to classify heart sounds of patients with HFrEF vs HFpEF vs normal subjects. The study included 42 HFrEF patients, 66 HFpEF patients, and 1297 healthy subjects. The authors used an improved recurrent neural network that exploited the time series nature of the heart sound signal. In this deep learning algorithm, the pre-processed data were fed into long short-term memory and gated recurrent unit (GRU) networks. Compared to other classifier models including SVM, convolutional deep learning, and Liquid State Machine, the GRU algorithm had the highest average accuracy (F1 98.82), followed by Long Short-Term Memory (F1 96.29), convolutional deep learning (F1 94.65), and SVM (F1 87.62).

Heart sounds for risk stratification and prognostic evaluation in HF patients

In a study by Zheng et al[66], the authors aimed to classify HF patients based on their ACC/AHA stage classification (stage A-D). The authors extracted features based on the scale and domain groups of the heart sounds. The combination of features extracted from the scale and domain groups acquired the best accuracy with SVM compared to random forest classifier.

In addition to population classification, machine learning applied to heart sounds has been used to correlate them with various echocardiographic parameters. A deep learning model generated by Yang et al[67] using the deep convolutional generative adversarial networks demonstrated high accuracy in classifying patients with left ventricular diastolic dysfunction. Diastolic dysfunction is further classified into mild, moderate, and severe based on the American Society of Echocardiography/European Association of Cardiovascular Imaging guidelines which use the E/A ratio, deceleration time, E/e’ ratio, and left atrial volume index parameters.

Furthermore, in a study by Harata et al[68], a novel cardiac performance system (CPS) was developed to capture electrocardiography and acoustic signals through a biosensor attached to the skin. The results showed that measurements from this biosensor correlated with left ventricular ejection fraction (LVEF). Similarly, a study by Howard-Quijano et al[70] showed that LVEF measured with CPS was not significantly different from LVEF measured with transthoracic echocardiography. The area under the curve (AUC) values for predicting LVEF < 35% and < 50% were 0.974 and 0.916, respectively. A study by Harata et al[68] also proposed the use of sound data obtained from mobile phone microphones to generate a machine learning model to predict LVEF. A simple logistic regression classifier was used, and features were selected by the authors to preserve mathematical rigor. The results showed promisingly high accuracy (> 0.90) across various populations (age, race, and sites).

In a study by Sandler et al[69], the authors utilized seismocardiography (SCG) to detect respiratory variability and the third heart sound amplitude for early detection of worsening HF. Unsupervised learning was implemented in 40 HF patients’ data. The variability of SCG measurements decreased as patients were closer to re-hospitalization.

Another potential use of heart sounds in HF patient care is the detection of a complication (aortic regurgitation) following implantation of a continuous-flow left ventricular assist device (LVAD). One study applied three selected features from 245 LVAD sound recordings. The results showed high accuracy (91%) and moderate AUC (0.73) for predicting aortic regurgitation[70,71].

PREVIOUS STUDIES ON THE APPLICATION OF MACHINE LEARNING FOR ANALYZING LUNG SOUNDS IN PATIENTS WITH HF

Normal lung sound waveform is relatively stable with little amplitude fluctuation. When adventitious lung sounds are present, amplitude fluctuation will change according to the associated pathologies. There have been limited publications that evaluated lung sounds in HF patients. Most available studies have distinguished lung sounds into various types of adventitious sounds, such as crackles, wheezes, and rubs, or by specific pulmonary diseases like chronic obstructive pulmonary disease and pneumonia. However, these studies have not focused on HF conditions[51]. Currently, only one database by Fraiwan et al[72] included HF patients, but no further analysis was performed.

We identified four studies that evaluated the use of thoracic cavity sound in classifying HF patients. These studies did not separately analyze heart and lung sounds. In brief, a study by Yang et al[73] evaluated the thoracic cavity sound of six HF and six healthy subjects and found that feature extraction with MFCC with kNN classification acquires the highest accuracy (95.7%), followed by kNN classification with PLPC feature extraction (94.7%). Another proposed method by Hong et al[72] using an NMF model resulted in a lower accuracy score (86%). Hong et al[74] also compared SSFE feature extraction and MFCC to distinguish HF patients. They found that SSFE with kNN classification achieved the highest accuracy score (99%). In another study by Hong et al[75], 54 patients with HFrEF and 54 healthy subjects were analyzed with SVM classifier to identify HF subjects based on thoracic cavity sound. The results showed a promisingly high sensitivity and specificity (88% and 98%, respectively) to detect lung crepitation in HF patients.

FUTURE RECOMMENDATIONS

The overall results from these machine learning model studies in HF patients demonstrate a promisingly high level of accuracy. Machine learning models have the potential to intervene in different pathways of HF patient care. In this literature review, different studies demonstrated that machine learning models of thoracic cavity sound can fulfill some of the functions of echocardiography, such as discriminating HF patients according to ejection fraction.

Compared with brain natriuretic peptide (BNP), the gold standard for the diagnosis of HF, the AI algorithm shows accuracy comparable to that of BNP (AUC = 0.94) while using a less invasive modality. In addition, an AI algorithm incorporating thoracic cavity sound offers a more practical tool for more frequent evaluation of HF patients.

However, several important limitations exist. The included studies are at risk of overfitting and lack generalizability due to the populations studied. Additionally, the lack of long-term clinical research has limited the application of AI in the medical field. Therefore, future prospective studies should be conducted to incorporate these machine learning models and to further assess their robustness and usefulness in daily clinical practice. The integration of machine learning in managing HF patients is undoubtedly important, and with the rapid advancement of technology and knowledge, we expect significant improvements in diagnostic accuracy, treatment personalization, and overall patient outcomes. This is particularly true for reducing the rehospitalization rate in HF patients through the early detection of worsening HF by analyzing the subtle features in thoracic cavity sounds with the aid of machine learning. Future advances in technology will facilitate home-based monitoring for HF patients, where an integrated system involving healthcare personnel can address potential issues before the patient progresses to acute HF, thereby reducing the need for hospitalization and subsequently decreasing disease burden. Lastly, it should also be emphasized that the application of AI in the medical field is far more challenging because of ethical and regulatory barriers. With the emergence of more robust studies on the implementation of AI in the medical field, these barriers could be overcome soon[76].

CONCLUSION

Thoracic cavity sounds may encompass a wealth of informative features that reflect the pathophysiological processes in the hearts of HF patients. These features can only be extracted with the aid of machine learning. Hence, the application of machine learning to analyze thoracic cavity sounds holds potential in the management of HF patients.

Provenance and peer review: Invited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Cardiac and cardiovascular systems

Country of origin: Indonesia

Open Access: This article is an open-access article that was selected by an in-house editor and fully peer-reviewed by external reviewers. It is distributed in accordance with the Creative Commons Attribution NonCommercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: https://creativecommons.org/Licenses/by-nc/4.0/
