Published online Nov 27, 2025. doi: 10.4240/wjgs.v17.i11.112058

Revised: August 16, 2025

Accepted: September 25, 2025

Published online: November 27, 2025

Processing time: 132 Days and 5.6 Hours

The management of liver transplant recipients and the prediction of their outcomes are complex because of nonlinear interactions among multiple pre-, peri- and postoperative factors. Artificial intelligence (AI) has a potentially significant role in under

Core Tip: Artificial intelligence (AI) has the potential to revolutionize liver transplantation by improving outcomes and optimizing resource allocation. It can aid in diagnosis, assessment of transplant candidacy, donor-recipient matching, evaluation of donor liver quality, prediction of surgical complexity and complications, and real-time surgical guidance. After transplantation, AI can help detect early signs of complications and tailor immunosuppression, with the aim of improving patient and graft survival. By leveraging advanced algorithms and machine learning techniques, AI provides clinicians with powerful tools to improve patient outcomes and streamline the transplantation process. As we move forward, it is imperative to address ethical challenges and ensure the responsible use of AI in healthcare.

- Citation: Goja S, Yadav SK. Artificial intelligence in liver transplantation: Opportunities and challenges. World J Gastrointest Surg 2025; 17(11): 112058

- URL: https://www.wjgnet.com/1948-9366/full/v17/i11/112058.htm

- DOI: https://dx.doi.org/10.4240/wjgs.v17.i11.112058

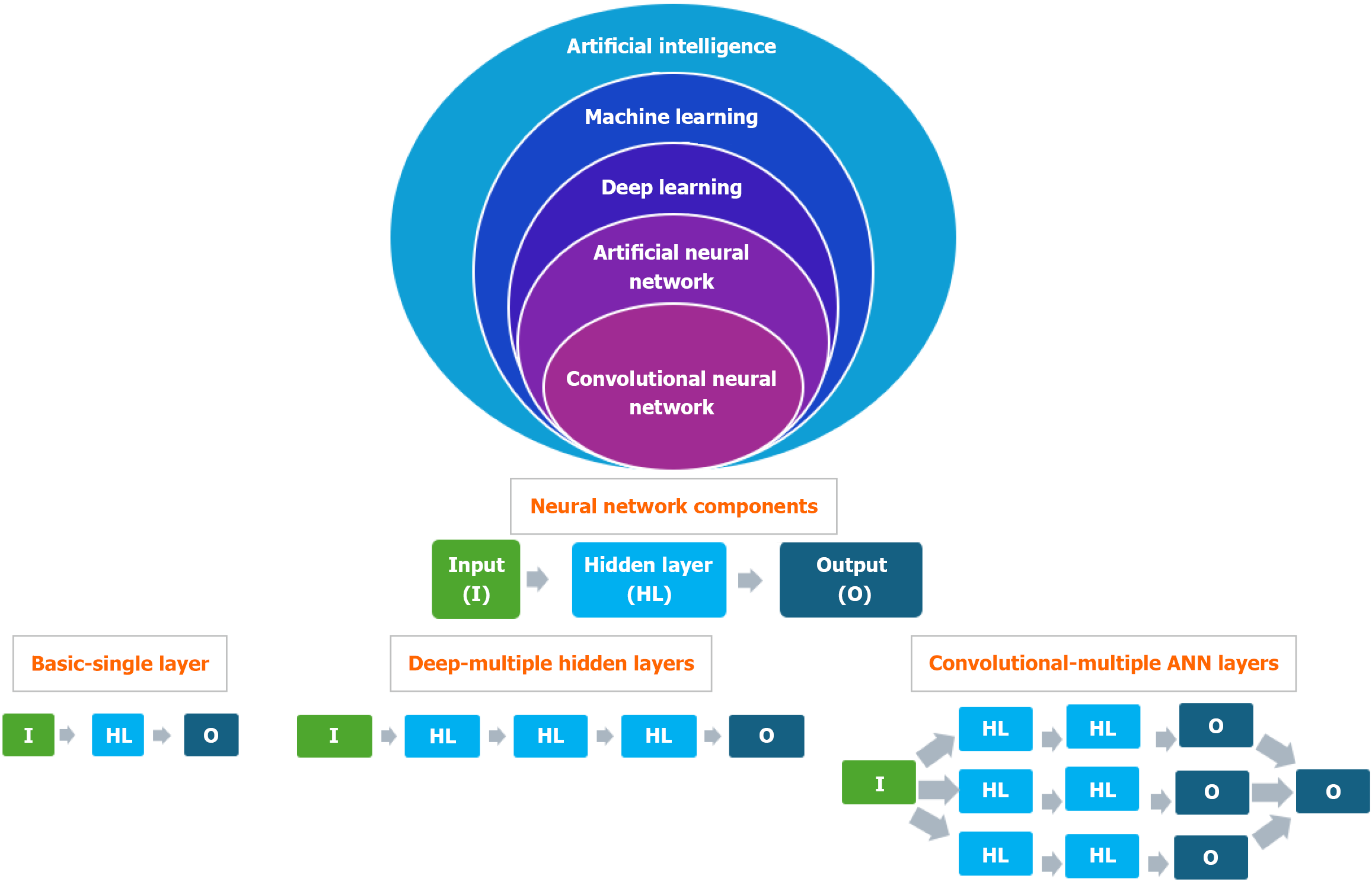

Artificial intelligence (AI) utilizes algorithms and extensive datasets to simulate human cognitive functions, enabling machines to analyze information, identify patterns, make decisions and adapt to novel situations without explicit human intervention (Figure 1). AI encompasses capabilities such as reasoning, learning, problem-solving, perception and comprehending natural language[1].

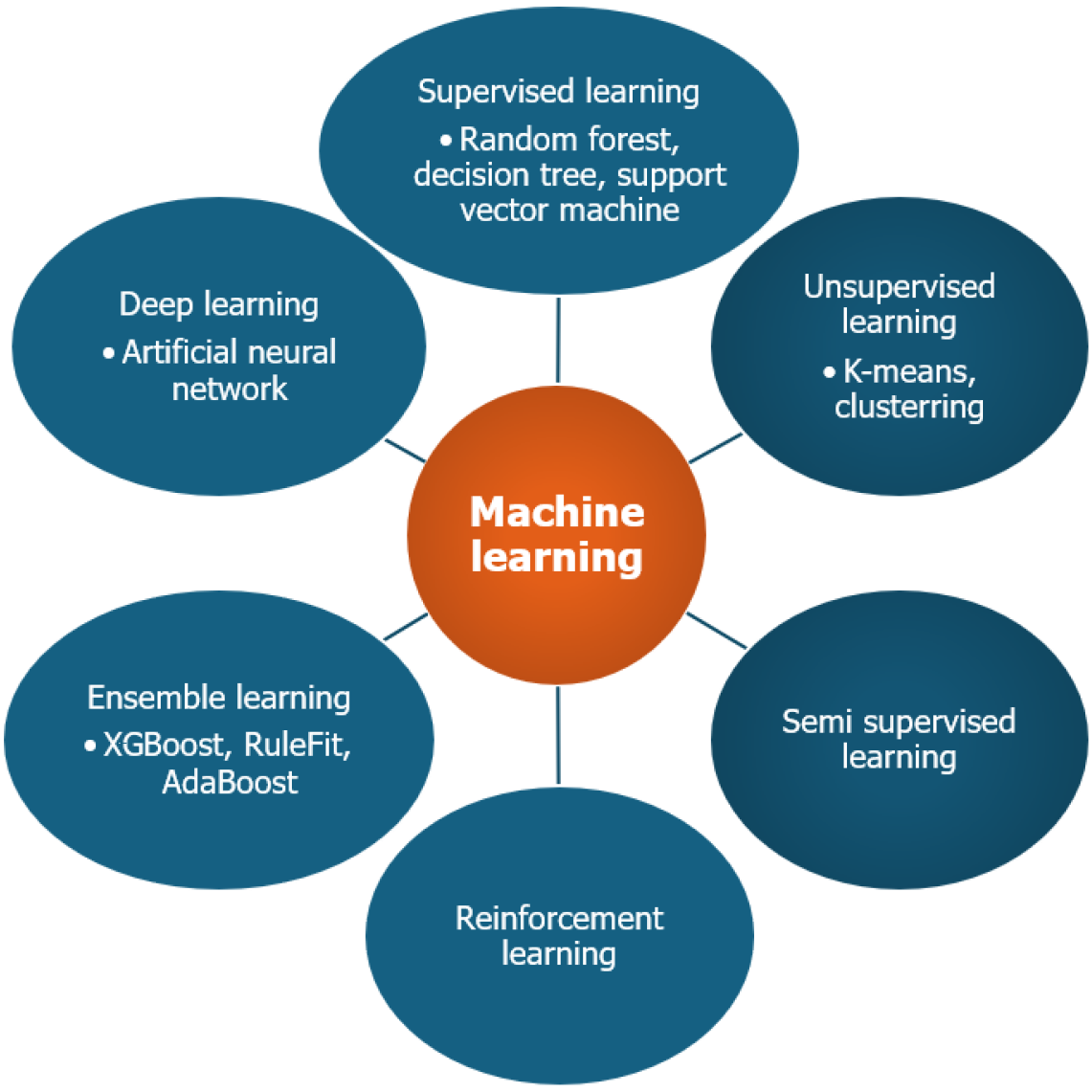

Machine learning (ML) is a type of AI that enables systems to learn from data, adapt to new scenarios and make predictions without explicit programming. The learning process involves feeding large datasets, either labeled or unlabeled, to an ML model, which then identifies patterns and relationships within the data to derive meaningful insights and conclusions[2]. Hence, the availability of appropriate data is the backbone of ML. The commonly used ML approaches are shown in Figure 2, and a brief description of the various types of ML models is given in Table 1.

| Type of machine learning | Methods and data used | Applications |
| --- | --- | --- |
| Supervised learning | Labeled data | Predicts known outcomes, e.g., SVM |
| Unsupervised learning | Unlabeled data | Identifies hidden patterns in the data, e.g., K-means clustering |
| Semi-supervised learning | Hybrid: a small amount of labeled data and a larger amount of unlabeled data | Useful when obtaining labeled data is expensive or time-consuming |
| Reinforcement learning | Learning through interaction and feedback (based on the reward-and-punishment concept of human behavioral evolution) | Useful in robotics and natural language processing |
| Ensemble learning | Multiple base models combined to develop a more accurate predictive model | Enhances accuracy and reduces overfitting |
| Deep learning | Based on artificial neural networks inspired by the neural networks of the human brain | Useful in handling unlabeled data, e.g., image recognition, natural language processing |

In supervised learning, a labeled dataset is used to train models that predict the output for new, unseen data; it is mainly used to predict known results or outcomes[3]. In unsupervised learning, by contrast, an unlabeled dataset is used to identify hidden patterns, intrinsic structures or relationships within the data, without explicit guidance on what to look for. This approach is useful for exploratory data analysis, dimensionality reduction and clustering tasks, where the objective is to discover natural groupings or to reduce the complexity of the data. The basic differences between supervised and unsupervised ML are shown in Table 2.

| Characteristics | Unsupervised | Supervised |
| --- | --- | --- |
| Definition | The machine tries to find hidden patterns in the data by itself, without human intervention | The machine identifies patterns in new data based on labeled input data, with human supervision |
| Input data | Unlabeled | Labeled |
| When to use | You do not know what you are looking for in the data | You know what you are looking for in the data |
| Typical tasks | Clustering and association problems | Classification and regression problems |
| Accuracy of results | May provide less accurate results | Provides more accurate results |
| Common algorithms | k-means clustering, hierarchical clustering, principal component analysis | Support vector machine, decision tree, random forest |
| Examples of use | Anomaly detection, customer segmentation, preparing data for supervised learning | Spam filters, price prediction, image identification |
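To make the unsupervised case concrete, the following is a minimal sketch of k-means clustering (Lloyd's algorithm), one of the unsupervised algorithms listed in Table 2, in plain Python. It assumes numeric features already on comparable scales and uses a deterministic farthest-point initialization chosen here for reproducibility; the function name `kmeans` and the toy data are hypothetical and do not come from any LT study.

```python
import math

def kmeans(points, k, n_iter=100):
    """Cluster unlabeled points into k groups; returns (centroids, labels)."""
    # Farthest-point initialization: start from the first point, then repeatedly
    # add the point farthest from all centroids chosen so far.
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    labels = []
    for _ in range(n_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Update step: move each centroid to the mean of its member points.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster where it was
        if new_centroids == centroids:
            break  # no centroid moved: converged
        centroids = new_centroids
    return centroids, labels

# Hypothetical unlabeled data: two well-separated groups of 2-D feature vectors.
data = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
centroids, labels = kmeans(data, k=2)
print(labels)  # → [0, 0, 0, 1, 1, 1]
```

No outcome labels are supplied at any point; the algorithm only groups similar cases, and a clinician would still have to interpret what each cluster means.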

Reinforcement learning is learning through interaction and feedback. The ML model learns from its own successes and mistakes on the basis of rewards and punishments, mimicking the reward-driven evolution of human behavior[4]. Reinforcement learning has enabled AI systems to achieve high performance in some complex tasks, in certain cases exceeding human capabilities.
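The reward-and-punishment loop can be illustrated with a multi-armed bandit, a deliberately simplified reinforcement learning setting with a single state. The sketch below assumes three hypothetical actions with made-up success probabilities; an epsilon-greedy agent mostly exploits the action with the best running reward estimate and occasionally explores at random. It is a teaching example only, not a clinical decision tool.

```python
import random

def epsilon_greedy_bandit(success_probs, n_steps=5000, epsilon=0.1, seed=42):
    """Learn which action pays off best purely from reward feedback."""
    rng = random.Random(seed)
    n_actions = len(success_probs)
    counts = [0] * n_actions       # how often each action was tried
    estimates = [0.0] * n_actions  # running mean reward per action
    for _ in range(n_steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)                           # explore
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        # Environment feedback: reward 1 ("success") or 0 ("punishment").
        reward = 1.0 if rng.random() < success_probs[action] else 0.0
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]  # incremental mean
    return estimates, counts

# Hypothetical environment: action 2 succeeds most often, but the agent is not told this.
estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print(counts.index(max(counts)))  # the action the agent learned to prefer
```

The epsilon parameter encodes the exploration/exploitation trade-off: without occasional random exploration, the agent could lock onto whichever action happened to pay off first.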

In ensemble learning[5], the predictions of multiple base or weak models are used to develop a more accurate and robust predictive model than any individual model alone. The primary benefits of ensemble learning include enhanced accuracy of outcomes and reduced overfitting. Commonly utilized ensemble methods are Bagging (Bootstrap Aggre
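Why combining models can help is easiest to see in an idealized calculation. Assuming n classifiers that are each correct with probability p and make their errors independently (an assumption rarely met in practice, where models trained on the same data tend to err together), a majority vote is correct whenever more than half of them are, so its accuracy is the sum over k > n/2 of C(n, k) p^k (1 - p)^(n - k). The function name below is hypothetical.

```python
from math import comb

def majority_vote_accuracy(n_models, p):
    """P(a strict majority of n independent classifiers, each correct with prob. p, is correct)."""
    if n_models % 2 == 0:
        raise ValueError("use an odd number of models so the vote cannot tie")
    k_min = n_models // 2 + 1
    return sum(comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
               for k in range(k_min, n_models + 1))

for n in (1, 3, 5, 11):
    print(n, round(majority_vote_accuracy(n, 0.7), 3))
```

With p = 0.7, three independent voters give 0.7³ + 3(0.7²)(0.3) = 0.784, already better than any single model. With correlated errors the gain shrinks, which is why bagging and random forests deliberately inject randomness to decorrelate their base models.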

Deep learning[6] is a type of ML that uses artificial neural networks (ANNs) with multiple layers to analyse data and make predictions or decisions. It is inspired by the structure and function of the human brain and is particularly effective at handling large, complex datasets and tasks such as image and speech recognition. ANNs[7] are computational models based on the structure of the neural networks (NN) of the human brain and serve as the building blocks of deep learning. They consist of interconnected layers of nodes, or "neurons", which process input information and generate outputs. An ANN is composed of an input layer (which receives the raw data), one or more hidden layers (which process the data; networks with multiple hidden layers are called deep NNs [DNNs]) and an output layer (which produces the result, such as a classification or prediction) (Figure 1).
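The layer structure described above can be sketched as a forward pass through a tiny fully connected network: three inputs, one hidden layer of two ReLU neurons and a single sigmoid output that could be read as a probability. The weights are arbitrary hypothetical values; in a real model they are learned from data (typically by backpropagation), which this sketch does not show.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: neuron j outputs activation(sum_i w[j][i] * x[i] + b[j])."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(x, layers):
    """Feed the input through each (weights, biases, activation) layer in order."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

hidden = ([[0.1, 0.2, 0.3], [-0.5, 0.0, 0.1]], [0.0, 0.0], relu)  # 3 inputs -> 2 hidden neurons
output = ([[1.0, 1.0]], [-1.0], sigmoid)                          # 2 hidden -> 1 output neuron
x = [1.0, 2.0, 3.0]                                               # hypothetical scaled features
print(forward(x, [hidden]))          # hidden-layer activations
print(forward(x, [hidden, output]))  # final output, a value in (0, 1)
```

Adding more `(weights, biases, activation)` entries between `hidden` and `output` turns this into a deeper network without changing `forward`.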

Table 3 summarizes the ML algorithms used in liver transplantation (LT); decision trees, random forests (RF) and logistic regression (LR) are the most common.

| Algorithm | Characteristics and applications |
| --- | --- |
| Random forest | Can predict post-transplant outcomes |
| Decision tree | Useful for classification and regression tasks |
| Logistic regression | Useful in predicting binary outcomes such as survival or graft failure |
| Support vector machines | Useful in classification, regression and outlier detection; can predict patient outcomes from medical records and diagnose diseases |
| K-nearest neighbors | Classifies data points based on their proximity to other data points, assuming that nearby points share similar traits; can predict patient outcomes by comparing new data with historical cases |
| Artificial neural networks | Inspired by the human brain; consist of multiple layers of neurons; useful in image and speech recognition and predictive models |
| Gradient boosting machines (GBM) | Ensemble learning method; useful in regression and classification problems; can handle large-scale data |
| XGBoost | Advanced form of GBM; less prone to overfitting than GBM |
| AdaBoost | Ensemble learning method; useful in image classification, sentiment analysis and fraud detection |
| RuleFit | Ensemble learning method that combines decision trees and linear models to form interpretable rules that reveal data patterns; useful for understanding decisions, especially in regulatory contexts |
| Tab transformers | Useful for handling tabular data |
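The k-nearest neighbors entry in Table 3 can be illustrated in a few lines. This sketch assumes numeric features that have already been scaled to comparable ranges (distance-based methods are sensitive to units) and uses Euclidean distance with a simple majority vote; the training cases, the 0/1 outcome coding and the function name are hypothetical.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest training cases."""
    by_distance = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical historical cases: two scaled features each, outcome 1 = event, 0 = no event.
train_X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (5.0, 5.0), (5.0, 6.0), (6.0, 5.0)]
train_y = [0, 0, 0, 1, 1, 1]
print(knn_predict(train_X, train_y, (0.5, 0.5)))  # → 0
print(knn_predict(train_X, train_y, (5.5, 5.5)))  # → 1
```

In practice k is usually tuned by cross-validation, and an odd k avoids tied votes in two-class problems.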

The RF algorithm generates multiple decision trees during training and uses the mode of their predictions (for classification) or the mean prediction (for regression) as the final output. Each tree is built from a random (bootstrap) subset of the data, and the overall prediction combines all trees. This method improves accuracy, reduces overfitting and is often used for post-transplant outcome prediction.
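The following is a deliberately simplified random forest sketch: each "tree" is a one-split decision stump trained on a bootstrap sample of the rows and on one randomly chosen feature, and the forest predicts the mode of the stumps' votes. Real random forests grow deeper trees and sample a random subset of features at every split; the helper names and the toy data here are hypothetical.

```python
import random
from collections import Counter

def fit_stump(X, y, feature):
    """Best single threshold on one feature, scored by the number of misclassified rows."""
    values = sorted({row[feature] for row in X})
    # Candidate thresholds are midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])] or [values[0]]
    best = None
    for threshold in candidates:
        left = [lab for row, lab in zip(X, y) if row[feature] <= threshold]
        right = [lab for row, lab in zip(X, y) if row[feature] > threshold]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(lab != left_label for lab in left) + sum(lab != right_label for lab in right)
        if best is None or errors < best[0]:
            best = (errors, (feature, threshold, left_label, right_label))
    return best[1]

def fit_forest(X, y, n_trees=25, seed=0):
    rng = random.Random(seed)
    n, n_features = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        rows = [rng.randrange(n) for _ in range(n)]  # bootstrap: sample rows with replacement
        feature = rng.randrange(n_features)          # random feature for this stump
        forest.append(fit_stump([X[i] for i in rows], [y[i] for i in rows], feature))
    return forest

def predict_forest(forest, row):
    votes = [left if row[f] <= t else right for f, t, left, right in forest]
    return Counter(votes).most_common(1)[0][0]  # mode of the individual votes

# Hypothetical toy data: two numeric features, binary outcome.
X = [(1, 1), (2, 1), (1, 2), (2, 2), (8, 8), (9, 8), (8, 9), (9, 9)]
y = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(X, y)
print(predict_forest(forest, (1.5, 1.5)), predict_forest(forest, (8.5, 8.5)))
```

For regression, the mode in `predict_forest` would be replaced by the mean of the trees' numeric predictions.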

A decision tree is an ML algorithm for classification and regression. It recursively divides the data into subsets based on input features, creating a tree-like structure of decisions in which nodes represent decision points and branches represent their outcomes. Splitting continues until the subsets are homogeneous or another stopping criterion (such as a maximum depth) is met. Decision trees are valued for their interpretability.
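The core step of growing a decision tree, choosing where to split, can be sketched with the Gini impurity criterion used by CART-style trees (one common choice; entropy is another). The function below scans the thresholds on a single numeric feature and keeps the one that most reduces the weighted impurity of the two child nodes. The function names and the example values are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 - sum of squared class proportions (0 means a homogeneous node)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_split(values, labels):
    """Return (threshold, weighted child impurity) for the best split on one numeric feature."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best_threshold, best_score = None, gini(labels)  # a useful split must beat the parent node
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates identical values
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best_score:
            best_threshold, best_score = (pairs[i - 1][0] + pairs[i][0]) / 2, score
    return best_threshold, best_score

# Hypothetical predictor values with a binary outcome.
print(best_split([4, 5, 6, 9, 10, 12], [0, 0, 0, 1, 1, 1]))  # → (7.5, 0.0)
```

Applying `best_split` across all features, then recursively to each child node until nodes are homogeneous or a depth limit is reached, yields the full tree.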

LT is a complex surgery in which numerous perioperative factors affect patient outcomes. This complexity calls for sophisticated tools that can analyze large amounts of data and provide actionable insights. AI, with its ability to process and interpret complex datasets, is well placed to meet this need, and its integration into LT therefore has the potential to revolutionize the field.

AI has a potentially crucial role in pre-transplant assessment by aiding the evaluation of both donors and recipients. ML algorithms, such as LR and support vector machines (SVM), are employed to predict the suitability of donors and the potential risks for recipients. These algorithms analyze historical data, including medical records, imaging results and laboratory tests, to create predictive models that help clinicians make informed decisions.

Matching donors and recipients is an important step in LT, as it affects the success of the procedure and long-term outcomes, and applying AI to donor-recipient matching is likely to contribute to improved results. Traditional risk scoring systems, such as the Balance of Risk (BAR) and Survival Outcomes Following Transplantation (SOFT) scores, have limitations in balancing waitlist mortality against post-transplant graft and patient survival. AI algorithms can improve predictive accuracy by analyzing large datasets and detecting patterns that may not be immediately apparent to clinicians. Models such as K-nearest neighbors, gradient boosting, RF and decision trees can incorporate various types of data, including clinical, biochemical, imaging and histopathological information, to make more detailed predictions about donor-recipient compatibility. This approach may lead to a more efficient and equitable liver allocation process.

Advanced methods such as eXtreme Gradient Boosting (XGBoost) and AdaBoost can further enhance the matching process by delivering highly accurate predictions. These algorithms are adept at handling imbalanced datasets and recognizing complex patterns, thereby facilitating the identification of optimal matches. Furthermore, ANNs can leverage extensive data to assess the likelihood of success of donor-recipient pairings, which may reduce the risk of graft rejection and associated complications.

The organ shortage results in a significant supply-demand gap, with waitlist mortality of up to 20%. Organ allocation typically relies on the Model for End-Stage Liver Disease (MELD) score, although it does not fully capture the clinical complications of cirrhosis and portal hypertension, which carry significant morbidity and are independently associated with mortality risk. About 10% of patients with a low MELD score (≤ 15) face a high risk of death from liver-related complications, such as hepatic hydrothorax, that are not included in the MELD score[8]. Several modifications, including the addition of serum sodium (MELD-Na) and of albumin and sex (MELD 3.0), aim to address these disparities[9]. However, MELD still overlooks dynamic factors such as acute-on-chronic liver failure, sarcopenia, frailty and social determinants of health.
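For reference, the classic MELD score and the MELD-Na adjustment discussed above can be written as short functions. This sketch follows the widely published formulas, MELD = 3.78 ln(bilirubin, mg/dL) + 11.2 ln(INR) + 9.57 ln(creatinine, mg/dL) + 6.43 and MELD-Na = MELD + 1.32 × (137 - Na) - 0.033 × MELD × (137 - Na), with the commonly used bounds (laboratory values below 1.0 set to 1.0, creatinine capped at 4.0 mg/dL, sodium clamped to 125-137 mmol/L). Allocation policies add further rules (for example, dialysis handling, rounding and score caps), and MELD 3.0 adds albumin and sex; none of these are modelled here, so the code is illustrative only and should be checked against the current OPTN specification before any real use. The patient values are hypothetical.

```python
import math

def meld(bilirubin_mg_dl, inr, creatinine_mg_dl):
    """Classic MELD (unrounded), using the commonly published bounds on inputs."""
    bilirubin = max(bilirubin_mg_dl, 1.0)              # values below 1.0 are set to 1.0
    inr = max(inr, 1.0)
    creatinine = min(max(creatinine_mg_dl, 1.0), 4.0)  # creatinine capped at 4.0 mg/dL
    return (3.78 * math.log(bilirubin) + 11.2 * math.log(inr)
            + 9.57 * math.log(creatinine) + 6.43)

def meld_na(meld_score, sodium_mmol_l):
    """MELD-Na adjustment; sodium is clamped to the 125-137 mmol/L range."""
    sodium = min(max(sodium_mmol_l, 125.0), 137.0)
    deficit = 137.0 - sodium
    return meld_score + 1.32 * deficit - 0.033 * meld_score * deficit

# Hypothetical patient: bilirubin 3.0 mg/dL, INR 1.8, creatinine 1.5 mg/dL, sodium 128 mmol/L.
base = meld(3.0, 1.8, 1.5)
print(round(base), round(meld_na(base, 128)))
```

Written this way, it is easy to see that MELD uses only four laboratory inputs, which is why complications such as hepatic hydrothorax or frailty cannot influence it.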

Currently, there is an unmet need for an ideal organ allocation model that includes a comprehensive clinical assessment covering all complications of liver disease beyond the MELD score. Such a model should be transparent, ethical and dynamic, with real-time updates that reflect patients' evolving clinical condition. An AI-based organ allocation system is therefore the need of the hour: by leveraging AI, it could improve predictive accuracy, enhance patient outcomes and ensure a fair and efficient allocation process. As technology and medical practice continue to advance, such a system holds the potential to revolutionize LT and save more lives.

Multiple studies have shown that AI-based models surpass traditional MELD-based approaches in accurately pre

Bertsimas et al[11] developed an optimized prediction of mortality (OPOM) model using AI and machine-learning optimal classification tree models to predict a candidate's 3-month waitlist mortality or dropout. The Liver Simulated Allocation Model (LSAM) was used to compare OPOM to MELD-based allocation. A total of 1618966 observations were explored, analyzing 28 variables, 20 of which related to MELD and interval changes of its elements. OPOM-based allocation reduced mortality by an average of 417.96 (406.8-428.4) deaths per year in LSAM analysis compared to MELD-based allocation, with an AUROC of 0.859 compared to 0.841 for MELD-Na. Improved survival was observed across all candidate demographics, diagnoses and geographic regions. OPOM prioritizes candidates for LT based on disease severity, allowing for more equitable allocation of livers, resulting in additional lives saved every year. Unlike the NN algorithm, objective data was used in the OPOM model. However, the model was trained using unique observations rather than unique patients, which may affect reproducibility in the real world.
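Comparisons such as 0.859 vs 0.841 rest on the area under the receiver operating characteristic curve (AUROC). One way to see what the number means is its rank (Mann-Whitney) form: the probability that a randomly chosen patient who had the event received a higher predicted risk than a randomly chosen patient who did not, with ties counted as one half. The quadratic-time sketch below is for illustration only; the scores and labels are hypothetical.

```python
def auroc(scores, labels):
    """AUROC as P(random positive outranks random negative); ties count as 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical predicted risks (scores) and observed outcomes (1 = died or dropped out).
print(auroc([0.9, 0.4, 0.6, 0.1], [1, 1, 0, 0]))  # → 0.75
```

An AUROC of 0.5 corresponds to random ranking and 1.0 to perfect separation, so a difference such as 0.859 vs 0.841 reflects a modest improvement in how often candidates are ranked in the right order.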

Kanwal et al[12] included 107939 patients with cirrhosis followed in the Veterans Affairs health system from 2011 to 2015 to study a large number of clinical and laboratory predictors of survival in cirrhosis. The ML methods used were gradient descent boosting, LR with least absolute shrinkage and selection operator (LASSO) regularisation, and LR with LASSO constrained to select no more than 10 predictors (partial path model). The predictors identified in the most parsimonious (partial path) model were then refit using maximum-likelihood estimation to develop the Cirrhosis Mortality Model (CiMM). The CiMM, with its ML-derived clinical variables, predicted 1-year mortality significantly better than the MELD-Na score, with areas under the curve of 0.78 (95%CI: 0.77-0.79) vs 0.67 (95%CI: 0.66-0.68), respectively. The major limitations of the model were the inclusion of subjective clinical features, such as ascites and hepatic encephalopathy, and a study population limited to Veterans Affairs cohorts.
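The variable-selection behaviour of LASSO comes from its L1 penalty, which can shrink coefficients exactly to zero. The sketch below shows this with cyclic coordinate descent and soft-thresholding for a linear least-squares model, minimising (1/2n) ||y - Xb||² + λ ||b||₁. Note that it swaps in the linear case for simplicity, whereas Kanwal et al used LASSO-penalised logistic regression. It assumes centred (ideally standardised) predictors and fits no intercept; the function names and data are hypothetical.

```python
def soft_threshold(rho, lam):
    """Shrink rho toward zero by lam, returning exactly 0 inside [-lam, lam]."""
    if rho > lam:
        return rho - lam
    if rho < -lam:
        return rho + lam
    return 0.0

def lasso(X, y, lam, n_sweeps=200):
    """Coordinate descent for (1/2n)*||y - X b||^2 + lam*||b||_1 (centred X, no intercept)."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(n_sweeps):
        for j in range(p):
            # Correlation of feature j with the residual that ignores feature j's own term.
            rho = sum(
                X[i][j] * (y[i] - sum(X[i][k] * beta[k] for k in range(p) if k != j))
                for i in range(n)
            ) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            beta[j] = soft_threshold(rho, lam) / z
    return beta

# Hypothetical centred data: y depends on the first predictor only.
X = [[1, 1], [1, -1], [-1, 1], [-1, -1]]
y = [3, 3, -3, -3]
for lam in (0.0, 1.0, 5.0):
    print(lam, lasso(X, y, lam))
```

As λ grows, the informative coefficient shrinks and the uninformative one stays at exactly zero; increasing λ until no more than a chosen number of coefficients remain non-zero is one simple way to cap the number of selected predictors.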

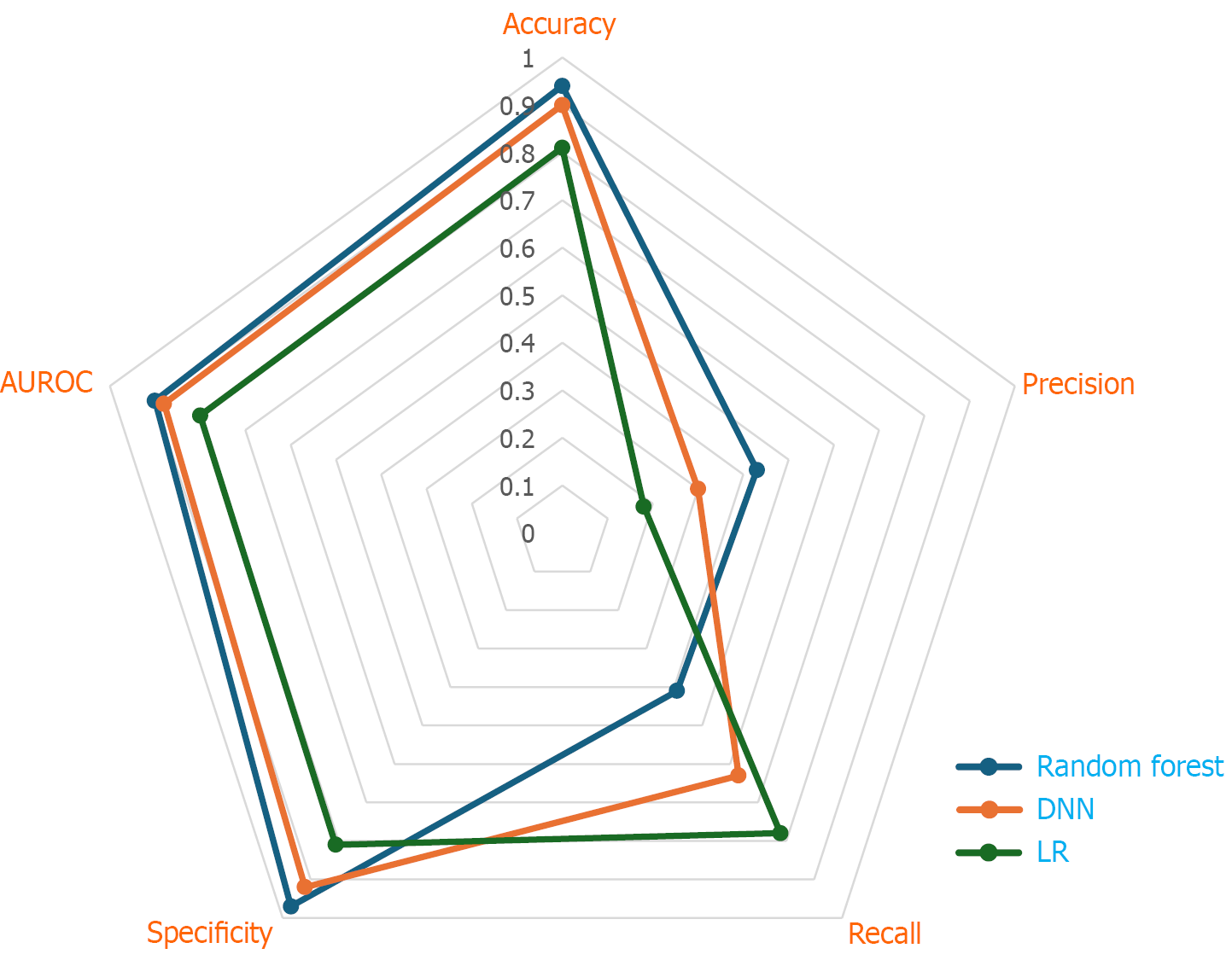

Guo et al[13] applied DNN, RF and LR to data from 34575 patients with cirrhosis to compare the predictive value of AI-based algorithms with that of the Sodium-Model for End-Stage Liver Disease (Na-MELD) score. The AI-based models outperformed the Na-MELD score in all prediction cases, and the DNN model outperformed the LR and RF models. Features other than the Na-MELD variables, such as hemoglobin and alkaline phosphatase, were also among the most informative features.

Kwong et al[14] developed an ML model using RF and Spearman's correlation analysis to predict waitlist dropout due to death or clinical deterioration in patients with hepatocellular carcinoma (HCC) listed for transplant. Of 1181 unique variables tested, 12 features involving 5 variables [ascites, tumor size, alpha-fetoprotein, bilirubin and international normalized ratio (INR)] were identified as significant predictors of waitlist dropout and were included in the final model. The algorithm achieved a concordance statistic of 0.74 for the prediction of 3-, 6- and 12-month waitlist dropout in HCC.

Norman et al[15] included 18273 patients with HCC in the Organ Procurement and Transplantation Network (OPTN) database from 2015 to 2022. They used ML approaches (random survival forests [RSF], gradient boosting, DeepHit and DeepSurv) and Cox proportional hazards models to develop a risk stratification model for patients with HCC using objective variables at the time of listing: the MELD 3.0 score, the components of MELD 3.0 (i.e., creatinine, sodium, bilirubin, albumin, international normalized ratio and female sex), alpha-fetoprotein (AFP) and tumor burden. The three best models in terms of discriminatory performance were the gradient boosting model (C-statistic, 0.72; 95%CI: 0.69-0.74), the RSF model (C-statistic, 0.71; 95%CI: 0.68-0.73) and a Cox model incorporating MELD 3.0, log (AFP) and tumor burden (C-statistic, 0.71; 95%CI: 0.69-0.74). To balance transparency, interpretability and performance, the authors proposed the Cox-based model, with MELD 3.0, log (AFP) and tumor burden, as the final model for waitlist dropout prediction. The C-statistic of this model (Multi-HCC) was superior to that of MELD 3.0 alone (P < 0.01) and of all other existing scores, including Hazard Associated with Liver Transplantation for HCC, MELD Equivalent and Product of Donor age and MELD (P < 0.01 for all), and similar to that of the gradient boosting and RSF ML models (P = 0.53). Hence, Multi-HCC could be implemented as an urgency-based priority system for organ allocation in patients with HCC.
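The C-statistics quoted for survival models such as these are usually Harrell's concordance index: among pairs of patients whose order of events can be determined, it is the share in which the patient with the higher predicted risk experienced the event first. The simplified sketch below ignores tied event times and treats tied risk scores as half-concordant; the follow-up data and the function name are hypothetical, and established survival-analysis libraries handle ties and censoring more carefully.

```python
def harrell_c_index(times, events, risk_scores):
    """Share of comparable pairs in which the higher-risk patient had the event first."""
    concordant, comparable = 0.0, 0
    n = len(times)
    for i in range(n):
        for j in range(n):
            # Comparable: patient i had an observed event before patient j's follow-up time.
            if events[i] and times[i] < times[j]:
                comparable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5
    return concordant / comparable

# Hypothetical follow-up: months to dropout or censoring, 1 = dropout observed, 0 = censored.
times = [2, 4, 6, 8]
events = [1, 1, 0, 1]
print(harrell_c_index(times, events, [0.9, 0.4, 0.5, 0.1]))  # → 0.8
```

Censored patients (event 0) never anchor a pair, because their true event time is unknown; they only serve as the later member of a pair.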

In non-directed (anonymous) living liver donation, an ML model can support decision-making in the graft allocation process, rather than its output being used in isolation. Bambha et al[16] used OPTN data on 6328 living donor LTs (LDLT) performed from 2001 to 2019 to develop an RF survival model that predicts 10-year graft survival. The model, which accounts for the complex interactions of donor and recipient factors, helped identify a potential recipient for a liver graft from a non-directed living donor based on predicted 10-year graft survival.

These studies have shown promising results for AI models in the organ allocation process in both deceased and living donor LT. A significant advantage of AI-driven models is their ability to provide dynamic, real-time updates based on the evolving clinical condition of patients, which could help keep the allocation process transparent, ethical and responsive to patients' changing health status. However, further studies are needed to address the limitations of AI-based models in LT and to ensure the equitable allocation of scarce donor livers.

Assessment of the graft-to-recipient weight ratio (GRWR), graft-to-spleen volume ratio and donor liver remnant is fundamental to the donor evaluation protocol in LDLT. A remnant of at least 30% in the donor, together with an acceptable GRWR in the recipient, is a key parameter for optimal outcomes in LDLT[17]. Despite acceptable outcomes with lower-GRWR grafts combined with inflow modulation, a certain critical graft weight is essential to avoid small-for-size syndrome in the recipient, which is associated with worse outcomes. Graft weight is currently estimated from the preoperative graft volume (GV) measured on computed tomography (CT). However, CT volumetry can overestimate (right lobe 18.7%, left lobe 39%) or underestimate (right lobe 11.8%, left lobe 15%) graft weight, which can have profound implications, especially for borderline grafts whose weight is overestimated[18].
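The arithmetic behind these donor-recipient checks is simple, and writing it out shows why volumetric error matters for borderline grafts. The sketch below computes GRWR as graft weight divided by recipient body weight (as a percentage) and the donor remnant as a share of total liver volume, then recomputes GRWR assuming the true graft weight is lower than the CT-based estimate by a chosen fraction. The 30% remnant threshold comes from the text above; the 0.8% GRWR threshold is a commonly cited value used here as an assumption, and the 15% error fraction, function names and example donor and recipient are hypothetical.

```python
def grwr_percent(graft_weight_g, recipient_weight_kg):
    """Graft-to-recipient weight ratio as a percentage (graft grams / recipient grams x 100)."""
    return graft_weight_g / (recipient_weight_kg * 1000.0) * 100.0

def remnant_percent(total_liver_volume_ml, graft_volume_ml):
    """Share of the donor's total liver volume that remains after graft procurement."""
    return (total_liver_volume_ml - graft_volume_ml) / total_liver_volume_ml * 100.0

def screen_ldlt_pair(est_graft_weight_g, recipient_weight_kg, total_liver_volume_ml,
                     graft_volume_ml, min_grwr=0.8, min_remnant=30.0,
                     overestimate_fraction=0.15):
    """Flag GRWR and remnant, including a pessimistic GRWR if CT overestimated the graft."""
    grwr = grwr_percent(est_graft_weight_g, recipient_weight_kg)
    worst_grwr = grwr_percent(est_graft_weight_g * (1 - overestimate_fraction), recipient_weight_kg)
    remnant = remnant_percent(total_liver_volume_ml, graft_volume_ml)
    return {
        "grwr": grwr,
        "worst_case_grwr": worst_grwr,
        "remnant": remnant,
        "grwr_ok": grwr >= min_grwr,
        "grwr_ok_if_overestimated": worst_grwr >= min_grwr,
        "remnant_ok": remnant >= min_remnant,
    }

# Hypothetical pair: estimated graft 640 g (640 mL), recipient 75 kg, donor liver 1500 mL.
print(screen_ldlt_pair(640, 75, 1500, 640))
```

In this example the estimated GRWR (about 0.85%) passes the assumed threshold, but a 15% overestimate would put the true GRWR near 0.73%, which is exactly the borderline scenario in which more accurate weight prediction matters.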

Several studies have explored the role of AI in graft weight prediction in LDLT. Giglio et al[19] developed and vali

Algorithms were trained using the following predictors: Donor’s age, sex, height, weight, and body mass index (BMI); graft type (left, right, or left lateral lobe); CT-estimated GV; and CT-estimated total liver volume.

The whole dataset was randomly split into a training set (80%) and a test set (20%). All ML models showed similar predictive performance, which was better than that of the existing modality. The ML model showed good overall precision, with 33.5% and 62% of graft weight predictions having errors below 5% and 10%, respectively. Estimation errors ≥ 15% were observed in only 18.4% of predictions. The mean absolute error (MAE) was 50 ± 61 g, significantly lower than that of the uncorrected GV (96 ± 81 g; P < 0.001).
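The evaluation protocol described above, a random 80/20 train/test split followed by the MAE and the share of predictions within given relative-error bands, can be sketched as follows. The helper names and the four test-set graft weights are hypothetical; they only illustrate how such percentages are computed, not the study's data.

```python
import random

def train_test_split(rows, test_fraction=0.2, seed=0):
    """Randomly shuffle rows and hold out a fraction of them as the test set."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)

def graft_weight_errors(actual_g, predicted_g):
    """MAE (g) and the share of predictions whose relative error falls in each band."""
    abs_err = [abs(p - a) for a, p in zip(actual_g, predicted_g)]
    rel_err = [e / a for e, a in zip(abs_err, actual_g)]
    n = len(abs_err)
    return {
        "mae_g": sum(abs_err) / n,
        "share_below_5pct": sum(r < 0.05 for r in rel_err) / n,
        "share_below_10pct": sum(r < 0.10 for r in rel_err) / n,
        "share_15pct_or_more": sum(r >= 0.15 for r in rel_err) / n,
    }

train, test = train_test_split(list(range(10)))
print(len(train), len(test))  # → 8 2
# Hypothetical measured vs predicted graft weights (g) for four test-set donors.
print(graft_weight_errors([500, 600, 700, 800], [510, 650, 560, 800]))
```

Reporting the error bands alongside the MAE is informative because an acceptable average error can still hide a minority of large misestimates, which are the clinically dangerous ones.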

In a single-center study of 114 donors from 2022 to 2023, Oh et al[20] found that the volume predictions of a deep learning (DL) model were more strongly associated with actual graft weights (R² = 0.76) than the surgeon's estimates (R² = 0.68).

These studies represent a significant step forward in the use of ML models for accurate preoperative prediction of graft weight. Further gains in accuracy can be expected from training ML models on large datasets from international multicenter studies that include donors of different ethnicities and geographic regions.

Similarly, AI models have the potential to assist in planning living donor surgery. In a limited single-center cohort of 250 donor hepatectomies, Oh et al[21] demonstrated that the U-Net deep learning model performed promisingly in planning donor surgery with respect to biliary anatomy. However, a larger multicenter study with a large dataset and diverse biliary anatomy holds promise to improve outcomes in donor surgery with such tech

AI can play a crucial role in assessing the quality of liver grafts. For instance, semi-supervised ML models such as SVM-SIL have demonstrated 93% sensitivity and 89% accuracy, relative to liver biopsy, in identifying hepatic steatosis (> 30%) from smartphone images and donor data[22]. Such innovations could be particularly beneficial in remote areas where expert pathologists may not be readily available, helping to ensure that only viable grafts are used for transplantation.

Similarly, in the LDLT setting, AI-based models can be used to assess donor steatosis. Gambella et al[23] used 292 allograft liver needle biopsies obtained from 2016 to 2020 to develop and validate a deep-learning algorithm, a convolutional NN (CNN), for unbiased automated steatosis assessment based on the Banff consensus recommendations (intraclass correlation coefficient 0.93). Implementing such a CNN model in daily clinical practice could allow more efficient and safer allocation of donor organs, helping to augment donor safety and improve post-transplant recipient outcomes.

Many centers include a pre-transplant liver donor biopsy in their donor evaluation protocol. However, Lim et al[24] introduced the DONATION ML model, which predicts hepatic macrovesicular steatosis greater than 5% using nonin

During surgery, AI could assist in real-time decision-making and management. AI-driven systems can monitor vital signs, blood flow and organ function, providing surgeons with critical information throughout the procedure. For example, Tab Transformers could process real-time data to alert surgeons to potential complications or deviations from the optimal surgical plan. AI can also aid surgical planning by simulating different scenarios and predicting outcomes; RuleFit algorithms, for instance, could help identify the best surgical techniques based on previous cases, enhancing the precision and efficiency of the operation. Such real-time assistance would allow surgeons to make data-driven decisions and could ultimately improve the success rate of LT.

AI has the potential to improve post-transplant care, and with it patient and graft survival, by enabling personalized treatment, facilitating early detection of complications and improving management efficiency. Research has examined the use of AI models to predict and manage complications and survival outcomes after transplantation. LR models, for example, are applied to forecast binary outcomes such as graft survival or failure, allowing clinicians to respond early and modify treatment plans as needed.
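A logistic regression model maps a weighted sum of predictors to a probability through the sigmoid function, p = 1 / (1 + e^(-z)) with z = b0 + w·x. The sketch below fits the coefficients by plain batch gradient descent on the log-loss; the single centred predictor, the graft-failure labels and the function names are hypothetical, and in practice a tested library implementation (for example, scikit-learn's `LogisticRegression`) with regularization and calibration checks would be used instead.

```python
import math

def predict_proba(b0, w, x):
    """Probability of the positive class (e.g., graft failure) for one feature vector."""
    z = b0 + sum(wj * xj for wj, xj in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Batch gradient descent on the average log-loss."""
    n, p = len(X), len(X[0])
    b0, w = 0.0, [0.0] * p
    for _ in range(n_iter):
        grad_b0, grad_w = 0.0, [0.0] * p
        for xi, yi in zip(X, y):
            err = predict_proba(b0, w, xi) - yi  # d(log-loss)/dz for this observation
            grad_b0 += err
            for j in range(p):
                grad_w[j] += err * xi[j]
        b0 -= lr * grad_b0 / n
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
    return b0, w

# Hypothetical centred predictor (one value per patient) and outcome (1 = graft failure).
X = [[-2.5], [-1.5], [-0.5], [0.5], [1.5], [2.5]]
y = [0, 0, 0, 1, 1, 1]
b0, w = fit_logistic(X, y)
print(round(predict_proba(b0, w, [-2.0]), 3), round(predict_proba(b0, w, [2.0]), 3))
```

With perfectly separable toy data like this, the unpenalized coefficient keeps growing with more iterations; regularization (as in most library implementations) prevents that and is one reason real models are fitted with established tools.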

Yu et al[25] analysed data from 785 deceased donor liver transplant recipients enrolled in the Korean Organ Transplant Registry between 2014 and 2019. The authors employed five ML methods (RF, ANNs, decision tree, naïve Bayes and SVM) and four traditional statistical models (Cox regression, MELD score, donor MELD score and balance of risk score) to predict post-transplant survival. The RF method demonstrated the highest AUC-ROC values for predicting survival at 1 month (0.80), 3 months (0.85) and 12 months (0.81). Key predictors identified in the RF analysis included cold ischemia time, donor intensive care unit stay, recipient weight, recipient BMI, recipient age, recipient INR and recipient albumin level.

In a 5-year longitudinal study with 1799 observations analyzed using survival ML methods, Ridge with RSF as the feature selection method (C-index 0.699) and RSF with RSF as the feature selection method (C-index 0.784) were the best models for predicting biliary complications (BC) and mortality, respectively. The top predictors of BC, in order of importance, were LT graft type, inflammatory bowel disease in the recipient, recipient BMI, a history of portal vein thrombosis in the recipient and a previous liver transplant. The top predictors of mortality, in order, were immediate post-transplant aspartate aminotransferase (AST), immediate post-transplant creatinine, recipient age, immediate post-transplant alanine aminotransferase and tacrolimus consumption[26].

Using ML in a cohort of patients from the Scientific Registry of Transplant Recipients (SRTR) database, Yasodhara et al[27] identified hypertension, decreased renal function and sirolimus maintenance immunosuppression as modifiable risk factors for long-term survival in liver transplant recipients with diabetes mellitus.

Several predictive models, such as the donor risk index (DRI), SOFT and BAR scores, have been developed to estimate 90-day post-LT survival. The DRI uses only donor characteristics, whereas the other scores incorporate both donor and recipient factors. These models typically rely on a static snapshot of health status to predict survival within 90 days of LT. Because of the complex and dynamic interactions among various factors, multiple ML models have also been used to predict post-LT survival. Studies evaluating ML models, including RF, NN, LR, XGBoost and transformer models, have reported AUCs between 0.622 and 0.98 and accuracies of up to 95.8% across diverse datasets and time frames. Recent research has highlighted the importance of dynamic factors in assessing post-LT outcomes, particularly long-term results.

Balakrishnan et al[28] developed a predictive model by incorporating dynamic lab temporal variation features and ML techniques such as RSF and XGBoost models to predict long-term post-LT survival of waiting list patients. The study found that the RSF model combined with temporal variation features had the best predictive performance (C-index 0.71) and an Integrated Brier Score of 0.151, compared to the MELD score (C-index < 0.51). This suggests that the new app

AI-driven tools also facilitate personalised care by analyzing individual patient data. SVM and ANNs can identify patterns in post-transplant recovery, enabling tailored follow-up strategies. These tools can predict the likelihood of infections, organ rejection or other complications, ensuring that patients receive the appropriate care at the right time.

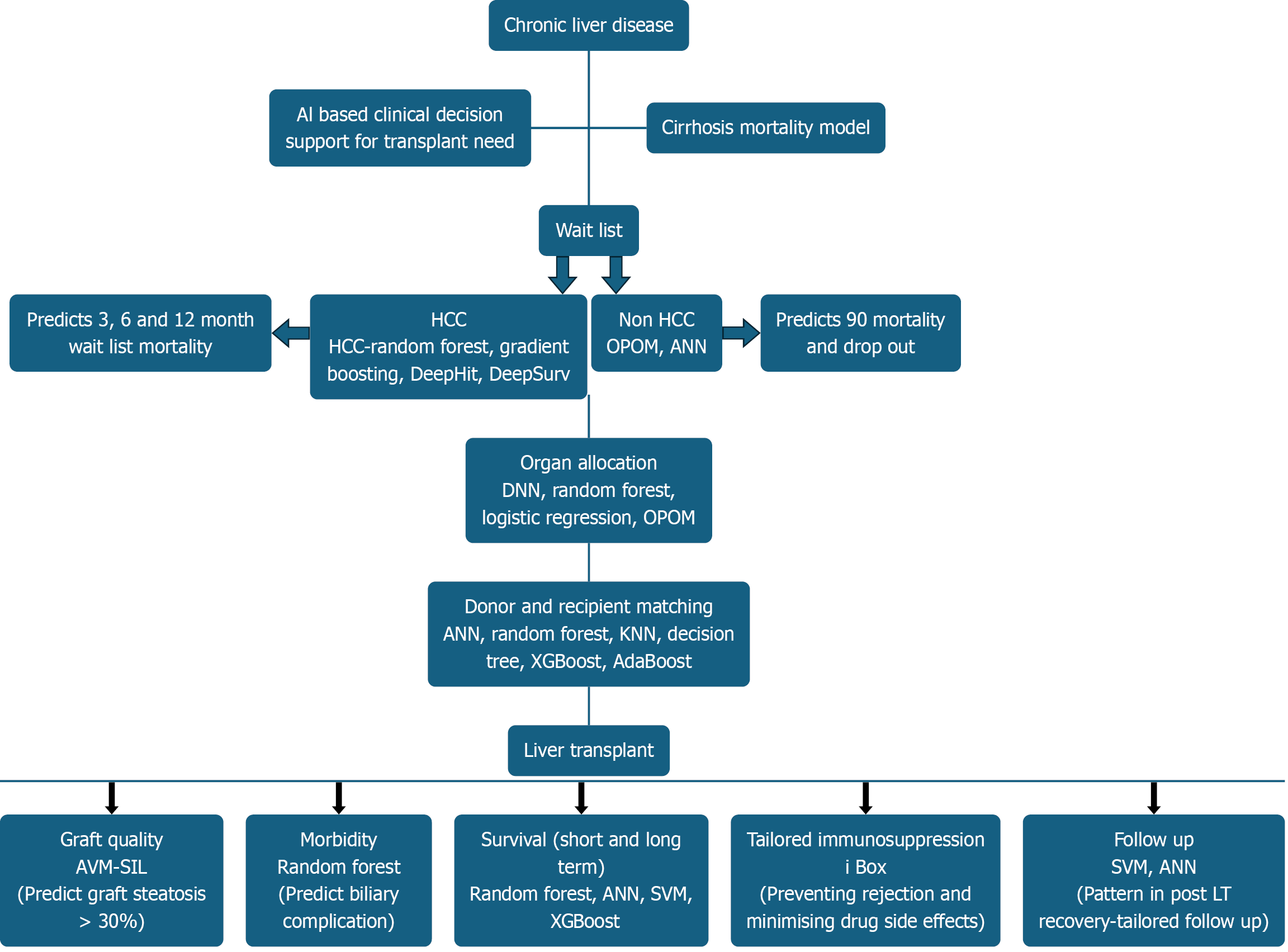

Figure 3 illustrates the application of AI in deceased donor LT.

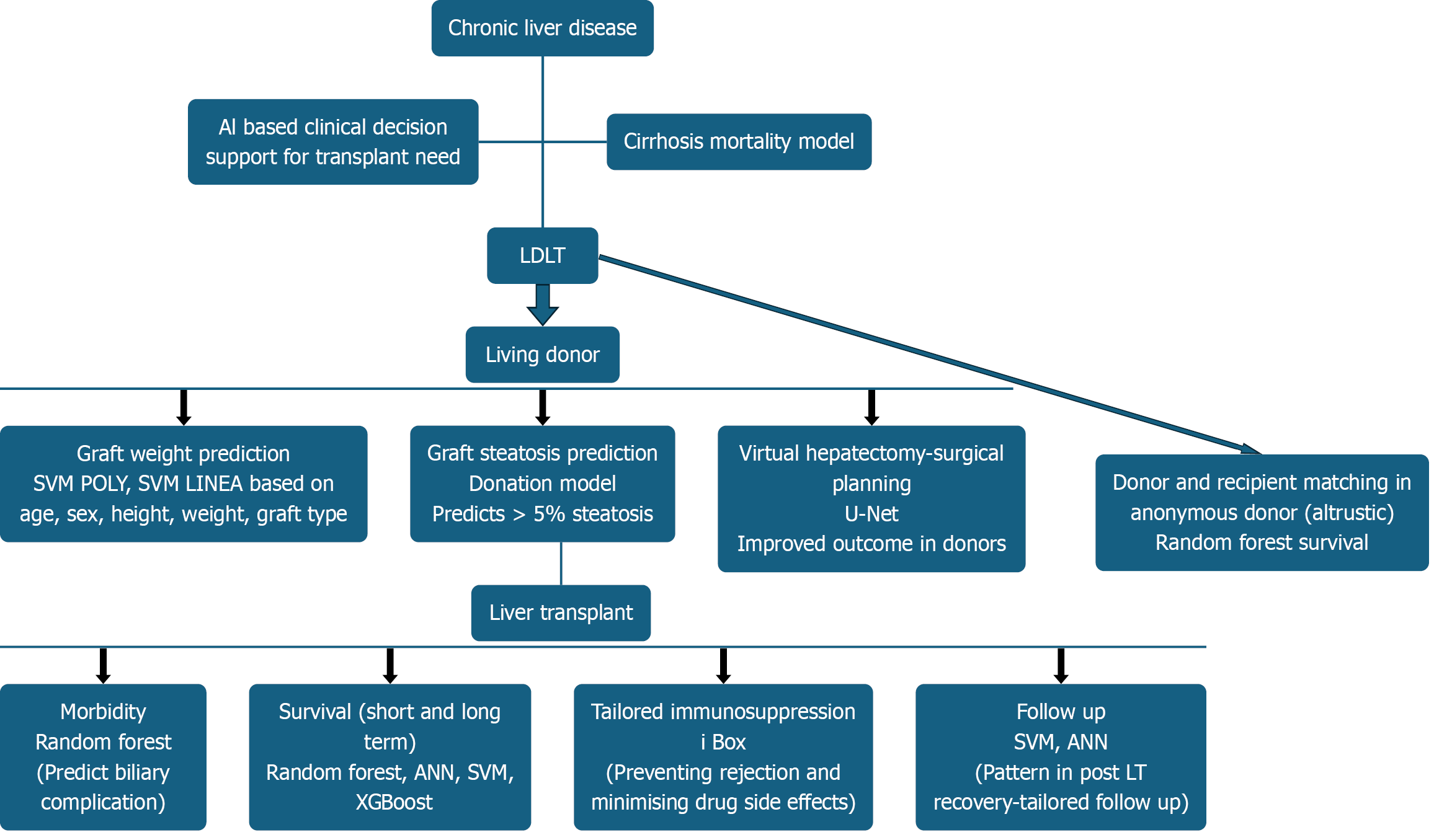

Figure 4 illustrates the application of AI in living donor LT.

Evaluating the performance of AI models in LT involves more than assessing a single metric. Radar charts, also known as spider charts, are powerful graphical tools for visualising and comparing model performance across multiple evaluation criteria, such as accuracy, sensitivity (recall), specificity, precision (positive predictive value), F1-score and AUC-PR. Each axis of a radar chart represents a distinct metric, radiating from a central origin, with the axes arranged evenly in a circle. The plotted points for each metric are connected to form a polygon, and a larger polygon generally indicates better overall performance; ideally, models should demonstrate balanced strengths across all metrics. Radar charts facilitate side-by-side comparisons between different AI models, or of the same model under changing conditions such as temporal shifts or varying feature importance. This visualisation is particularly useful for evaluating models designed for donor-recipient matching, organ allocation or prediction of post-transplant survival and complications. For example, the radar plot from the study by Guo et al[13], which assessed the predictive performance of different AI models for 90-day mortality, illustrates how such comparative analyses can help in selecting and optimising algorithms for clinical practice (Figure 5).
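Most of the radar-chart axes listed above come from the same confusion matrix, and the polygon comparison can be made explicit. The sketch below computes accuracy, sensitivity, specificity, precision and F1 from hypothetical counts, and the area of the radar polygon when n metrics sit on evenly spaced axes. AUC-PR needs the full ranked predictions rather than a single confusion matrix and is not computed here. The polygon area also depends on the order in which the axes are arranged, so it is best treated as a visual aid rather than a formal score; the function names are hypothetical.

```python
import math

def confusion_metrics(tp, fp, tn, fn):
    """Threshold-based metrics from a 2x2 confusion matrix."""
    sensitivity = tp / (tp + fn)  # recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)    # positive predictive value
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }

def radar_polygon_area(values):
    """Area enclosed by a radar chart whose n axes are spaced 2*pi/n apart."""
    n = len(values)
    wedge = math.sin(2 * math.pi / n) / 2  # area factor of the triangle between adjacent axes
    return wedge * sum(values[i] * values[(i + 1) % n] for i in range(n))

# Hypothetical test-set counts for one model.
metrics = confusion_metrics(tp=40, fp=10, tn=45, fn=5)
print({name: round(value, 3) for name, value in metrics.items()})
print(round(radar_polygon_area(list(metrics.values())), 3))
```

Comparing two models this way only makes sense when both are plotted with the same axes in the same order and evaluated on the same data.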

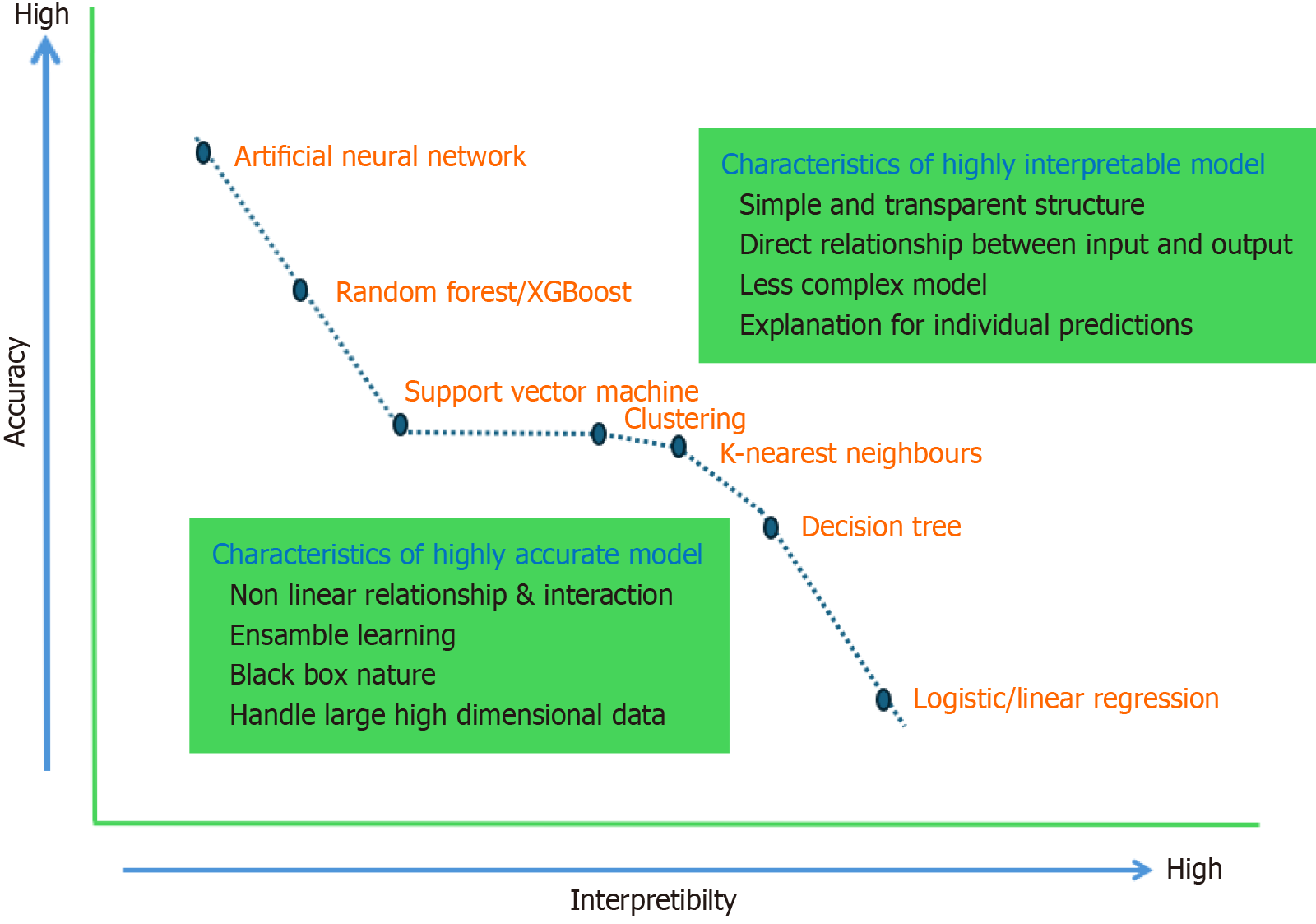

Accuracy refers to how closely model predictions align with the true values, whereas interpretability describes how easily humans can understand the reasoning behind a model's predictions. There is often a trade-off between the two: highly accurate models, such as ANNs, typically offer lower interpretability, and vice versa.

Figure 6 illustrates the relationship between accuracy and interpretability for various AI models used in LT.

The application of AI in the medical field, particularly in LT, faces several challenges and limitations[29-32].

Data availability and quality: The availability of a high-quality large dataset is the prerequisite for the development of AI models. Limited availability of comprehensive and relevant donor and recipient data can restrict the effectiveness of AI predictions.

Data privacy and security: Patient data privacy and security are significant concerns when using AI in healthcare. Ensuring that AI systems comply with stringent data protection regulations to safeguard sensitive medical information is crucial.

Complexity of medical data: Medical data can be highly complex and nuanced, posing a challenge for AI models to interpret accurately. The nonlinear interactions and variability in patient conditions require sophisticated algorithms to process effectively.

Ethical concerns: The use of AI in healthcare raises ethical issues, such as the potential for bias in AI decision-making and the transparency of AI processes. Addressing these ethical concerns is vital for the responsible implementation of AI in LT.

Equitable access: Ensuring equitable access to AI technologies across different regions and demographics is another limitation. Disparities in access to advanced medical technologies can impact patient outcomes.

Integration with clinical practice: Integrating AI systems into existing clinical workflows can be challenging. Clinicians need to trust and understand AI recommendations while maintaining oversight and control over medical decisions.

Spectrum bias and overfitting: Spectrum bias occurs when AI models are trained on a subset of patients that does not represent the entire population or the full spectrum of disease, for example because of geographic variation. Overfitting bias occurs when models learn patterns specific to the training data, such as spurious correlations or random fluctuations, and fail to extract meaningful, generalizable underlying features.

Hallucinations: In AI, "hallucinations" are outputs that are nonsensical or inappropriate in context. They arise from insufficient, noisy or dirty training data and a lack of constraints. Mitigating hallucinations through better algorithms and refined training data is essential for improving AI reliability and accuracy.

Other biases: AI is not a magic bullet that can yield answers to all questions. The logical use of AI is based on whether the correct scientific questions are being asked and whether one has the appropriate data to answer those questions. Systemic bias in data collection can affect the type of patterns recognized or predictions made by AI models. This can affect women and racial minorities due to under-representation in clinical trials and patient registry populations[29,30]. To overcome this, the training data set should be a curated dataset including diverse population demographics, with population-based validation of AI models.

Supervised learning also depends on human labeling of data, which can be expensive to obtain, and poorly labeled data yield poor results.

Transparency: Interpretability is a significant concern for AI models such as NN because of their inherently opaque, "black box" nature. This lack of transparency makes it difficult for humans to understand the rationale behind specific outputs or predictions generated by these models[31]. Consequently, such concerns have limited the adoption of AI algorithms in critical sectors, including medicine. SHapley Additive exPlanations (SHAP) values offer a model-agnostic approach, applicable to any ML model, for explaining model outputs. Derived from Shapley values in game theory[32], SHAP values quantify each feature's contribution to a given prediction. Importantly, SHAP values are additive: the sum of the SHAP values for all features equals the difference between the model's output and a baseline prediction. By providing detailed insight into how individual features influence specific predictions, SHAP enhances model transparency.
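The additivity property can be checked directly on a toy example. The Python sketch below computes exact Shapley values by enumerating every coalition of features for a hypothetical three-feature model with an interaction term. Two assumptions should be noted: features absent from a coalition are replaced by a single baseline value (one of several conventions in use), and this brute-force loop scales exponentially with the number of features, which is why practical SHAP tools rely on approximations or model-specific algorithms.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Hypothetical risk model with two linear terms and one interaction term."""
    return 2.0 * x["x1"] + 3.0 * x["x2"] + x["x1"] * x["x3"]


def shapley_values(f, x, baseline):
    """Exact Shapley values: average each feature's marginal contribution over
    all coalitions of the other features, with the classic Shapley weights.
    Features outside a coalition take their baseline value (an assumption)."""
    features = list(x)
    n = len(features)

    def value(coalition):
        z = {k: (x[k] if k in coalition else baseline[k]) for k in features}
        return f(z)

    phi = {}
    for i in features:
        others = [k for k in features if k != i]
        total = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


x = {"x1": 3.0, "x2": 2.0, "x3": 1.5}         # the case being explained
baseline = {"x1": 1.0, "x2": 1.0, "x3": 1.0}  # hypothetical reference values
phi = shapley_values(model, x, baseline)
print({k: round(v, 6) for k, v in phi.items()})
print("sum of contributions:", round(sum(phi.values()), 6))
print("f(x) - f(baseline):  ", model(x) - model(baseline))
```

For these inputs the contributions are 6.5, 3.0 and 1.0, which sum to 10.5, exactly the gap between the model's output (16.5) and the baseline prediction (6.0). The interaction term is shared between x1 and x3, while x2, which acts only linearly, receives exactly its own linear effect.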

Despite these limitations, ongoing research and advancements in AI technology hold promise for overcoming these challenges and enhancing the outcomes of LT in the future (Table 4).

| Limitations of AI | Potential solutions |
| --- | --- |
| Data and model drift: (a) Data availability and data quality; (b) Complexity of medical databases; (c) Missing data points; (d) Inconsistent reporting of data classes; (e) Imbalance in training data; and (f) Perpetuation of socioeconomic factors that affect outcomes | (a) Curated datasheets; (b) Adaptation and development of new models; (c) Multimodal AI models; (d) Inclusion of diverse populations in data; and (e) Ranking of AI models based on fairness metrics |
| Ethical concerns: (a) Data privacy and security; (b) Equitable access; and (c) Integration with clinical practice | (a) Transparency of AI models; (b) Address biases; (c) Ensure data privacy and security; and (d) Establish accountability with regular audits and monitoring |
| Spectrum bias and overfitting | (a) Diverse and representative training data; (b) Identifying and mitigating bias; (c) Cross-validation; and (d) Ensemble learning |
| Hallucinations due to insufficient or dirty training data | (a) High-quality training data; (b) Fine-tuning of AI models; (c) Inclusion of fact-checking mechanisms; and (d) Retrieval-augmented generation |
| Generalizability (difficulty achieving a similar level of accuracy in different geographies or populations) | (a) Curated training datasets; and (b) Population-based validation of AI models |
| Interpretability (due to the black-box design of AI models) | (a) Shapley analysis; (b) Explainable AI models; (c) Saliency maps; and (d) Surrogate models |
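The table lists data and model drift but not how it might be detected. One commonly used monitoring statistic, named here as an illustration rather than a method from the source, is the population stability index (PSI), which compares the distribution of a model input at development with its distribution during later use. The Python sketch below uses hypothetical binned counts; the small `eps` floor for empty bins is an implementation assumption.

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population stability index between a reference (development-era)
    histogram and a current histogram over the same bins:
    sum over bins of (actual% - expected%) * ln(actual% / expected%).
    `eps` keeps the logarithm finite when a bin is empty."""
    e_total = sum(expected_counts)
    a_total = sum(actual_counts)
    index = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_p = max(e / e_total, eps)
        a_p = max(a / a_total, eps)
        index += (a_p - e_p) * math.log(a_p / e_p)
    return index


# Hypothetical counts of one model input in four bins, at model development
# and in two later monitoring periods.
reference = [250, 250, 250, 250]
stable = [240, 260, 255, 245]
shifted = [100, 200, 300, 400]

print(f"PSI, stable period:  {psi(reference, stable):.3f}")
print(f"PSI, shifted period: {psi(reference, shifted):.3f}")
```

The stable period yields a PSI close to zero (about 0.001), while the shifted period yields about 0.23. Any alert threshold should be set and validated locally, and a shift in inputs does not by itself prove that model accuracy has degraded, so outcome monitoring is still needed.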

Beyond clinical use, AI plays a key role in liver transplant research by analyzing large datasets to discover new trends. Algorithms such as gradient boosting machines and XGBoost can identify factors that predict LT outcomes and guide innovation. AI tools support the development of treatment strategies and devices, accelerating testing through simulations and predictions. Future clinicians will need skills in statistics, bioinformatics, and computer science to enhance care. Integrating AI requires proper validation, regulatory oversight, and training for accurate application. Continued collaboration between technology experts and healthcare providers is vital for maximizing AI's benefits and addressing ethical issues.
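The core idea of the gradient boosting machines mentioned above is to build a model in stages, with each new weak learner fitting the errors of the current ensemble. The Python sketch below is a toy version for squared-error regression with one-split decision stumps on a single hypothetical feature; it is not XGBoost, which adds regularization, second-order gradient information and extensive engineering optimizations, and the learning rate and number of rounds are arbitrary assumptions.

```python
def fit_stump(xs, residuals):
    """Least-squares decision stump on one feature: try every midpoint between
    distinct x values and keep the split whose two side-means best fit the residuals."""
    best = None
    points = sorted(set(xs))
    for lo, hi in zip(points, points[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, left_mean, right_mean)
    _, t, left_mean, right_mean = best

    def stump(x):
        return left_mean if x <= t else right_mean
    return stump


def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.3):
    """Squared-error gradient boosting: start from the mean, then repeatedly fit
    a stump to the residuals (the negative gradient) and add a shrunken copy."""
    base = sum(ys) / len(ys)
    stumps, losses = [], []
    preds = [base] * len(xs)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
        losses.append(sum((y - p) ** 2 for y, p in zip(ys, preds)) / len(ys))

    def predict(x):
        return base + learning_rate * sum(s(x) for s in stumps)
    return predict, losses


# Hypothetical toy data: one numeric predictor and a continuous outcome.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 3.3, 5.2, 4.8]
predict, losses = gradient_boost(xs, ys)
print(f"training MSE after round 1: {losses[0]:.3f}, after round 20: {losses[-1]:.3f}")
print(f"prediction at x=4.5: {predict(4.5):.2f}")
```

With squared-error loss the negative gradient is simply the residual, so each round fits a stump to the residuals; the shrinkage factor (`learning_rate`) makes the model learn in many small steps rather than a few large ones. The training loss therefore falls round by round, but a falling training loss alone says nothing about overfitting, which must still be checked on held-out data.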

AI has the potential to transform LT, offering innovative solutions to enhance pre-transplant assessment, donor-recipient matching, intraoperative management, post-transplant care and research. By leveraging advanced algorithms and ML techniques, AI provides clinicians with powerful tools to improve patient outcomes and streamline the transplantation process. As we move forward, it is imperative to address ethical challenges and ensure the responsible use of AI in healthcare. With continued advancements and collaborative efforts, AI has the potential to unlock new horizons in LT and revolutionize the field for the better.

1. Moor J. The Dartmouth College Artificial Intelligence Conference: The Next Fifty Years. AI Mag. 2006;27:87.

2. IBM. What is Machine Learning (ML)? 2021. [cited 8 July 2025]. Available from: https://www.ibm.com/think/topics/machine-learning.

3. Deo RC. Machine Learning in Medicine. Circulation. 2015;132:1920-1930.

4. MIT Press. Reinforcement Learning. [cited 21 April 2025]. Available from: https://mitpress.mit.edu/9780262039246/reinforcement-learning/.

5. Dietterich TG. Ensemble Learning. In: Arbib MA, editor. The Handbook of Brain Theory and Neural Networks, Second Edition. United States: Impressions Book and Journal Services. 2002: 405-408.

6. IBM. What is Deep Learning? 2024. [cited 8 July 2025]. Available from: https://www.ibm.com/think/topics/deep-learning.

7. Han SH, Kim KW, Kim S, Youn YC. Artificial Neural Network: Understanding the Basic Concepts without Mathematics. Dement Neurocogn Disord. 2018;17:83-89.

8. Atiemo K, Skaro A, Maddur H, Zhao L, Montag S, VanWagner L, Goel S, Kho A, Ho B, Kang R, Holl JL, Abecassis MM, Levitsky J, Ladner DP. Mortality Risk Factors Among Patients With Cirrhosis and a Low Model for End-Stage Liver Disease Sodium Score (≤15): An Analysis of Liver Transplant Allocation Policy Using Aggregated Electronic Health Record Data. Am J Transplant. 2017;17:2410-2419.

9. Kim WR, Mannalithara A, Heimbach JK, Kamath PS, Asrani SK, Biggins SW, Wood NL, Gentry SE, Kwong AJ. MELD 3.0: The Model for End-Stage Liver Disease Updated for the Modern Era. Gastroenterology. 2021;161:1887-1895.e4.

10. Nagai S, Nallabasannagari AR, Moonka D, Reddiboina M, Yeddula S, Kitajima T, Francis I, Abouljoud M. Use of neural network models to predict liver transplantation waitlist mortality. Liver Transpl. 2022;28:1133-1143.

11. Bertsimas D, Kung J, Trichakis N, Wang Y, Hirose R, Vagefi PA. Development and validation of an optimized prediction of mortality for candidates awaiting liver transplantation. Am J Transplant. 2019;19:1109-1118.

12. Kanwal F, Taylor TJ, Kramer JR, Cao Y, Smith D, Gifford AL, El-Serag HB, Naik AD, Asch SM. Development, Validation, and Evaluation of a Simple Machine Learning Model to Predict Cirrhosis Mortality. JAMA Netw Open. 2020;3:e2023780.

13. Guo A, Mazumder NR, Ladner DP, Foraker RE. Predicting mortality among patients with liver cirrhosis in electronic health records with machine learning. PLoS One. 2021;16:e0256428.

14. Kwong A, Hameed B, Syed S, Ho R, Mard H, Arshad S, Ho I, Suleman T, Yao F, Mehta N. Machine learning to predict waitlist dropout among liver transplant candidates with hepatocellular carcinoma. Cancer Med. 2022;11:1535-1541.

15. Norman JS, Mehta N, Kim WR, Liang JW, Biggins SW, Asrani SK, Heimbach J, Charu V, Kwong AJ. Model of Urgency for Liver Transplantation in Hepatocellular Carcinoma: A Practical Model to Prioritize Patients With Hepatocellular Carcinoma on the Liver Transplant Waitlist. Gastroenterology. 2025;168:784-794.e4.

16. Bambha K, Kim NJ, Sturdevant M, Perkins JD, Kling C, Bakthavatsalam R, Healey P, Dick A, Reyes JD, Biggins SW. Maximizing utility of nondirected living liver donor grafts using machine learning. Front Immunol. 2023;14:1194338.

17. Goja S, Yadav SK, Saigal S, Soin AS. Right lobe donor hepatectomy: is it safe? A retrospective study. Transpl Int. 2018;31:600-609.

18. Goja S, Yadav SK, Yadav A, Piplani T, Rastogi A, Bhangui P, Saigal S, Soin AS. Accuracy of preoperative CT liver volumetry in living donor hepatectomy and its clinical implications. Hepatobiliary Surg Nutr. 2018;7:167-174.

19. Giglio MC, Zanfardino M, Franzese M, Zakaria H, Alobthani S, Zidan A, Ayoub II, Shoreem HA, Lee B, Han HS, Penna AD, Nadalin S, Troisi RI, Broering DC. Machine learning improves the accuracy of graft weight prediction in living donor liver transplantation. Liver Transpl. 2023;29:172-183.

20. Oh N, Kim JH, Rhu J, Jeong WK, Choi GS, Kim J, Joh JW. Comprehensive deep learning-based assessment of living liver donor CT angiography: from vascular segmentation to volumetric analysis. Int J Surg. 2024;110:6551-6557.

21. Oh N, Kim JH, Rhu J, Jeong WK, Choi GS, Kim JM, Joh JW. 3D auto-segmentation of biliary structure of living liver donors using magnetic resonance cholangiopancreatography for enhanced preoperative planning. Int J Surg. 2024;110:1975-1982.

22. Cesaretti M, Brustia R, Goumard C, Cauchy F, Poté N, Dondero F, Paugam-Burtz C, Durand F, Paradis V, Diaspro A, Mattos L, Scatton O, Soubrane O, Moccia S. Use of Artificial Intelligence as an Innovative Method for Liver Graft Macrosteatosis Assessment. Liver Transpl. 2020;26:1224-1232.

23. Gambella A, Salvi M, Molinaro L, Patrono D, Cassoni P, Papotti M, Romagnoli R, Molinari F. Improved assessment of donor liver steatosis using Banff consensus recommendations and deep learning algorithms. J Hepatol. 2024;80:495-504.

24. Lim J, Han S, Lee D, Shim JH, Kim KM, Lim YS, Lee HC, Jung DH, Lee SG, Kim KH, Choi J. Identification of hepatic steatosis in living liver donors by machine learning models. Hepatol Commun. 2022;6:1689-1698.

25. Yu YD, Lee KS, Man Kim J, Ryu JH, Lee JG, Lee KW, Kim BW, Kim DS; Korean Organ Transplantation Registry Study Group. Artificial intelligence for predicting survival following deceased donor liver transplantation: Retrospective multi-center study. Int J Surg. 2022;105:106838.

26. Andishgar A, Bazmi S, Lankarani KB, Taghavi SA, Imanieh MH, Sivandzadeh G, Saeian S, Dadashpour N, Shamsaeefar A, Ravankhah M, Deylami HN, Tabrizi R, Imanieh MH. Comparison of time-to-event machine learning models in predicting biliary complication and mortality rate in liver transplant patients. Sci Rep. 2025;15:4768.

27. Yasodhara A, Dong V, Azhie A, Goldenberg A, Bhat M. Identifying Modifiable Predictors of Long-Term Survival in Liver Transplant Recipients With Diabetes Mellitus Using Machine Learning. Liver Transpl. 2021;27:536-547.

28. Balakrishnan K, Olson S, Simon G, Pruinelli L. Machine learning for post-liver transplant survival: Bridging the gap for long-term outcomes through temporal variation features. Comput Methods Programs Biomed. 2024;257:108442.

29. Jüni P, Altman DG, Egger M. Systematic reviews in health care: Assessing the quality of controlled clinical trials. BMJ. 2001;323:42-46.

30. Murthy VH, Krumholz HM, Gross CP. Participation in cancer clinical trials: race-, sex-, and age-based disparities. JAMA. 2004;291:2720-2726.

31. Sussillo D, Barak O. Opening the black box: low-dimensional dynamics in high-dimensional recurrent neural networks. Neural Comput. 2013;25:626-649.

32. Shapley LS. A Value for n-Person Games. 1953. [cited 13 August 2025]. Available from: https://bibbase.org/network/publication/shapley-s-avaluefornpersongames-1953.