PROTEOMICS APPROACHES: PATH TO EARLY DIAGNOSIS

This article highlights a proteomics pipeline that is being applied to develop and validate a panel of candidate biomarkers suitable for early hepatocellular carcinoma (HCC) diagnosis. However, the strategies described are also applicable to other objectives including other diseases, drug development, and therapeutic monitoring. Given the time and money involved in bringing a drug to market, the availability of biomarkers capable of identifying potential drug failures early in development is key, but this depends increasingly on advanced proteomic technologies. The characterization of unique protein patterns associated with specific diseases as a discovery strategy to identify candidate biomarkers is one of the most promising areas of clinical proteomics. Cancer, although often classified as a genetic disease, is functionally a proteomic disease. The proteomic tissue microenvironment directly impacts the tumor-host communication system by affecting enzymatic events and the sharing of growth factors[1], so the tumor microenvironment represents a potential source for biomarkers. An example of an early disease biomarker is the prostate-specific antigen (PSA). Today, serum PSA levels are regularly used in the diagnosis of prostate cancer in men. Unfortunately, a reliance on a single protein biomarker is frequently found to be unreliable. Despite decades of effort, single biomarkers generally lack the specificity and sensitivity required for routine clinical use[2]. Due to the heterogeneity that exists from tumor to tumor, biomarker discovery is moving away from the pursuit for an idealized single cancer-specific biomarker in favor of identifying a panel of markers. Disease complexity almost dictates that accurate screening and diagnosis of HCC will require multiple biomarkers. 
Proteomics affords us the ability to simultaneously interrogate the entire proteome or sub-proteome in order to identify correlations between protein expression (or modifications) and disease progression. In this manner, a panel of biomarkers can be constructed that exhibit the desired sensitivity and specificity necessary for the detection and monitoring of the disease. Recent advances in proteome analysis have focused on the more accessible body fluids including plasma, serum, urine, cerebrospinal fluid, saliva, bronchoalveolar lavage fluid, synovial fluid, nipple aspirate fluid, tear fluid, and amniotic fluid[3]. These body fluids are obtained using minimally invasive procedures, are readily processed, and hence represent clinically tractable cost effective sources of biological material[4].

An effective, clinically useful biomarker should be quantifiable in a readily accessible body fluid such as serum. Since blood comes in contact with almost every tissue, it constitutes a treasure trove of potential biomarkers that provide a systemic picture of the physiological state of the entire body. Every cell in the body contributes to the blood proteome through normal metabolic processes, consequently defined changes characteristic of disease will be reflected in the blood proteome due to perfusion of the affected tissue or organ[5]. Therefore alteration of serum protein profiles can effectively reflect the pathological state of liver injury. It is also ideal to use serum because its sampling is minimally invasive and it is easily prepared with high reproducibility. The disease-related differences in the proteome profile can be attributed to altered protein levels reflecting changes in expression, or dysregulated proteolytic activity affecting protein turnover in diseased cells.

Although discovering a biomarker in serum sounds simple on the surface, the actual process is laborious and time consuming because of the inherent complexity of the human serum proteome, which is composed of thousands of individual proteins. Moreover, the abundance of serum proteins spans a wide dynamic range of approximately 9 orders of magnitude, from pg/mL to mg/mL. These properties represent substantial challenges that often prevent the development and wide-scale utilization of this treasure chest of biological information.

TARGET DISEASE BACKGROUND: HEPATITIS C AND HCC

Liver cancer accounted for 745000 deaths in 2012 alone (World Health Organization. Fact Sheet No 297: 2014). Worldwide, the prevalence of HCC is estimated to affect 180 million people and the incidence continues to rise[6], especially in the United States. It is the fifth most common cancer in men (554000 cases, 7.5% of the total) and the ninth in women (228000 cases, 3.4%)[7]. Chronic infection with hepatitis C virus (HCV) is a major risk factor for the development of HCC, with an estimated 130-150 million people having chronic HCV infection (World Health Organization. Fact Sheet No 164: 2014). The prognosis for HCC is very poor, with an overall 5-year survival rate below 5%, primarily because HCC frequently goes undetected until advanced stage disease, when therapeutic options are limited. Major risk factors for HCC are infection by HCV and hepatitis B virus (HBV), alcoholic liver disease, and associated liver cirrhosis. In the developed world, however, non-alcoholic fatty liver disease (NAFLD) is increasingly being recognized as a risk factor for HCC without evidence of underlying cirrhosis. Currently, HBV and HCV account for 80%-90% of all HCC worldwide[8-10]. Although HBV remains the most common HCC risk factor worldwide to date, the use of an HBV vaccine in newborns is expected to decrease the HCC incidence associated with HBV infection[8]. In contrast, despite the existence of HCV tests and moderately effective anti-viral therapies, HCV remains a major risk factor for HCC. In fact, the incidence of HCC increased from 2.7 per 100000 to 3.2 per 100000 in 5 years, and an estimated 78% of this increase was attributable to latent HCV infections in the general population[11]. In the United States, the incidence of HCC is on the rise, stemming from HCV exposures several decades earlier[12], and retrospective studies suggest that once cirrhosis develops, liver disease progresses to either hepatic decompensation (liver failure) or HCC at a rate of 2% to 7% per year[12-16].

The absence of randomized clinical trials notwithstanding, there is compelling evidence suggesting that surgical resection, liver transplantation, or ablative therapies significantly improve survival in HCC patients[17,18]. However, few patients with advanced HCC meet the criteria for these therapeutic modalities. Hence, these clinical options are generally only available to individuals fortunate enough to have been diagnosed with early stage HCC, typically where the tumor is less than 3 cm in diameter without vascular involvement[19,20]. Since early HCC tumors are asymptomatic[17,21,22], routine surveillance of high-risk patients such as those with cirrhosis is recommended as a strategy to detect tumors at a time when therapeutic intervention still offers markedly improved survival rates[23]. Surgical resection offers a 5-year survival rate of approximately 35%, increasing to 45% for small tumors (2-5 cm), thereby highlighting the value of early detection[24]. Hence a screening modality that provides the requisite sensitivity and specificity for early HCC detection would be of significant clinical benefit[25].

Current diagnostic tools for early HCC detection are unfortunately insensitive and/or nonspecific. To date, the most established serological diagnostic test for HCC measures serum alpha-fetoprotein (AFP) levels; however, the assay has insufficient sensitivity (39% to 65%) and specificity (65% to 94%) to be very reliable[26-28]. For example, HCV patients with necro-inflammation and liver fibrosis may register high serum AFP levels unrelated to HCC. AFP levels are also elevated in hyperthyroidism[29] and pancreatitis[30], limiting its efficacy as a reliable biomarker for HCC. Discriminating early HCC from benign nodules in biopsied, histopathologically tested samples can be difficult even for expert pathologists[31]. The two newer serological biomarkers, DCP and AFP-L3, fared no better than AFP, as their elevation was nonspecifically common in patients without HCC and was influenced by race, gender, age, and severity of liver disease. Therefore it was concluded that screening protocols based on AFP, AFP-L3, and DCP are inadequate for surveillance[32]. More recently, screening modalities based on markers including Dickkopf-1 and Midkine, designed to complement AFP, are being developed to facilitate screening and diagnosing HCC at an earlier stage[33,34]. However, rigorous validation studies are required before their clinical value is established. According to the National Cancer Institute’s Early Detection Research Network guidelines for biomarker development, robust prospective, randomized, controlled, multi-center trials using a large cohort of patients with hepatitis B and hepatitis C infectious liver disease, NAFLD, and alcohol-induced liver disease are required for validation[35].
Currently, imaging with triphasic computed tomography scanning and magnetic resonance imaging with intravenous gadolinium can improve the diagnostic accuracy, but these techniques are time consuming and very expensive, and are not practical for screening the millions of people identified with known risk factors for HCC. Although ultrasound is very sensitive (in the order of 80%) it is extremely operator dependent[36-38] and is not well suited to differentiate between malignant and benign nodules in the cirrhotic liver. Consequently, development of a minimally invasive test using serum-based biomarkers with the necessary sensitivity and specificity will enhance surveillance, widespread screening, and early HCC detection among the millions who are at risk of developing liver cancer.
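The sensitivity and specificity figures quoted for AFP and ultrasound follow directly from the counts of correct and incorrect calls in a tested cohort. The short sketch below illustrates that arithmetic only; the class name, function names, and cohort counts are hypothetical and are not taken from any cited study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Outcome counts for a diagnostic test against a reference diagnosis."""
    true_pos: int   # HCC patients the test flags
    false_neg: int  # HCC patients the test misses
    true_neg: int   # non-HCC patients the test clears
    false_pos: int  # non-HCC patients the test flags


def sensitivity(c: ConfusionCounts) -> float:
    """Fraction of diseased patients correctly detected."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ConfusionCounts) -> float:
    """Fraction of disease-free patients correctly cleared."""
    return c.true_neg / (c.true_neg + c.false_pos)


def positive_predictive_value(c: ConfusionCounts) -> float:
    """Fraction of positive calls that are true disease."""
    return c.true_pos / (c.true_pos + c.false_pos)


# Hypothetical surveillance cohort: 100 HCC and 200 cirrhotic non-HCC patients.
cohort = ConfusionCounts(true_pos=60, false_neg=40, true_neg=170, false_pos=30)
print(sensitivity(cohort), specificity(cohort), positive_predictive_value(cohort))
```

In a surveillance population where HCC is rare, even a modest loss of specificity produces many false positives, which is one reason a multi-marker panel is attractive.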

SAMPLE PREPARATION: FRACTIONATION AND ENRICHMENT

High abundance serum proteins comprise fewer than two dozen proteins, including albumin and the immunoglobulins, which account for approximately 99% of the total serum protein[39]. The presence of these highly abundant proteins masks the ready detection of medium and low abundance proteins that comprise the repertoire of potential biomarkers. This renders identification of the biomarkers extremely challenging. Serum contains 60-80 mg/mL protein, of which approximately 65% is serum albumin and approximately 15% is γ-globulins[40-42]. Finding a disease-related protein in such a complex mixture is like searching for a needle in a haystack. It therefore becomes important to compress the large dynamic range of serum proteins by depleting the few over-represented (i.e., highly abundant) proteins, allowing detection of lower abundance proteins.
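The proportions above can be turned into approximate mass concentrations with simple arithmetic. The sketch below uses only the figures stated in the text (60-80 mg/mL total protein, roughly 65% albumin, roughly 15% γ-globulins, and a pg/mL to mg/mL abundance span); the function and variable names are illustrative.

```python
import math


def dynamic_range_orders(low_g_per_ml: float, high_g_per_ml: float) -> float:
    """Orders of magnitude between the lowest and highest concentrations."""
    return math.log10(high_g_per_ml / low_g_per_ml)


def fraction_range(total_range, fraction):
    """Scale a (low, high) total-protein range by a mass fraction."""
    low, high = total_range
    return (low * fraction, high * fraction)


total_mg_per_ml = (60.0, 80.0)               # total serum protein
albumin = fraction_range(total_mg_per_ml, 0.65)
gamma_globulins = fraction_range(total_mg_per_ml, 0.15)
remainder = fraction_range(total_mg_per_ml, 1.0 - 0.65 - 0.15)

print("albumin (mg/mL):", albumin)
print("gamma-globulins (mg/mL):", gamma_globulins)
print("all other proteins (mg/mL):", remainder)
# pg/mL = 1e-12 g/mL, mg/mL = 1e-3 g/mL
print("orders of magnitude:", dynamic_range_orders(1e-12, 1e-3))
```

Under these assumptions, every other serum protein shares the remaining fifth of the protein mass, which is why depletion or dynamic-range compression precedes discovery work.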

Developments in biomarker-based proteomics technologies are dramatically impacted by the recent realization that a high percentage of the diagnostically useful lower molecular weight serum protein entities are bound to higher molecular weight carrier proteins such as albumin[5,43-45]. In fact, these carrier proteins likely serve to amplify and protect lower molecular weight proteins from clearance by the renal system[46,47]. Conventional protocols for biomarker discovery discard the abundant high molecular weight carrier species such as albumin without realizing the valuable cargo they harbor. Albumin is a carrier/transport protein that sequesters numerous other serum components. Consequently, stripping away albumin from a serum sample risks removing potentially important species. This is like “throwing the baby out with the bath water” by failing to capture the information associated with this valuable resource. Researchers believe that the albumin-bound proteomic signature in serum can be used for early detection and staging of HCC[48]. Therefore, in choosing a method for removal of over represented proteins, the chosen strategy should protect against the nonspecific loss of unrelated proteins. A novel methodology is the aptamer-based Proteominer technology (Bio-Rad) designed to preserve the complexity of the serum proteome using a strategy that does not merely deplete carrier proteins. It constitutes a novel sample preparation protocol that narrows the dynamic range of the serum protein profile without losing the complexity of the entire proteome. This is accomplished through the use of a highly diverse hexabead-based library of combinatorial peptide ligands[49,50]. When complex biological samples such as serum are applied to the beads, the high-abundance proteins such as albumin readily saturate the finite high affinity sites on the beads. 
However, the retention of carrier proteins such as albumin guarantees that albumin-bound entities are retained in the enriched sample. Medium- and low-abundance proteins, on the other hand, are concentrated by binding to the specific aptamers. As a consequence, the dynamic range of the protein profile is reduced while the full complexity of the protein sample is preserved. Before subsequent high-resolution identification strategies are performed, the samples should be desalted (using standard cleanup kits or desalting columns) to remove electrolytes and other impurities present in the sample[50].

PROTEIN EXPRESSION PROFILING: 2D-DIFFERENCE IN-GEL ELECTROPHORESIS DISCOVERY

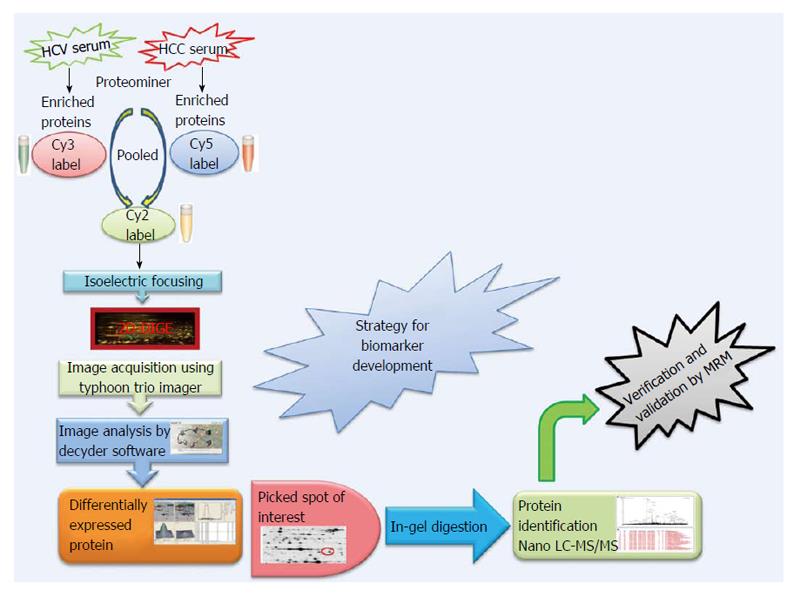

Expression profiling is central to proteomics and depends on methods that can accurately and reproducibly differentiate between the expression profiles in two or more biological samples. Despite recent technological advances in methods for separating and analyzing complex protein mixtures, two-dimensional gel electrophoresis (2D-PAGE) remains a widely used approach in proteomics research[51-55]. 2D-PAGE allows for the separation of thousands of proteins from a tissue or biological fluid on the basis of both size and charge. However, inter-gel variability and the extensive time and labor needed to resolve differences in protein expression have hampered the efficacy of 2D-PAGE. A major technical improvement in 2D-PAGE was the development of the multiplexed fluorescent two-dimensional difference in-gel electrophoresis (2D-DIGE) method[56], which utilizes direct labeling of the lysine groups on proteins with cyanine (Cy) dyes before isoelectric focusing fractionation in the first dimension. 2D-DIGE is a robust technique that has proved fruitful in the identification of proteins exhibiting differential expression between samples. It is considered to be one of the most significant advances in quantitative proteomics technology and is crucial to the success of many proteomics initiatives[57,58]. A critical benefit of 2D-DIGE technology is the ability to label 2-3 distinct samples using specific dyes prior to isoelectric focusing of the samples on a single (non-linear) IPG strip[59]. This feature limits spot pattern variability in experimental replicates and therefore the number of gels required to establish confidence in spot pattern differences. Consequently, 2D-DIGE successfully identified statistically significant changes in the serum proteome using only 6 sets of randomly paired patient samples (e.g., 6 HCV and 6 HCC) in 3-5 wk.
By labeling the 6 random pairs of HCV and HCC samples with three distinct CyDyes (Cy2, Cy3 and Cy5), we generated 18 HCV and 18 HCC samples for 2D-DIGE and identified 43 significantly differentially expressed proteins. Specifically, 2D-DIGE was used to examine changes in protein abundance between HCV and HCC patient samples by performing Cy3 and Cy5 dye swap experiments under conditions involving a Cy2-labeled internal standard (a pooled preparation of all the patient samples) to normalize between technical replicates[58]. Moreover, the substantial sensitivity and broad dynamic range available using these dyes enhance the quantitative accuracy beyond that attainable using silver staining[60]. These advantages render 2D-DIGE more reliable than 2D-PAGE as a qualitative and quantitative method to interrogate complex proteomes[43], and it has thus found utility in proteomics studies examining several human cancers (Figure 1).

Figure 1 Proteomics strategy for biomarker discovery.

HCC: Hepatocellular carcinoma; HCV: Hepatitis C virus; Cy: Cyanine; 2D-DIGE: 2D-difference in-gel electrophoresis; MS: Mass spectrometry; LC: Liquid chromatography.

The CyDye DIGE Fluor minimal dye chemistry relies on an N-hydroxysuccinimidyl ester reactive group that readily forms a covalent amide linkage with the epsilon amino group of lysine in proteins. The positive charge of the CyDye substitutes for the positive charge of the adducted lysine residue at neutral and acidic pH, thus keeping the pI of the protein essentially unchanged. The labeling reaction is designed so that the dye labels approximately 1%-2% of lysine residues. Therefore, each labeled protein typically harbors on average only one dye-labeled residue and is visualized as a single protein spot. The labeling reaction is rapid, taking only 30 min, and is quenched by the addition of 1 μL of 10 mmol/L lysine for 10 min. It should be noted that CyDye labeling contributes approximately 500 Da to the mass of the labeled proteins; however, this modest increase in molecular weight does not corrupt the 2D gel pattern since all visualized proteins are labeled. Neither the increased molecular weight conferred by a single CyDye labeling event per protein nor the hydrophobicity of the fluorophore substantially alters gel migration of the labeled proteins. The labeling protocol dictates that the ratio of label to protein be optimized so that less abundant proteins are tagged while the highly abundant proteins are kept in the linear dynamic range for quantitative imaging. 2D-DIGE benefits from the use of a pooled internal standard (i.e., a pooled sample comprising an equal aliquot from each sample in the experiment) labeled with the Cy2 dye. The internal standard is essential for assessing biological and experimental variations and increasing the confidence of the statistical analysis. Sequential scanning of Cy2-, Cy3- and Cy5-labeled proteins (gels) is achieved using a Typhoon Trio Imager (GE Healthcare) with the following laser/emission filters: 488/520, 532/580 and 633/670 nm, respectively[61].
Image analysis of the fluorescently labeled proteins requires sequential evaluation of the spot patterns using proprietary (DeCyder) software for differential in-gel analysis. To reveal changes in protein abundance, three analyses are performed: intra-gel statistical analysis, Cy5/Cy3:Cy2 normalization, and biological variation analysis, which performs inter-gel statistical analysis to provide relative abundance in various groups. Cy5 or Cy3 samples are normalized against the Cy2 dye-labeled sample (i.e., Cy5:Cy2 and Cy3:Cy2). In the discovery of HCC biomarkers, log abundance ratios were compared between pre-cancerous and cancerous samples from all gels using the chosen statistical analysis (e.g., t-test and ANOVA) in software packages such as DeCyder (GE Healthcare)[62-64]. In addition to being sensitive and quantitative, 2D-DIGE is also compatible with downstream mass spectrometry (MS) protein characterization protocols since most lysine residues in a given protein remain untagged and are accessible for tryptic digestion. Spot detection, the matching and picking of differentially expressed spots of interest among various samples, is done by identifying the spots that reproducibly show expression differences between the cancerous and pre-cancerous samples across biological replicates. Differentially expressed protein spots that satisfy the selection criteria of statistical significance (P < 0.05) and a > 1.5-fold change in abundance are selected. These spots are then picked from preparative gels, in which substantially greater amounts of the same protein samples are fractionated by 2D-PAGE, for identification by MALDI-TOF and/or nano-liquid chromatography (LC)-MS/MS. Combining 2D-DIGE, which confidently detects changes in protein abundance between two samples, with contemporary MS techniques capable of identifying proteins in complex mixtures greatly enhances the biomarker discovery pipeline.
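The selection logic described above (normalization to the Cy2 internal standard, comparison of log abundance ratios between groups, and the P < 0.05 and > 1.5-fold thresholds) can be sketched in a few lines. This is an illustration only and not the DeCyder algorithm: the function names and spot values are hypothetical, and an exact permutation test is swapped in for the t-test so that the sketch needs nothing beyond the Python standard library.

```python
import math
from itertools import combinations
from statistics import mean


def standardized_log_abundance(sample_volume: float, standard_volume: float) -> float:
    """log2 of a Cy3 or Cy5 spot volume relative to the co-run Cy2 standard."""
    return math.log2(sample_volume / standard_volume)


def fold_change(group_a, group_b) -> float:
    """Signed fold change of group_b versus group_a from mean log2 ratios."""
    diff = mean(group_b) - mean(group_a)
    ratio = 2 ** abs(diff)
    return ratio if diff >= 0 else -ratio


def permutation_p_value(group_a, group_b) -> float:
    """Exact two-sided permutation test on the difference in group means."""
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(mean(group_b) - mean(group_a))
    total_sum = sum(pooled)
    hits = 0
    total = 0
    for idx in combinations(range(len(pooled)), n_a):
        a_sum = sum(pooled[i] for i in idx)
        diff = abs((total_sum - a_sum) / n_b - a_sum / n_a)
        if diff >= observed - 1e-12:  # tolerance for floating-point ties
            hits += 1
        total += 1
    return hits / total


def is_candidate(group_a, group_b, alpha=0.05, min_fold=1.5) -> bool:
    """Apply both selection criteria: significance and fold-change threshold."""
    return (permutation_p_value(group_a, group_b) < alpha
            and abs(fold_change(group_a, group_b)) > min_fold)


# Hypothetical standardized log2 abundances for one spot in 6 HCV and 6 HCC sera.
hcv = [0.0, 0.1, -0.1, 0.05, -0.05, 0.0]
hcc = [1.0, 1.1, 0.9, 1.05, 0.95, 1.0]
print(fold_change(hcv, hcc), permutation_p_value(hcv, hcc), is_candidate(hcv, hcc))
```

With 6 samples per group there are only 924 ways to split the pooled values, so the exact test is cheap; a real analysis would also need to correct for testing thousands of spots at once.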

The many advantages of this approach notwithstanding, there remain significant caveats. For example, proteins with a high percentage of lysine residues are more susceptible to multiple labeling events than proteins containing few or no lysines. Therefore, it is conceivable that a highly abundant protein with few lysine residues may be readily detectable by conventional 2D-PAGE but be poorly labeled by the CyDye fluorophores in 2D-DIGE and hence be underestimated. Also, while LC-MS/MS typically requires only 1-5 μg of protein, preparative 2D-gels require substantially more protein (approximately 500 μg) for reliable spot detection, which may become a limiting factor in discovery proteomics. Moreover, despite recent advances in high-resolution mass spectrometers that facilitate quantitative analyses of thousands of proteins, the technology is still not capable of comprehensively characterizing the entire proteome in complex mixtures such as serum. Thorough assessment of these complex samples requires prior fractionation to reduce sample complexity using strategies including multidimensional separation (gel-based and chromatography-based technology). Some of the most common methods used for these complex mixtures are 2D-DIGE, isotope-coded affinity tags, isotope-coded protein labeling, tandem mass tags, isobaric tags for relative and absolute quantitation, stable isotope labeling, and label-free quantification. It is noteworthy that the lower abundance proteins detected by 2D-DIGE are refractory to identification by mass spectrometry due to the detection limits of currently available mass spectrometers.

Proteome analysis is often achieved by the sequential use of 2D-PAGE and MS. However, traditional 2D-PAGE techniques are hamstrung by constraints associated with detection limits of low-abundance proteins in complex samples. These limitations have been addressed by the development of sophisticated front-end separation technologies. LC in combination with tandem LC-MS/MS affords researchers the ability to directly analyze complex mixtures in much greater detail without incurring the detection issues associated with 2D-PAGE[65]. The evolution of proteomics technologies has catalyzed large-scale analyzes of differentially expressed proteins under various experimental conditions, which has greatly enriched our understanding of the global physiological processes that occur at the protein level during cellular signaling events[66]. Bottom-up or shotgun proteomics is a high-throughput strategy capable of characterizing very large numbers of proteins simultaneously. Using LC, hundreds of proteins or peptides can be efficiently separated chromatographically into much simpler protein mixtures if not individual species, prior to identification by MS. By pairing distinct prefractionation technologies with complementary MS capabilities, the researcher can customize the analytical resources to meet their specific experimental needs. For example, Orbitrap mass analyzers are frequently coupled to LC to take full advantage of the MS capabilities. Other common configurations include the quadrupole-TOF and linear ion trap quadrupole-Orbitrap to obtain mass determinations with high accuracy and resolution[67,68].

18O-16O LABELING: VERIFICATION

To increase the odds of success, an independent, alternative strategy for biomarker development can be used. For this purpose, enriched or fractionated sera are subjected to differential 18O/16O stable isotope labeling, a quantitative MS-based proteomics technique that distinguishes the two forms of each peptide on the basis of a 4 Da mass difference. The ratio of 16O-labeled (pre-cancerous) and 18O-labeled (cancerous) tryptic digestion products can be analyzed by nano LC-MS/MS to determine quantitative changes in peptide abundance between the samples. 18O/16O labeling can also be used in preliminary selected reaction monitoring experiments to verify the proteins discovered by 2D-DIGE, and to identify optimal precursor and transition product ions for relative quantitation before doing more expensive absolute quantitation using AQUA peptides. Since a spot in a 2D-DIGE gel can contain more than one protein, this approach also increases the confidence in identification of a protein with an altered expression profile.
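As a rough illustration of the 4 Da spacing, the sketch below computes where the 18O-labeled partner of a peptide ion should appear and the heavy:light ratio used for relative quantitation. It assumes complete incorporation of two 18O atoms at the peptide C-terminus; the function names and the example peak areas are hypothetical, while the isotope and proton masses are standard monoisotopic values.

```python
PROTON_MASS = 1.007276   # Da
O16_MASS = 15.994915     # Da, monoisotopic 16O
O18_MASS = 17.999161     # Da, monoisotopic 18O

# Two C-terminal oxygens exchanged: roughly a 4 Da increase in peptide mass.
HEAVY_SHIFT = 2 * (O18_MASS - O16_MASS)


def mz(neutral_mass: float, charge: int) -> float:
    """m/z of a peptide ion carrying `charge` protons."""
    return (neutral_mass + charge * PROTON_MASS) / charge


def heavy_mz(light_mz: float, charge: int) -> float:
    """Expected m/z of the doubly 18O-labeled partner of a light ion."""
    return light_mz + HEAVY_SHIFT / charge


def heavy_to_light_ratio(light_area: float, heavy_area: float) -> float:
    """Relative abundance, here cancerous (18O) over pre-cancerous (16O)."""
    return heavy_area / light_area


light = mz(1500.0, 2)  # hypothetical peptide of neutral mass 1500 Da, 2+ ion
print(light, heavy_mz(light, 2), heavy_to_light_ratio(2.0e5, 5.0e5))
```

Note that the spacing observed in the spectrum is the mass difference divided by the charge, so a doubly charged pair sits about 2 m/z units apart. Incomplete incorporation (a single 18O, about +2 Da) is ignored here.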

Investigators planning to use the 18O/16O labeling technique need to be aware that incorporation of 18O atoms into peptides can vary when using trypsin as a catalyst[69]. This issue can be ameliorated under conditions of extensive trypsinization. Also, until recently suitable computational tools were lacking, but this deficit has been addressed with the development of computational algorithms designed to evaluate 18O/16O labeling spectra[70,71]. It is noteworthy that 18O labeling is far less expensive than other stable isotope labeling techniques, rendering it especially attractive for use in biomarker discovery, where large numbers of samples are generally analyzed concurrently[71].

In general, the development and validation of biomarkers for clinical use includes four phases: discovery, quantification, verification, and clinical validation[72,73]. Discovery-phase platforms have to date generated large numbers of candidate cancer biomarkers. However, a comparable system for subsequent quantitative assessment and verification of the myriad candidates is lacking, and this constitutes the rate-limiting step in the biomarker pipeline[74]. Clearly, discovery “-omics” experiments only serve to identify candidate biomarkers, which must survive rigorous verification and validation before their clinical utility becomes evident[72,75]. At present, established immunoassay platforms, in particular ELISA, are the paragon for quantitative analysis of protein analytes in sera. However, a reliance on immunological methods to verify candidate biomarkers is impractical given the time, effort and cost required to generate the necessary reagents while the value of the candidates remains uncertain. These constraints restrict the development of immunoassays to the short list of already highly credentialed candidates. Instead, the large majority of new, unproven candidate biomarkers are best examined using intermediate verification technologies that offer shorter assay development times, lower assay costs, multiplexing of tens to hundreds of candidates, small sample requirements, and a high-throughput capability for analyzing hundreds to thousands of serum or plasma samples with high precision[76,77]. The objective is to winnow down the initial list of candidate biomarkers to a more manageable number worth advancing to traditional candidate validation.

SELECTED REACTION MONITORING: VALIDATION

Targeted or quantitative proteomics has emerged as a new technical approach in proteomics and is an essential step in biomarker development. Among the several types of quantification methods, selected reaction monitoring (SRM) and multiple reaction monitoring (MRM), which enable MS-based absolute quantification (also termed AQUA), are emerging as potential rivals to immunoassays[78]. Where once biomarker discovery workflows were bottlenecked at the verification step, steady improvements over the years have resolved the issues such that MS-based techniques now represent viable strategies for biomarker verification[79,80]. SRM is a powerful tandem mass spectrometry method that can be used to monitor target peptides within a complex protein mixture. Its specificity surpasses that of traditional immunoassays, which may not identify or distinguish between post-translationally modified peptides. The sensitivity and specificity with which an SRM assay on a triple quadrupole mass spectrometer can identify and quantify a unique peptide in a complex mixture make it well suited to biomarker discovery and validation. This is especially pertinent where affinity-based quantitative assays for proteins are unavailable and generating one is hampered by homology between isotypes. Even isotypic variants of proteins with high homology can be quantified using SRM[81]. Stable-isotope-dilution MRM mass spectrometry (SID-MRM-MS) is a relatively new technique that enables quantification of a protein using peptide internal standards, labeled with isotopically heavy amino acids, that correspond to the protein of interest. By including three to five diagnostic peptides representing a particular protein, the concentration of a protein of interest can be accurately determined based on the known amount of an internal standard added to the sample. The technique is amenable to multiplexing for the simultaneous quantification of 50 or more proteins, and requires only very small samples.
SID-MRM-MS is a high-throughput method and has emerged as a valuable technique for validating multiple potential biomarkers[82]. MRM-MS of peptides using stable isotope-labeled internal standards is increasingly being adopted in the development of quantitative assays for proteins in complex biological matrices. These resultant assays are precise (providing primary amino acid sequence information for the analyte), quantitative, are compatible with tandem MS/MS data, and can be developed very rapidly in comparison to immunoassays. Since hundreds of MRM assays can be incorporated into a single method, the multiplexed nature of the technique allows for parallel monitoring of many targets. This is highly attractive from both a scientific and economic perspective. Furthermore, SRM assay design can target predetermined regions within a protein sequence, which would complement methods designed to enrich targets prior to SRM analysis[83]. Even subtle changes in a protein can be readily measured using the SRM approach. Once a SRM or MRM assay is developed, its utility extends to numerous experimental situations where the target protein(s) are to be measured. This has motivated the development of public repositories containing configured MRM assays[84,85].
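The stable-isotope-dilution calculation behind these assays is a simple ratio: the endogenous (light) peptide signal is compared with the signal from a known amount of spiked heavy standard. The sketch below shows that arithmetic for several peptides from one protein; the peptide labels, peak areas, and spike amount are hypothetical, and equal response of the light and heavy forms is assumed.

```python
from statistics import mean, stdev


def endogenous_amount(light_area: float, heavy_area: float,
                      spiked_heavy_fmol: float) -> float:
    """Light peptide amount inferred from the light:heavy peak area ratio."""
    return (light_area / heavy_area) * spiked_heavy_fmol


def protein_estimate(peak_areas: dict, spiked_heavy_fmol: float):
    """Per-peptide amounts, their mean, and the coefficient of variation.

    `peak_areas` maps a peptide label to (light_area, heavy_area).
    """
    amounts = {
        peptide: endogenous_amount(light, heavy, spiked_heavy_fmol)
        for peptide, (light, heavy) in peak_areas.items()
    }
    values = list(amounts.values())
    avg = mean(values)
    cv = stdev(values) / avg if len(values) > 1 else 0.0
    return amounts, avg, cv


# Hypothetical summed transition areas for three signature peptides,
# each spiked with 50 fmol of its heavy-labeled standard.
areas = {
    "peptide_1": (2.0e5, 1.0e5),
    "peptide_2": (1.8e5, 1.0e5),
    "peptide_3": (2.2e5, 1.0e5),
}
amounts, avg, cv = protein_estimate(areas, spiked_heavy_fmol=50.0)
print(amounts, avg, cv)
```

Agreement between peptides from the same protein (a low coefficient of variation) is a useful sanity check; a discordant peptide may point to incomplete digestion or an unexpected modification.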

Previously acquired tandem mass spectrometry data provide reliable information as to which fragment ions will yield the greatest signal in an SRM assay using a triple quadrupole mass spectrometer. The concept of monitoring specific peptides from proteins of interest is well established. Because the methods exhibit high specificity and sensitivity within a complex mixture, they can be performed in a fraction of the instrument time required by discovery-based methods. In addition, the capability to multiplex measurements of numerous analytes in parallel highlights the value of this technology in hypothesis-driven proteomics[77,82,86]. Multiplexing also helps minimize the amount of sample needed, an important consideration when working with hard-to-acquire samples.

ASSAY DEVELOPMENT: BIOMARKER VERIFICATION AND VALIDATION

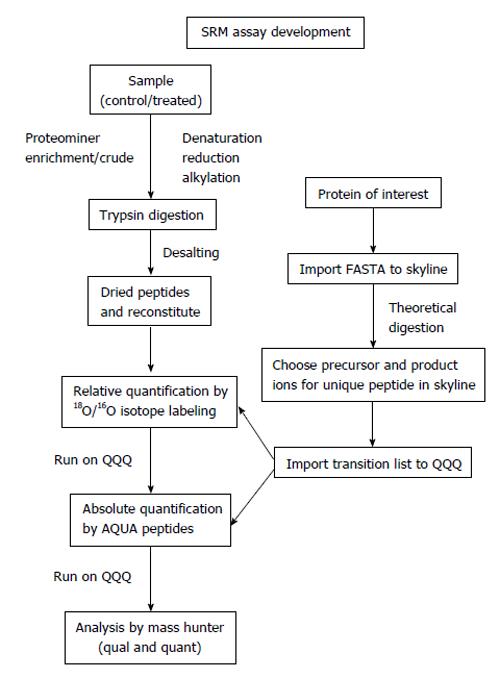

There are a number of criteria to consider when selecting the optimal transitions for MRM analysis[87,88]. Once the proteins of interest found in the discovery phase are selected, the following steps and considerations should be incorporated in the design of an absolute quantification assay using AQUA-peptides (Figure 2).

Figure 2 Targeted proteomics work flow for relative and absolute quantification.

SRM: Selected reaction monitoring.

Peptide design

First, the peptide sequence for the synthesis of the internal standard is selected. If the absolute amount of a protein is to be quantified, theoretically, any peptide of the protein produced by a proteolytic digestion (e.g., trypsin, chymotrypsin, Glu-C, Lys-C) can be selected. Ideally, two to five peptides are chosen per target protein[82,86,89]. Peptides that deliver strong MS signatures and uniquely identify the target protein, or a specific isoform thereof, have to be identified empirically. Such peptides are termed proteotypic peptides[90]; selecting them helps ensure accurate quantification and exclude artifacts that can originate from unknown modifications of the endogenous protein. In any case, it is essential that the chosen peptide or peptides be unique to the protein of interest and have a high response in the MS system to afford the greatest sensitivity (“signature peptide”)[91]. Ideally, peptides that have been previously sequenced in a shotgun experiment are chosen to ensure optimal chromatographic behavior, ionization, fragmentation and SRM transitions. Information on sequenced peptides can be found in published proteomics data sets and repositories such as PeptideAtlas[92] (http://www.peptideatlas.org/), Human Proteinpedia[93] (http://www.humanproteinpedia.org/) or PRIDE[94] (http://www.ebi.ac.uk/pride/). These databases can be queried by protein ID, accession number, or peptide sequence. If a peptide is in these databases, the search results return detailed information characterizing the peptide, previously recorded mass spectra of modified and unmodified versions, and information on the samples in which it was identified. For developing targeted SRM methods on a triple quadrupole mass spectrometer, a key resource is the open-source academic software Skyline (http://proteome.gs.washington.edu/software/skyline).
The Skyline user interface facilitates the development of mass spectrometer methods and the analysis of data from targeted proteomics experiments performed using SRM. It enables access to MS/MS spectral libraries from a wide variety of sources, to choose SRM filters and verify results based on previously observed ion trap data[95]. The spectrum library can be used to classify fragment ions ranked by intensity, and enable the user to define how many product ions are required to provide a specific and selective measurement given the target sample. The transition lists can be easily exported to triple quadrupole instruments. The Skyline files are in a fast and compact format and are easily shared, even for experiments requiring many sample injections. For a peptide that has not yet been sequenced, an unlabeled peptide can be generated by peptide synthesis and inspected for the ability to function as a reliable AQUA peptide or bioinformatics prediction programs like Skyline can be used to choose potential peptides. In these cases, it is important to take into consideration that certain amino acid sequences or modifications can change the cleavage pattern of the selected protease. For instance, the protease trypsin normally cleaves at the carboxylic side of arginine and lysine. However, if proline is at the amino side of these residues, the bond is resistant to trypsin cleavage. Similarly, if the amino acid on the amino-terminal side is phosphorylated, trypsin cleavage may be inhibited. If the protein contains a series of arginines and lysines, trypsin might cleave after the first arginine or lysine, or after any one following those, creating “missed cleavages” or “ragged ends”. So these theoretical software programs provide a starting point. 
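The trypsin cleavage behavior discussed above can be illustrated with a small in-silico digest. The following is a minimal sketch, not Skyline's algorithm: it assumes the commonly used rule that trypsin cleaves after lysine or arginine unless the next residue is proline, ignores phosphorylation effects, and uses a made-up toy sequence. The function name, the `min_length` filter and its default are illustrative assumptions.

```python
import re

def tryptic_peptides(sequence, missed_cleavages=0, min_length=6):
    """Toy in-silico trypsin digest (illustrative assumption, not Skyline's code).

    Cleaves C-terminal to K or R unless the following residue is P, and
    optionally joins adjacent fragments to model missed cleavages.
    """
    # Positions immediately after each cleavable K/R (not followed by P).
    sites = [m.end() for m in re.finditer(r"[KR](?!P)", sequence)]
    # Fragment boundaries: both termini plus every internal cleavage site.
    bounds = [0] + [s for s in sites if s < len(sequence)] + [len(sequence)]
    peptides = []
    for i in range(len(bounds) - 1):
        # j == i + 1 is a fully cleaved fragment; larger j spans missed sites.
        for j in range(i + 1, min(i + 2 + missed_cleavages, len(bounds))):
            peptide = sequence[bounds[i]:bounds[j]]
            if len(peptide) >= min_length:
                peptides.append(peptide)
    return peptides

# Hypothetical toy sequence: K5 is followed by P (not cleaved), and "KK"
# gives rise to a possible missed cleavage.
toy = "MAGTKPELLRSSVDKKAAEFGHR"
print(tryptic_peptides(toy))
print(tryptic_peptides(toy, missed_cleavages=1))
```

Candidate peptides that span a K/R cluster or contain a K-P or R-P bond are the ones most likely to behave differently in a real digest, which is why such predictions need empirical confirmation.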
After using Skyline in preliminary verification, using 18O/16O labeling to check the ratios (relative quantitation) and method development between particular samples is useful because AQUA peptide used for absolute quantitation are very expensive and it is better to be sure which peptides are best suited for absolute quantitation once the method is developed.

Peptide synthesis

AQUA peptides are synthesized with a sequence corresponding to that of the peptide generated during digestion of the endogenous protein. During peptide synthesis, amino acids containing stable isotopes (18O, 13C, 2H or 15N) are incorporated into the peptide, yielding a peptide with the same chemical and physical characteristics as the endogenous target, but with a defined mass difference. 13C and 15N are the most commonly used stable isotopes because they do not lead to the chromatographic retention shifts seen with deuterated peptides. Usually, one heavy isotope-labeled leucine, proline, valine, phenylalanine or tyrosine is incorporated into an AQUA peptide, leading to a mass shift of 6-8 Da. For tryptic peptides, the C-terminal arginine or lysine is often heavy isotope-labeled so that the resulting y-ion series can be used for monitoring. The peptide is purified, and the exact amount of peptide is determined by amino acid analysis or total nitrogen content. Many commercial vendors synthesize AQUA peptides, including incorporation of a stable isotope-labeled amino acid (e.g., Sigma-Aldrich, Thermo Fisher Scientific or Cell Signaling Technology).
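The defined mass difference introduced by a heavy residue can be estimated from the number of 13C and 15N atoms it carries. The sketch below assumes fully 13C/15N-labeled residues (for example, leucine with six 13C and one 15N); the labeling schemes and function names are illustrative assumptions, and the labeling actually supplied by a vendor should be checked.

```python
# Monoisotopic mass gained per substituted atom (Da).
DELTA_13C = 1.0033548   # 13C minus 12C
DELTA_15N = 0.9970349   # 15N minus 14N

def label_mass_shift(n_13c, n_15n):
    """Mass difference (Da) between the heavy and the light peptide."""
    return n_13c * DELTA_13C + n_15n * DELTA_15N

def mz_shift(n_13c, n_15n, charge):
    """Spacing between light and heavy precursor m/z at a given charge state."""
    return label_mass_shift(n_13c, n_15n) / charge

# Assumed labeling schemes: (number of 13C, number of 15N) per heavy residue.
schemes = {"Val": (5, 1), "Leu": (6, 1), "Lys": (6, 2)}
for residue, (n_c, n_n) in schemes.items():
    shift = label_mass_shift(n_c, n_n)
    print(f"{residue}: +{shift:.4f} Da, +{mz_shift(n_c, n_n, 2):.4f} m/z at 2+")
```

Because the m/z spacing shrinks with increasing charge, the light and heavy precursors of a doubly charged peptide are separated by only half of the mass shift.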

Peptide validation

After synthesis, the AQUA peptide is analyzed by LC-MS/MS to verify its chromatographic behavior and fragmentation spectra. If they correspond to the previously detected or predicted characteristics, the peptide is ready for use. Quadrupole-based instruments are much better suited to SRM methods by virtue of their ability to generate a continuous ion beam in the SRM transition. In general, trapping instruments are easier to set up and operate, whereas quadrupole-based instruments often require more expertise to optimize fully. However, well-designed targeted SRM methods on a triple quadrupole MS instrument are capable of significantly lower limits of quantification in mixtures of very high complexity, such as whole-cell lysate or unfractionated serum.

Method optimization

An MS spectrum of the peptide (or peptides) is first collected, either by infusion or by LC-MS, or is predicted by theoretical digestion (using Skyline). Typically, the initial charge-state distribution is interrogated to establish the most sensitive charge state for further monitoring. Note that the actual charge-state distribution in a complex mixture may differ from that observed with purified peptides. When an AQUA method is first deployed in a real biological matrix, it is advisable to test multiple charge states to ensure that the most sensitive form of the analyte is ultimately used. From this MS spectrum, a SIM method is established with a narrow m/z scan range for the charge state with the highest intensity, covering both the AQUA peptide and the endogenous peptide. For SRM-based methods, the MS/MS spectra of the most intense precursor ions of the AQUA peptide are collected and inspected. Fragment ions at m/z ratios higher than that of the precursor ion are often more suitable for monitoring because noise is lower than in the lower m/z space. Ion intensity and retention time can be optimized by varying the amount of organic solvent in the peptide loading buffer and in the column equilibration phase of an LC-MS method. In addition, software tools are now available to assist in developing scheduled SRM methods and interpreting their data.
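The preference for fragment ions above the precursor m/z can be sketched as a simple filter over a theoretical y-ion series. This is an illustrative calculation under stated assumptions: unmodified residues, monoisotopic masses, singly charged y-ions, and an arbitrary example peptide. It is not a substitute for inspecting the measured MS/MS spectrum.

```python
# Monoisotopic residue masses (Da) for the 20 standard amino acids.
RESIDUE_MASS = {
    "G": 57.02146, "A": 71.03711, "S": 87.03203, "P": 97.05276,
    "V": 99.06841, "T": 101.04768, "C": 103.00919, "L": 113.08406,
    "I": 113.08406, "N": 114.04293, "D": 115.02694, "Q": 128.05858,
    "K": 128.09496, "E": 129.04259, "M": 131.04049, "H": 137.05891,
    "F": 147.06841, "R": 156.10111, "Y": 163.06333, "W": 186.07931,
}
WATER = 18.01056
PROTON = 1.007276

def precursor_mz(peptide, charge):
    """m/z of the protonated, unmodified peptide at the given charge state."""
    neutral = sum(RESIDUE_MASS[aa] for aa in peptide) + WATER
    return (neutral + charge * PROTON) / charge

def y_ions(peptide):
    """Singly charged y-ions y1..y(n-1) as (name, m/z) pairs."""
    ions, running = [], WATER + PROTON
    # Walk from the C-terminus, adding one residue per y-ion.
    for i, aa in enumerate(reversed(peptide[1:]), start=1):
        running += RESIDUE_MASS[aa]
        ions.append((f"y{i}", running))
    return ions

def candidate_transitions(peptide, charge=2):
    """Keep only y-ions whose m/z lies above the precursor m/z."""
    pre = precursor_mz(peptide, charge)
    return [(name, mz) for name, mz in y_ions(peptide) if mz > pre]

peptide = "LVNELTEFAK"  # arbitrary example sequence
print(f"precursor 2+: {precursor_mz(peptide, 2):.3f}")
for name, mz in candidate_transitions(peptide):
    print(f"{name}: {mz:.3f}")
```

For a doubly charged precursor this filter typically retains the longer y-ions, which then have to be ranked by their observed intensity.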

Sample preparation

The biological samples are collected and digested with trypsin. The sample can be digested directly or, to reduce complexity, fractionated or enriched before digestion. Measurement of protein abundance by AQUA is indirect and based on the abundance of the resulting peptides; complete proteolysis is therefore essential, and it should be confirmed by digesting the target mixture with increasing amounts of protease and/or for longer time periods. Before digestion, a denaturation step followed by reduction and alkylation is important for optimal trypsinization. Desalting of the sample to remove any salts is also very important.

MS analysis

The peptide mixture containing the endogenous and the AQUA peptide is analyzed on the mass spectrometer by a SIM or an SRM method, and the amount of endogenous peptide is determined. In contrast to a full MS scan, in a SIM experiment only a very narrow mass range is scanned, often by selectively injecting or trapping ions from the narrow scan range to increase the target ion signal-to-noise ratio. In an SRM experiment, a fragment ion or set of fragment ions is monitored. Typically, triplicate measurements in well-designed AQUA experiments produce coefficients of variation of 8%-15%. Combining this proteomics workflow with other novel biostatistical tools, and including clinical factors such as age and gender that have been shown to improve predictive performance[96], will increase the probability of early diagnosis.
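The quantification step can be sketched as a ratio calculation. The example assumes that the endogenous (light) and AQUA (heavy) peptides respond identically, so that the endogenous amount equals the light-to-heavy peak-area ratio multiplied by the spiked amount of AQUA peptide. The peak areas, the 50 fmol spike and the function names are hypothetical values chosen for illustration.

```python
from statistics import mean, stdev

def endogenous_amount(light_area, heavy_area, spiked_fmol):
    """Endogenous peptide amount (fmol) from the light/heavy peak-area ratio."""
    return light_area / heavy_area * spiked_fmol

def percent_cv(values):
    """Coefficient of variation in percent (sample standard deviation / mean)."""
    return stdev(values) / mean(values) * 100.0

# Hypothetical triplicate injections: (light area, heavy area), 50 fmol spiked.
replicates = [(1.20e6, 2.00e6), (1.05e6, 2.00e6), (1.35e6, 2.00e6)]
amounts = [endogenous_amount(light, heavy, 50.0) for light, heavy in replicates]

print([round(a, 2) for a in amounts])
print(f"mean = {mean(amounts):.2f} fmol, CV = {percent_cv(amounts):.1f}%")
```

With these made-up areas the three estimates are 30, 26.25 and 33.75 fmol, giving a CV of 12.5%, which falls inside the range quoted above.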

These results can be analyzed using statistical machine learning approaches such as a Multivariate Adaptive Regression Splines (MARS) model, which can search through a large number of candidate predictor variables to determine those most relevant to the classification model. MARS is a nonparametric regression procedure that seeks to create a classification model based on piecewise linear regressions. It is able to reliably track the very complex data structures that are often present in multi-dimensional data[97-100]. In this way, MARS effectively reveals important data patterns and relationships that other models are typically unable to detect.
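The piecewise linear building blocks of a MARS model are hinge functions of the form max(0, x - t) and max(0, t - x), where t is a knot. The sketch below only shows how an already fitted model with a single predictor would form a prediction; the intercept, coefficients and knot are hypothetical, and the selection of knots and terms performed by a real MARS fit is not shown.

```python
def hinge(x, knot, direction=1):
    """Hinge basis function: max(0, x - knot) if direction is +1,
    max(0, knot - x) if direction is -1."""
    return max(0.0, direction * (x - knot))

def mars_predict(x, intercept, terms):
    """Prediction of a one-predictor MARS-style model.

    terms is a list of (coefficient, knot, direction) tuples.
    """
    return intercept + sum(c * hinge(x, k, d) for c, k, d in terms)

# Hypothetical fitted model: the slope changes at a marker level of 5.0.
intercept = 1.0
terms = [(2.0, 5.0, 1), (-0.5, 5.0, -1)]

for level in (3.0, 5.0, 7.0):
    print(level, mars_predict(level, intercept, terms))
```

Each hinge contributes only on one side of its knot, which is how the model represents different linear trends in different regions of a biomarker's range.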

CONCLUDING REMARKS: THE FUTURE OF CLINICAL PROTEOMICS

Proteomics is a fast-maturing discipline that holds great promise for extending our understanding of the molecular basis of human diseases and for identifying novel biomarkers suitable for patient diagnosis, prognosis and treatment. Hopefully, this improved understanding will inform precision medicine and its application to patient care in the clinical setting. Several recent advances in proteomics have substantially simplified the analysis of serum proteins. Powerful workflows open up new possibilities for biomarker research that may lead to improved clinical assays and faster, more robust drug discovery and development. However, some considerations must still be addressed before the requirements of clinical applications are met. One key issue concerns sample collection, which varies from site to site; this variation can be evaluated using the reference sample set from the National Cancer Institute’s Early Detection Research Network, which will serve as a quality control during validation studies. Finally, the power of a panel of biomarkers is illustrated by comparison with AFP, a single marker whose validation requires huge sample numbers. By contrast, a quantifiable biomarker panel analyzed with statistical approaches greatly reduces the requirement for large sample sets during validation[101].

Drugs reach the market only after a lengthy and expensive development process, despite careful preclinical assessment to identify the most promising candidates. The risk that a drug candidate is withdrawn from testing during clinical trials, especially in the later stages, is a real concern that comes at considerable cost. There is an enormous impetus within the pharmaceutical industry to adopt new technologies that will hasten drug development and reduce the costs associated with bringing new drugs to market. Biomarkers are emerging as valuable tools for identifying potential drug failures at an early stage and thereby informing go/no-go decisions. Omics technologies play an increasingly important role in biomarker discovery and the latter stages of drug development (e.g., target discovery, mechanism of action or predicting toxicity). Recent advances in mass spectrometry, including SRM and novel high-resolution capabilities, have catalyzed the advent of proteomics and metabolomics as central components in biomarker discovery, quantification, validation, and clinical verification.

Clinical proteomics is poised to bring important direct “bedside” applications. We foresee a future in which physicians rely upon targeted proteomic analyses during various aspects of disease management. This paradigm shift will directly affect medicine, from the development of new therapeutics to clinical practice during the treatment of patients.

P- Reviewer: Coling D, Gam LH, Li Z, Provenzano JC S- Editor: Ji FF L- Editor: A E- Editor: Liu SQ

Open-Access: This article is an open-access article which was selected by an in-house editor and fully peer-reviewed by external reviewers. It is distributed in accordance with the Creative Commons Attribution Non Commercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: http://creativecommons.org/licenses/by-nc/4.0/