Published online Feb 14, 2025. doi: 10.3748/wjg.v31.i6.102090

Revised: December 8, 2024

Accepted: December 23, 2024

Processing time: 93 Days and 23.6 Hours

Inflammatory bowel disease (IBD) is a global health burden that affects millions of individuals worldwide, necessitating extensive patient education. Large language models (LLMs) hold promise for addressing patient information needs. However, the use of LLMs to deliver accurate and comprehensible IBD-related medical information has yet to be thoroughly investigated.

To assess the utility of three LLMs (ChatGPT-4.0, Claude-3-Opus, and Gemini-1.5-Pro) as a reference point for patients with IBD.

In this comparative study, two gastroenterology experts generated 15 IBD-related questions that reflected common patient concerns. These questions were used to evaluate the performance of the three LLMs. The answers provided by each model were independently assessed by three IBD-related medical experts using a Likert scale focusing on accuracy, completeness, and correlation. Simultaneously, three patients were invited to evaluate the comprehensibility of the answers. Finally, a readability assessment was performed.

Overall, each of the LLMs achieved satisfactory levels of accuracy, comprehensibility, and completeness when answering IBD-related questions, although their performance varied. All of the investigated models demonstrated strengths in providing basic disease information such as the definition of IBD as well as its common symptoms and diagnostic methods. Nevertheless, when dealing with more complex medical advice, such as medication side effects, dietary adjustments, and complication risks, the quality of answers was inconsistent between the LLMs. Notably, Claude-3-Opus generated answers with better readability than the other two models.

LLMs have potential as educational tools for patients with IBD; however, there are discrepancies between the models. Further optimization and the development of specialized models are necessary to ensure the accuracy and safety of the information provided.

Core Tip: This study evaluated the performance of three large language models, ChatGPT-4.0, Claude-3-Opus, and Gemini-1.5-Pro, in providing accurate and comprehensible information for patients with inflammatory bowel disease. Although all three models showed satisfactory accuracy and relevance, Claude-3-Opus excelled in terms of readability and patient comprehensibility. However, discrepancies in the provision of complex medical advice highlight the need for further optimization and specialized model development to ensure safe and accurate patient education.

- Citation: Zhang Y, Wan XH, Kong QZ, Liu H, Liu J, Guo J, Yang XY, Zuo XL, Li YQ. Evaluating large language models as patient education tools for inflammatory bowel disease: A comparative study. World J Gastroenterol 2025; 31(6): 102090

- URL: https://www.wjgnet.com/1007-9327/full/v31/i6/102090.htm

- DOI: https://dx.doi.org/10.3748/wjg.v31.i6.102090

Inflammatory bowel disease (IBD) is a chronic condition affecting millions of people worldwide[1,2]. It is characterized by inflammation of the gastrointestinal tract, leading to a range of symptoms and potential complications[3] and causing psychological problems in patients[4,5]. As IBD is a lifelong disease, many patients and their families have questions and concerns regarding the disease, such as its causes, diagnosis, treatment, and long-term management. Notably, the rapid development of artificial intelligence (AI) and natural language processing in recent years has led to the emergence of chat-based AI systems that provide information and support to patients with various health conditions. The vast para

AI use has been explored in various medical fields[9,10]. For instance, Hillmann et al[11] found that chat-based AI provided accurate and easily understandable information regarding atrial fibrillation and cardiac implantable electronic devices for patients, highlighting the potential of AI for patient education and shared decision making. In gastroenterology, Kerbage et al[12] reported that the responses of ChatGPT, a popular AI language model, were generally accurate in providing information on common gastrointestinal diseases and could potentially impact patient education and provider workload. Specifically focusing on IBD, Sciberras et al[13] reported that although ChatGPT responses were largely accurate in providing information with regards to the European Crohn's and Colitis Organization guidelines, some discrepancies were observed. Thus, further validation and refinement of AI-based patient information systems are needed.

Due to the specialized nature of medical consultations, the accuracy and rigor of LLM-generated answers require evaluation by medical experts[14]. Currently, there is a lack of recognized methods for assessing LLMs in the field of medical consultation. In our previous study, we assessed the feasibility of three popular LLMs in different languages as counseling tools for Helicobacter pylori infection[15], and the LLMs provided satisfactory responses to Helicobacter pylori infection-related questions. Nevertheless, studies on comparisons between different LLM models remain scarce, and the accuracy and comprehensibility of these systems for providing IBD-related information have not been studied extensively.

In this study, we aimed to compare whether the IBD-related medical consultation answers generated by different LLMs can accurately and rigorously meet the needs of patients. This study also sought to provide some evaluation methodology ideas for future researchers.

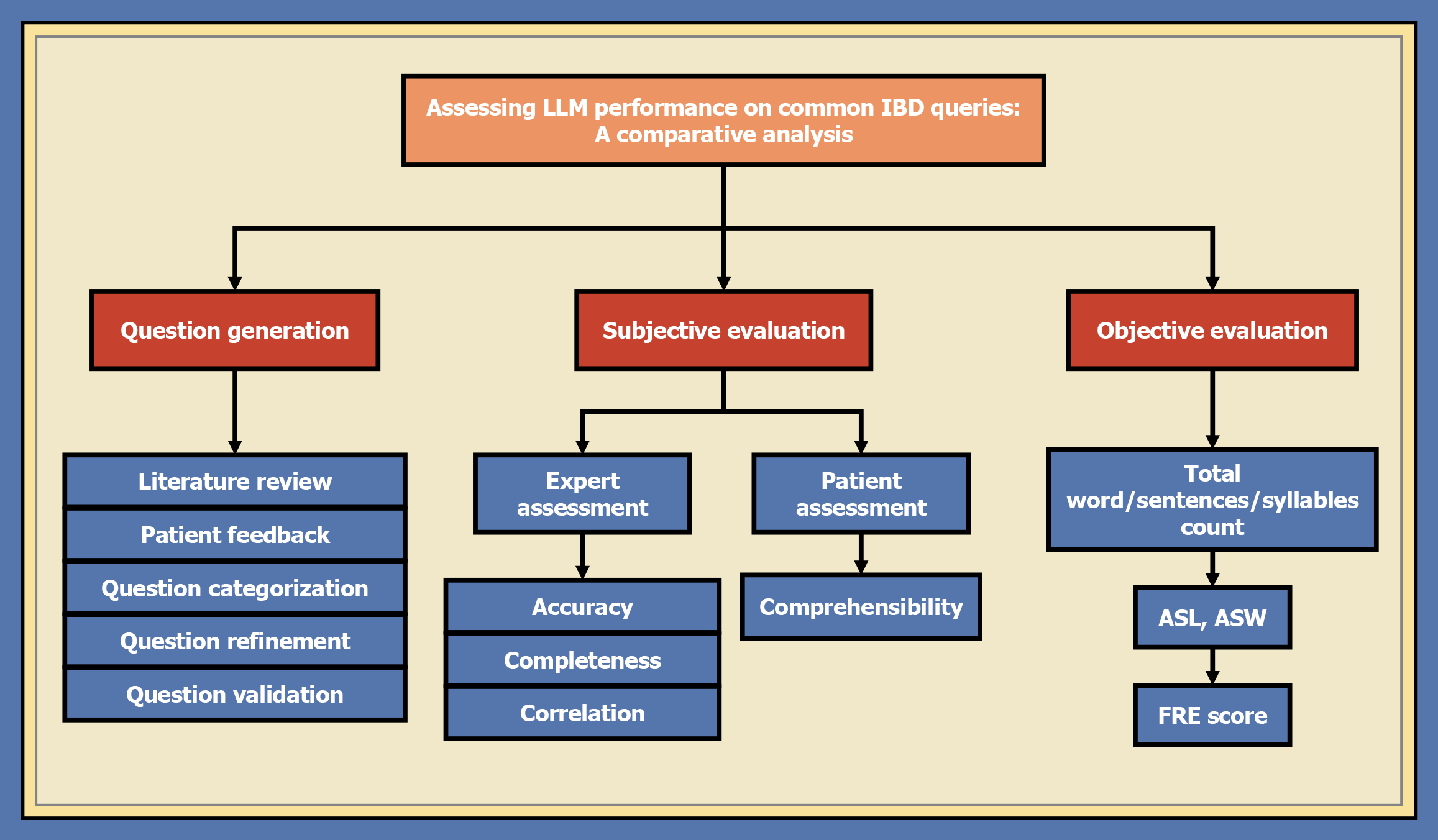

This study's design complies with the ethical principles of the Declaration of Helsinki. Since the study was observational and data were anonymous, informed consent was waived. This study followed a comprehensive research process involving several key stages: Question generation, input into LLMs, evaluation by medical experts, readability assessment by patients, and statistical analysis. The overall assessment methodology is illustrated in Figure 1.

An expert panel of two experienced gastroenterologists (Yan-Qing Li and Xiu-Li Zuo), each with more than 10 years of expertise in the field of IBD, generated a set of questions that reflected the common concerns and information needs of IBD patients. These questions encompass various critical aspects of IBD, including its introduction, diagnosis, treatment, and follow-up; the questions also provide a comprehensive reflection of the effectiveness of LLMs in delivering information related to IBD. The question generation process was conducted as follows.

Literature review: The expert panel conducted an exhaustive review of contemporary medical literature, clinical guidelines, and educational resources to identify the most pertinent and frequently inquired topics for patients with IBD.

Patient feedback: Drawing on their clinical experience, the expert panel incorporated patient feedback, including common inquiries during consultations and those raised within support groups and educational platforms, to generate the questions.

Question categorization: Based on the literature review and patient feedback, the expert panel identified four main categories of questions: Introduction to IBD, diagnosis, treatment plan, and follow-up. These categories were selected to cover key aspects of IBD management and ensure that the generated questions comprehensively addressed patient needs.

Question refinement: The panel collaboratively refined the questions to ensure clarity, brevity, and clinical relevance, aiming to make them accessible to a non-specialist audience.

Question validation: The expert panel consulted a group of five patients with IBD, recruited from the IBD clinic of Qilu Hospital, and two other gastroenterologists from the Department of Gastroenterology to validate the generated questions. Their feedback was incorporated to refine the questions further and ensure that the questions effectively captured the perspectives and information needs of the patient community. Table 1 presents the final set of 15 questions.

| Theme | Questions |
| --- | --- |
| Introduction | What is IBD? |
| | Why did I get IBD? |
| | Does IBD run in families? |
| Diagnosis | What are the common symptoms of IBD? |
| | How is IBD diagnosed? |
| | What diseases need to be differentiated and diagnosed from IBD? |
| Treatment | What medications are used to treat IBD? |
| | What are the potential side effects of common IBD medications? |
| | Will IBD need medication for life long? |
| | Can IBD be cured? |
| Follow-up | What are the signs and symptoms of a flare-up? |
| | What dietary changes are recommended for people with IBD? |
| | What are the potential complications of IBD? |
| | Does IBD increase the risk of colon cancer? |
| | Does having IBD affect fertility? |

ChatGPT-4.0 (GPT-4, OpenAI, San Francisco, CA, United States), Claude-3-Opus (Anthropic, San Francisco, CA, United States), and Gemini-1.5-Pro (Google, Mountain View, CA, United States) were the three LLMs selected to evaluate the performance of the AI-generated materials. ChatGPT-4.0, an advanced AI model developed by OpenAI, is known for its high-quality language generation and understanding capabilities. Claude-3-Opus, created by Anthropic, is another state-of-the-art AI model that has shown promising results in various language tasks. Gemini-1.5-Pro, developed by Google, is a powerful language model that has demonstrated strong performance in question answering and content generation.

The final set of questions was used as prompts for the three LLMs to generate detailed answers. Each LLM generated one detailed answer for each question, resulting in 45 answers (15 questions × 3 LLMs).

A panel of three gastroenterologists (Guo Jing, Liu Jun, and Liu Han), who were not involved in generating the questions, evaluated the answers from the LLMs. Using a tailored scoring rubric, the experts assessed each answer across three dimensions: Accuracy, completeness, and correlation. Accuracy assessed the agreement between the diagnostic outcomes provided by the LLMs and actual conditions, completeness referred to the inclusion of all essential information in the answers, and correlation focused on the relevance of the answers to the questions. The evaluation criteria are presented in Table 2, and the score range for each evaluation dimension was 1-5 points, with higher scores indicating better performance.

| Evaluation dimension | Score | Scoring criteria |
| --- | --- | --- |
| Accuracy | 1 | The answer contains serious errors or misleading information that may harm patients |
| | 2 | The answer contains some errors or inaccurate information, but it will not cause obvious harm to patients |
| | 3 | The information in the answer is mostly accurate, but there are a few ambiguous or uncertain statements |
| | 4 | The information in the answer is accurate, clearly stated, and without obvious errors |
| | 5 | The information in the answer is highly accurate, professionally and authoritatively stated, and fully consistent with current medical knowledge |
| Completeness | 1 | The answer is very brief, missing key information, and provides little to no help for the patient's actual question |
| | 2 | Although the answer mentions some relevant content, it lacks a significant amount of important information and provides limited help to the patient |
| | 3 | The answer covers the main relevant content but still omits some important information, making the guidance for the patient not comprehensive enough |
| | 4 | The answer covers most of the key content, and although it may omit a small amount of minor information, it is already very helpful to the patient |
| | 5 | The answer is very complete, covering all key information and providing a comprehensive answer to the patient's question |
| Correlation | 1 | The answer is almost completely unrelated to the patient's actual question, and the information lacks targeting |
| | 2 | Although some content in the answer is related to the question, most of the information deviates from the main topic and lacks targeting |
| | 3 | The main point of the answer is basically related to the patient's question, but there is a small amount of irrelevant or off-topic content |
| | 4 | The answer closely follows the patient's question, and almost all content is directly related, but there may be a few pieces of irrelevant information |
| | 5 | The answer is completely on-topic, and all content is highly relevant to the patient's question, making the information very targeted |

To ensure that the information was presented in an accessible manner, readability of the answers was assessed using the Flesch Reading Ease (FRE) score[9], a standard metric for estimating text readability. The FRE score was calculated based on two main factors, sentence length and number of syllables per word, using the following formula: FRE = 206.835 - (1.015 × ASL) - (84.6 × ASW) where ASL is the average number of words per sentence, and ASW is the average number of syllables per word.

ASL and ASW are calculated as follows: ASL = total words/total sentences; ASW = total syllables/total words.

The FRE scores range from 0 to 100, with higher scores indicating greater readability. A score between 60 and 70 is considered easily understandable, whereas a score between 90 and 100 indicates that the text is very easy to understand.
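The FRE formula above can be illustrated with a minimal Python sketch. The source does not state which tool was used to count sentences and syllables, so the tokenization rules and the vowel-group syllable heuristic below are assumptions made only for illustration; dedicated readability tools typically count syllables more carefully, and their scores may differ.

```python
import re


def count_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups (a rough heuristic, an assumption)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing silent "e" (as in "disease") as non-syllabic.
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """Compute FRE = 206.835 - 1.015 * ASL - 84.6 * ASW for an English text."""
    # Assumption: sentences end at ".", "!" or "?"; words are runs of letters.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    asl = len(words) / len(sentences)  # average words per sentence
    asw = sum(count_syllables(w) for w in words) / len(words)  # average syllables per word
    return 206.835 - 1.015 * asl - 84.6 * asw


if __name__ == "__main__":
    sample = "Inflammatory bowel disease is a chronic condition. It affects the gut."
    print(round(flesch_reading_ease(sample), 2))
```

Longer sentences raise ASL and longer words raise ASW, so both lower the score, which matches the interpretation that higher FRE values indicate easier text.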

We further evaluated the comprehensibility of the answers with a distinct cohort of three patients with IBD who had not been involved in the question generation process. These patients rated the understandability of the information using a standardized scale; the scoring criteria are presented in Table 3.

| Score | Scoring criteria |
| --- | --- |
| 1 | Completely do not understand, unable to understand the content of the answer |
| 2 | Difficult to understand, hard to understand the content of the answer, requiring further explanation |
| 3 | Partially understand, able to understand some of the content of the answer, but there is some confusion |
| 4 | Basically understand, able to understand most of the content of the answer, but still have some doubts |
| 5 | Fully understand, able to accurately summarize the content of the answer and resolve all doubts |

All data were analyzed using GraphPad Prism version 9.5.1 (GraphPad Software) and SPSS version 26.0 (IBM Corp.), and Microsoft Excel 2021 was used for data recording and organization. Continuous variables were summarized using the median and range, and graphical illustrations were used to depict the data. The Friedman test was applied to assess differences among the three groups, and P < 0.05 was considered statistically significant.
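To make the Friedman test concrete, the following is a minimal pure-Python sketch for three related groups (for example, three LLMs rated on the same items). It is not the authors' analysis, which was run in GraphPad Prism and SPSS; the function names and the ratings in the usage example are hypothetical. With three groups the statistic is compared against a chi-square distribution with 2 degrees of freedom, whose upper-tail probability is exp(-Q/2), so no statistics library is required. The source does not state how the pairwise comparisons were performed, so they are not sketched here.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values ascending (1 = lowest), giving tied values their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for i in order[pos:end + 1]:
            ranks[i] = mean_rank
        pos = end + 1
    return ranks


def friedman_three_groups(blocks):
    """Friedman test for k = 3 groups; blocks is a list of 3-tuples, one per rated item."""
    n, k = len(blocks), 3
    if n == 0 or any(len(block) != k for block in blocks):
        raise ValueError("each block must contain exactly three ratings")
    rank_sums = [0.0] * k
    tie_term = 0
    for block in blocks:
        for j, rank in enumerate(average_ranks(list(block))):
            rank_sums[j] += rank
        # Tie correction term: sum of (t^3 - t) over groups of tied values in the block.
        tie_term += sum(t ** 3 - t for t in Counter(block).values())
    correction = 1 - tie_term / (n * k * (k ** 2 - 1))
    if correction == 0:
        raise ValueError("all blocks are fully tied; the statistic is undefined")
    q = (12 / (n * k * (k + 1)) * sum(r ** 2 for r in rank_sums) - 3 * n * (k + 1)) / correction
    p_value = math.exp(-q / 2)  # chi-square upper tail, valid only for df = k - 1 = 2
    return q, p_value


if __name__ == "__main__":
    # Hypothetical 1-5 ratings for (model A, model B, model C); not data from the study.
    ratings = [(4, 4, 5), (3, 4, 5), (4, 5, 5), (3, 4, 4)]
    q, p = friedman_three_groups(ratings)
    print(f"Q = {q:.3f}, P = {p:.4f}")
```

Because Likert ratings produce many ties within a block, the tie correction matters here; without it the statistic would be understated.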

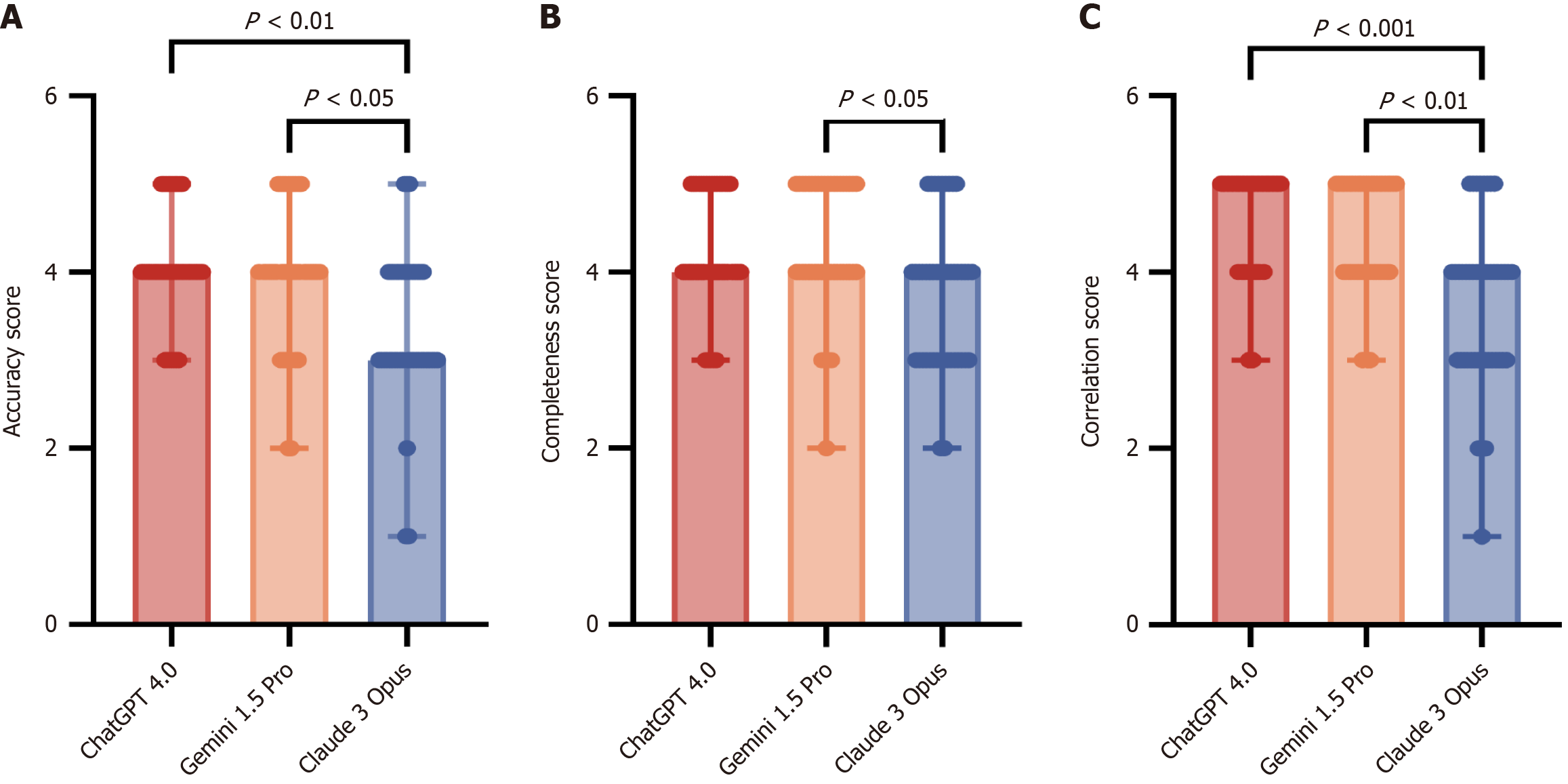

Table 4 shows that the average accuracy scores for all three models were slightly above 4, indicating that most of the answers were correct. However, the SD for Claude-3-Opus (mean: 4.02; SD: 0.66) was higher than those for ChatGPT-4.0 and Gemini-1.5-Pro (both mean: 4.06; SD: 0.61 and 0.62, respectively), suggesting a higher likelihood of a controversial diagnosis. Furthermore, the median score for Claude-3-Opus was only 3 (Table 5). Figure 2A shows that while all three LLMs demonstrated identical maximum scores, the median and third quartile scores for ChatGPT-4.0 and Gemini-1.5-Pro surpassed those of Claude-3-Opus. Additionally, Claude-3-Opus had a notably lower minimum score than ChatGPT-4.0 and Gemini-1.5-Pro. This suggests a marked disparity in the output precision between Claude-3-Opus and the other two LLMs. The Friedman test revealed no significant differences between ChatGPT-4.0 and Gemini-1.5-Pro (P > 0.05), but significant differences between these two LLMs and Claude-3-Opus (P < 0.05), with a particularly pronounced distinction between ChatGPT-4.0 and Claude-3-Opus (P < 0.01).

| Groups | Items | ChatGPT-4.0 | Gemini-1.5-Pro | Claude-3-Opus |
| --- | --- | --- | --- | --- |
| Expert assessment | Accuracy, mean (SD) | 4.06 (0.61) | 4.06 (0.62) | 4.02 (0.66) |
| | Completeness, mean (SD) | 4.24 (0.64) | 4.20 (0.66) | 4.27 (0.58) |
| | Correlation, mean (SD) | 4.57 (0.62) | 4.54 (0.66) | 4.52 (0.66) |
| Patient assessment | Comprehensibility, mean (SD) | 4.02 (0.75) | 4.07 (0.75) | 4.56 (0.66) |
| Objective evaluation | FRE score, mean (SD) | 32.25 (6.91) | 36.92 (8.99) | 54.44 (8.22) |

| Groups | Items | ChatGPT-4.0 | Gemini-1.5-Pro | Claude-3-Opus |
| --- | --- | --- | --- | --- |
| Expert assessment | Accuracy, median (Q1, Q3) | 4 (4, 4) | 4 (4, 4) | 3 (3, 4) |
| | Completeness, median (Q1, Q3) | 4 (4, 5) | 4 (4, 5) | 4 (3, 4) |
| | Correlation, median (Q1, Q3) | 5 (4, 5) | 5 (4, 5) | 4 (3, 4) |
| Patient assessment | Comprehensibility, median (Q1, Q3) | 4 (3, 5) | 4 (4, 5) | 5 (4, 5) |
| Objective evaluation | FRE score, median (Q1, Q3) | 31.10 (27.50, 34.30) | 32.79 (30.53, 42.61) | 51.47 (49.82, 56.09) |
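The summary statistics reported in Tables 4 and 5 (mean, SD, median, and quartiles) could be derived from the per-answer ratings along the following lines. This is a sketch using Python's standard `statistics` module; the `summarize` helper and the example scores are hypothetical, and the quartile interpolation method used by the authors' software is not stated in the source, so the `"inclusive"` method here is an assumption that may give slightly different quartiles.

```python
import statistics


def summarize(scores):
    """Return mean, sample SD, median, and quartiles for a list of ratings."""
    # Assumption: "inclusive" quartiles; other software may interpolate differently.
    q1, median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return {
        "mean": round(statistics.mean(scores), 2),
        "sd": round(statistics.stdev(scores), 2),  # sample standard deviation
        "median": median,
        "q1": q1,
        "q3": q3,
    }


if __name__ == "__main__":
    # Hypothetical 1-5 ratings for one model on one dimension; not data from the study.
    print(summarize([3, 4, 4, 5, 5]))
```

Reporting both forms is useful for ordinal Likert data, because the mean and the median can rank the models differently when score distributions are skewed.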

Table 4 shows that all three LLMs achieved a mean completeness score of approximately 4, indicating their capability to provide comprehensive analyses and integrative diagnoses. Claude-3-Opus had a slightly higher mean value (4.27) and lower standard deviation (0.58) than ChatGPT-4.0 (4.24 and 0.64, respectively) and Gemini-1.5-Pro (4.20 and 0.66, respectively). The median score for all LLMs was 4. ChatGPT-4.0 and Gemini-1.5-Pro consistently showed no significant differences, indicating similar capacities for understanding and recognizing medical issues. Figure 2B illustrates a modest yet statistically significant difference in completeness scores between Gemini-1.5-Pro and Claude-3-Opus (P < 0.05), with Claude-3-Opus showing lower score quantiles than ChatGPT-4.0 and Gemini-1.5-Pro.

ChatGPT-4.0 attained the highest mean correlation score of 4.57, which is 0.03 and 0.05 higher than those of Gemini-1.5-Pro and Claude-3-Opus, respectively. Both ChatGPT-4.0 and Gemini-1.5-Pro achieved the maximum median score of 5, whereas Claude-3-Opus scored 4, indicating a notable difference in content relevance. Within the graphical representation, this disparity was further accentuated, with Claude-3-Opus's quantiles trailing those of ChatGPT-4.0 and Gemini-1.5-Pro by 1-2 points (Figure 2C). A highly significant difference was observed between ChatGPT-4.0 and Claude-3-Opus (P < 0.001), and a significant difference was also observed between Gemini-1.5-Pro and Claude-3-Opus (P < 0.01). These results indicate that Claude-3-Opus provided answers with a comparatively lower level of professionalism and medical expertise than ChatGPT-4.0 and Gemini-1.5-Pro, the two LLMs with negligible difference in their performance.

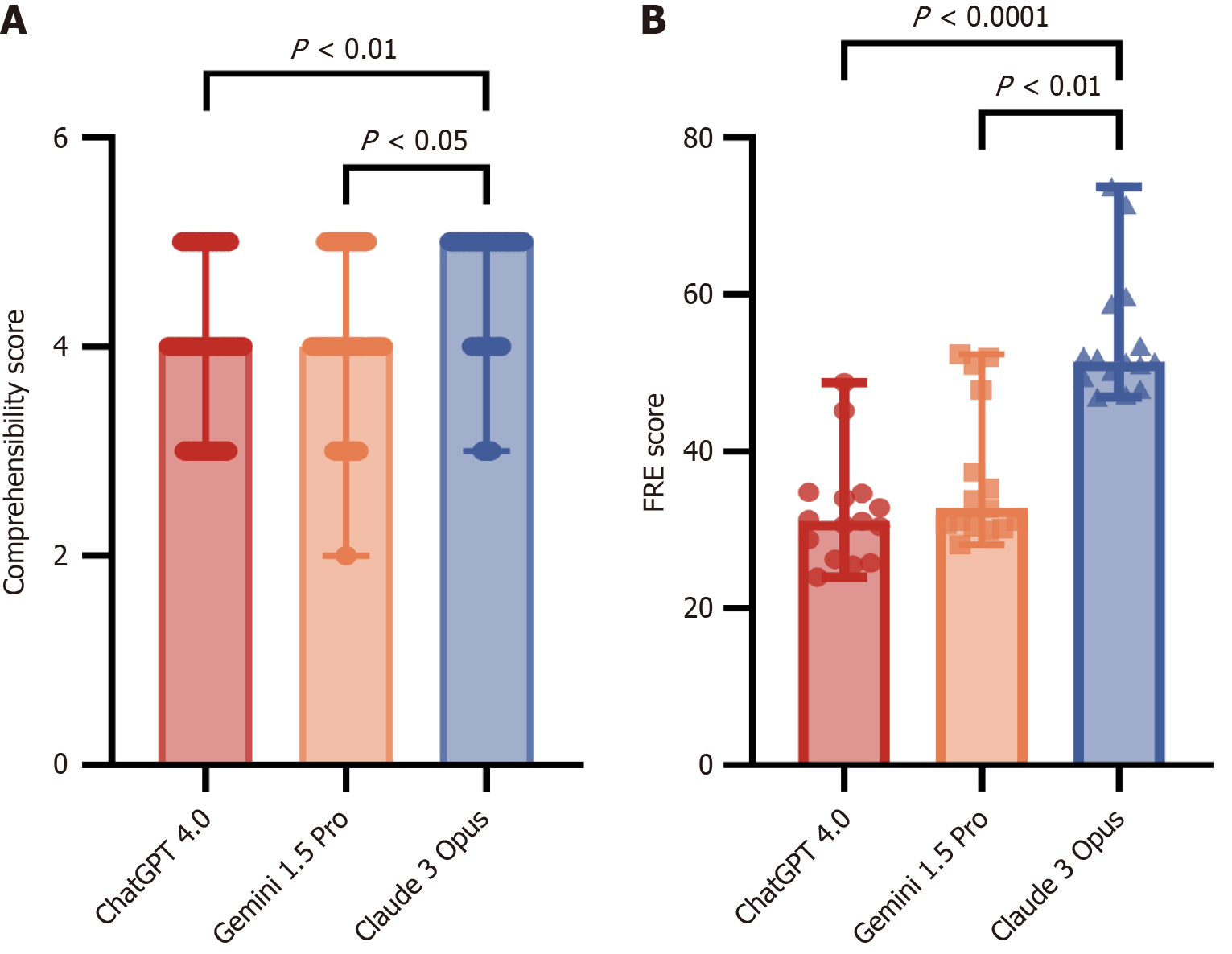

Claude-3-Opus received the highest comprehensibility ratings (median score: 5; mean score: 4.56), indicating that the patients found its answers to be the most comprehensible. ChatGPT-4.0 and Gemini-1.5-Pro showed minor differences in perceived comprehensibility, with median scores of 4 and mean scores of 4.02 and 4.07, respectively. Figure 3A shows that Claude-3-Opus had a higher median score than the other two LLMs. The variance analysis indicated significant differences between Claude-3-Opus and ChatGPT-4.0 (P < 0.01) and between Claude-3-Opus and Gemini-1.5-Pro (P < 0.05).

Claude-3-Opus demonstrated superior readability, with a median FRE score of 51.47 and mean score of 54.44, higher than ChatGPT-4.0 (median: 31.10, mean: 32.25) and Gemini-1.5-Pro (median: 32.79, mean: 36.92; Figure 3B). This finding highlights Claude-3-Opus's significant advantage in generating coherent and fluent results. The median Claude-3-Opus score was > 50% higher than those of ChatGPT-4.0 and Gemini-1.5-Pro. Additionally, the variance analysis demonstrated extremely significant differences between Claude-3-Opus and the other two LLMs (P < 0.0001 for Claude-3-Opus vs ChatGPT-4.0, and P < 0.01 for Claude-3-Opus vs Gemini-1.5-Pro).

This study aimed to evaluate the accuracy and comprehensibility of three LLMs, ChatGPT-4.0, Gemini-1.5-Pro, and Claude-3-Opus, in providing information related to IBD. The results indicate that these models performed well in terms of accuracy, completeness, and correlation, with average scores above 4 out of 5. Our findings were consistent with the results of several related studies[16], indicating that LLMs have strong capabilities in generating material well-suited to human reading. Additionally, each model has unique strengths and weaknesses. In the evaluation by medical experts, ChatGPT and Gemini showed higher accuracy than Claude, which corroborates the affirmation of ChatGPT model accuracy in the study conducted by Kerbage et al[12]. In the patient evaluation, Claude-3-Opus received the highest comprehensibility scores, indicating that the patients found its answers to be the most understandable. This finding was further supported by the readability assessments and may be related to Claude's specialized training in text comprehension[17,18]. In response to highly technical or subjective questions, all three models had issues with both accuracy and readability. ChatGPT used obscure language that is difficult to understand, whereas Gemini and Claude omitted important information, which could lead to patient misunderstanding. This suboptimal expression of professional information could be the biggest obstacle to the independent use of LLMs by patients without doctors. Moreover, the inherent black-box nature of AI technology exacerbates this risk and introduces additional uncertainties[19].

Overall, LLMs have shown significant potential in patient education and can handle IBD-related educational tasks. However, the differences in completeness and readability among different models highlight the need for continuous AI technology development to achieve trustworthiness and reliability, which engender confidence[20,21]. Notably, medical professionals should be aware of the limitations of these tools and should use them as supplementary resources to professional medical advice rather than as replacements.

This study has several strengths, including the use of a systematic approach to generate a comprehensive set of IBD-related questions, participation of medical experts and patients in the evaluation, and comparison between multiple state-of-the-art LLMs. However, we did not group the patients owing to the limited number of patients, thereby overlooking the potential bias arising from different availability of data for LLMs pertaining to different patient subgroups. In future studies, we plan to expand the sample size to ensure better representation across age, ethnicity, and disease severity. In addition, we plan to collect more clinical cohort data to comprehensively evaluate the application of LLMs in different patient groups. We will also conduct longitudinal observations to assess the long-term impact of LLMs on patient disease awareness and health status.

Future studies should focus on improving the completeness and reliability of LLMs in the context of medical counseling. Such research could involve the development of specialized medical LLMs trained on large datasets of medical literature and guidelines. In addition, involving healthcare professionals in the training and evaluation of these models could help ensure that the information provided is accurate, complete, and relevant.

Our study presented a thorough evaluation of three advanced LLMs in the context of education for patients with IBD. Although the models showed promise in terms of accuracy and comprehensibility, there is room for improvement, particularly in ensuring the completeness of the information provided. As AI continues to develop, it is essential to balance the benefits of these tools with rigorous validation and refinement in clinical practice.

1. Ciorba MA, Konnikova L, Hirota SA, Lucchetta EM, Turner JR, Slavin A, Johnson K, Condray CD, Hong S, Cressall BK, Pizarro TT, Hurtado-Lorenzo A, Heller CA, Moss AC, Swantek JL, Garrett WS. Challenges in IBD Research 2024: Preclinical Human IBD Mechanisms. Inflamm Bowel Dis. 2024;30:S5-S18.
2. Click B, Cross RK, Regueiro M, Keefer L. The IBD Clinic of Tomorrow: Holistic, Patient-Centric, and Value-based Care. Clin Gastroenterol Hepatol. 2024.
3. Ng SC, Shi HY, Hamidi N, Underwood FE, Tang W, Benchimol EI, Panaccione R, Ghosh S, Wu JCY, Chan FKL, Sung JJY, Kaplan GG. Worldwide incidence and prevalence of inflammatory bowel disease in the 21st century: a systematic review of population-based studies. Lancet. 2017;390:2769-2778.
4. Xiong Q, Tang F, Li Y, Xie F, Yuan L, Yao C, Wu R, Wang J, Wang Q, Feng P. Association of inflammatory bowel disease with suicidal ideation, suicide attempts, and suicide: A systematic review and meta-analysis. J Psychosom Res. 2022;160:110983.
5. Bisgaard TH, Allin KH, Elmahdi R, Jess T. The bidirectional risk of inflammatory bowel disease and anxiety or depression: A systematic review and meta-analysis. Gen Hosp Psychiatry. 2023;83:109-116.
6. Tarabanis C, Zahid S, Mamalis M, Zhang K, Kalampokis E, Jankelson L. Performance of Publicly Available Large Language Models on Internal Medicine Board-style Questions. PLOS Digit Health. 2024;3:e0000604.
7. Benítez TM, Xu Y, Boudreau JD, Kow AWC, Bello F, Van Phuoc L, Wang X, Sun X, Leung GK, Lan Y, Wang Y, Cheng D, Tham YC, Wong TY, Chung KC. Harnessing the potential of large language models in medical education: promise and pitfalls. J Am Med Inform Assoc. 2024;31:776-783.
8. Parodi S, Filiberti R, Marroni P, Libener R, Ivaldi GP, Mussap M, Ferrari E, Manneschi C, Montani E, Muselli M. Differential diagnosis of pleural mesothelioma using Logic Learning Machine. BMC Bioinformatics. 2015;16 Suppl 9:S3.
9. Finlayson SG, Subbaswamy A, Singh K, Bowers J, Kupke A, Zittrain J, Kohane IS, Saria S. The Clinician and Dataset Shift in Artificial Intelligence. N Engl J Med. 2021;385:283-286.
10. Longwell JB, Hirsch I, Binder F, Gonzalez Conchas GA, Mau D, Jang R, Krishnan RG, Grant RC. Performance of Large Language Models on Medical Oncology Examination Questions. JAMA Netw Open. 2024;7:e2417641.
11. Hillmann HAK, Angelini E, Karfoul N, Feickert S, Mueller-Leisse J, Duncker D. Accuracy and comprehensibility of chat-based artificial intelligence for patient information on atrial fibrillation and cardiac implantable electronic devices. Europace. 2023;26.
12. Kerbage A, Kassab J, El Dahdah J, Burke CA, Achkar JP, Rouphael C. Accuracy of ChatGPT in Common Gastrointestinal Diseases: Impact for Patients and Providers. Clin Gastroenterol Hepatol. 2024;22:1323-1325.e3.
13. Sciberras M, Farrugia Y, Gordon H, Furfaro F, Allocca M, Torres J, Arebi N, Fiorino G, Iacucci M, Verstockt B, Magro F, Katsanos K, Busuttil J, De Giovanni K, Fenech VA, Chetcuti Zammit S, Ellul P. Accuracy of Information given by ChatGPT for Patients with Inflammatory Bowel Disease in Relation to ECCO Guidelines. J Crohns Colitis. 2024;18:1215-1221.
14. McPeak G, Sautmann A, George O, Hallal A, Simal EA, Schwartz AL, Abaluck J, Ravi N, Pless R. An LLM's Medical Testing Recommendations in a Nigerian Clinic: Potential and Limits of Prompt Engineering for Clinical Decision Support. 2024 IEEE 12th International Conference on Healthcare Informatics (ICHI); 2024 June 03-06; Orlando, FL, USA. IEEE, 2024: 586-591.
15. Kong QZ, Ju KP, Wan M, Liu J, Wu XQ, Li YY, Zuo XL, Li YQ. Comparative analysis of large language models in medical counseling: A focus on Helicobacter pylori infection. Helicobacter. 2024;29:e13055.
16. Roster K, Kann RB, Farabi B, Gronbeck C, Brownstone N, Lipner SR. Readability and Health Literacy Scores for ChatGPT-Generated Dermatology Public Education Materials: Cross-Sectional Analysis of Sunscreen and Melanoma Questions. JMIR Dermatol. 2024;7:e50163.
17. Schmidl B, Hütten T, Pigorsch S, Stögbauer F, Hoch CC, Hussain T, Wollenberg B, Wirth M. Assessing the use of the novel tool Claude 3 in comparison to ChatGPT 4.0 as an artificial intelligence tool in the diagnosis and therapy of primary head and neck cancer cases. Eur Arch Otorhinolaryngol. 2024;281:6099-6109.
18. Fujimoto M, Kuroda H, Katayama T, Yamaguchi A, Katagiri N, Kagawa K, Tsukimoto S, Nakano A, Imaizumi U, Sato-Boku A, Kishimoto N, Itamiya T, Kido K, Sanuki T. Evaluating Large Language Models in Dental Anesthesiology: A Comparative Analysis of ChatGPT-4, Claude 3 Opus, and Gemini 1.0 on the Japanese Dental Society of Anesthesiology Board Certification Exam. Cureus. 2024;16:e70302.
19. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25:44-56.
20. Preiksaitis C, Ashenburg N, Bunney G, Chu A, Kabeer R, Riley F, Ribeira R, Rose C. The Role of Large Language Models in Transforming Emergency Medicine: Scoping Review. JMIR Med Inform. 2024;12:e53787.
21. Thirunavukarasu AJ, Ting DSJ, Elangovan K, Gutierrez L, Tan TF, Ting DSW. Large language models in medicine. Nat Med. 2023;29:1930-1940.

Open Access: This article is an open-access article that was selected by an in-house editor and fully peer-reviewed by external reviewers. It is distributed in accordance with the Creative Commons Attribution NonCommercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: https://creativecommons.org/Licenses/by-nc/4.0/