Published online Sep 28, 2025. doi: 10.3748/wjg.v31.i36.111137

Revised: August 7, 2025

Accepted: August 26, 2025

Published online: September 28, 2025

Processing time: 87 Days and 18.9 Hours

Gastrointestinal (GI) diseases, including gastric and colorectal cancers, signi

Core Tip: This review summarizes the latest advances in the application of deep learning-based convolutional neural networks in gastrointestinal endoscopy. It highlights convolutional neural networks’ roles in lesion detection, classification, segmentation, and real-time decision support, emphasizing their potential to enhance diagnostic accuracy, reduce variability, and integrate into clinical workflows for improved patient outcomes.

- Citation: Wang YY, Liu B, Wang JH. Application of deep learning-based convolutional neural networks in gastrointestinal disease endoscopic examination. World J Gastroenterol 2025; 31(36): 111137

- URL: https://www.wjgnet.com/1007-9327/full/v31/i36/111137.htm

- DOI: https://dx.doi.org/10.3748/wjg.v31.i36.111137

Gastrointestinal (GI) diseases, ranging from gastric cancer and colorectal cancer (CRC) to inflammatory bowel disease (IBD), represent a major global health burden[1,2]. The early and accurate diagnosis of these conditions is critical for improving patient prognosis and optimizing treatment outcomes[3]. Endoscopic examination is a cornerstone of GI diagnosis, offering direct visualization of the mucosal surface and enabling real-time biopsy and therapeutic intervention[4,5].

Despite its key role in clinical gastroenterology, conventional endoscopy has several limitations[6]. The diagnostic accuracy of conventional endoscopy is often influenced by the experience and observational skills of the endoscopist. This can lead to significant interobserver variability and a risk of missed lesions, particularly small or flat neoplasms[7]. The increasing demand for procedures and the volume of data also challenge the consistency and efficiency of diagnosis[8]. Thus, technological innovations are urgently needed to enhance the reliability of endoscopic evaluations. Artificial intelligence (AI), particularly deep learning (DL), has emerged as a transformative tool in the field of medical imaging[9,10]. Among DL techniques, convolutional neural networks (CNNs) are especially well-suited for analyzing complex endoscopic image data, outperforming traditional machine learning algorithms in various tasks like image recognition, classification, and segmentation[11-15]. Recent studies have increasingly explored integrating CNNs into GI endoscopy to develop computer-aided detection and diagnosis systems[11,16-18]. These AI-driven systems have demonstrated promising results in enhancing the detection of colorectal polyps and classifying gastric lesions, with performance often comparable to or exceeding that of experienced endoscopists[18-20].

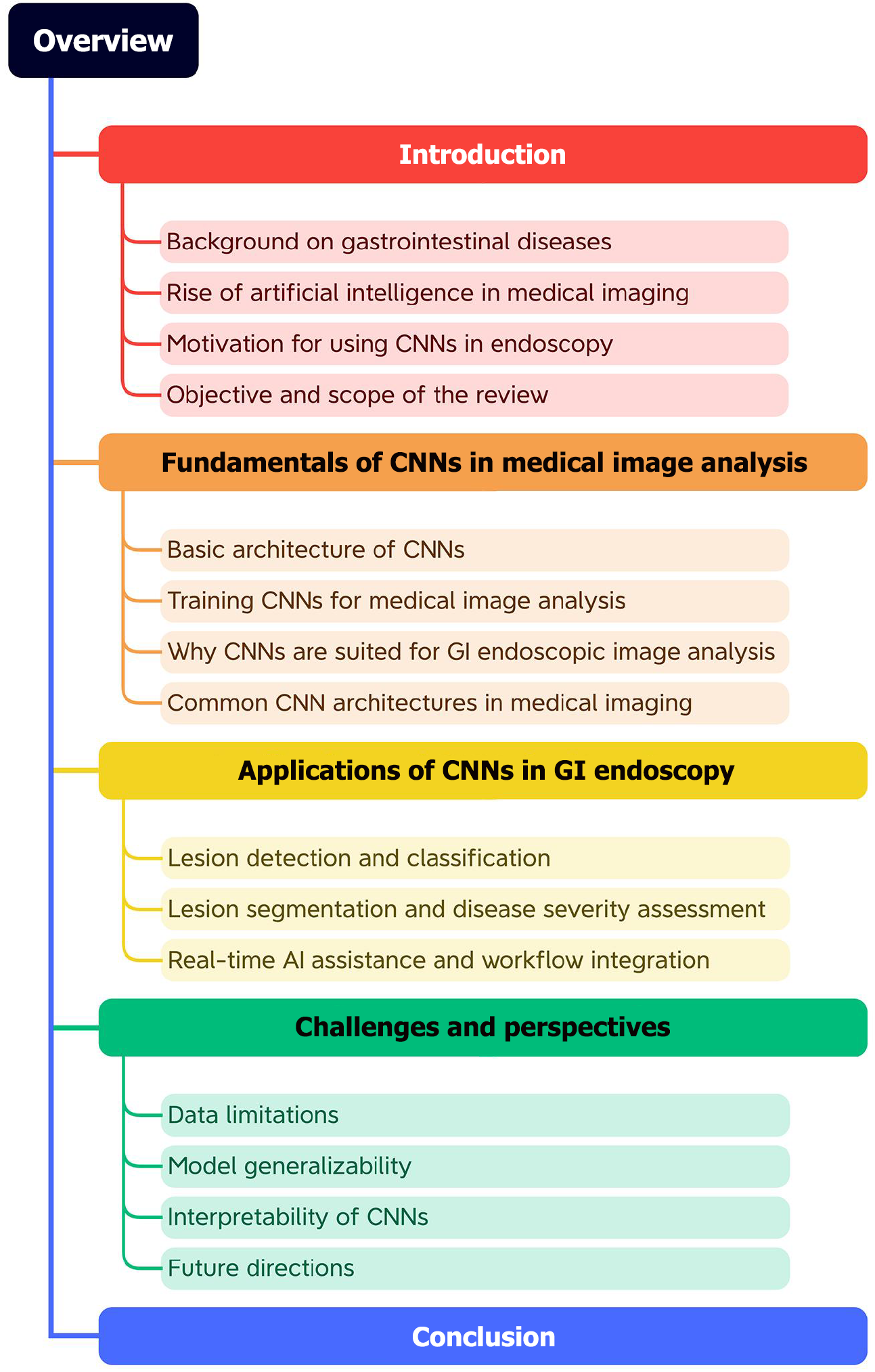

This review provides a comprehensive overview of the current state and future prospects of CNN applications in GI endoscopy. This is a narrative review, and the literature was surveyed by searching recent publications, with a focus on high-impact studies and landmark papers, to ensure a broad and unbiased representation of the field. As depicted in Figure 1, we first introduce fundamental CNN principles, then systematically examine their applications across various GI diagnostic scenarios. Additionally, we discuss model performance, challenges, and future directions to advance the clinical utility of CNN-based systems in endoscopic practice.

CNNs are a class of DL models specifically designed for processing data with a grid-like topology, such as images. CNNs have revolutionized the field of computer vision and have shown outstanding performance in a wide range of medical imaging tasks, including classification, detection, segmentation, and anomaly recognition. Their success lies in their ability to automatically and adaptively learn spatial hierarchies of features through backpropagation, reducing the need for manual feature engineering.

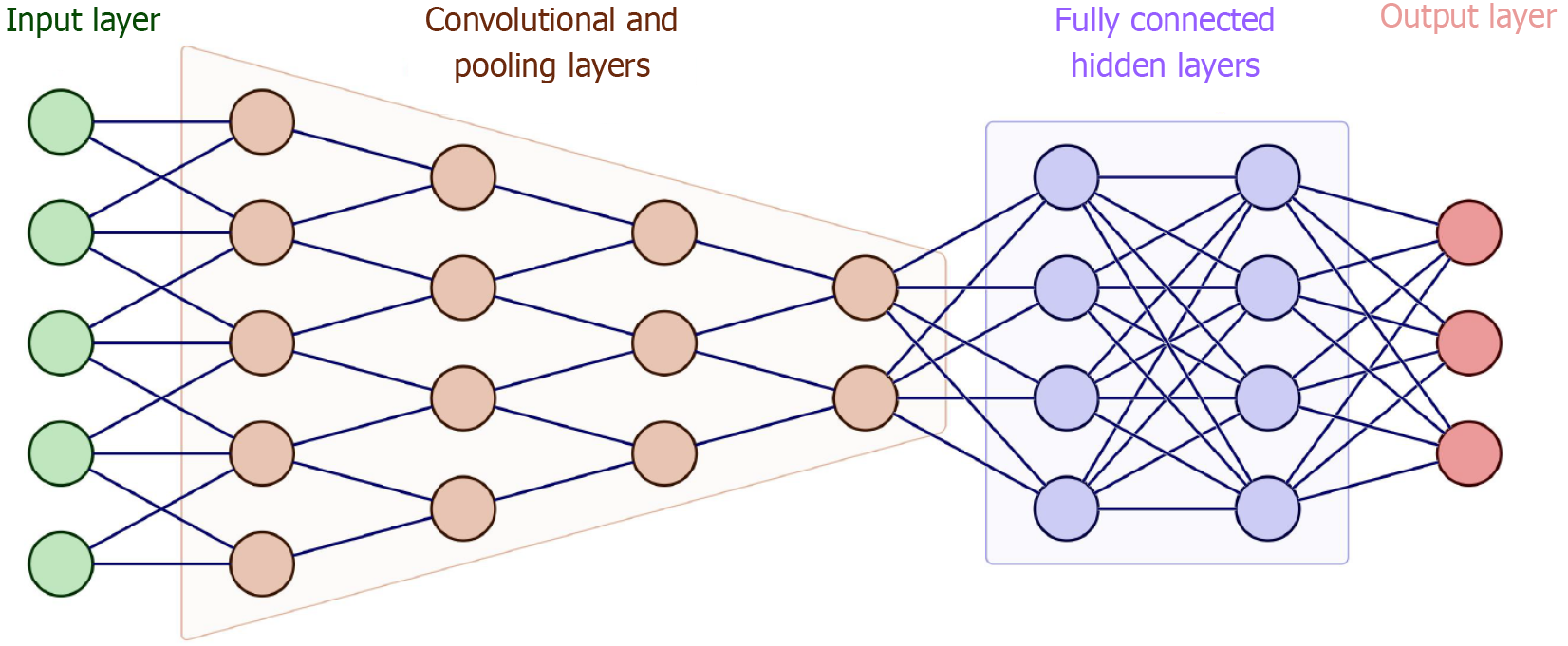

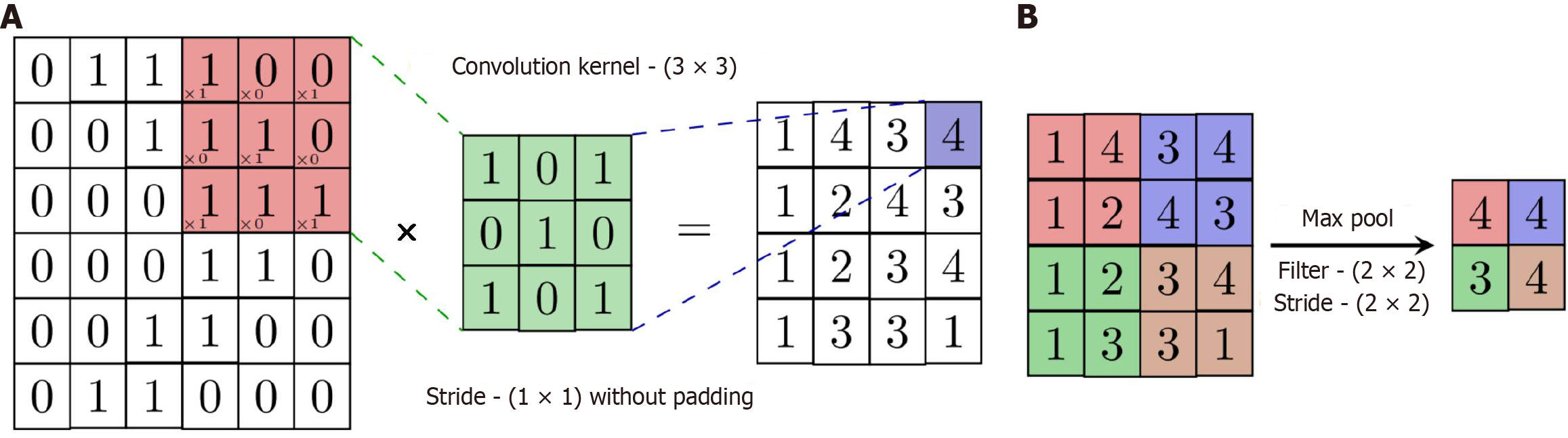

The basic architecture of a CNN typically comprises the following key components: An input layer, convolutional layers, activation functions, pooling layers, fully connected layers, and an output layer (Figure 2). The input layer serves as the entry point of the network, where raw image data, such as endoscopic images for detecting GI diseases, is fed into the model. This input is usually represented as a multi-dimensional tensor, incorporating the width, height, and the number of color channels (e.g., RGB). The convolutional layers are the core building blocks of CNNs. They apply a series of learnable filters (kernels) that slide over the input image to produce feature maps. These filters are designed to detect low-level patterns such as edges, textures, and contours in early layers, as well as more complex features such as lesions or anatomical structures in deeper layers. Figure 3 provides a detailed view of key operations within the network: Panel A shows a convolution operation using a 3 × 3 kernel that produces a 4 × 4 output feature map, while panel B demonstrates max-pooling with a 2 × 2 filter and stride of 2, which reduces the spatial resolution to 2 × 2. These operations not only condense and refine visual information but also enable the network to become increasingly sensitive to clinically relevant patterns in deeper layers. Together, this architectural design allows CNNs to support real-time decision-making in endoscopy, improve diagnostic accuracy, and reduce variability in clinical interpretation.
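The shape arithmetic described for Figure 3 can be reproduced with a minimal pure-Python sketch. It assumes a 6 × 6 single-channel input, stride 1, and no padding, since that is the configuration under which a 3 × 3 kernel yields a 4 × 4 feature map; the input values and the edge-detecting kernel are hypothetical and are not taken from the figure.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding.

    As in most deep learning frameworks, this is technically a
    cross-correlation (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2, stride=2):
    """Keep the maximum of each `size` x `size` window, moving by `stride`."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(feature_map[i * stride + di][j * stride + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical 6 x 6 grayscale patch (assumed input size, see lead-in).
image = [[(r * 6 + c) % 7 for c in range(6)] for r in range(6)]
# Hypothetical 3 x 3 kernel that responds to vertical edges.
kernel = [[1, 0, -1],
          [1, 0, -1],
          [1, 0, -1]]

feature_map = conv2d_valid(image, kernel)   # 6 - 3 + 1 = 4 rows and columns
pooled = max_pool2d(feature_map)            # (4 - 2) // 2 + 1 = 2 rows and columns

print(len(feature_map), len(feature_map[0]))
print(len(pooled), len(pooled[0]))
```

In a trained CNN the kernel values are learned rather than fixed by hand, and each layer applies many kernels in parallel across multiple channels.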

CNNs are trained using large datasets of labeled images. During training, the network adjusts its weights to minimize a loss function (e.g., cross-entropy for classification tasks), typically using stochastic gradient descent or its variants. In medical imaging, acquiring large, annotated datasets can be challenging due to privacy concerns, labeling costs, and inter-observer variability[21]. To address this, researchers often use data augmentation (e.g., rotations, flipping, and noise addition) and transfer learning, where pre-trained CNNs (e.g., ResNet, VGG, and Inception) are fine-tuned on medical datasets[22,23].
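The augmentation operations mentioned above (rotation, flipping, and noise addition) can be illustrated with a small standard-library sketch. The function names, the toy 2 × 3 "frame", and the noise level `sigma=5.0` are assumptions made for illustration; practical pipelines usually rely on an image library and tune these choices per dataset.

```python
import random


def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clip to the 8-bit range [0, 255]."""
    return [
        [min(255, max(0, round(pixel + rng.gauss(0.0, sigma)))) for pixel in row]
        for row in image
    ]


def augment(image, rng):
    """Return the original image plus three augmented variants."""
    return [
        image,
        horizontal_flip(image),
        rotate_90_clockwise(image),
        add_gaussian_noise(image, sigma=5.0, rng=rng),
    ]


rng = random.Random(0)                    # fixed seed for reproducibility
frame = [[10, 20, 30], [40, 50, 60]]      # hypothetical grayscale patch
variants = augment(frame, rng)
print(len(variants))
```

Each variant keeps the label of the original image, so a single annotated frame contributes several training examples without additional labeling effort.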

Moreover, model evaluation requires careful selection of performance metrics beyond simple accuracy. Commonly used metrics in medical applications include sensitivity, specificity, precision, F1-score, and area under the receiver operating characteristic curve, as together they provide a more complete picture of a model's clinical relevance than accuracy alone[24,25]. For segmentation tasks, the Dice coefficient and intersection over union are widely used[26,27]. However, high accuracy alone is insufficient; models must also generalize across diverse populations and imaging conditions. To this end, validation strategies such as cross-validation, external validation, and prospective testing are employed, with external and real-time clinical validations being particularly critical[28,29]. Public datasets like Kvasir and GIANA support standardized benchmarking but are limited by variability in annotation and image quality[30,31]. Furthermore, consistent reporting that follows AI-specific guidelines such as CONSORT-AI and TRIPOD-AI is vital for reproducibility and comparison across studies[32].
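As a brief illustration of how these metrics are computed, the following sketch derives sensitivity, specificity, precision, and F1-score from confusion-matrix counts, and the Dice coefficient and intersection over union from binary masks. All counts and masks are hypothetical, and the functions assume non-zero denominators for brevity.

```python
def classification_metrics(tp, fp, tn, fn):
    """Metrics from confusion-matrix counts (assumes non-zero denominators)."""
    sensitivity = tp / (tp + fn)          # recall: lesions correctly flagged
    specificity = tn / (tn + fp)          # normal frames correctly passed
    precision = tp / (tp + fp)            # flagged frames that are true lesions
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


def dice_and_iou(pred_mask, true_mask):
    """Overlap scores for two binary masks of identical shape."""
    pred = [p for row in pred_mask for p in row]
    true = [t for row in true_mask for t in row]
    intersection = sum(p & t for p, t in zip(pred, true))
    union = sum(p | t for p, t in zip(pred, true))
    dice = 2 * intersection / (sum(pred) + sum(true))
    iou = intersection / union
    return dice, iou


# Hypothetical lesion-detection results on 200 frames.
metrics = classification_metrics(tp=80, fp=10, tn=90, fn=20)

# Hypothetical predicted and reference segmentation masks.
pred_mask = [[1, 1, 0],
             [0, 1, 0]]
true_mask = [[1, 0, 0],
             [0, 1, 1]]
dice, iou = dice_and_iou(pred_mask, true_mask)

print(metrics)
print(dice, iou)
```

The two overlap scores are related by Dice = 2·IoU / (1 + IoU), so Dice is never lower than IoU for the same pair of masks; studies reporting one can be compared with studies reporting the other only after conversion.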

GI endoscopic images present complex visual features such as mucosal patterns, vascular structures, and subtle lesion morphology. CNNs excel in recognizing such hierarchical and spatial features because of their local receptive fields and weight-sharing mechanisms[33]. Unlike traditional machine learning algorithms that heavily rely on handcrafted features, CNNs automatically extract relevant features, enabling more objective and scalable diagnostic tools[34,35]. Furthermore, CNNs can be applied to both static images and dynamic video streams, making them particularly suitable for real-time applications in GI endoscopy[36]. Advanced architectures such as 3D CNNs or temporal convolutional networks have also been developed to handle sequential video data, capturing spatial-temporal information critical for detecting transient abnormalities or tracking lesion progression[37,38].
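The practical effect of local receptive fields and weight sharing can be seen in a simple parameter count. The sketch below compares one convolutional layer with one fully connected layer that produces the same number of output channels or units; the 224 × 224 RGB input size and the 64 outputs are assumptions chosen for illustration.

```python
def conv_params(kernel_h, kernel_w, in_channels, out_channels):
    """Weights plus one bias per filter; independent of image size."""
    return kernel_h * kernel_w * in_channels * out_channels + out_channels


def dense_params(in_features, out_features):
    """Weights plus one bias per unit; grows with the number of input pixels."""
    return in_features * out_features + out_features


# Assumed input: a 224 x 224 RGB endoscopic frame.
height, width, channels = 224, 224, 3

conv = conv_params(3, 3, channels, 64)                 # 64 shared 3 x 3 filters
dense = dense_params(height * width * channels, 64)    # 64 fully connected units

print(conv)    # 1792
print(dense)   # 9633856
```

Because the same small filter is reused at every image position, the convolutional layer needs several thousand times fewer parameters here, which is one reason CNNs can be trained on image data at practical dataset sizes.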

As demonstrated in Table 1[39-50], several CNN architectures, originally developed for computer vision tasks, have been successfully adapted and applied to the domain of medical image analysis. These adaptations often involve fine-tuning pre-trained models or designing novel architectures tailored to the specific characteristics and challenges inherent in medical imaging data. The success of CNNs in medical applications highlights their robust feature extraction capabilities and their ability to learn complex patterns from image data. This has led to advancements in tasks such as disease detection, segmentation, and diagnosis across various medical modalities.

| Ref. | Architecture | Key strengths | Limitations | Applications |
| --- | --- | --- | --- | --- |
| [39] | AlexNet | Introduced rectified linear unit, dropout, graphics processing unit acceleration | Overfitting on small datasets | Histopathology image classification |
| [40] | VGGNet | Uniform structure, easy to implement | Large number of parameters, memory-intensive | Polyp detection, organ segmentation |
| [41] | GoogLeNet | Multi-scale feature extraction, fewer parameters than VGG | Complex architecture, harder to modify | Lesion classification, colonoscopy image analysis |
| [42] | ResNet | Residual connections solve vanishing gradient | Can overfit if dataset is small | Detection of GI tumors, segmentation of ulcers |
| [43] | U-Net | Excellent for biomedical segmentation, works with few images | Limited to segmentation tasks | Polyp segmentation, mucosal layer delineation |
| [44] | DenseNet | Strong gradient flow, parameter-efficient | Computationally intensive | Endoscopic image classification, disease grading |
| [45] | Attention U-Net | Incorporates attention for better focus on relevant regions | Increased complexity and longer training time | IBD severity scoring; small bowel bleeding detection |
| [46] | EfficientNet | Optimized trade-off between accuracy and speed | Requires careful scaling and tuning | Lightweight, mobile-compatible GI image classification |
| [47] | Swin-CNN | Window-based attention + CNN; balances local and global features | Complex design; tuning is more demanding | GI endoscopy video anomaly detection; GI tumor recognition |
| [48] | TransUNet | Combines CNN for feature extraction and Transformer for context modeling | Resource intensive; slower training | Computed tomography/magnetic resonance imaging organ segmentation; GI lesion boundary detection |
| [49] | MedT (medical transformer) | Pure transformer-based; excellent at long-range dependency modeling | Not optimal for small datasets; data hungry | Intestinal lesion segmentation; colorectal cancer prediction |
| [50] | ConvNeXt | Combines CNN stability with Transformer-like design; efficient training | Relatively new; limited ecosystem maturity | Multi-organ classification; tumor region detection |

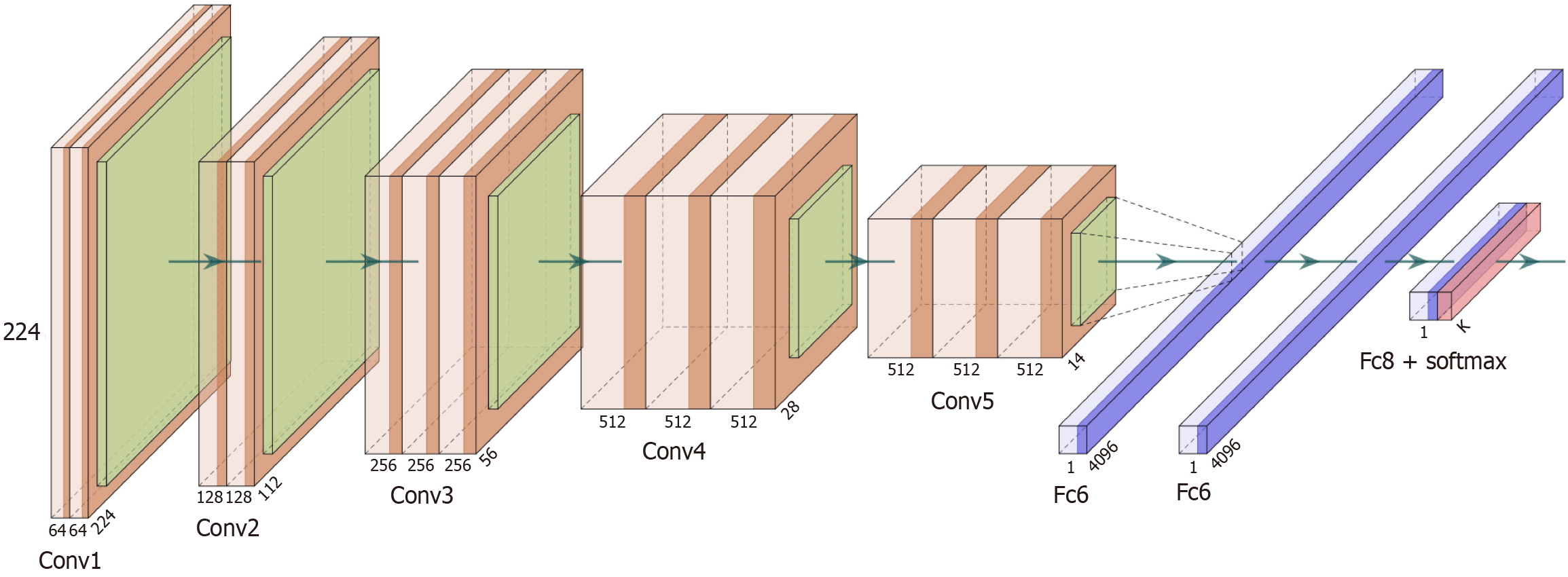

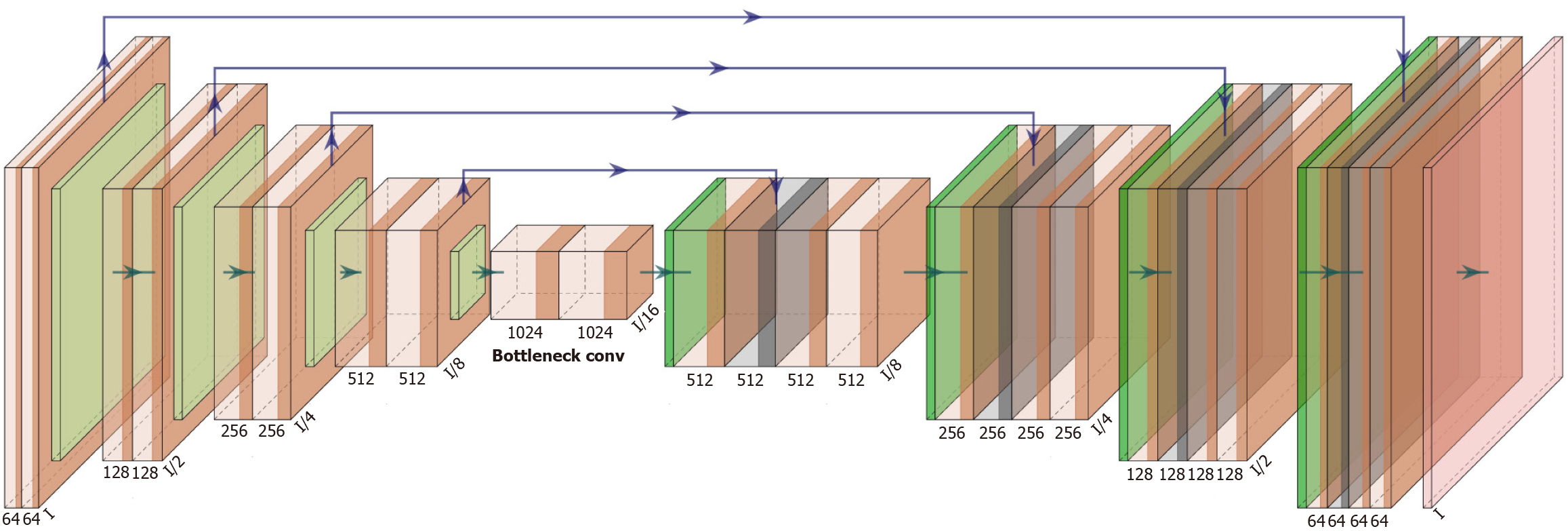

VGGNet, exemplified by VGG-16 (Figure 4), is notable for its depth and use of uniform 3 × 3 convolution kernels, enabling a simpler yet more powerful design than earlier models like AlexNet. Its success laid the foundation for deeper networks such as ResNet. U-Net (Figure 5), widely used in medical image segmentation, features a symmetric U-shaped architecture with a Contracting Path (encoder) that captures context through downsampling, and an Expansive Path (decoder) that restores spatial resolution for precise localization. Skip connections link encoder and decoder layers at corresponding levels, enhancing the network’s ability to learn both global and local features. This design makes U-Net especially effective in delineating fine structures in medical images.

The choice of CNN architecture is critical for achieving optimal performance, and the models adopted for GI endoscopy tasks differ in their strengths and weaknesses. A direct comparison reveals important trade-offs between performance, computational complexity, and suitability for specific tasks. For classification tasks, models like ResNet and EfficientNet are commonly used. ResNet, with its residual connections, effectively mitigates the vanishing gradient problem, allowing for the training of very deep networks. It has been widely adopted for polyp and lesion classification due to its robust performance. However, its deeper variants carry a large number of parameters, which can lead to significant computational overhead. In contrast, EfficientNet uses a compound scaling method that scales network depth, width, and input resolution jointly, which typically yields comparable or higher accuracy with substantially fewer parameters. This makes it particularly suitable for scenarios where computational resources are limited, such as real-time deployment on edge devices.
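The compound scaling idea can be sketched in a few lines. In the original EfficientNet paper, a single coefficient φ scales depth, width, and resolution by α^φ, β^φ, and γ^φ, with the constants chosen so that α·β²·γ² ≈ 2. The values α = 1.2, β = 1.1, and γ = 1.15 used below are those reported for the EfficientNet-B0 baseline in that paper and are not taken from this review; released EfficientNet variants also round the resulting sizes, so the numbers here are indicative only.

```python
# Constants reported for the EfficientNet-B0 baseline (assumption: recalled
# from the original EfficientNet paper, not stated in this review).
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scaling(phi):
    """Return multipliers for depth, width, resolution, and approximate FLOPs."""
    depth = ALPHA ** phi            # more layers
    width = BETA ** phi             # more channels per layer
    resolution = GAMMA ** phi       # larger input images
    # FLOPs grow roughly linearly with depth and quadratically with
    # width and resolution.
    flops = depth * width ** 2 * resolution ** 2
    return depth, width, resolution, flops


for phi in range(4):
    d, w, r, f = compound_scaling(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPs x{f:.2f}")
```

Each unit increase in φ roughly doubles the computational cost, which gives a single knob for matching a model to the compute budget of a given endoscopy system.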

For segmentation tasks, architectures based on the U-Net family are dominant. The classic U-Net architecture, with its contracting and expanding paths and skip connections, is highly effective for segmenting lesions with high precision. Its strengths lie in its ability to fuse high-resolution features from the encoder with high-level features from the decoder, which is crucial for pixel-level prediction. The more recent TransUNet, which integrates Transformer-based self-attention mechanisms into the U-Net structure, aims to capture long-range dependencies more effectively than pure CNN-based models. While TransUNet may offer improved performance on certain complex segmentation tasks, it often comes with a higher computational cost and a greater demand for large-scale training data. The decision between these architectures often depends on the specific clinical application. For instance, a ResNet or EfficientNet might be sufficient for a classification system to quickly screen for polyps, while a U-Net or its variants would be necessary for precise boundary detection for surgical planning or automated lesion size measurement.

CNNs have demonstrated transformative potential in GI endoscopy, where the accurate and timely interpretation of endoscopic images is critical for disease diagnosis and management[51]. By learning spatial hierarchies of visual features directly from data, CNNs have enabled automation of key diagnostic tasks, reducing interobserver variability and improving lesion detection accuracy[51]. Their core clinical applications in GI endoscopy can be categorized into three major domains: Lesion detection and classification, lesion segmentation and disease severity assessment, and real-time clinical assistance and workflow integration.

The detection and classification of lesions represent foundational applications of CNNs in GI endoscopy[52,53]. These tasks are critical for the early diagnosis of malignancies and the prevention of cancer progression, especially in the context of CRC and upper GI neoplasms. CNNs enable automatic extraction of hierarchical image features and recognition of subtle visual patterns in endoscopic images, which may be challenging even for expert clinicians to identify.

In colonoscopy, CNN-based systems have been particularly effective in identifying colorectal polyps[54-56]. Missed polyps, especially flat or diminutive ones, are a well-known contributor to interval CRCs. Studies have demonstrated that CNN models trained on large annotated image datasets can achieve high sensitivity and specificity, often surpassing generalist endoscopists in polyp detection. For example, CNNs have been employed to distinguish adenomatous from hyperplastic polyps in real time, facilitating immediate clinical decision-making regarding resection or surveillance. Additionally, CNNs have been trained to classify polyps based on size, morphology, and surface patterns, using schemes such as the Narrow-band imaging International Colorectal Endoscopic (NICE) classification or the Workgroup serrAted polypS and Polyposis (WASP) classification, further aiding risk stratification[57].

In upper GI endoscopy, lesion detection is equally critical but often more complex because of the heterogeneous nature of mucosal appearance. CNNs have shown promising results in detecting early gastric cancer, which may present as subtle discoloration, textural changes, or shallow depressions[57]. Similarly, CNN models have been developed to identify esophageal squamous cell carcinoma and dysplastic Barrett’s esophagus, matching or outperforming experienced specialists[57,58]. Many of these models are trained using narrow-band imaging, blue-light imaging, or linked color imaging to enhance mucosal contrast and improve lesion visibility[59,60].

One of the major strengths of CNNs in classification tasks lies in their ability to learn directly from raw pixel data without requiring handcrafted features. This feature allows them to generalize across different imaging modalities, endoscope brands, and lighting conditions, provided they are trained on diverse and well-curated datasets. Some models are now being designed with attention mechanisms or multi-task architectures to simultaneously detect and classify lesions while highlighting salient image regions to improve interpretability[61,62]. Looking forward, the integration of CNNs with electronic health records, histopathology, and genetic data could enable more personalized risk prediction and lesion characterization[63]. Furthermore, federated learning and domain adaptation techniques may allow CNNs to perform consistently across institutions, even when local data cannot be shared due to privacy concerns. Ultimately, CNNs hold significant promise to standardize and enhance lesion detection and classification in GI endoscopy, reducing variability, improving outcomes, and enabling earlier intervention. Table 2 offers a comprehensive overview of studies that used CNNs for lesion detection and classification in GI disease[64-109].

| Data | Architecture | Application | Key findings | Ref. |
| --- | --- | --- | --- | --- |
| Endoscopic images | Deep CNN with high-resolution endoscopic input | Aids in detecting laterally spreading tumors in the colon using deep learning to enhance endoscopic visualization accuracy | The CNN model significantly improved the detection rate of laterally spreading tumors compared to conventional endoscopy, reducing oversight and potentially improving early colorectal cancer diagnosis. Accuracy increased notably in identifying flat lesions that are otherwise difficult to detect manually | [64] |
| GI endoscopy images | Deep CNN trained on large annotated image dataset | Designed to detect and classify a wide range of GI tract diseases in real-time using endoscopic imagery | The model achieved high diagnostic accuracy across multiple disease categories, outperforming traditional classifiers. It demonstrated strong potential for integration into clinical decision support tools, increasing consistency and efficiency in endoscopic diagnoses | [65] |
| Wireless capsule endoscopy images | CNN with real-time image processing pipeline | Supports non-invasive detection of GI diseases using capsule endoscopy video sequences | Achieved expert-level accuracy in detecting abnormal lesions in small bowel images. This system allows for automated and accurate screening, significantly reducing time spent by clinicians reviewing lengthy capsule footage | [66] |
| White-light endoscopy | CNN model trained on early gastric cancer cases | Early detection of gastric cancer through white-light endoscopy imagery with AI assistance | The model enabled early cancer identification with high sensitivity and specificity, improving upon the detection rates of endoscopists. Its implementation could lead to reduced gastric cancer mortality by facilitating prompt treatment decisions | [67] |
| Endoscopic images | CNN with multi-center training data | Detecting Helicobacter pylori infection from endoscopy images | The CNN demonstrated high performance and generalizability across institutions. It offers a non-invasive, fast diagnostic method, comparable to biopsy confirmation, enabling real-time feedback during endoscopic exams | [68] |
| Gastroscopy images | Deep dense CNN with channel attention mechanism | Automated classification of esophageal disease types (e.g., ulcers, neoplasms) | By incorporating attention modules, the model excelled in distinguishing subtle texture differences among esophageal pathologies, showing great promise in supporting less experienced endoscopists | [58] |
| Capsule endoscopy images | CNN for object detection | Automatic detection of small intestinal hookworms | The proposed system achieved high precision in detecting parasitic lesions, helping clinicians quickly identify the presence of hookworm infections, which are often missed due to subtle image features | [69] |
| Capsule endoscopy images | CNN trained on hemorrhagic potential lesions | Identification and differentiation of small bowel lesions with bleeding risk | Accurately categorized lesions by bleeding risk, offering clinicians critical insight for prioritizing cases. The system reduces subjectivity in evaluating lesion severity | [70] |
| Endoscopic images | Deep CNN classifier for esophageal lesion types | Differentiation of protruding esophageal lesions (e.g., GI stromal tumors, leiomyomas) | CNN provided more accurate differential diagnosis than generalist endoscopists. Can support pre-biopsy decision-making, potentially reducing unnecessary interventions | [71] |
| Endoscopic images | Transfer CNN architecture (e.g., ResNet-based) | Detection of early gastric cancer | Transfer learning from pre-trained CNNs enabled rapid training on limited labeled data while maintaining strong detection performance. Highlights usefulness of transfer learning in GI imaging where large datasets are rare | [72] |
| Endoscopic images | Fusion of multiple CNNs via fuzzy Minkowski distance | Multi-class classification of GI disorders | This fuzzy fusion method significantly boosted CNN decision reliability in classifying various GI tract diseases. Particularly effective in handling noisy or borderline cases by leveraging ensemble confidence | [73] |
| Double-balloon enteroscopy | Custom deep CNN with lesion region focusing | Detecting and classifying small bowel lesions (e.g., ulcers, tumors) during double-balloon enteroscopy | Achieved high sensitivity for subtle and rare lesions in the small intestine. Helped reduce diagnostic time and enabled detection of hard-to-access areas, improving double-balloon enteroscopy clinical utility | [74] |
| Endoscopic images | CNN with transfer learning (e.g., ResNet pretrained on ImageNet) | Detecting GI abnormalities in multi-center datasets | Transfer learning approach enhanced classification accuracy with limited training data. It proved robust across image variations, useful in practical endoscopy deployments with different imaging devices | [75] |
| GI tract images | Deep CNN with region proposal and hierarchical labeling | Classification of syndromes in the GI tract (e.g., inflammation, bleeding, neoplasms) | Outperformed traditional classifiers with over 90% accuracy on several syndrome categories. System demonstrated early signs of suitability for autonomous decision-making in AI-assisted endoscopy | [76] |
| Capsule endoscopy images | CNN with spatial attention and region localization | Detection and precise localization of GI diseases in capsule footage | The model not only identified lesions but also marked their spatial location, reducing time required for manual review. Showed high generalization across bleeding, polyps, and ulcers | [53] |
| Endoscopic images | Multi-fusion CNN with spatial and feature fusion | Diagnosis of complex GI disease states through multiple feature paths | Fusion approach combined texture, edge, and color patterns from multiple CNN streams, improving classification of ambiguous or compound lesions. Enabled more granular diagnosis | [77] |
| Capsule endoscopy images | Deep multi-class CNN classifier | Distinguishing ulcerative colitis, polyps, and dyed-lifted polyps | The model achieved strong performance on multi-label lesion classification, aiding clinicians in identifying inflammation patterns vs neoplastic changes from wireless capsule endoscopy images | [56] |
| Endoscopic images | Deep CNN for histological prediction | Detection of chronic atrophic gastritis | The system outperformed general practitioners in identifying chronic atrophic gastritis on white-light images. This could enable earlier intervention for patients at risk of gastric cancer | [78] |
| Endoscopic images | CNN-based diagnostic prediction model | Classification of multiple GI disorders including gastritis and neoplasms | The system offered a probabilistic diagnosis output that correlated well with clinical reports. Could serve as a second reader to reduce misdiagnosis | [79] |
| Endoscopic images | Deep CNN trained on villous atrophy cases | Detection of duodenal villous atrophy, a hallmark of celiac disease | Model enabled accurate identification of atrophic mucosal features that are often missed by the naked eye. Can improve non-invasive screening for celiac disease | [80] |
| Endoscopic images | CNN with lesion localization layer | Early gastric cancer classification and localization | The system not only detected cancer but pinpointed lesion boundaries, assisting in targeted biopsies. Shown to reduce oversight in flat lesions | [81] |
| Endoscopic images | Deep CNN prospectively tested | Diagnosis of chronic atrophic gastritis | Prospective validation showed significant improvement in diagnosis rate compared to conventional methods. Demonstrated practical deployment readiness | [82] |
| Endoscopic images | CNN trained and validated against multiple architectures | Detection of gastric mucosal lesions | Compared multiple CNN methods and showed the proposed model performed best in both sensitivity and specificity. Suggested a robust preprocessing pipeline | [83] |
| Endoscopic images | Deep CNN with classification and detection modules | Broad GI disease detection and classification, including ulcers and tumors | The CNN model integrated multiple diagnostic modules, enabling both lesion classification and visual confirmation. It significantly outperformed traditional rule-based systems and provided explainable outputs to support clinical decisions | [84] |
| Endoscopic images | Deep CNN trained on annotated gastric polyp datasets | Detection of gastric polyps with high sensitivity | The model effectively identified polyps, including flat and small ones. It helped reduce miss rates in routine gastroscopy and can act as a real-time assistant for novice endoscopists | [54] |
| Endoscopic images | Ensemble machine learning with CNN and decision tree comparisons | GI disease classification from diverse image types | Though primarily a comparative study, CNNs were shown to outperform other models in most lesion categories. Highlighted CNNs’ suitability for complex image recognition tasks in GI settings | [85] |
| Endoscopic images | Deep convolutional architecture trained on expert-labeled data | Diagnosis of common gastric lesions including gastritis and erosions | Demonstrated high diagnostic accuracy and reduced inter-observer variability, suggesting CNN use can help standardize endoscopic assessments across institutions | [86] |
| Capsule endoscopy | Deep CNN with binary output mode | Binary lesion detection (normal vs abnormal) in capsule endoscopy | The binary CNN model achieved excellent sensitivity in identifying abnormal frames, acting as an efficient pre-filter to aid clinicians reviewing thousands of capsule images | [87] |
| Endoscopic images | Deep CNN tested across multiple centers | Gastritis classification using AI comparable to gastroenterologists | Achieved expert-level performance in classifying gastritis subtypes. Multicenter validation proved its robustness across varied endoscopic systems and clinical environments | [20] |
| Endoscopic images | Attention-guided CNN with feature weighting | GI disease classification enhanced with attention mechanisms | Attention modules enabled the model to focus on key lesion areas, improving classification accuracy for overlapping or complex cases. Boosted explainability in predictions | [62] |
| Wireless capsule endoscopy images | Pretrained CNN fine-tuned on wireless capsule endoscopy images | GI tract disease classification from capsule endoscopy | The transfer learning-based CNN adapted well to wireless capsule endoscopy image features. It accurately classified disease regions, even under motion blur and illumination changes | [88] |
| Endoscopic images | GI-Net: CNN with anomaly detection capability | Multi-label classification of anomalies in GI tract | GI-Net offered near real-time classification across numerous disease types, showing strong performance in clinical pilot tests. Especially useful in large-scale screenings | [89] |
| Endoscopic images | Deep CNN trained on Helicobacter pylori-positive and negative images | Evaluation of Helicobacter pylori infection | Model achieved diagnostic accuracy comparable to experienced gastroenterologists. Could be integrated into live endoscopy for on-the-spot infection prediction | [90] |
| Gastric endoscopic images | Deep CNN optimized for malignancy detection | Classification of gastric malignancies | CNN showed excellent discrimination between benign and malignant lesions. Real-time feedback capability demonstrated potential for improving early gastric cancer outcomes | [91] |
| Endoscopic images | CNN for cancer detection trained on large datasets | Automatic gastric cancer detection from endoscopy | High sensitivity and specificity achieved. Could act as an AI “second observer” for difficult-to-see lesions during live endoscopy | [92] |
| Endoscopic images | Deep learning model with Helicobacter pylori-specific feature extraction | Helicobacter pylori diagnosis using AI interpretation of mucosal patterns | Enabled real-time assessment of infection risk without biopsy, saving time and cost. Also reduced patient discomfort by avoiding invasive tests | [93] |
| GI tract images | Ensemble of deep CNN + texture feature classifiers | GI abnormality detection from diverse image types | Fusion of deep and handcrafted features improved robustness in challenging image conditions. Showed enhanced adaptability across multiple GI lesion types | [94] |
| Capsule endoscopy images | CNN tailored for parasitic structure detection | Hookworm detection in small bowel | Model achieved very high detection rate and reduced review time. Critical in endemic regions where hookworm infections are often overlooked | [95] |
| Endoscopic images | CNN optimized for mucosal pattern recognition | Diagnosis of Helicobacter pylori infection based on endoscopic images | Enhanced early infection detection through image-only methods. Potentially replaces biopsy in low-resource settings | [96] |
| Endoscopic images | CNN using hierarchical feature fusion | Lesion detection in GI endoscopy | Feature fusion helped distinguish similar lesions, improving accuracy. Suitable for deployment in routine screening environments | [97] |
| Endoscopic images | Deep CNN with blind-spot awareness | Early gastric cancer detection in real-time | The model avoided blind spots, a common issue in manual diagnosis, reducing miss rate for flat and depressed lesions. Demonstrated strong potential for real-time applications | [98] |
| Capsule endoscopy images | Neural network with small-bowel angiectasia training | Detection of GI angiectasia | Achieved accurate identification of angiectasias with high sensitivity. Provided a non-invasive method to detect obscure GI bleeding causes, potentially reducing missed diagnoses during manual video review | [99] |
| Capsule endoscopy images | CNN-based ulcer detection system | Automated ulcer detection in small bowel | The CNN model effectively distinguished ulcers from normal mucosa, even in poor lighting or with capsule motion. Enhanced early diagnosis of Crohn’s disease and non-steroidal anti-inflammatory drug-induced ulcers | [100] |
| Endoscopic images | CNN architecture tailored for Helicobacter pylori features | Evaluation of Helicobacter pylori infection status | The model achieved performance comparable to histology-based diagnosis. Useful for non-invasive, in-procedure assessment, aiding in same-visit treatment planning | [101] |
| Endoscopic images | CNN trained on gastric tumor categories | Gastric neoplasm classification (e.g., adenomas, carcinomas) | Outperformed junior endoscopists in differentiating benign vs malignant neoplasms. Accelerated workflow and supported biopsy decision-making | [102] |
| Endoscopic images | Deep learning classifier with ensemble optimization | GI disease recognition in endoscopic images | Handled a wide range of disease types with strong classification performance. Showed potential for integration into automated report generation systems | [103] |
| Capsule endoscopy images | Deep CNN with binary detection of ulceration | Detection of erosions and ulcers | Demonstrated over 90% accuracy in detecting clinically relevant ulcerative lesions. Reduced time spent reviewing wireless capsule endoscopy footage by half | [104] |
| Biopsy images | Deep CNN for histopathological image classification | Detection of diseases (e.g., gastritis, carcinoma) in GI biopsy samples | Model classified histopathological lesions with high fidelity. Useful in screening large volumes of slides, especially in resource-constrained pathology labs | [105] |
| Endoscopic images | Deep CNN architecture for visual pathology classification | Gastric pathology detection (e.g., erosions, polyps, cancer) | Showed robust multi-label classification capacity across common gastric pathologies. Aided endoscopists in characterizing mucosal abnormalities with high sensitivity | [106] |

| Barium X-ray radiography | CNN trained on radiograph features | Gastritis detection from double-contrast GI series | Brought deep learning to a classic imaging modality. Enabled detection of gastritis without the need for endoscopy, useful in areas lacking access to scopes | [107] |

| Endoscopic images | CNN classifier for esophageal cancer | Esophageal cancer diagnosis using endoscopy | CNN matched the diagnostic accuracy of experienced clinicians. Rapid lesion classification suggested potential use in screening and early detection programs | [108] |

| Capsule endoscopy images | Deep learning classifier trained with expert input | Small-bowel disease and variant classification | Demonstrated gastroenterologist-level accuracy across a wide range of small-bowel findings. Significantly improved efficiency and reliability in interpreting long capsule videos | [109] |

The reviewed literature in Table 2 highlights the versatility of these models, with applications spanning colonoscopy, gastroscopy, and capsule endoscopy. A key finding is the consistent achievement of high diagnostic accuracy and sensitivity, often surpassing that of generalist endoscopists, particularly for subtle lesions like laterally spreading tumors or small polyps. The table also reveals a trend toward using advanced architectures, including transfer learning from models like ResNet, attention mechanisms, and multi-fusion CNNs to improve performance, generalizability, and interpretability. Furthermore, many studies demonstrate the potential for real-time application and the ability to reduce inter-observer variability, supporting the integration of these AI systems into routine clinical workflows.

While this section has focused primarily on CNNs, traditional machine learning methods such as support vector machines, random forests, and gradient boosting machines have also played an important role in the assisted diagnosis of GI diseases. These methods typically rely on manually extracted image features (e.g., texture, color, and shape features), which are then passed to a classifier to categorize lesions. For example, some early studies used support vector machines to classify polyps in colonoscopy images or random forests to differentiate esophageal lesions[110,111]. However, compared to CNNs, these traditional methods do not learn features automatically and depend heavily on feature engineering. This limits their performance and generalization capabilities when dealing with large-scale, diverse endoscopic image data. With the rapid development of DL, CNNs have become the dominant approach in this field due to their powerful automatic feature learning capabilities, demonstrating superior performance in most image classification tasks. Nevertheless, in specific scenarios, such as when data are limited or when higher model interpretability is required, traditional machine learning methods still hold unique value, and their integration with DL models (e.g., feature fusion) is a promising avenue for future research.
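To make the contrast with learned features concrete, the following is a minimal sketch of the handcrafted-feature pipeline described above: a few color and intensity statistics are computed by hand and then passed to a classifier. It is illustrative only. The function names, the toy images, and the labels are hypothetical, and a nearest-centroid rule is used as a simple stand-in for the support vector machines and random forests used in the cited studies.

```python
from statistics import mean, pvariance


def handcrafted_features(image):
    """Compute hand-designed features from an RGB image.

    image: list of rows, each row a list of (r, g, b) tuples in 0-255.
    Returns [mean_r, mean_g, mean_b, intensity_variance].
    """
    pixels = [px for row in image for px in row]
    channel_means = [mean(px[c] for px in pixels) for c in range(3)]
    gray = [sum(px) / 3 for px in pixels]
    # Variance of intensity serves as a crude texture descriptor.
    return channel_means + [pvariance(gray)]


def fit_centroids(features, labels):
    """Average the feature vectors of each class (stand-in classifier)."""
    groups = {}
    for feature, label in zip(features, labels):
        groups.setdefault(label, []).append(feature)
    return {label: [mean(col) for col in zip(*fs)] for label, fs in groups.items()}


def predict(centroids, feature):
    """Assign the label of the closest class centroid."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: sq_dist(centroids[label], feature))


# Toy 2x2 "images" (hypothetical data, not real endoscopic frames).
reddish = [[(200, 40, 40)] * 2] * 2
pale = [[(220, 200, 190)] * 2] * 2
train = [handcrafted_features(reddish), handcrafted_features(pale)]
centroids = fit_centroids(train, ["reddish", "pale"])
query = [[(190, 50, 45)] * 2] * 2
label = predict(centroids, handcrafted_features(query))
```

Every feature here had to be chosen by hand, which is the feature-engineering burden that CNNs remove by learning features directly from pixels.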

Lesion segmentation and disease severity assessment are essential components of GI endoscopic analysis, especially in the management of neoplastic and inflammatory conditions[101,112-114]. While detection tasks aim to localize the presence of lesions, segmentation goes a step further by precisely delineating their boundaries, enabling accurate measurements of lesion size, shape, and extent. CNNs, particularly those based on fully convolutional architectures such as U-Net, SegNet, and DeepLab, have been widely adopted for this purpose in GI endoscopy[101,115-118].
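As a rough illustration of how a segmentation output becomes a measurement, the sketch below takes a binary lesion mask and derives its area and bounding box, and compares a predicted mask with a reference mask using the Dice coefficient. The function names are hypothetical, and the fixed `mm_per_pixel` calibration is a simplifying assumption: in real endoscopy, scale varies with the distance and angle between the scope and the mucosa.

```python
def lesion_area_mm2(mask, mm_per_pixel):
    """Area of a binary mask (list of rows of 0/1) under an assumed fixed scale."""
    n_pixels = sum(sum(row) for row in mask)
    return n_pixels * mm_per_pixel ** 2


def bounding_box(mask):
    """Return (min_row, min_col, max_row, max_col) of the lesion, or None if empty."""
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))


def dice(pred, truth):
    """Dice coefficient: 2 * |pred AND truth| / (|pred| + |truth|)."""
    intersection = sum(p & t for pr, tr in zip(pred, truth) for p, t in zip(pr, tr))
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    return 1.0 if total == 0 else 2 * intersection / total


predicted = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
reference = [
    [0, 0, 0, 0],
    [0, 1, 1, 1],
    [0, 1, 1, 1],
    [0, 0, 0, 0],
]
area = lesion_area_mm2(predicted, mm_per_pixel=0.5)
overlap = dice(predicted, reference)
```

The Dice coefficient rewards overlap between the two masks and equals 1 only when they coincide exactly, which is why it is commonly reported for segmentation tasks.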

In colorectal endoscopy, CNN-based segmentation models are instrumental for assessing polyps identified during colonoscopy. Accurate segmentation allows for quantifying polyp dimensions, estimating surface area, and distinguishing sessile or flat morphologies, which directly influence clinical decisions regarding resection strategy. In particular, U-Net and its 3D variants have demonstrated excellent performance in segmenting polyps from both 2D frames and volumetric colonoscopy data[119]. Furthermore, models trained with pixel-level annotations enable high-precision margin detection, which is critical for ensuring complete removal during endoscopic mucosal resection or submucosal dissection.

In upper GI endoscopy, CNNs have been developed to segment early gastric cancer, esophageal neoplasms, and gastric ulcers. These models help differentiate subtle cancerous changes from inflamed or atrophic mucosa, which often appear visually similar. CNNs have also been adapted to multimodal imaging inputs[120,121], such as combining white-light endoscopy with narrow-band imaging or magnifying chromoendoscopy, further improving segmentation accuracy in complex cases.

For IBD, CNN-based models can assess disease severity by segmenting inflamed vs normal mucosa and scoring the presence of ulcerations, bleeding, and edema[99,114,122]. Automated scoring systems trained on standard indices, such as the Mayo endoscopic subscore or the Ulcerative Colitis Endoscopic Index of Severity, can classify disease activity levels with high concordance to expert reviewers[123]. This allows for objective, reproducible evaluation of mucosal healing, a key target in IBD therapy.

Moreover, CNNs have been successfully applied to capsule endoscopy, where manual review of the more than 50000 frames generated per procedure is impractical[100,109,124]. Segmentation models in this context automatically identify bleeding sites, erosions, and vascular lesions, dramatically reducing review time. Some systems further incorporate anatomical localization, helping clinicians pinpoint the precise site of pathology within the small bowel. Table 3 lists studies that used CNNs for lesion segmentation and severity assessment in GI diseases[125-136].

| Data | Architecture | Application | Key findings | Ref. |

| Double-balloon endoscopy images | Deep CNN with lesion segmentation and severity scoring | Automated detection and severity grading of Crohn’s ulcers from double-balloon endoscopy | The model accurately segmented ulcers and assessed their severity, enabling objective Crohn’s disease monitoring. Outperformed traditional scoring methods and showed potential to reduce inter-observer variability | [125] |

| Capsule endoscopy images | CNN segmentation network trained on angiodysplasias | Automated segmentation of vascular lesions in small bowel images | Provided accurate lesion boundary identification for angiodysplasias, facilitating hemorrhage risk stratification. Supports quicker and more consistent diagnosis compared to manual review | [126] |

| Colon capsule endoscopy | CNN trained to detect blood and mucosal lesions | Simultaneous detection and segmentation of bleeding and mucosal abnormalities | Enabled precise localization of lesions and bleeding points in colon capsule footage. Improved lesion coverage and reduced diagnostic delay | [127] |

| Endoscopic images | CNN trained to grade UC severity | Automated assessment of UC severity from colonoscopy | Model achieved expert-level grading accuracy across multiple severity stages. Significantly reduced inter-observer bias, suggesting suitability for clinical trial endpoints | [128] |

| Colonoscopy images | CNN-based model for pattern recognition | Differentiation of Crohn’s disease vs UC from colonoscopy | The system accurately distinguished UC and Crohn’s disease patterns, providing real-time decision support for disease type classification | [114] |

| Endoscopic images | Deep learning classifier for depth prediction | Predicting submucosal invasion in gastric neoplasms | Model reliably estimated invasion depth, reducing unnecessary surgical intervention. Useful in pre-treatment risk stratification | [129] |

| Endoscopic images | CNN for multi-feature prediction | Prediction of early gastric cancer, invasion depth, and differentiation | AI model outperformed experts in cancer invasion depth and differentiation. Enabled non-invasive yet accurate diagnosis during endoscopy | [130] |

| Capsule endoscopy images | Deep neural network tailored to stricture detection | Detection of Crohn’s-related intestinal strictures | The model improved the detection of strictures, which are often missed in manual review. Accelerates diagnosis and may guide therapeutic decisions | [131] |

| Confocal laser endomicroscopy images | CNN trained for mucosal healing assessment | Confirmation of mucosal healing in Crohn’s disease | Enabled fine-grained assessment of healing vs inflammation. Provided high-resolution insight for assessing treatment efficacy | [132] |

| Endoscopic images | AI-assisted classification model | UC disease activity scoring using Mayo classification | Provided consistent and reproducible activity scores. Reduced assessment variability, improving clinical and research utility | [133] |

| Endoscopic images | CNN vs human graders comparison | Grading UC severity from colonoscopy | CNN showed equal or superior performance to human reviewers in grading UC. Suggested for use in high-throughput settings or trials | [134] |

| Capsule endoscopy videos | CNN for quantitative feature extraction | Quantitative analysis of celiac disease lesions | Model extracted and quantified villous atrophy and mucosal abnormalities. Offered an objective metric to monitor disease progression | [135] |

| Conventional endoscopy images | CNN trained on histological labels | Predicting invasion depth of gastric cancer | CNN-assisted depth prediction provided decision support for therapy planning. Could reduce need for unnecessary biopsies | [136] |

Based on the studies outlined in Table 3, CNNs are instrumental in advancing from simple lesion detection to precise segmentation and objective disease severity assessment. The reviewed research demonstrates the use of segmentation-focused architectures to delineate lesion boundaries, enabling quantitative measurements of ulcers and vascular lesions. A significant trend is the development of models for automated severity grading of IBDs like ulcerative colitis and Crohn’s disease, with results showing high concordance to expert-level assessments and a reduction in inter-observer bias. This capability is further extended to predicting submucosal invasion in gastric neoplasms, which can aid in pre-treatment stratification and reduce unnecessary procedures. The applications in capsule endoscopy also show that CNNs are effectively used to segment and localize lesions, thereby dramatically reducing the manual review time for clinicians.
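Concordance between automated and expert severity grades is often summarized with an agreement statistic. The sketch below computes a quadratic weighted Cohen's kappa for ordinal grades such as the Mayo endoscopic subscore (0 to 3). This metric is offered as one common choice, not necessarily the one used in the cited studies, and the example scores are invented for illustration.

```python
def quadratic_weighted_kappa(rater_a, rater_b, n_classes=4):
    """Agreement between two lists of ordinal grades in 0..n_classes-1.

    Disagreements are penalized by the squared distance between grades,
    so confusing grade 0 with grade 3 costs more than 2 with 3.
    """
    n = len(rater_a)
    observed = [[0] * n_classes for _ in range(n_classes)]
    for a, b in zip(rater_a, rater_b):
        observed[a][b] += 1
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n_classes)) for j in range(n_classes)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(n_classes):
        for j in range(n_classes):
            weight = (i - j) ** 2 / (n_classes - 1) ** 2
            weighted_observed += weight * observed[i][j]
            # Expected count if the two raters were independent.
            weighted_expected += weight * hist_a[i] * hist_b[j] / n
    if weighted_expected == 0:
        return 1.0  # both raters gave one identical grade to every case
    return 1.0 - weighted_observed / weighted_expected


# Hypothetical grades for four cases: expert vs model.
expert_scores = [0, 1, 2, 3]
model_scores = [0, 1, 2, 2]
kappa = quadratic_weighted_kappa(expert_scores, model_scores)
```

A value of 1 indicates perfect agreement and 0 indicates agreement no better than chance, which makes the statistic more informative than raw accuracy when grades are unevenly distributed.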

A major innovation in GI endoscopy is the integration of CNNs into real-time clinical workflows. Modern computer-aided detection and diagnosis systems powered by CNNs can process high-resolution endoscopic videos in real time, providing immediate feedback during procedures[133,137,138]. This includes flagging potential lesions, highlighting abnormal regions, and suggesting histological risk levels. These tools support endoscopists by improving the detection rate, particularly for subtle or diminutive lesions, and reducing cognitive fatigue during long procedures. Real-time assistance has been shown to increase the adenoma detection rate, a key quality indicator in colonoscopy, thereby directly contributing to CRC prevention[139,140]. Furthermore, the deployment of real-time CNNs in upper GI procedures, such as esophagogastroduodenoscopy and endoscopic ultrasound-guided interventions, is increasingly common[141]. In endoscopic ultrasound, CNNs aid in identifying anatomical structures, differentiating cystic lesions from solid ones, and supporting fine-needle aspiration targeting[142,143]. In capsule endoscopy, CNNs enable rapid triage of massive image volumes, highlighting frames with suspected pathology for physician review[144,145]. This triage has been shown to reduce interpretation time from hours to minutes while maintaining diagnostic integrity. CNNs are also being explored for robotic endoscopy and autonomous navigation systems, where real-time tissue recognition and decision-making will be essential for safe, automated procedures[146,147].

However, many studies in this domain are based on relatively small or imbalanced datasets, which can limit the robustness of the reported performance, and the development of models validated on diverse, multi-center data remains a key challenge. In addition, although these studies consistently report high performance, many are retrospective and lack prospective validation in real-world clinical workflows. Variability in endoscope imaging settings and lighting conditions can also affect the reported results and needs to be addressed in future research.

Real-time integration also requires specific hardware and software considerations. Real-time inference necessitates efficient processing, often leveraging dedicated hardware such as graphics processing units or specialized edge devices integrated directly into the endoscopy suite. Minimizing computational latency is paramount to ensure the AI’s feedback is synchronized with the endoscopist’s movements, thereby preventing workflow disruption and maximizing clinical utility.

The field’s translational maturity is evidenced by the emergence of commercially available systems for real-time polyp detection during colonoscopy that have received regulatory authorization, such as clearance from the United States Food and Drug Administration and the Conformité Européenne mark in Europe. Prominent examples include the GI Genius™ (Medtronic, MN, United States), CAD EYE™ (Fujifilm, Japan), and CADDIE™ (Odin Medical/Olympus, PA, United States) systems. Furthermore, professional societies such as the European Society of Gastrointestinal Endoscopy and the American Society for Gastrointestinal Endoscopy have issued position statements and guidelines on the expected value and proper clinical integration of AI, which are crucial for defining performance metrics and fostering adoption[148,149]. Table 4 lists representative studies demonstrating the use of CNN-based systems for real-time AI support and workflow integration in GI endoscopy. The integration of these models into commercial platforms and regulatory-approved devices marks an important step toward clinical translation[150-157].

| Data | Architecture | Application | Key findings | Ref. |

| Gastroscopy images | Real-time anatomical classification with CNN | Real-time anatomical recognition during gastroscopy procedures | CNN accurately classified anatomical positions in real-time, reducing mislabeling and improving procedural documentation. Enabled smoother workflow integration with minimal latency | [150] |

| Gastroscopy videos | Frame-wise CNN classification | Automated disease detection during endoscopy video review | The system provided real-time frame classification, improving detection of GI abnormalities in long video sequences and assisting in efficient case triage | [151] |

| White-light endoscopy | CNN for real-time Helicobacter pylori status | Immediate assessment of Helicobacter pylori infection during endoscopy | Real-time feedback from the CNN allowed on-the-spot therapeutic decision-making and eliminated delays caused by biopsy processing | [113] |

| Esophagogastroduodenoscopy videos | CNN-based detection system integrated with endoscope | Real-time detection of gastroesophageal varices | The system was validated across multiple centers and significantly reduced miss rates of varices in real-time, enhancing early intervention | [152] |

| Colonoscopic images | Deep CNN classifier for anatomical site recognition | Automated real-time anatomical classification of colon images | CNN correctly labeled anatomical sites, reducing reliance on user memory and ensuring consistent documentation. Improved novice performance | [153] |

| Upper GI endoscopy | CNN detection assistant in randomized controlled trial | Detection of gastric neoplasms during routine procedures | A deep learning-based system reduced miss rate of gastric neoplasms significantly in a randomized controlled trial. Validated real-world clinical benefit | [154] |

| Upper GI anatomy images | Multi-task CNN model | Simultaneous detection of anatomical landmarks and structures | Model performed both classification and segmentation, improving navigation and assisting less experienced users in orientation | [155] |

| Esophagogastroduodenoscopy images | CNN trained on large anatomical dataset | Real-time classification of esophagogastroduodenoscopy anatomy | CNN classified images with expert-level accuracy, aiding report generation and training in real-time | [141] |

| GI endoscopy videos | CNN vs global feature comparison | Real-time disease detection efficiency benchmarking | CNNs proved significantly more accurate than traditional global features. Demonstrated readiness for real-time clinical deployment | [156] |

| GI tract videos | CNN with automated reporting module | End-to-end disease detection and report generation | Automatically generated reports based on real-time CNN classification, reducing documentation time and enhancing standardization | [157] |

The studies presented in Table 4 demonstrate a clear trend toward developing and validating systems for immediate application during procedures, encompassing tasks from real-time anatomical classification and recognition to automated disease detection and real-time assessment of Helicobacter pylori status. A significant finding across these studies is the consistent improvement in clinical efficiency, including reduced documentation time, enhanced detection rates for subtle abnormalities, and improved procedural documentation. The table also underscores the expanding application of these real-time systems to various procedures, such as gastroscopy and esophagogastroduodenoscopy, marking a key step toward the practical, real-world clinical translation of CNN-based tools.
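The latency requirement behind real-time feedback can be made concrete with a simple frame-budget check: at a given video frame rate, each frame must be processed within 1000 / fps milliseconds for the overlay to keep pace with the video. The sketch below is a hypothetical benchmark harness. The `infer` callable stands in for whatever model is deployed, and the 25 frames-per-second rate is an assumed value, not a figure from the studies reviewed here.

```python
import time


def frame_budget_ms(fps):
    """Maximum per-frame processing time (ms) that keeps up with the video."""
    return 1000.0 / fps


def measure_latency_ms(infer, frames):
    """Run infer on each frame and return wall-clock latencies in ms."""
    latencies = []
    for frame in frames:
        start = time.perf_counter()
        infer(frame)
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def fraction_within_budget(latencies, budget_ms):
    """Share of frames processed within the budget."""
    return sum(lat <= budget_ms for lat in latencies) / len(latencies)


def dummy_infer(frame):
    """Placeholder for a real model; it only sums the pixel values."""
    return sum(frame)


budget = frame_budget_ms(fps=25)  # assumed frame rate
frames = [[0] * 1000 for _ in range(20)]  # synthetic stand-in frames
latencies = measure_latency_ms(dummy_infer, frames)
share_on_time = fraction_within_budget(latencies, budget)
```

In practice, the worst-case or high-percentile latency matters more than the average, because occasional slow frames are what make an overlay visibly lag behind the endoscopist's movements.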

In our view, a major barrier to the clinical implementation of CNNs in GI endoscopy lies in the limitations related to data and model performance[158,159]. High-quality, annotated endoscopic image datasets are essential for training effective CNN models, yet they remain scarce due to data privacy concerns, the high cost of expert labeling, and inconsistencies across institutions. As a result, models are often trained on single-center datasets and may not generalize well to broader patient populations or different equipment settings. Moreover, variations in image quality, lighting conditions, bowel preparation, and endoscopic device types further challenge the robustness of these models in real-world applications[159,160]. Even when trained on adequate datasets, CNNs may struggle to adapt to new clinical environments without careful validation across diverse settings. To address this, techniques such as domain adaptation and federated learning are being explored. Domain adaptation helps models perform well on new data distributions, while federated learning enables collaborative training across multiple institutions without sharing sensitive patient data, thus improving generalizability while protecting privacy.
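The core aggregation step of federated learning can be sketched in a few lines. In the widely used federated averaging scheme, each institution trains locally and sends only its model parameters to a coordinator, which averages them weighted by local dataset size. The example below assumes parameters flattened into plain lists, and the site values are invented. It omits local training, communication, and any privacy mechanisms such as secure aggregation.

```python
def federated_average(site_weights, site_sizes):
    """Weighted average of per-site parameter vectors.

    site_weights: list of parameter lists, one per institution.
    site_sizes: number of local training samples at each institution.
    Only parameters are combined; no images leave the sites.
    """
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]


# Two hypothetical hospitals with different amounts of local data.
hospital_a = [1.0, 2.0]
hospital_b = [3.0, 4.0]
global_weights = federated_average([hospital_a, hospital_b], [100, 300])
```

Weighting by sample count means the larger site contributes more to the shared model, which is one reason differences in data distribution between sites still need to be examined after aggregation.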

Another critical issue is the interpretability of CNN predictions[161,162]. Although these models can match or exceed human-level performance, their “black-box” nature makes it difficult for clinicians to understand or trust the decision-making process, and the absence of explainable outputs may reduce user confidence and hinder clinical adoption. Explainable AI methods are therefore crucial. Techniques such as Grad-CAM, layer-wise relevance propagation, and SHapley Additive exPlanations can provide visual or numerical explanations for model decisions, making the AI’s reasoning more transparent to clinicians. Additionally, the practical integration of CNNs into real-time endoscopic workflows presents technical hurdles, including computational latency, interface design, and the risk of workflow disruption. To overcome these barriers, future research must focus on multi-center data collaboration, the development of explainable AI techniques, and close interdisciplinary cooperation to ensure that CNN-based tools are not only accurate but also usable and trustworthy in clinical settings.
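To show what a Grad-CAM explanation computes, the sketch below implements its final step on precomputed inputs. Given the feature maps of a convolutional layer and the gradients of the target class score with respect to them, each channel is weighted by its spatially averaged gradient, the weighted maps are summed, and negative values are clipped. Obtaining the activations and gradients from a trained network requires a DL framework and is outside this example. The small arrays are invented.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from one layer's activations and gradients.

    activations, gradients: lists of K channels, each an H x W list of floats.
    Returns an H x W heatmap: ReLU(sum_k alpha_k * A_k), where alpha_k is the
    spatial mean of the gradient for channel k.
    """
    channel_weights = [
        sum(map(sum, grad)) / (len(grad) * len(grad[0])) for grad in gradients
    ]
    height, width = len(activations[0]), len(activations[0][0])
    heatmap = [[0.0] * width for _ in range(height)]
    for weight, act in zip(channel_weights, activations):
        for r in range(height):
            for c in range(width):
                heatmap[r][c] += weight * act[r][c]
    # ReLU keeps only regions with a positive influence on the class score.
    return [[max(0.0, value) for value in row] for row in heatmap]


# Two 2x2 channels: the first supports the class, the second opposes it.
activations = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
gradients = [
    [[1.0, 1.0], [1.0, 1.0]],
    [[-1.0, -1.0], [-1.0, -1.0]],
]
heatmap = grad_cam(activations, gradients)
```

In a clinical viewer, the heatmap would be upsampled to the frame size and overlaid on the endoscopic image so that the clinician can see which mucosal region drove the prediction.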

In recent years, transformer-based models, originally developed for natural language processing, have also gained significant attention in medical image analysis. Architectures such as the vision transformer[163] and its medical variants (e.g., MedT) have shown promising results in both classification and segmentation tasks. Unlike CNNs, which rely on local convolutional operations, transformers use a self-attention mechanism to capture long-range dependencies across the entire image[164]. This capability makes them particularly well-suited for tasks where global context is crucial. While their application in GI endoscopy is still emerging, the performance of models such as TransUNet, which combines a U-Net-like structure with a transformer encoder, demonstrates their potential to address some of the limitations of pure CNN models[165]. Future research is expected to further explore the integration of transformer-based models to enhance the accuracy and robustness of AI-assisted diagnosis in GI endoscopy.
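The self-attention mechanism mentioned above can be sketched as scaled dot-product attention: each image patch forms a weighted average over all patches, with weights given by a softmax over query-key similarities. In an actual vision transformer, the queries, keys, and values are learned linear projections of patch embeddings. Here they are passed in directly as small invented vectors to keep the example self-contained.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries, keys: lists of d-dimensional vectors (one per patch).
    values: list of vectors (one per patch).
    Every output row mixes information from all patches, near or far.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Two patches with identical keys receive equal attention weights.
queries = [[1.0, 0.0]]
keys = [[1.0, 1.0], [1.0, 1.0]]
values = [[0.0, 2.0], [4.0, 6.0]]
attended = self_attention(queries, keys, values)
```

Because the attention weights span every patch, a single layer can relate distant image regions, whereas a convolution sees only a local neighborhood and needs many stacked layers to reach the same range.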

CNNs have demonstrated significant potential to advance GI endoscopy by enhancing the accuracy and efficiency of lesion detection and classification. These models can support clinicians by reducing diagnostic errors and improving the consistency of endoscopic assessments. However, several barriers hinder their widespread clinical adoption, including limited and imbalanced datasets, poor generalizability across clinical settings, lack of model interpretability, and technical issues in real-time deployment. Ethical and regulatory concerns, such as data privacy and bias, also require attention. To address these challenges, future research should focus on developing explainable and robust models, conducting external and prospective validations, and fostering collaborations across institutions. Standardized reporting and alignment with clinical needs will be crucial for translating CNN-based systems into practice. While CNNs are not yet fully integrated into clinical GI workflows, they represent a promising tool that could transform endoscopic diagnosis and patient care. With continued improvements in hardware and enhanced interpretability, CNN-based assistance is likely to become a standard component of next-generation intelligent endoscopy systems.

| 1. | Rogler G, Singh A, Kavanaugh A, Rubin DT. Extraintestinal Manifestations of Inflammatory Bowel Disease: Current Concepts, Treatment, and Implications for Disease Management. Gastroenterology. 2021;161:1118-1132. |

| 2. | Wang Y, Huang Y, Chase RC, Li T, Ramai D, Li S, Huang X, Antwi SO, Keaveny AP, Pang M. Global Burden of Digestive Diseases: A Systematic Analysis of the Global Burden of Diseases Study, 1990 to 2019. Gastroenterology. 2023;165:773-783.e15. |

| 3. | Huang RJ, Hwang JH. Improving the Early Diagnosis of Gastric Cancer. Gastrointest Endosc Clin N Am. 2021;31:503-517. |

| 4. | Spiceland CM, Lodhia N. Endoscopy in inflammatory bowel disease: Role in diagnosis, management, and treatment. World J Gastroenterol. 2018;24:4014-4020. |

| 5. | Moran CP, Neary B, Doherty GA. Endoscopic evaluation in diagnosis and management of inflammatory bowel disease. World J Gastrointest Endosc. 2016;8:723-732. |

| 6. | Kim SH, Chun HJ. Capsule Endoscopy: Pitfalls and Approaches to Overcome. Diagnostics (Basel). 2021;11:1765. |

| 7. | Ferrari C, Tadros M. Enhancing the Quality of Upper Gastrointestinal Endoscopy: Current Indicators and Future Trends. Gastroenterol Insights. 2024;15:1-18. |

| 8. | Gimeno-García AZ, Hernández-Pérez A, Nicolás-Pérez D, Hernández-Guerra M. Artificial Intelligence Applied to Colonoscopy: Is It Time to Take a Step Forward? Cancers (Basel). 2023;15:2193. |

| 9. | Ali H, Muzammil MA, Dahiya DS, Ali F, Yasin S, Hanif W, Gangwani MK, Aziz M, Khalaf M, Basuli D, Al-Haddad M. Artificial intelligence in gastrointestinal endoscopy: a comprehensive review. Ann Gastroenterol. 2024;37:133-141. |

| 10. | Lewis JR, Pathan S, Kumar P, Dias CC. AI in Endoscopic Gastrointestinal Diagnosis: A Systematic Review of Deep Learning and Machine Learning Techniques. IEEE Access. 2024;12:163764-163786. |

| 11. | Mohan BP, Khan SR, Kassab LL, Ponnada S, Chandan S, Ali T, Dulai PS, Adler DG, Kochhar GS. High pooled performance of convolutional neural networks in computer-aided diagnosis of GI ulcers and/or hemorrhage on wireless capsule endoscopy images: a systematic review and meta-analysis. Gastrointest Endosc. 2021;93:356-364.e4. |

| 12. | Choi J, Shin K, Jung J, Bae HJ, Kim DH, Byeon JS, Kim N. Convolutional Neural Network Technology in Endoscopic Imaging: Artificial Intelligence for Endoscopy. Clin Endosc. 2020;53:117-126. |

| 13. | Ma H, Wang L, Chen Y, Tian L. Convolutional neural network-based artificial intelligence for the diagnosis of early esophageal cancer based on endoscopic images: A meta-analysis. Saudi J Gastroenterol. 2022;28:332-340. |

| 14. | Yan T, Wong PK, Qin YY. Deep learning for diagnosis of precancerous lesions in upper gastrointestinal endoscopy: A review. World J Gastroenterol. 2021;27:2531-2544. |

| 15. | Zhao Y, Hu B, Wang Y, Yin X, Jiang Y, Zhu X. Identification of gastric cancer with convolutional neural networks: a systematic review. Multimed Tools Appl. 2022;81:11717-11736. |

| 16. | Weigt J, Repici A, Antonelli G, Afifi A, Kliegis L, Correale L, Hassan C, Neumann H. Performance of a new integrated computer-assisted system (CADe/CADx) for detection and characterization of colorectal neoplasia. Endoscopy. 2022;54:180-184. |

| 17. | Attallah O, Sharkas M. GASTRO-CADx: a three stages framework for diagnosing gastrointestinal diseases. PeerJ Comput Sci. 2021;7:e423. |

| 18. | Raju ASN, Venkatesh K, Gatla RK, Eid MM, Flah A, Slanina Z, Ghaly RNR. Expedited Colorectal Cancer Detection Through a Dexterous Hybrid CADx System With Enhanced Image Processing and Augmented Polyp Visualization. IEEE Access. 2025;13:17524-17553. |

| 19. | Ali H, Sharif M, Yasmin M, Rehmani MH, Riaz F. A survey of feature extraction and fusion of deep learning for detection of abnormalities in video endoscopy of gastrointestinal-tract. Artif Intell Rev. 2020;53:2635-2707. |

| 20. | Mu G, Zhu Y, Niu Z, Li H, Wu L, Wang J, Luo R, Hu X, Li Y, Zhang J, Hu S, Li C, Ding S, Yu H. Expert-level classification of gastritis by endoscopy using deep learning: a multicenter diagnostic trial. Endosc Int Open. 2021;9:E955-E964. |

| 21. | Li M, Jiang Y, Zhang Y, Zhu H. Medical image analysis using deep learning algorithms. Front Public Health. 2023;11:1273253. |

| 22. | Escobar J, Sanchez K, Hinojosa C, Arguello H, Castillo S. Accurate Deep Learning-based Gastrointestinal Disease Classification via Transfer Learning Strategy. In: 2021 XXIII Symposium on Image, Signal Processing and Artificial Vision (STSIVA); 2021 Sept 15-17; Popayán, Colombia. IEEE: NY, United States, 2021: 1-5. |

| 23. | Su Q, Wang F, Chen D, Chen G, Li C, Wei L. Deep convolutional neural networks with ensemble learning and transfer learning for automated detection of gastrointestinal diseases. Comput Biol Med. 2022;150:106054. |

| 24. | Hicks SA, Strümke I, Thambawita V, Hammou M, Riegler MA, Halvorsen P, Parasa S. On evaluation metrics for medical applications of artificial intelligence. Sci Rep. 2022;12:5979. |

| 25. | Kaur G, Saini S. Comparative analysis of RMSE and MAP metrics for evaluating CNN and LSTM models. AIP Conf Proc. 2024;3121:040003. |

| 26. | Listyalina L, Mustiadi I, Dharmawan DA. Joint Dice and Intersection over Union Losses for Deep Optical Disc Segmentation. In: 2020 3rd International Conference on Biomedical Engineering (IBIOMED); 2020 Oct 6-8; Yogyakarta, Indonesia. IEEE: NY, United States, 2020: 49-54. |

| 27. | Rahman MA, Wang Y. Optimizing Intersection-Over-Union in Deep Neural Networks for Image Segmentation. In: Bebis G, Boyle R, Parvin B, Koracin D, Porikli F, Skaff S, Entezari A, Min J, Iwai D, Sadagic A, Scheidegger C, Isenberg T |

| 28. | Bradshaw TJ, Huemann Z, Hu J, Rahmim A. A Guide to Cross-Validation for Artificial Intelligence in Medical Imaging. Radiol Artif Intell. 2023;5:e220232. |

| 29. | Sejuti ZA, Islam MS. A hybrid CNN-KNN approach for identification of COVID-19 with 5-fold cross validation. Sens Int. 2023;4:100229. |

| 30. | Smedsrud PH, Thambawita V, Hicks SA, Gjestang H, Nedrejord OO, Næss E, Borgli H, Jha D, Berstad TJD, Eskeland SL, Lux M, Espeland H, Petlund A, Nguyen DTD, Garcia-Ceja E, Johansen D, Schmidt PT, Toth E, Hammer HL, de Lange T, Riegler MA, Halvorsen P. Kvasir-Capsule, a video capsule endoscopy dataset. Sci Data. 2021;8:142. |

| 31. | Jaspers TJM, Boers TGW, Kusters CHJ, Jong MR, Jukema JB, de Groof AJ, Bergman JJ, de With PHN, van der Sommen F. Robustness evaluation of deep neural networks for endoscopic image analysis: Insights and strategies. Med Image Anal. 2024;94:103157. |

| 32. | Ibrahim H, Liu X, Rivera SC, Moher D, Chan AW, Sydes MR, Calvert MJ, Denniston AK. Reporting guidelines for clinical trials of artificial intelligence interventions: the SPIRIT-AI and CONSORT-AI guidelines. Trials. 2021;22:11. |

| 33. | Taye MM. Theoretical Understanding of Convolutional Neural Network: Concepts, Architectures, Applications, Future Directions. Computation. 2023;11:52. |

| 34. | Jia F, Lei Y, Lin J, Zhou X, Lu N. Deep neural networks: A promising tool for fault characteristic mining and intelligent diagnosis of rotating machinery with massive data. Mech Syst Signal Process. 2016;72-73:303-315. |

| 35. | Shin HC, Roth HR, Gao M, Lu L, Xu Z, Nogues I, Yao J, Mollura D, Summers RM. Deep Convolutional Neural Networks for Computer-Aided Detection: CNN Architectures, Dataset Characteristics and Transfer Learning. IEEE Trans Med Imaging. 2016;35:1285-1298. |

| 36. | Du W, Rao N, Liu D, Jiang H, Luo C, Li Z, Gan T, Zeng B. Review on the Applications of Deep Learning in the Analysis of Gastrointestinal Endoscopy Images. IEEE Access. 2019;7:142053-142069. |

| 37. | Lea C, Flynn MD, Vidal R, Reiter A, Hager GD. Temporal Convolutional Networks for Action Segmentation and Detection. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2017 Jul 21-26; Honolulu, HI, United States. IEEE: NY, United States, 2017: 1003-1012. |

| 38. | Tan J, Gao Y, Liang Z, Cao W, Pomeroy MJ, Huo Y, Li L, Barish MA, Abbasi AF, Pickhardt PJ. 3D-GLCM CNN: A 3-Dimensional Gray-Level Co-Occurrence Matrix-Based CNN Model for Polyp Classification via CT Colonography. IEEE Trans Med Imaging. 2020;39:2013-2024. |

| 39. | Igarashi S, Sasaki Y, Mikami T, Sakuraba H, Fukuda S. Anatomical classification of upper gastrointestinal organs under various image capture conditions using AlexNet. Comput Biol Med. 2020;124:103950. |

| 40. | Nouman Noor M, Nazir M, Khan SA, Song O, Ashraf I. Efficient Gastrointestinal Disease Classification Using Pretrained Deep Convolutional Neural Network. Electronics. 2023;12:1557. |

| 41. | Yang J, Ou Y, Chen Z, Liao J, Sun W, Luo Y, Luo C. A Benchmark Dataset of Endoscopic Images and Novel Deep Learning Method to Detect Intestinal Metaplasia and Gastritis Atrophy. IEEE J Biomed Health Inform. 2023;27:7-16. |

| 42. | Ye B, Shu Z, Wang B, Wang S, Fu Y, Zhang L, Qi B, Dwivedi AK, Liu S. Attention Mechanism Guided SE + ResNet-H Model for Gastrointestinal Endoscopy Image Classification. IEEE Trans Instrum Meas. 2024;73:1-13. |

| 43. | Beeche C, Singh JP, Leader JK, Gezer S, Oruwari AP, Dansingani KK, Chhablani J, Pu J. Super U-Net: a modularized generalizable architecture. Pattern Recognit. 2022;128:108669. |

| 44. | Souaidi M, Lafraxo S, Kerkaou Z, El Ansari M, Koutti L. A Multiscale Polyp Detection Approach for GI Tract Images Based on Improved DenseNet and Single-Shot Multibox Detector. Diagnostics (Basel). 2023;13:733. |

| 45. | Petit O, Thome N, Rambour C, Themyr L, Collins T, Soler L. U-Net Transformer: Self and Cross Attention for Medical Image Segmentation. In: Machine Learning in Medical Imaging: 12th International Workshop, MLMI 2021, Held in Conjunction with MICCAI 2021, 2021 Sept 27; Strasbourg, France. Berlin: Springer, 2021: 267-276. |

| 46. | Vezakis IA, Georgas K, Fotiadis D, Matsopoulos GK. EffiSegNet: Gastrointestinal Polyp Segmentation through a Pre-Trained EfficientNet-based Network with a Simplified Decoder. Annu Int Conf IEEE Eng Med Biol Soc. 2024;2024:1-4. |

| 47. | Zhang X, Yang S, Luo W, Gao L, Zhang W. Video Compression Artifact Reduction by Fusing Motion Compensation and Global Context in a Swin-CNN Based Parallel Architecture. In: Thirty-Seventh AAAI Conference on Artificial Intelligence, AAAI 2023, 2023 Feb 7-14; Washington, DC, United States. AAAI Press: CA, United States, 2023: 3489-3497. |

| 48. | Ji Z, Sun H, Yuan N, Zhang H, Sheng J, Zhang X, Ganchev I. BGRD-TransUNet: A Novel TransUNet-Based Model for Ultrasound Breast Lesion Segmentation. IEEE Access. 2024;12:31182-31196. |

| 49. | Wagner SJ, Reisenbüchler D, West NP, Niehues JM, Zhu J, Foersch S, Veldhuizen GP, Quirke P, Grabsch HI, van den Brandt PA, Hutchins GGA, Richman SD, Yuan T, Langer R, Jenniskens JCA, Offermans K, Mueller W, Gray R, Gruber SB, Greenson JK, Rennert G, Bonner JD, Schmolze D, Jonnagaddala J, Hawkins NJ, Ward RL, Morton D, Seymour M, Magill L, Nowak M, Hay J, Koelzer VH, Church DN; TransSCOT consortium, Matek C, Geppert C, Peng C, Zhi C, Ouyang X, James JA, Loughrey MB, Salto-Tellez M, Brenner H, Hoffmeister M, Truhn D, Schnabel JA, Boxberg M, Peng T, Kather JN. Transformer-based biomarker prediction from colorectal cancer histology: A large-scale multicentric study. Cancer Cell. 2023;41:1650-1661.e4. |

| 50. | Kaur J, Kaur P. A systematic literature analysis of multi-organ cancer diagnosis using deep learning techniques. Comput Biol Med. 2024;179:108910. |

| 51. | Bhardwaj P, Kumar S, Kumar Y. A Comprehensive Analysis of Deep Learning-Based Approaches for the Prediction of Gastrointestinal Diseases Using Multi-class Endoscopy Images. Arch Computat Methods Eng. 2023;30:4499-4516. |

| 52. | Patil RM, Giripunje S. Deep Learning-Based Detection and Classification of Gastrointestinal Tract Diseases in Endoscopy Images. In: 2024 2nd DMIHER International Conference on Artificial Intelligence in Healthcare, Education and Industry (IDICAIEI); 2024 Aug 16; Wardha, India. IEEE: NY, United States, 2024: 1-6. |

| 53. | Bajhaiya D, Narayanan Unni S. Deep learning-enabled detection and localization of gastrointestinal diseases using wireless-capsule endoscopic images. Biomed Signal Process Control. 2024;93:106125. |

| 54. | Durak S, Bayram B, Bakırman T, Erkut M, Doğan M, Gürtürk M, Akpınar B. Deep neural network approaches for detecting gastric polyps in endoscopic images. Med Biol Eng Comput. 2021;59:1563-1574. |

| 55. | Ozawa T, Ishihara S, Fujishiro M, Kumagai Y, Shichijo S, Tada T. Automated endoscopic detection and classification of colorectal polyps using convolutional neural networks. Therap Adv Gastroenterol. 2020;13:1756284820910659. |

| 56. | Malik H, Naeem A, Sadeghi-Niaraki A, Naqvi RA, Lee S. Multi-classification deep learning models for detection of ulcerative colitis, polyps, and dyed-lifted polyps using wireless capsule endoscopy images. Complex Intell Syst. 2024;10:2477-2497. |

| 57. | Gadi SR, Muralidharan SS, Glissen Brown JR. Colonoscopy Quality, Innovation, and the Assessment of New Technology. Tech Innov Gastrointest Endosc. 2024;26:177-192. |

| 58. | Du W, Rao N, Dong C, Wang Y, Hu D, Zhu L, Zeng B, Gan T. Automatic classification of esophageal disease in gastroscopic images using an efficient channel attention deep dense convolutional neural network. Biomed Opt Express. 2021;12:3066-3081. |

| 59. | Eelbode T, Hassan C, Demedts I, Roelandt P, Coron E, Bhandari P, Neumann H, Pech O, Repici A, Maes F, Bisschops R. Tu1959 BLI and LCI Improve Polyp Detection and Delineation Accuracy for Deep Learning Networks. Gastrointest Endosc. 2019;89:AB632. |

| 60. | Fonollà R, van der Zander QEW, Schreuder RM, Masclee AAM, Schoon EJ, van der Sommen F, de With PHN. A CNN CADx System for Multimodal Classification of Colorectal Polyps Combining WL, BLI, and LCI Modalities. Appl Sci. 2020;10:5040. |

| 61. | Zhou P, Cao Y, Li M, Ma Y, Chen C, Gan X, Wu J, Lv X, Chen C. HCCANet: histopathological image grading of colorectal cancer using CNN based on multichannel fusion attention mechanism. Sci Rep. 2022;12:15103. |

| 62. | Lonseko ZM, Adjei PE, Du W, Luo C, Hu D, Zhu L, Gan T, Rao N. Gastrointestinal Disease Classification in Endoscopic Images Using Attention-Guided Convolutional Neural Networks. Appl Sci. 2021;11:11136. |

| 63. | Zhao J, Feng Q, Wu P, Lupu RA, Wilke RA, Wells QS, Denny JC, Wei WQ. Learning from Longitudinal Data in Electronic Health Record and Genetic Data to Improve Cardiovascular Event Prediction. Sci Rep. 2019;9:717. |

| 64. | Lin Y, Zhang X, Li F, Zhang R, Jiang H, Lai C, Yi L, Li Z, Wu W, Qiu L, Yang H, Guan Q, Wang Z, Deng L, Zhao Z, Lu W, Lun W, Dai J, He S, Bai Y. A deep neural network improves endoscopic detection of laterally spreading tumors. Surg Endosc. 2025;39:776-785. |

| 65. | Uma R, Ganesh K, Sudheer P, Muthu Kumar M. A Deep Learning Based Detection and Classification of Gastrointestinal Diseases. In: 2024 6th International Conference on Electrical, Control and Instrumentation Engineering (ICECIE); 2024 Nov 23; Pattaya, Thailand. IEEE: NY, United States, 2024: 1-7. |

| 66. | El-Ghany SA, Mahmood MA, Abd El-Aziz AA. An Accurate Deep Learning-Based Computer-Aided Diagnosis System for Gastrointestinal Disease Detection Using Wireless Capsule Endoscopy Image Analysis. Appl Sci. 2024;14:10243. |

| 67. | Zhou B, Rao X, Xing H, Ma Y, Wang F, Rong L. A convolutional neural network-based system for detecting early gastric cancer in white-light endoscopy. Scand J Gastroenterol. 2023;58:157-162. |