Published online Jan 21, 2025. doi: 10.3748/wjg.v31.i3.101092

Revised: October 29, 2024

Accepted: December 3, 2024

Published online: January 21, 2025

Processing time: 106 Days and 21.9 Hours

Patients with hepatitis B virus (HBV) infection require chronic and personalized care to improve outcomes. Large language models (LLMs) can potentially provide medical information for patients.

To examine the performance of three LLMs, ChatGPT-3.5, ChatGPT-4.0, and Google Gemini, in answering HBV-related questions.

LLMs’ responses to HBV-related questions were independently graded by two medical professionals using a four-point accuracy scale, and disagreements were resolved by a third reviewer. Each question was submitted to each of the three LLMs in three separate runs. Readability was assessed using the Gunning Fog index and the Flesch-Kincaid grade level.

Overall, all three LLM chatbots achieved high average accuracy scores for subjective questions (ChatGPT-3.5: 3.50; ChatGPT-4.0: 3.69; Google Gemini: 3.53; maximum score 4). For objective questions, ChatGPT-4.0 achieved an accuracy rate of 80.8%, compared with 62.9% for ChatGPT-3.5 and 73.1% for Google Gemini. Across the six domains, ChatGPT-4.0 performed best in diagnosis, whereas Google Gemini performed best in clinical manifestations. Notably, the mean Gunning Fog index and Flesch-Kincaid grade level scores of all three LLM chatbots were significantly higher than the recommended eighth-grade level, far exceeding the reading level of the general population.

Our results highlight the potential of LLMs, especially ChatGPT-4.0, for delivering responses to HBV-related questions. LLMs may be an adjunctive informational tool for patients and physicians to improve outcomes. Nevertheless, current LLMs should not replace personalized treatment recommendations from physicians in the management of HBV infection.

Core Tip: Hepatitis B virus (HBV) infection remains a global health problem that may cause chronic hepatitis, liver cirrhosis, or hepatocellular carcinoma. Members of the public increasingly seek HBV-related information to improve their outcomes. Large language models, a form of artificial intelligence, can provide up-to-date and helpful knowledge. Since OpenAI released ChatGPT, an increasing number of studies have explored its utility in answering medical questions. This study evaluates and compares the ability of OpenAI’s ChatGPT and Google’s Gemini to answer questions about HBV, using both subjective and objective metrics.

- Citation: Li Y, Huang CK, Hu Y, Zhou XD, He C, Zhong JW. Exploring the performance of large language models on hepatitis B infection-related questions: A comparative study. World J Gastroenterol 2025; 31(3): 101092

- URL: https://www.wjgnet.com/1007-9327/full/v31/i3/101092.htm

- DOI: https://dx.doi.org/10.3748/wjg.v31.i3.101092

Hepatitis B virus (HBV) infection affects approximately 296 million people worldwide and is a leading cause of cirrhosis and liver cancer. In 2019, deaths from HBV-related cirrhosis were estimated at 331000 and deaths from HBV-related liver cancer at 192000, up from 156000 in 2010[1]. Despite the World Health Organization’s goal of reducing the incidence of viral hepatitis by 90% and its associated mortality by 65% by 2030, the annual number of HBV-related deaths worldwide is projected to increase by 39% between 2015 and 2030 if current trends continue[2]. Given the substantial clinical and economic burden on patients, caregivers, and society, effective management of chronic HBV infection is pivotal[3].

In recent years, natural language processing models, especially large language models (LLMs), have evolved strikingly beyond their traditional counterparts, generating more human-like responses through self-supervised learning on large pools of textual data[4,5]. ChatGPT, an LLM introduced by OpenAI in 2022, has performed at or near the passing threshold of the United States Medical Licensing Examination[6,7]. This achievement suggests that ChatGPT encodes substantial medical knowledge and highlights its potential to assist users in clinical care. Two versions of ChatGPT are currently available: ChatGPT-3.5, released in November 2022 as a free version, and ChatGPT-4.0, introduced in March 2023 as a paid version[8].

Recently, several studies have explored the ability of LLMs to provide information to patients and to assist in medicine. Yeo et al[9] evaluated the performance of ChatGPT-3.5 in answering questions about cirrhosis and hepatocellular carcinoma and reported an encouraging 76.9% of correct responses. The authors noted that ChatGPT-3.5 performed poorly in specifying treatment duration and lacked knowledge of regional variations in guidelines[9]. In addition, Miao et al[10] assessed the accuracy of ChatGPT on nephrology test questions and reported total accuracy rates of 51% for ChatGPT-3.5 and 74% for ChatGPT-4.0; however, both rates remained below the passing threshold and the average scores of nephrology examinees. According to a survey in the United States, approximately 2 out of 3 adults search the internet for medical information, and 1 out of 3 adults use online information to self-diagnose[11]. Given the rapid development of LLMs, it is plausible that patients and caregivers will increasingly use LLM chatbots to search for information on chronic diseases, including HBV infection. However, the accuracy of LLM chatbot responses to queries about HBV infection has yet to be determined.

In this study, we aimed to evaluate and compare the performance of three publicly available LLMs, OpenAI’s ChatGPT-3.5 and ChatGPT-4.0 and Google’s Gemini, in answering common questions related to HBV infection. We rigorously examined the accuracy and readability of each response. Our findings provide insights into the potential benefits and risks of using LLM chatbots to answer common questions about HBV infection.

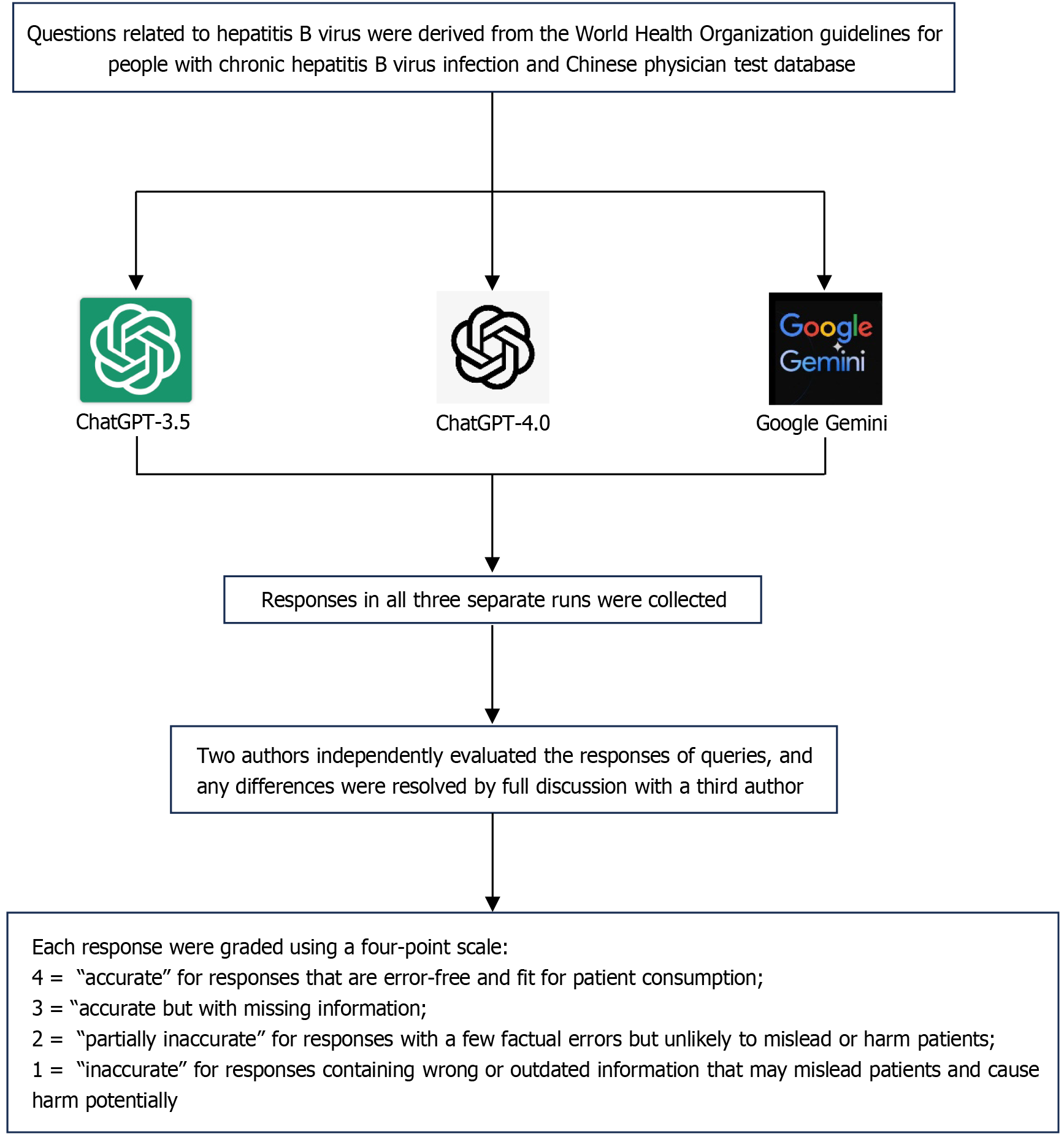

As shown in Supplementary Table 1, this study used 12 subjective questions derived from the World Health Organization guidelines for people with chronic HBV infection[12]. Additionally, the 52 most frequently tested objective questions were selected from the Chinese professional physician test database (Supplementary Table 2). The 64 questions covered six domains: Risk factors, clinical manifestations, diagnosis, treatment, prevention, and prognosis. From July 8 to July 24, 2024, responses were generated by ChatGPT (GPT-3.5 and GPT-4.0) and Google Gemini. Each question was tested in three separate runs at 8-day intervals to reflect real-world use of LLMs and to assess the consistency of their responses[13,14].

Two independent physicians, each with at least eight years of clinical experience, graded the responses to the subjective questions. Any disagreement was discussed with a third author, and a majority consensus approach was used to determine the final rating of each chatbot answer. Each response was graded on a four-point scale as follows: (1) “Inaccurate” for responses containing wrong or outdated information that may mislead patients and cause harm; (2) “Partially inaccurate” for responses with a few factual errors that are unlikely to mislead or harm patients; (3) “Accurate but with missing information”; and (4) “Accurate” for responses that are error-free and suitable for patients. The final ratings were used to calculate the accuracy scores of the LLM chatbot responses. A flowchart of the overall study design is presented in Figure 1.
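The grading rule can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: the function names are hypothetical, and the consensus step is simplified so that agreement between the two primary raters stands and any disagreement is settled by the third reviewer's rating (in the study, disagreements were resolved by discussion). The per-question grade is taken as the mean over the three runs, which is consistent with values such as 3.67 in Table 1.

```python
from statistics import mean

# Four-point accuracy scale used for the subjective questions.
SCALE = {
    1: "Inaccurate",
    2: "Partially inaccurate",
    3: "Accurate but with missing information",
    4: "Accurate",
}


def consensus_grade(rater_a: int, rater_b: int, third_reviewer: int) -> int:
    """Hypothetical simplification of the consensus step.

    If the two primary raters agree, their grade stands; otherwise the
    third reviewer's grade is used as the tie-breaker.
    """
    for grade in (rater_a, rater_b, third_reviewer):
        if grade not in SCALE:
            raise ValueError(f"grade {grade} is not on the 1-4 scale")
    return rater_a if rater_a == rater_b else third_reviewer


def mean_question_grade(run_grades: list[int]) -> float:
    """Mean final grade of one question across its runs."""
    return mean(run_grades)


# Hypothetical ratings (rater A, rater B, third reviewer) for three runs.
runs = [(4, 4, 4), (4, 3, 4), (3, 3, 4)]
final = [consensus_grade(*r) for r in runs]
print(final, round(mean_question_grade(final), 2))  # [4, 4, 3] 3.67
```

A strict majority among three graders only exists when the third reviewer agrees with one of the first two; the tie-breaker rule above is one simple way to make the sketch total.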

Readability was measured using two objective indices of reading level: The Flesch-Kincaid grade level (FKGL) and the Gunning Fog index (GFI). Both indices approximate the United States school grade needed to understand a text, and medical information for patients should ideally score eight or lower. Measurements were performed with an online readability tool (https://readable.com) on July 29, 2024.
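For readers unfamiliar with the two indices, the standard formulas are FKGL = 0.39 × (words/sentences) + 11.8 × (syllables/words) - 15.59 and GFI = 0.4 × [(words/sentences) + 100 × (complex words/words)], where complex words have three or more syllables. The Python sketch below implements them with a crude vowel-group syllable counter and regex-based sentence splitting; both heuristics are assumptions of this sketch. readable.com applies its own tokenization and counting rules (the original Gunning Fog definition, for example, excludes proper nouns and some inflected forms from the complex-word count), so its scores will not match this sketch exactly.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables as runs of vowels, dropping a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_stats(text: str) -> tuple[int, int, int, int]:
    """Return (sentences, words, syllables, complex words with >= 3 syllables)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = [count_syllables(w) for w in words]
    n_complex = sum(1 for s in syllables if s >= 3)
    return len(sentences), len(words), sum(syllables), n_complex


def fkgl(text: str) -> float:
    """Flesch-Kincaid grade level."""
    n_sent, n_words, n_syll, _ = text_stats(text)
    return 0.39 * n_words / n_sent + 11.8 * n_syll / n_words - 15.59


def gunning_fog(text: str) -> float:
    """Gunning Fog index."""
    n_sent, n_words, _, n_complex = text_stats(text)
    return 0.4 * (n_words / n_sent + 100 * n_complex / n_words)


simple = "The cat sat on the mat."
dense = ("Hepatocellular carcinoma is a complication of chronic "
         "hepatitis B infection.")
for label, sample in (("simple", simple), ("dense", dense)):
    print(label, round(fkgl(sample), 2), round(gunning_fog(sample), 2))
```

The single sentence packed with polysyllabic medical terms scores far above 8 on both indices, which illustrates why clinical vocabulary alone can push patient-facing answers past the recommended level.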

Statistical analyses were performed using SPSS 27.0 for Windows (SPSS Inc., Chicago, IL, United States), and data are presented as mean ± SD. Accuracy was summarized as average accuracy scores and percentages of accuracy ratings, and differences in accuracy were examined using two-sided Student’s t-tests. Two-sided Student’s t-tests were also used for the readability analysis. P values less than 0.05 were considered statistically significant.
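The Methods do not state the comparison value for the readability t-tests, but several P values in Table 3 are consistent with a one-sample, two-sided t-test of each subfield mean against the eighth-grade benchmark. The sketch below assumes exactly that, plus n = 3 observations for a subfield with a single question answered in three runs (risk factors and clinical manifestation), and recomputes the P value from the reported mean and SD. With n = 3 the t distribution has 2 degrees of freedom and a closed-form CDF, so only the standard library is needed; for other sample sizes a t-distribution routine such as `scipy.stats.t.sf` (or `scipy.stats.ttest_1samp` on the raw scores) would be used instead. Function names are illustrative.

```python
import math


def one_sample_t(mean: float, sd: float, n: int, benchmark: float = 8.0) -> float:
    """t statistic for H0: the population mean equals `benchmark`."""
    standard_error = sd / math.sqrt(n)
    return (mean - benchmark) / standard_error


def two_sided_p_df2(t: float) -> float:
    """Exact two-sided P value for Student's t with 2 degrees of freedom.

    Uses the closed-form CDF F(t) = 1/2 + t / (2 * sqrt(t**2 + 2)),
    so P = 2 * (1 - F(|t|)) = 1 - |t| / sqrt(t**2 + 2).
    """
    return 1.0 - abs(t) / math.sqrt(t * t + 2.0)


# ChatGPT-3.5 rows of Table 3, assuming n = 3 (one question x three runs).
cases = {
    "Risk factors, GFI": (16.73, 1.77),
    "Clinical manifestation, FKGL": (10.75, 1.72),
}
for label, (m, sd) in cases.items():
    t = one_sample_t(m, sd, n=3)
    print(f"{label}: t = {t:.2f}, P = {two_sided_p_df2(t):.3f}")
```

The two cases give P of about 0.013 and 0.109, in line with the values reported in Table 3; small mismatches in other rows would be expected because the published means and SDs are rounded to two decimals.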

As shown in Table 1, answer lengths for the HBV-related questions ranged from 133 to 421 words. Both ChatGPT and Google Gemini answered questions related to HBV infection with high accuracy. Overall, ChatGPT-4.0 achieved the highest average accuracy score (3.69), compared with ChatGPT-3.5 (3.50) and Google Gemini (3.53), although the overall difference was not statistically significant (P = 0.2). Next, we analyzed accuracy scores in the six subfields as assessed by the three experienced physicians. Compared with ChatGPT-3.5 and Google Gemini, ChatGPT-4.0 achieved higher scores in the domains of risk factors (4), diagnosis (4), treatment (3.6), and prognosis (4), scoring 4 in all three runs for risk factors, diagnosis, and prognosis. For clinical manifestations, the accuracy score of Google Gemini (3) was lower than those of ChatGPT-3.5 (3.67) and ChatGPT-4.0 (3.67). Notably, Google Gemini received higher scores (4) than ChatGPT-3.5 (3.56) and ChatGPT-4.0 (3.56) in the subfield of prevention.
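The domain and overall means quoted above can be recomputed from the per-question means in Table 1. The sketch below assumes that each per-question mean is the average of three integer grades, so 3.67 and 3.33 are treated as exactly 11/3 and 10/3, and that domain and overall means weight each question equally; Python's `fractions` module avoids rounding drift. Variable names are illustrative.

```python
from fractions import Fraction

F = Fraction
# ChatGPT-4.0 per-question mean grades from Table 1, as exact thirds
# (assumes 3.67 = 11/3 and 3.33 = 10/3).
chatgpt4 = {
    "risk factors": [F(4)],
    "clinical manifestation": [F(11, 3)],
    "diagnosis": [F(4)],
    "treatment": [F(11, 3), F(10, 3), F(4), F(10, 3), F(11, 3)],
    "prevention": [F(10, 3), F(4), F(10, 3)],
    "prognosis": [F(4)],
}


def mean_of(values: list[Fraction]) -> Fraction:
    """Unweighted mean of question-level grades."""
    return sum(values, Fraction(0)) / len(values)


treatment = mean_of(chatgpt4["treatment"])
all_questions = [g for grades in chatgpt4.values() for g in grades]
overall = mean_of(all_questions)
print(f"treatment:  {float(treatment):.2f}")                       # 3.60
print(f"prevention: {float(mean_of(chatgpt4['prevention'])):.2f}")  # 3.56
print(f"overall:    {float(overall):.2f}")                         # 3.69
```

Under these assumptions the recomputed values match the treatment (3.6), prevention (3.56), and overall (3.69) scores reported for ChatGPT-4.0.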

Table 1 Accuracy grades and answer lengths of the three LLM chatbots for the 12 subjective questions

| Common questions | Source of answers | Answer length, 1st run (words) | Answer length, 2nd run (words) | Answer length, 3rd run (words) | Grade, mean | P value |
| --- | --- | --- | --- | --- | --- | --- |
| Overall (mean) | ChatGPT-3.5 | 275 | 366 | 352 | 3.50 | 0.2 |
| | ChatGPT-4.0 | 274 | 252 | 238 | 3.69 | |
| | Google Gemini | 307 | 322 | 325 | 3.53 | |
| Risk factors | | | | | | |
| What are the transmission modes of hepatitis B virus? | ChatGPT-3.5 | 189 | 316 | 400 | 3.67 | 0.296 |
| | ChatGPT-4.0 | 358 | 241 | 220 | 4.00 | |
| | Google Gemini | 264 | 291 | 291 | 3.33 | |
| Clinical manifestation | | | | | | |
| What are the symptoms of hepatitis B infection? | ChatGPT-3.5 | 247 | 333 | 356 | 3.67 | 0.216 |
| | ChatGPT-4.0 | 269 | 276 | 295 | 3.67 | |
| | Google Gemini | 226 | 349 | 352 | 3.00 | |
| Diagnosis | | | | | | |
| What is the most accurate test for diagnosing Hepatitis B infection? | ChatGPT-3.5 | 223 | 341 | 348 | 3.67 | 0.027 |
| | ChatGPT-4.0 | 307 | 349 | 280 | 4.00 | |
| | Google Gemini | 281 | 281 | 281 | 3.00 | |
| Treatment | | | | | | |
| Can hepatitis B infection be cured clinically? | ChatGPT-3.5 | 334 | 357 | 395 | 3.67 | 0.216 |
| | ChatGPT-4.0 | 271 | 324 | 264 | 3.67 | |
| | Google Gemini | 268 | 360 | 359 | 3.00 | |
| What are the indications of antiviral therapy for patients infected with hepatitis B virus? | ChatGPT-3.5 | 368 | 367 | 402 | 3.67 | 0.296 |
| | ChatGPT-4.0 | 351 | 334 | 296 | 3.33 | |
| | Google Gemini | 385 | 384 | 392 | 3.00 | |
| Can patients infected with hepatitis B virus be pregnant during antiviral treatment? | ChatGPT-3.5 | 341 | 392 | 383 | 3.33 | 0.079 |
| | ChatGPT-4.0 | 319 | 247 | 242 | 4.00 | |
| | Google Gemini | 369 | 352 | 351 | 4.00 | |
| Do patients diagnosed with chronic hepatitis B during pregnancy need antiviral therapy? | ChatGPT-3.5 | 366 | 416 | 383 | 3.00 | 0.296 |
| | ChatGPT-4.0 | 230 | 313 | 256 | 3.33 | |
| | Google Gemini | 325 | 330 | 375 | 3.67 | |
| Can patients diagnosed with chronic hepatitis B during lactation be treated with antiviral therapy? | ChatGPT-3.5 | 366 | 419 | 391 | 3.33 | 0.296 |
| | ChatGPT-4.0 | 245 | 190 | 218 | 3.67 | |
| | Google Gemini | 362 | 328 | 330 | 4.00 | |
| Prevention | | | | | | |
| How long should a newborn receive the first dose of hepatitis B vaccine after birth? | ChatGPT-3.5 | 133 | 392 | 185 | 3.67 | 0.296 |
| | ChatGPT-4.0 | 182 | 146 | 146 | 3.33 | |
| | Google Gemini | 193 | 207 | 201 | 4.00 | |
| Can pregnant women receive hepatitis B vaccine? | ChatGPT-3.5 | 181 | 397 | 338 | 4.00 | |
| | ChatGPT-4.0 | 179 | 183 | 149 | 4.00 | |
| | Google Gemini | 277 | 318 | 318 | 4.00 | |
| How often should patients with hepatitis B virus infection be reexamined? | ChatGPT-3.5 | 209 | 421 | 328 | 3.00 | 0.027 |
| | ChatGPT-4.0 | 275 | 171 | 205 | 3.33 | |
| | Google Gemini | 330 | 334 | 334 | 4.00 | |
| Prognosis | | | | | | |
| What are the complications of hepatitis B infection? | ChatGPT-3.5 | 343 | 235 | 305 | 3.33 | 0.216 |
| | ChatGPT-4.0 | 300 | 245 | 280 | 4.00 | |
| | Google Gemini | 405 | 326 | 318 | 3.33 | |

For objective questions, ChatGPT-4.0 performed best, achieving an accuracy rate of 80.8%, compared with 62.9% for ChatGPT-3.5 and 73.1% for Google Gemini (Table 2). In the risk factor domain, all three LLMs achieved 100% accuracy. ChatGPT-4.0 performed best in diagnosis, achieving 83.3% accuracy in each of the three runs, whereas Google Gemini performed best in the clinical manifestation domain (71.4%).
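The total accuracy figures in Table 2 appear to pool all answers over the three runs: correct answers summed across runs, divided by three times the number of questions. A minimal sketch under that assumption, with a hypothetical function name:

```python
def pooled_accuracy(correct_per_run: list[int], n_questions: int) -> float:
    """Percentage of correct answers pooled over all runs."""
    if any(c < 0 or c > n_questions for c in correct_per_run):
        raise ValueError("each run must have between 0 and n_questions correct")
    total_answers = n_questions * len(correct_per_run)
    return 100 * sum(correct_per_run) / total_answers


# Correct answers per run from Table 2 (all 52 objective questions).
runs = {
    "ChatGPT-3.5": [34, 30, 34],
    "ChatGPT-4.0": [43, 41, 42],
    "Google Gemini": [37, 38, 39],
}
for model, correct in runs.items():
    print(f"{model}: {pooled_accuracy(correct, 52):.1f}%")
```

This reproduces 80.8% for ChatGPT-4.0 and 73.1% for Google Gemini; for ChatGPT-3.5 it gives 62.8% (98 of 156 answers) rather than 62.9%, a small difference that may reflect a different rounding step.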

Table 2 Performance of the three LLM chatbots on the 52 objective test questions, n (%)

| Test questions by subfield | ChatGPT-3.5, correct | ChatGPT-3.5, incorrect | ChatGPT-4.0, correct | ChatGPT-4.0, incorrect | Google Gemini, correct | Google Gemini, incorrect |
| --- | --- | --- | --- | --- | --- | --- |
| All test questions (n) | 52 | | 52 | | 52 | |
| 1st run | 34 (65.4) | 18 (34.6) | 43 (82.7) | 9 (17.3) | 37 (71.1) | 15 (28.9) |
| 2nd run | 30 (57.7) | 22 (42.3) | 41 (78.9) | 11 (21.1) | 38 (73.1) | 14 (26.9) |
| 3rd run | 34 (65.4) | 18 (34.6) | 42 (80.8) | 10 (19.2) | 39 (75) | 13 (25) |
| Concordance among 3 runs | 41 (78.9) | | 46 (88.4) | | 50 (96.2) | |
| Total accuracy (%) | 62.9 | | 80.8 | | 73.1 | |
| Risk factors (n) | 5 | | 5 | | 5 | |
| 1st run | 5 (100) | 0 (0) | 5 (100) | 0 (0) | 5 (100) | 0 (0) |
| 2nd run | 5 (100) | 0 (0) | 5 (100) | 0 (0) | 5 (100) | 0 (0) |
| 3rd run | 5 (100) | 0 (0) | 5 (100) | 0 (0) | 5 (100) | 0 (0) |
| Concordance among 3 runs | 5 (100) | | 5 (100) | | 5 (100) | |
| Total accuracy (%) | 100 | | 100 | | 100 | |
| Clinical manifestation (n) | 7 | | 7 | | 7 | |
| 1st run | 2 (28.6) | 5 (71.4) | 4 (57.1) | 3 (42.9) | 5 (71.4) | 2 (28.6) |
| 2nd run | 2 (28.6) | 5 (71.4) | 4 (57.1) | 3 (42.9) | 5 (71.4) | 2 (28.6) |
| 3rd run | 3 (42.9) | 4 (57.1) | 4 (57.1) | 3 (42.9) | 5 (71.4) | 2 (28.6) |
| Concordance among 3 runs | 5 (71.4) | | 6 (85.7) | | 7 (100) | |
| Total accuracy (%) | 33.3 | | 57.1 | | 71.4 | |
| Diagnosis (n) | 18 | | 18 | | 18 | |
| 1st run | 9 (50) | 9 (50) | 15 (83.3) | 3 (16.7) | 13 (72.2) | 5 (27.8) |
| 2nd run | 8 (44.4) | 10 (55.6) | 15 (83.3) | 3 (16.7) | 14 (77.8) | 4 (22.2) |
| 3rd run | 11 (61.1) | 7 (38.9) | 15 (83.3) | 3 (16.7) | 15 (83.3) | 3 (16.7) |
| Concordance among 3 runs | 12 (66.7) | | 16 (88.9) | | 16 (88.9) | |
| Total accuracy (%) | 51.9 | | 83.3 | | 77.8 | |
| Treatment (n) | 11 | | 11 | | 11 | |
| 1st run | 11 (100) | 0 (0) | 10 (90.9) | 1 (9.1) | 9 (81.9) | 2 (18.1) |
| 2nd run | 10 (90.9) | 1 (9.1) | 10 (90.9) | 1 (9.1) | 9 (81.9) | 2 (18.1) |
| 3rd run | 10 (90.9) | 1 (9.1) | 11 (100) | 0 (0) | 9 (81.9) | 2 (18.1) |
| Concordance among 3 runs | 10 (90.9) | | 10 (90.9) | | 11 (100) | |
| Total accuracy (%) | 93.9 | | 93.9 | | 81.9 | |
| Prevention (n) | 7 | | 7 | | 7 | |
| 1st run | 4 (57.1) | 3 (42.9) | 6 (85.7) | 1 (14.3) | 3 (42.9) | 4 (57.1) |
| 2nd run | 3 (42.9) | 4 (57.1) | 4 (57.1) | 3 (42.9) | 3 (42.9) | 4 (57.1) |
| 3rd run | 3 (42.9) | 4 (57.1) | 4 (57.1) | 3 (42.9) | 3 (42.9) | 4 (57.1) |
| Concordance among 3 runs | 6 (85.7) | | 5 (71.4) | | 7 (100) | |
| Total accuracy (%) | 47.6 | | 66.7 | | 42.9 | |
| Prognosis (n) | 4 | | 4 | | 4 | |
| 1st run | 3 (75) | 1 (25) | 3 (75) | 1 (25) | 2 (50) | 2 (50) |
| 2nd run | 2 (50) | 2 (50) | 3 (75) | 1 (25) | 2 (50) | 2 (50) |
| 3rd run | 2 (50) | 2 (50) | 3 (75) | 1 (25) | 2 (50) | 2 (50) |
| Concordance among 3 runs | 3 (75) | | 4 (100) | | 4 (100) | |
| Total accuracy (%) | 58.3 | | 75 | | 50 | |

The total concordance rates among the three runs were 78.9% for ChatGPT-3.5, 88.4% for ChatGPT-4.0, and 96.2% for Google Gemini (Table 2). A subfield analysis revealed that all three LLMs were fully concordant (100%) in the risk factor category. Notably, Google Gemini’s responses concerning clinical manifestations (100%), treatment (100%), and prevention (100%) had higher concordance rates than those of either version of ChatGPT.
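The text does not define concordance explicitly; a common reading, assumed here, is the share of questions for which a chatbot gave the same answer in all three runs. A minimal sketch with a hypothetical function name and hypothetical answer letters:

```python
from collections.abc import Sequence


def concordance(answers_by_question: Sequence[Sequence[str]]) -> tuple[int, float]:
    """Count and percentage of questions answered identically in every run."""
    if not answers_by_question:
        raise ValueError("need at least one question")
    consistent = sum(1 for runs in answers_by_question if len(set(runs)) == 1)
    return consistent, 100 * consistent / len(answers_by_question)


# Hypothetical multiple-choice answers (run 1, run 2, run 3) for four questions.
example = [("A", "A", "A"), ("B", "C", "B"), ("D", "D", "D"), ("E", "E", "E")]
count, pct = concordance(example)
print(count, f"{pct:.1f}%")
```

With 50 of 52 questions answered identically across runs, the same function returns about 96.2%, the rate reported for Google Gemini.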

A readability analysis using validated reading-level metrics revealed that answers generated by ChatGPT and Google Gemini were written at reading levels well above the recommended eighth-grade threshold. Across subfields, the mean readability scores of ChatGPT-3.5 ranged from 13.68 to 21.22 for GFI and from 10.75 to 17.22 for FKGL (Table 3). The overall responses from ChatGPT-3.5, as well as those related to treatment and prevention, far exceeded the reading level of the general public (P < 0.001). For ChatGPT-4.0, the GFI and FKGL values were also much higher than the standard, especially in the domains of treatment and prevention (Table 4, P < 0.001). The reading levels of Google Gemini’s answers likewise far surpassed those of the general public, particularly in the subfields of diagnosis, treatment, and prevention (Table 5, P < 0.001).

Table 3 Readability of ChatGPT-3.5 responses by subfield (mean ± SD)

| Subfield | GFI | P value | FKGL | P value |
| --- | --- | --- | --- | --- |
| Risk factors | 16.73 ± 1.77 | 0.013 | 12.60 ± 1.72 | 0.043 |
| Clinical manifestation | 13.68 ± 2.12 | 0.043 | 10.75 ± 1.72 | 0.109 |
| Diagnosis | 15.46 ± 1.65 | 0.016 | 12.12 ± 1.61 | 0.048 |
| Treatment | 21.22 ± 1.99 | < 0.001 | 17.22 ± 1.47 | < 0.001 |
| Prevention | 18.89 ± 1.80 | < 0.001 | 15.53 ± 1.72 | < 0.001 |
| Prognosis | 18.52 ± 1.85 | 0.010 | 15.51 ± 2.17 | 0.027 |
| Overall | 18.93 ± 3.03 | < 0.001 | 15.31 ± 2.67 | < 0.001 |

GFI: Gunning Fog index; FKGL: Flesch-Kincaid grade level.

Table 4 Readability of ChatGPT-4.0 responses by subfield (mean ± SD)

| Subfield | GFI | P value | FKGL | P value |
| --- | --- | --- | --- | --- |
| Risk factors | 14.79 ± 0.24 | < 0.001 | 11.45 ± 0.35 | 0.003 |
| Clinical manifestation | 11.05 ± 0.89 | 0.027 | 9.06 ± 0.73 | 0.130 |
| Diagnosis | 14.40 ± 0.42 | 0.001 | 11.28 ± 0.47 | < 0.001 |
| Treatment | 18.18 ± 1.45 | < 0.001 | 14.57 ± 1.27 | < 0.001 |
| Prevention | 16.49 ± 1.27 | < 0.001 | 13.49 ± 1.09 | < 0.001 |
| Prognosis | 16.10 ± 0.52 | 0.001 | 13.18 ± 0.05 | < 0.001 |
| Overall | 16.39 ± 2.38 | < 0.001 | 13.19 ± 1.96 | < 0.001 |

Table 5 Readability of Google Gemini responses by subfield (mean ± SD)

| Subfield | GFI | P value | FKGL | P value |
| --- | --- | --- | --- | --- |
| Risk factors | 14.54 ± 0.46 | 0.002 | 10.73 ± 0.16 | 0.001 |
| Clinical manifestation | 13.06 ± 0.42 | 0.002 | 9.81 ± 0.68 | 0.043 |
| Diagnosis | 17.71 ± 0.30 | < 0.001 | 13.54 ± 0.24 | < 0.001 |
| Treatment | 19.93 ± 1.44 | < 0.001 | 15.65 ± 1.06 | < 0.001 |
| Prevention | 15.63 ± 1.96 | < 0.001 | 11.71 ± 1.82 | < 0.001 |
| Prognosis | 14.81 ± 0.62 | 0.003 | 12.37 ± 0.27 | 0.001 |
| Overall | 17.22 ± 2.86 | < 0.001 | 13.32 ± 2.44 | < 0.001 |

In this study, we rigorously evaluated the accuracy and readability of ChatGPT-3.5, ChatGPT-4.0, and Google Gemini responses to HBV-related questions frequently asked by patients or caregivers. Unlike previous studies that focused on standardized examinations[10,15], our study examined a real-world scenario in which patients or their families seek assistance online. A review by three experienced physicians revealed that both ChatGPT and Google Gemini presented extensive knowledge about HBV infection, especially regarding risk factors and diagnosis for ChatGPT-4.0 and prevention for Google Gemini. Our findings suggest that LLM chatbots, particularly ChatGPT-4.0, can deliver accurate and comprehensive responses related to HBV infection. We also examined the readability of the responses generated by the three LLM chatbots. Notably, these answers far surpassed the reading level of the general public, which may limit the utility of LLM chatbots in disseminating HBV-related knowledge.

Differences among the three LLM chatbots in architecture and training data can affect their accuracy and suitability for different scenarios. ChatGPT is based on the generative pre-trained transformer architecture, a deep learning approach in which a model is pre-trained on massive amounts of data before being fine-tuned for specific tasks. ChatGPT-3.5 requires fewer computational resources and generates responses quickly, making it well suited to real-time conversations and lightweight scenarios. Continued development and refinement led to ChatGPT-4.0 in March 2023, which was trained more extensively on a larger dataset and offers more reliable availability. These improvements make ChatGPT-4.0 more reliable for complex tasks but slower to generate responses than ChatGPT-3.5. Google Gemini (formerly Bard, which was initially built on Google’s Language Model for Dialogue Applications neural network architecture) is designed to better understand context and generate more accurate responses. Notably, Google Gemini can draw on real-time information from the internet, whereas ChatGPT’s knowledge is limited by its training data cutoff (September 2021 for the initially released versions).

We first analyzed the accuracy of responses from the two versions of ChatGPT and Google Gemini. Among the three LLM chatbots, ChatGPT-4.0 was the most proficient, achieving the highest accuracy scores and the largest proportions of “accurate” ratings in addressing HBV-related questions. Our results are consistent with those of Ali et al[16], who highlighted the ability of ChatGPT-4.0 and other LLMs to assist in neurosurgery examinations, and Lim et al[13], who underscored the considerable potential of ChatGPT-4.0 to deliver accurate and comprehensive responses to myopia-related queries. The superior performance of ChatGPT-4.0 may be attributable to several factors, including its larger parameter set, advanced reasoning and instruction-following capabilities, more recent training data, substantial investment in domain experts and data sources, and lessons learned from the deployment of earlier LLMs, such as ChatGPT-3.5[17]. Nevertheless, even with these improvements, the proportion of ChatGPT-4.0 responses that received an “accurate” rating remained below 70% in our study, and recent research assessing ChatGPT on various examinations indicated that ChatGPT-4.0 scored below the average of medical students or examinees[18,19]. Because LLMs are limited in recognizing and preventing potential misinformation, information obtained from LLMs or similar platforms should be further discussed with medical professionals.

Next, we evaluated the performance of ChatGPT-3.5, ChatGPT-4.0, and Google Gemini across the six subfields. The strengths and weaknesses of the three LLM chatbots differed across domains. Our findings suggest that ChatGPT-4.0 was particularly useful for diagnosis, whereas Google Gemini was proficient in clinical manifestations. All three LLM chatbots performed suboptimally in some domains, including prevention. One reason for ChatGPT’s less satisfactory performance in prevention may be that the model was trained on internet data available only up to September 2021, so the information it provided may be inconsistent with the latest published consensus. Beyond the limitations of training data, the evolving landscape of HBV prevention may also prevent LLM chatbots from fully aligning with the latest advances in this field. For example, the latest consensus recommends that newborns receive the hepatitis B vaccine, preferably within 12 hours after birth[20,21]; however, only Google Gemini mentioned this point in all three runs. Notably, the utility of LLM chatbots may vary across medical specialties. For example, Lahat et al[22] found that ChatGPT-3.5 was less accurate in the domain of “diagnosis” when answering questions related to gastrointestinal health, and Rasmussen et al[23] reported less satisfactory performance of ChatGPT-3.5 regarding the treatment and prevention of vernal keratoconjunctivitis. These differences may reflect variations in the quantity and quality of online information available on different topics. Overall, the performance and pitfalls of LLMs still require thorough evaluation across different medical topics.

Unlike conventional internet searches, which return large amounts of unfiltered information, LLM chatbots produce responses conversationally. To determine the reading level of the responses generated by the LLMs, we used the GFI and FKGL and found that most texts required a college-level education. These results are similar to those of Onder et al[24], who found that responses from ChatGPT-4.0 were at an advanced reading level. Therefore, although responses from LLM chatbots may be more comprehensible than professional guidelines and primary literature, further development is needed to improve readability and to tailor guidance separately for patients and clinicians.

Our study has several limitations. First, no patient raters were included, and the HBV-related questions were predetermined by the investigators. Second, both the number of questions and the number of raters were small, limiting the generalizability of the study. Future research should examine responses to a larger sample of questions across broader populations, including patient raters. Third, the questions were unequally distributed across the six categories, with 41.67% of the subjective questions pertaining to “treatment”. Finally, grader subjectivity cannot be fully avoided, although three experienced physicians rated the answers using a consensus-based scoring approach.

In conclusion, to our knowledge, this study is the first to evaluate the accuracy and readability of LLM chatbot responses to common questions about the management and care of patients with HBV infection. Both ChatGPT and Google Gemini provided “accurate” responses, although some responses were rated “accurate but with missing information”. Across the six domains, ChatGPT-4.0 outperformed both ChatGPT-3.5 and Google Gemini in answering diagnosis-related questions, whereas Google Gemini performed best on clinical manifestations. However, the readability of the answers far exceeded the reading level of the average person, and LLMs should complement, rather than replace, the expertise of physicians. Further research is warranted to explore the performance of LLMs in managing other diseases that require chronic and personalized care, and to determine whether LLMs can keep pace with the latest medical knowledge across regions and guidelines. Nevertheless, as LLM training evolves and accuracy improves, collaboration between LLMs and professionals has the potential to enhance their application in clinical practice, treatment strategy formulation, patient education, and even medical research.

| 1. | World Health Organization. Global progress report on HIV, viral hepatitis and sexually transmitted infections, 2021. [cited 30 July 2024]. Available from: https://www.who.int/publications/i/item/9789240027077. |

| 2. | World Health Organization. Global Hepatitis Report, 2017. [cited 30 July 2024]. Available from: https://www.who.int/publications/i/item/9789241565455. |

| 3. | Hsu YC, Huang DQ, Nguyen MH. Global burden of hepatitis B virus: current status, missed opportunities and a call for action. Nat Rev Gastroenterol Hepatol. 2023;20:524-537. |

| 4. | Lee P, Bubeck S, Petro J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N Engl J Med. 2023;388:1233-1239. |

| 5. | De Angelis L, Baglivo F, Arzilli G, Privitera GP, Ferragina P, Tozzi AE, Rizzo C. ChatGPT and the rise of large language models: the new AI-driven infodemic threat in public health. Front Public Health. 2023;11:1166120. |

| 6. | Kung TH, Cheatham M, Medenilla A, Sillos C, De Leon L, Elepaño C, Madriaga M, Aggabao R, Diaz-Candido G, Maningo J, Tseng V. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digit Health. 2023;2:e0000198. |

| 7. | Will ChatGPT transform healthcare? Nat Med. 2023;29:505-506. |

| 8. | OpenAI. Introducing ChatGPT. [cited 30 July 2024]. Available from: https://openai.com/index/chatgpt/. |

| 9. | Yeo YH, Samaan JS, Ng WH, Ting PS, Trivedi H, Vipani A, Ayoub W, Yang JD, Liran O, Spiegel B, Kuo A. Assessing the performance of ChatGPT in answering questions regarding cirrhosis and hepatocellular carcinoma. Clin Mol Hepatol. 2023;29:721-732. |

| 10. | Miao J, Thongprayoon C, Garcia Valencia OA, Krisanapan P, Sheikh MS, Davis PW, Mekraksakit P, Suarez MG, Craici IM, Cheungpasitporn W. Performance of ChatGPT on Nephrology Test Questions. Clin J Am Soc Nephrol. 2024;19:35-43. |

| 11. | Kuehn BM. More than one-third of US individuals use the Internet to self-diagnose. JAMA. 2013;309:756-757. |

| 12. | World Health Organization. Guidelines for the prevention, diagnosis, care and treatment for people with chronic hepatitis B infection. [cited 30 July 2024]. Available from: https://www.who.int/publications/i/item/9789240090903. |

| 13. | Lim ZW, Pushpanathan K, Yew SME, Lai Y, Sun CH, Lam JSH, Chen DZ, Goh JHL, Tan MCJ, Sheng B, Cheng CY, Koh VTC, Tham YC. Benchmarking large language models' performances for myopia care: a comparative analysis of ChatGPT-3.5, ChatGPT-4.0, and Google Bard. EBioMedicine. 2023;95:104770. |

| 14. | Du RC, Liu X, Lai YK, Hu YX, Deng H, Zhou HQ, Lu NH, Zhu Y, Hu Y. Exploring the performance of ChatGPT on acute pancreatitis-related questions. J Transl Med. 2024;22:527. |

| 15. | Mihalache A, Popovic MM, Muni RH. Performance of an Artificial Intelligence Chatbot in Ophthalmic Knowledge Assessment. JAMA Ophthalmol. 2023;141:589-597. |

| 16. | Ali R, Tang OY, Connolly ID, Fridley JS, Shin JH, Zadnik Sullivan PL, Cielo D, Oyelese AA, Doberstein CE, Telfeian AE, Gokaslan ZL, Asaad WF. Performance of ChatGPT, GPT-4, and Google Bard on a Neurosurgery Oral Boards Preparation Question Bank. Neurosurgery. 2023;93:1090-1098. |

| 17. | OpenAI, Achiam J, Adler S, Agarwal S, Ahmad L, Akkaya I, Aleman FL, Almeida D, Altenschmidt J, Altman S, Anadkat S, Avila R, Babuschkin I, Balaji S, Balcom V, Baltescu P, Bao H, Bavarian H, Belgum J, Bello I, Berdine J, Bernadett-Shapiro G, Berner C, Bogdonoff L, Boiko O, Boyd M, Brakman AL, Brockman G, Brooks T, Brundage M, Button K, Cai T, Campbell R, Cann A, Carey B, Carlson C, Carmichael R, Chan B, Chang C, Chantzis F, Chen D, Chen S, Chen R, Chen J, Chen M, Chess B, Cho C, Chu C, Chung HW, Cummings D, Currier J, Dai YX, Decareaux C, Degry T, Deutsch N, Deville D, Dhar A, Dohan D, Dowling S, Dunning S, Ecoffet A, Eleti A, Eloundou T, Farhi D, Fedus L, Felix N, Fishman SP, Forte J, Fulford I, Gao L, Georges E, Gibson C, Goel V, Gogineni T, Goh G, Gontijo-Lopes R, Gordon J, Grafstein M, Gray S, Greene R, Gross J, Gu SS, Guo Y, Hallacy C, Han J, Harris J, He Y, Heaton M, Heidecke J, Hesse C, Hickey A, Hickey W, Hoeschele P, Houghton B, Hsu K, Hu S, Hu X, Huizinga J, Jain S, Jain S, Jang J, Jiang A, Jiang R, Jin H, Jin D, Jomoto S, Jonn B, Jun H, Kaftan T, Kaiser L, Kamali A, Kanitscheider I, Keskar NS, Khan T, Kilpatrick L, Kim JW, Kim C, Kim Y, Kirchner JH, Kiros J, Knight M, Kokotajlo D, Kondraciuk L, Kondrich A, Konstantinidis A, Kosic K, Krueger G, Kuo V, Lampe M, Lan I, Lee T, Leike J, Leung J, Levy D, Li CM, Lim R, Lin M, Lin S, Litwin M, Lopez T, Lowe R, Lue P, Makanju A, Malfacini K, Manning S, Markov T, Markovski Y, Martin B, Mayer K, Mayne A, McGrew B, McKinney SM, McLeavey C, McMillan P, McNeil J, Medina D, Mehta A, Menick J, Metz L, Mishchenko A, Mishkin P, Monaco V, Morikawa E, Mossing D, Mu T, Murati M, Murk O, Mély D, Nair A, Nakano R, Nayak R, Neelakantan A, Ngo R, Noh H, Ouyang L, O'Keefe C, Pachocki J, Paino A, Palermo J, Pantuliano A, Parascandolo G, Parish J, Parparita E, Passos A, Pavlov M, Peng A, Perelman A, Peres FAB, Petrov M, Pinto HPO, Pokorny M, Pokrass M, Pong VH, Powell T, Power A, Power B, Proehl E, Puri R, Radford A, Rae J, Ramesh A, Raymond C, Real F, Rimbach K, Ross C, Rotsted B, Roussez H, Ryder N, Saltarelli M, Sanders T, Santurkar S, Sastry G, Schmidt H, Schnurr D, Schulman J, Selsam D, Sheppard K, Sherbakov T, Shieh J, Shoker S, Shyam P, Sidor S, Sigler E, Simens M, Sitkin J, Slama K, Sohl I, Sokolowsky B, Song Y, Staudacher N, Such FP, Summers N, Sutskever I, Tang J, Tezak N, Thompson MB, Tillet P, Tootoonchian A, Tseng E, Tuggle P, Turley N, Tworek J, Uribe JFC, Vallone A, Vijayvergiya A, Voss C, Wainwright C, Wang JJ, Wang A, Wang B, Ward J, Wei J, Weinmann CJ, Welihinda A, Welinder P, Weng J, Weng L, Wiethoff M, Willner D, Winter C, Wolrich S, Wong H, Workman L, Wu S, Wu J, Wu M, Xiao K, Xu T, Yoo S, Yu K, Yuan Q, Zaremba W, Zellers R, Zhang C, Zhang M, Zhao S, Zheng T, Zhuang J, Zhuk W, Zoph B. GPT-4 technical report. 2023 Preprint. Available from: arXiv:2303.08774. |

| 18. | Rosoł M, Gąsior JS, Łaba J, Korzeniewski K, Młyńczak M. Evaluation of the performance of GPT-3.5 and GPT-4 on the Polish Medical Final Examination. Sci Rep. 2023;13:20512. |

| 19. | Takagi S, Watari T, Erabi A, Sakaguchi K. Performance of GPT-3.5 and GPT-4 on the Japanese Medical Licensing Examination: Comparison Study. JMIR Med Educ. 2023;9:e48002. |

| 20. | Martin P, Nguyen MH, Dieterich DT, Lau DT, Janssen HLA, Peters MG, Jacobson IM. Treatment Algorithm for Managing Chronic Hepatitis B Virus Infection in the United States: 2021 Update. Clin Gastroenterol Hepatol. 2022;20:1766-1775. |

| 21. | You H, Wang F, Li T, Xu X, Sun Y, Nan Y, Wang G, Hou J, Duan Z, Wei L, Jia J, Zhuang H; Chinese Society of Hepatology, Chinese Medical Association; Chinese Society of Infectious Diseases, Chinese Medical Association. Guidelines for the Prevention and Treatment of Chronic Hepatitis B (version 2022). J Clin Transl Hepatol. 2023;11:1425-1442. |

| 22. | Lahat A, Shachar E, Avidan B, Glicksberg B, Klang E. Evaluating the Utility of a Large Language Model in Answering Common Patients' Gastrointestinal Health-Related Questions: Are We There Yet? Diagnostics (Basel). 2023;13:1950. |

| 23. | Rasmussen MLR, Larsen AC, Subhi Y, Potapenko I. Artificial intelligence-based ChatGPT chatbot responses for patient and parent questions on vernal keratoconjunctivitis. Graefes Arch Clin Exp Ophthalmol. 2023;261:3041-3043. |

| 24. | Onder CE, Koc G, Gokbulut P, Taskaldiran I, Kuskonmaz SM. Evaluation of the reliability and readability of ChatGPT-4 responses regarding hypothyroidism during pregnancy. Sci Rep. 2024;14:243. |