Published online Jun 7, 2025. doi: 10.3748/wjg.v31.i21.107601

Revised: April 14, 2025

Accepted: May 19, 2025

Published online: June 7, 2025

Processing time: 68 Days and 22.2 Hours

Video capsule endoscopy (VCE) is a noninvasive technique used to examine small bowel abnormalities in both adults and children. However, manual review of VCE images is time-consuming and labor-intensive, making it crucial to develop deep learning methods to assist in image analysis.

To employ deep learning models for the automatic classification of small bowel lesions using pediatric VCE images.

We retrospectively analyzed VCE images from 162 pediatric patients who underwent VCE between January 2021 and December 2023.

Abdominal pain was the most common indication for VCE, accounting for 62% of cases, followed by diarrhea, gastrointestinal bleeding, and vomiting. Abnormal lesions were detected in 93 children, 38 of whom were diagnosed with inflammatory bowel disease. Among the deep learning models, DenseNet121 and ResNet50 demonstrated excellent classification performance, achieving accuracies of 90.6% [95% confidence interval (CI): 89.2-92.0] and 90.5% (95%CI: 89.9-91.2), respectively. The AU-ROC values for these models were 93.7% (95%CI: 92.9-94.5) for DenseNet121 and 93.4% (95%CI: 93.1-93.8) for ResNet50.

The deep learning-based diagnostic tool developed in this study effectively classified lesions in pediatric VCE images and may contribute to more accurate and efficient diagnosis.

Core Tip: This study addresses the challenges clinicians face in manually reviewing video capsule endoscopy (VCE) images, a process that is both time-consuming and labor-intensive. To alleviate this burden, we used deep learning models, including DenseNet121, Visual geometry group-16, ResNet50, and vision transformer, to automatically classify small bowel lesions in pediatric VCE images. Our models distinguished between normal tissue, erosions/erythema, ulcers, and polyps with high accuracy. This approach could enhance the efficiency and accuracy of diagnosing lesions in pediatric VCE, offering a promising tool for clinical applications.

- Citation: Huang YH, Lin Q, Jin XY, Chou CY, Wei JJ, Xing J, Guo HM, Liu ZF, Lu Y. Classification of pediatric video capsule endoscopy images for small bowel abnormalities using deep learning models. World J Gastroenterol 2025; 31(21): 107601

- URL: https://www.wjgnet.com/1007-9327/full/v31/i21/107601.htm

- DOI: https://dx.doi.org/10.3748/wjg.v31.i21.107601

Video capsule endoscopy (VCE) is a noninvasive method that provides detailed visualization of the intestinal mucosa. Since its introduction in 2000, VCE has become a crucial diagnostic technology because, unlike other imaging procedures, it can thoroughly inspect the entire intestine without causing pain, requiring anesthesia, using air insufflation, or emitting radiation[1,2]. This innovation marked a significant milestone in the field of endoscopy[3]. The Food and Drug Administration approved VCE as a diagnostic option for adults in 2001, for pediatric patients aged ten years and above in 2003, and for children over the age of two in 2009[4]. Recently, some studies have reported that VCE can be performed safely in children younger than one year or weighing less than eight kg[5,6]. After the patient swallows the capsule, it travels through the gastrointestinal (GI) tract from the mouth to the anus without causing discomfort, transmitting images as it goes[7,8]. Adult and pediatric patients undergo VCE for similar indications, such as obscure GI bleeding, tumors, iron deficiency anemia, investigation of inflammatory bowel disease (IBD), inherited polyp syndromes, or assessment of disease extent[9,10]. However, because of the small bowel’s length and location, VCE records for six to eight hours or more, producing over 50000 images per examination[11]. Clinicians must review these images sequentially, frame by frame, to identify any abnormalities[12]. Even experienced gastroenterologists may require two hours to examine all the images precisely and detect lesions[13].

Therefore, deep learning methods for automated lesion detection are needed to improve the diagnostic accuracy and efficiency of VCE review[14-17]. Applications of artificial intelligence (AI) to VCE have been well studied, and deep learning has achieved accuracies of at least 90% in detecting GI bleeding, angioectasias, esophageal lesions, and small bowel mucosal ulcers[18-23]. Various AI-based models for automating GI disease diagnosis from VCE images have recently been reported. Fan et al[24] collected small bowel images from 104 patients and used the AlexNet model to detect ulcers and erosions with an accuracy of 95.16%. Tsuboi et al[25] developed a convolutional neural network (CNN)-based model for detecting angioectasia; trained on 2337 images, it achieved a sensitivity of 98.8% and a specificity of 98.4%. Hwang et al[26] trained a Visual geometry group (VGG) Net model to classify hemorrhagic and ulcerative lesions, achieving an overall accuracy of 96.83%. Saito et al[27] used 30584 images from 292 patients to train a CNN model to identify protruding lesions, such as polyps, nodules, epithelial tumors, submucosal tumors, and venous structures, with an accuracy of 98.6%. Ding et al[28] collected 158235 images from 1970 patients to develop a CNN-based model that classifies ten types of lesions: inflammation, ulcers, polyps, lymphangiectasia, bleeding, vascular disease, protruding lesions, lymphatic follicular hyperplasia, diverticulum, and parasites. The model achieved a sensitivity of 99.90% and a specificity of 100% for the AI-assisted group.

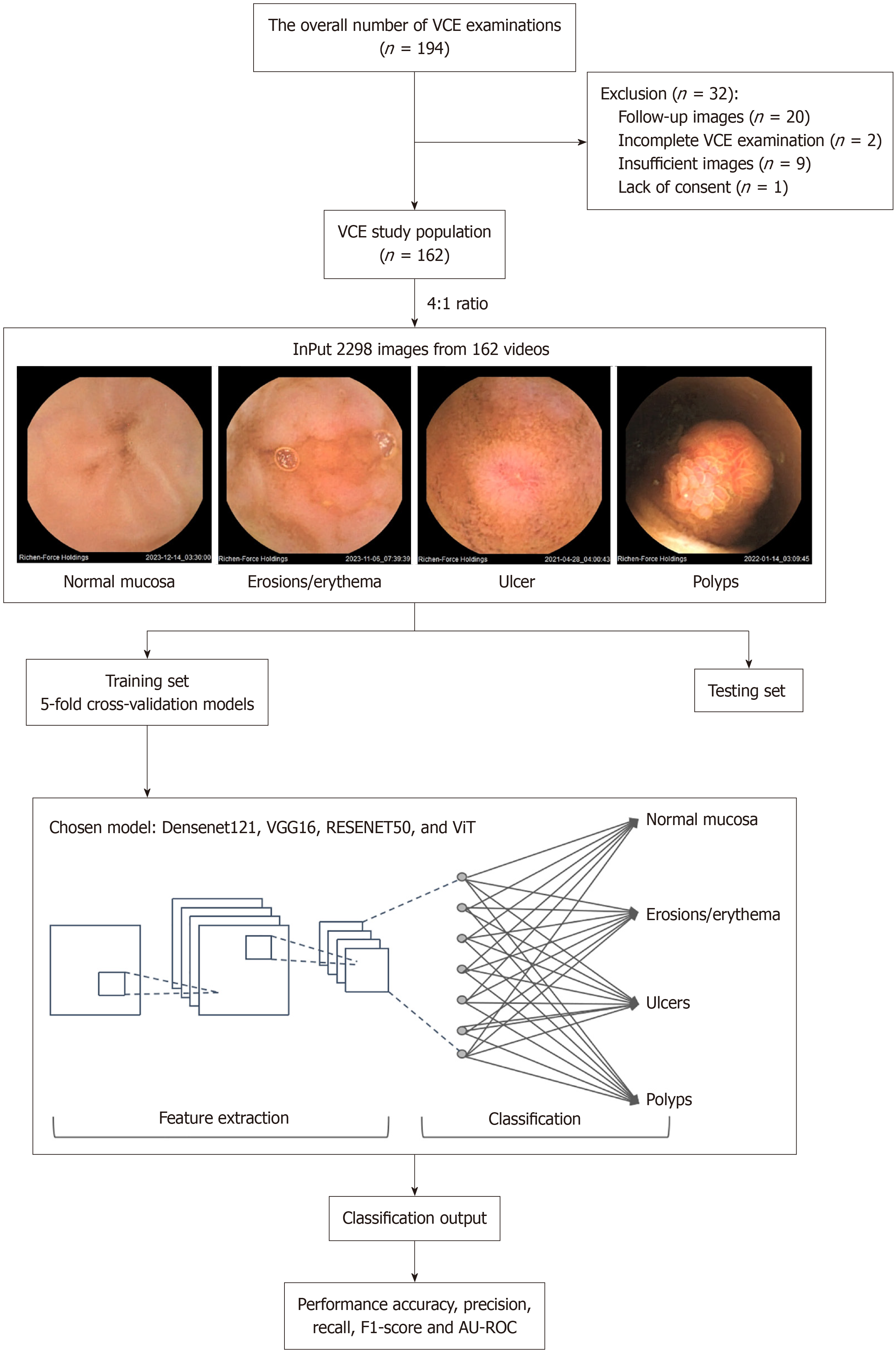

Nevertheless, to our knowledge, all previous studies have built VCE machine-learning models for adults. Compared with adults, children have smaller and more delicate GI tracts, which can affect the capsule’s navigation and the interpretation of images. A model trained on adult VCE images may therefore fail to identify lesions accurately when applied to pediatric VCE images, and machine-learning models trained on pediatric VCE data are needed to ensure that the algorithms are accurate and relevant for this population. The present study therefore applies deep learning techniques to pediatric VCE images to detect and classify small bowel mucosa as normal mucosa, erosions/erythema, ulcers, or polyps.

This single-center, retrospective study was conducted at the Children’s Hospital of Nanjing Medical University (Nanjing, Jiangsu Province, China). Participants had suspected small bowel disorders and had already undergone other examinations, such as endoscopy and panendoscopy. The study included patients who underwent VCE in the Department of Gastroenterology between January 2021 and December 2023. The study was approved by the Hospital’s Institutional Review Board (No. 202409001-1). Written informed consent was obtained from all patients or their legal guardians before the VCE examinations.

Participants aged 2 to 18 years were eligible if VCE was recommended to investigate suspected Crohn’s disease (CD), obscure GI bleeding, polyps, tumors, or other conditions. VCE was also recommended after negative gastroscopy and ileocolonoscopy results, in accordance with the guidelines of the American and European endoscopic societies and the European Society of Pediatric Gastroenterology, Hepatology, and Nutrition[4,8,29]. Children with contraindications, such as suspected obstruction, bowel stricture, bowel fistula, or allergy to the capsule materials, were excluded.

Two days before the VCE, the children were placed on a liquid diet. The day before the examination, they were given polyethylene glycol electrolyte solution orally. On the examination day, they were required to fast, abstaining from solid foods for 12 hours and liquids for 4 hours prior to the procedure. All VCE procedures were performed using MiroCam® small bowel capsules (IntroMedic, Seoul, South Korea) and reviewed using the MiroView™ Reader software version 2.5 (IntroMedic, Seoul, South Korea).

One hour after the capsule was swallowed, the gastroenterologist checked the images transmitted in real time to verify that the capsule had reached the duodenum. Patients were allowed to drink water after 2 hours and to eat a small amount of solid food after 4 hours. If a child had difficulty swallowing the capsule, or if the capsule had not reached the duodenum within two hours, it was placed in the duodenum via gastroscopy under anesthesia. Patients resumed their normal diet once the capsule was excreted, typically within 24 to 72 hours, or at the end of the examination. An abdominal X-ray was recommended two weeks after VCE if the video did not show the capsule passing into the colon or if the patient did not observe its natural passage. The MiroCam® VCE device captures continuous video composed of 320 × 320-pixel frames, which were compressed and stored in JPG format. Representative frames were extracted from the video stream using the MiroView® clinical review platform, and gastroenterology fellows labeled the extracted images.

The dataset comprises 2298 images from 162 pediatric patients who underwent VCE. Of these patients, 93 had positive findings indicative of GI abnormalities, and 69 had no abnormal findings and served as the control group. The control patients were diagnosed with conditions unrelated to mucosal pathology, such as disorders of gut-brain interaction, and expert endoscopists confirmed that their VCE images showed normal mucosa.

All images were categorized into four classes: Normal mucosa, erosions/erythema, ulcers, and polyps. The images were randomly divided into a training set and a testing set at a 4:1 ratio, yielding 1840 training images and 458 testing images, with no overlap between the two sets. The training set contained 916 images of normal mucosa, 272 of erosions/erythema, 151 of ulcers, and 501 of polyps; the testing set contained 228, 68, 37, and 125 images of these classes, respectively. The data distribution is shown in Table 1.

| Class | Training set | Testing set | Total |
|---|---|---|---|
| Normal mucosa | 916 | 228 | 1144 |
| Erosions/erythema | 272 | 68 | 340 |
| Ulcers | 151 | 37 | 188 |
| Polyps | 501 | 125 | 626 |
| Total images | 1840 | 458 | 2298 |
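The paper states only that the images were divided at random at a 4:1 ratio. The minimal sketch below shows one way to do this, assuming the split was made separately within each class and that each class's test share was rounded down (both are assumptions, not stated in the paper). Under those assumptions it reproduces the per-class counts in Table 1. The class keys and the `split_per_class` helper are hypothetical, and the shuffled integers stand in for image identifiers.

```python
import random

# Image counts per class, taken from the "Total" column of Table 1.
CLASS_TOTALS = {
    "normal_mucosa": 1144,
    "erosions_erythema": 340,
    "ulcers": 188,
    "polyps": 626,
}


def split_per_class(totals, train_parts=4, test_parts=1, seed=0):
    """Randomly split image indices within each class at train_parts:test_parts.

    The test share is rounded down with integer division, so a 4:1 ratio
    sends n // 5 of a class's n images to the test set.
    """
    rng = random.Random(seed)
    splits = {}
    for label, n in totals.items():
        indices = list(range(n))            # hypothetical per-class image IDs
        rng.shuffle(indices)
        n_test = n * test_parts // (train_parts + test_parts)
        splits[label] = {"train": indices[n_test:], "test": indices[:n_test]}
    return splits


splits = split_per_class(CLASS_TOTALS)
for label, parts in splits.items():
    print(label, len(parts["train"]), len(parts["test"]))
print("total",
      sum(len(p["train"]) for p in splits.values()),
      sum(len(p["test"]) for p in splits.values()))
```

Running it prints 916/228, 272/68, 151/37, and 501/125 for the four classes and 1840/458 overall. Because it splits individual images, frames from the same patient can fall into both sets; grouping images by patient before splitting is a stricter alternative.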

For this study, four deep learning models [DenseNet121, VGG16, ResNet50, and vision transformer (ViT)] were developed to classify the VCE images captured by the MiroCam® small bowel capsule into four categories: Normal mucosa, erosions/erythema, ulcers, and polyps (Figure 1). All four models are pre-trained models that use transfer learning to optimize their performance. VGG16 is characterized by thirteen convolutional layers, three fully connected layers, and the use of 3 × 3 filters with max pooling[30]. ResNet50 is a 50-layer deep CNN architecture whose residual connections enable effective training of very deep networks[31]. DenseNet121, another CNN architecture, employs dense connectivity between its 121 layers, facilitating feature reuse and parameter efficiency[32]. ViT has emerged as a transformative approach to image classification: it applies the transformer architecture, traditionally used in natural language processing, to images and, when trained on large-scale datasets, can surpass CNNs[33]. The original ViT framework incorporates patch embedding, position embedding, transformer encoder blocks, a class token, and a classification head[34]. ViT is usually trained on a primary dataset of approximately 14 million images, whereas medical datasets are generally much smaller (approximately 500 images)[35]. We therefore adapted ViT using two modules proposed by Lee et al[36], shifted patch tokenization (SPT) and locality self-attention (LSA), as described in their compact transformers framework. SPT improves the model’s ability to capture local contextual information by concatenating the input image with spatially shifted copies of itself before patch tokenization, thus preserving fine-grained features typically lost in conventional ViT. LSA sharpens the attention distribution by masking each token’s attention to itself and replacing the fixed softmax scaling with a learnable temperature, so that attention concentrates on the most relevant tokens; this is especially beneficial when training from scratch on limited data. Together, these modules enhance the model’s ability to capture local features and enable effective training of ViT from scratch on smaller datasets, which improved feature extraction and model performance on our pediatric capsule endoscopy dataset. All experiments were conducted on a system with a 3.0 GHz Intel® Xeon® Gold 5317 processor and two NVIDIA GeForce RTX 4090® graphics cards.
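The two ViT modules described above can be illustrated with a small NumPy sketch. It follows our reading of the design in Lee et al[36]: SPT concatenates the image with four copies shifted diagonally by half a patch before patch partitioning, and LSA masks the diagonal of the attention matrix and divides the scores by a learnable temperature. It omits the learned patch projection, layer normalization, and multi-head structure. The function names, the 32 × 32 toy image, and the random projection matrices are hypothetical, and the temperature is fixed at √d here rather than learned.

```python
import numpy as np


def shift_image(img, dy, dx):
    """Translate an (H, W, C) image by (dy, dx) pixels, zero-filling vacated pixels."""
    h, w, _ = img.shape
    out = np.zeros_like(img)
    src_y = slice(max(0, -dy), h - max(0, dy))
    src_x = slice(max(0, -dx), w - max(0, dx))
    dst_y = slice(max(0, dy), h - max(0, -dy))
    dst_x = slice(max(0, dx), w - max(0, -dx))
    out[dst_y, dst_x] = img[src_y, src_x]
    return out


def shifted_patch_tokens(img, patch):
    """SPT: stack the image with four diagonal half-patch shifts, then flatten patches."""
    s = patch // 2
    shifted = [shift_image(img, dy, dx) for dy, dx in [(-s, -s), (-s, s), (s, -s), (s, s)]]
    stacked = np.concatenate([img] + shifted, axis=-1)      # (H, W, 5C)
    h, w, c = stacked.shape
    grid = stacked.reshape(h // patch, patch, w // patch, patch, c)
    # (rows, cols, patch, patch, 5C) -> one flattened vector per patch
    return grid.transpose(0, 2, 1, 3, 4).reshape(-1, patch * patch * c)


def locality_self_attention(q, k, v, temperature):
    """Single-head LSA: diagonal-masked attention scaled by a temperature."""
    scores = q @ k.T / temperature
    np.fill_diagonal(scores, -np.inf)                       # no token attends to itself
    scores = scores - scores.max(axis=-1, keepdims=True)    # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))                             # toy stand-in for a VCE frame
tokens = shifted_patch_tokens(image, patch=8)               # 16 patches of 8*8*15 values
d = 16
w_q, w_k, w_v = (rng.standard_normal((tokens.shape[1], d)) for _ in range(3))
out, attn = locality_self_attention(tokens @ w_q, tokens @ w_k, tokens @ w_v,
                                    temperature=np.sqrt(d))
print(tokens.shape, out.shape)   # (16, 960) (16, 16)
```

Masking the diagonal stops each token from putting most of its attention on itself, which is the failure mode LSA targets on small datasets; a trainable implementation would make `temperature` a learned parameter.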

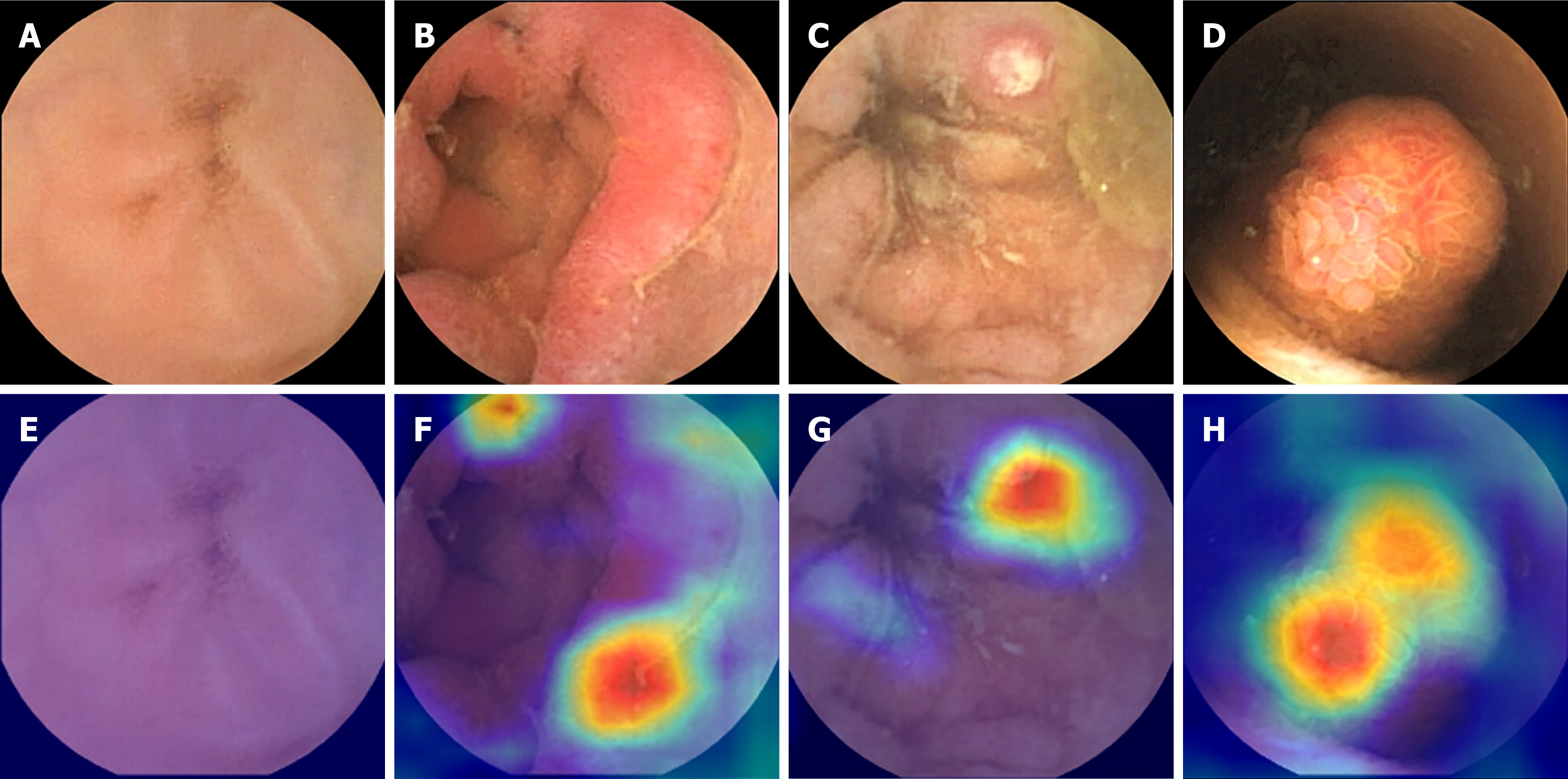

Table 2 shows the hyperparameters used for each deep learning model. All models were trained using a 5-fold cross-validation strategy to obtain robust performance estimates and reduce the risk of overfitting. For the ViT model, we used 300 training epochs, a batch size of 16, a learning rate of 0.006, and the stochastic gradient descent (SGD) optimizer, along with a dropout rate of 0.3 and transfer learning from pretrained weights. The VGG16, ResNet50, and DenseNet121 models were trained for 100 epochs with a batch size of 32 and a learning rate of 0.001, using the same optimizer and regularization settings (weight decay: 4 × 10⁻⁴; momentum: 0.8). To further address potential overfitting and class imbalance, we applied extensive data augmentation, including horizontal/vertical flipping, color jitter, rotation, and TrivialAugmentWide, with additional augmentation applied to underrepresented classes. Gradient-weighted class activation mapping (Grad-CAM), a widely used explainability method in AI, was employed to visualize abnormal lesion regions in VCE images[37].
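Grad-CAM weights each feature map of a chosen convolutional layer by the spatial average of the class score's gradient with respect to that map, sums the weighted maps, and keeps the positive part. The NumPy sketch below shows only that arithmetic. It assumes the activations and gradients have already been captured from a trained network (for example, through a deep learning framework's autograd), and the `grad_cam` helper, the array shapes, and the nearest-neighbour upsampling are illustrative choices, not the authors' implementation.

```python
import numpy as np


def grad_cam(activations, gradients, out_size):
    """Grad-CAM heat map scaled to [0, 1].

    activations: (K, h, w) feature maps from the chosen convolutional layer.
    gradients:   (K, h, w) gradients of the target class score w.r.t. those maps.
    out_size:    (H, W) of the input frame; assumed to be integer multiples of (h, w).
    """
    alphas = gradients.mean(axis=(1, 2))                # one weight per feature map
    cam = np.tensordot(alphas, activations, axes=1)      # sum_k alpha_k * A_k -> (h, w)
    cam = np.maximum(cam, 0.0)                           # keep positive evidence only
    if cam.max() > 0:
        cam = cam / cam.max()
    fy, fx = out_size[0] // cam.shape[0], out_size[1] // cam.shape[1]
    return np.kron(cam, np.ones((fy, fx)))               # nearest-neighbour upsampling


rng = np.random.default_rng(1)
acts = rng.random((64, 10, 10))             # hypothetical feature maps
grads = rng.standard_normal((64, 10, 10))   # hypothetical class-score gradients
heatmap = grad_cam(acts, grads, out_size=(320, 320))
print(heatmap.shape)  # (320, 320)
```

Published Grad-CAM implementations usually upsample bilinearly and overlay the map on the frame; nearest-neighbour upsampling keeps this sketch dependency-free.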

| Type of hyperparameter | DenseNet121 | VGG16 | ResNet50 | ViT |
|---|---|---|---|---|
| Number of epochs | 100 | 100 | 100 | 300 |
| Batch size | 32 | 32 | 32 | 16 |
| Learning rate | 1 × 10⁻³ | 1 × 10⁻³ | 1 × 10⁻³ | 6 × 10⁻³ |
| Weight decay | 4 × 10⁻⁴ | 4 × 10⁻⁴ | 4 × 10⁻⁴ | 4 × 10⁻⁴ |
| Momentum | 0.8 | 0.8 | 0.8 | 0.8 |
| Optimizer | SGD | SGD | SGD | SGD |
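Read together, the optimizer, learning-rate, momentum, and weight-decay rows of Table 2 define the update sketched below. The sketch assumes the PyTorch-style formulation (weight decay added to the gradient, momentum without dampening or Nesterov); the paper does not name its framework, and the `sgd_momentum_step` helper and the one-parameter toy objective are hypothetical.

```python
def sgd_momentum_step(params, grads, velocity, lr=1e-3, momentum=0.8, weight_decay=4e-4):
    """One SGD update with L2 weight decay and heavy-ball momentum.

    Defaults mirror the CNN columns of Table 2; the ViT column would use lr=6e-3.
    """
    new_params, new_velocity = [], []
    for p, g, v in zip(params, grads, velocity):
        g = g + weight_decay * p      # weight decay adds lambda * p to the gradient
        v = momentum * v + g          # momentum buffer accumulates past gradients
        new_params.append(p - lr * v)
        new_velocity.append(v)
    return new_params, new_velocity


# Toy problem: minimize 0.5 * (p - 3)^2 starting from p = 0.
params, velocity = [0.0], [0.0]
for _ in range(5000):
    grads = [p - 3.0 for p in params]
    params, velocity = sgd_momentum_step(params, grads, velocity)
print(params[0])  # approaches 3 / (1 + 4e-4), the optimum shifted by weight decay
```

Weight decay pulls the solution slightly toward zero, which is why the toy optimum is 3 / 1.0004 rather than exactly 3.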

Clinical data were analyzed using SPSS 27.0 software. Because the Kolmogorov-Smirnov test indicated non-normal distributions, continuous data are presented as medians and interquartile ranges (IQRs). Model performance was analyzed using scikit-learn v1.4.0. The models were evaluated on key performance metrics, including accuracy, precision, recall, F1-score, and area under the receiver operating characteristic curve (AU-ROC). Pairwise comparisons between models were performed using analysis of variance with Tukey’s honest significant difference post-hoc tests, and differences were considered statistically significant at P < 0.05.
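The paper computes these metrics with scikit-learn but does not state how the multi-class scores were averaged. The plain-Python sketch below assumes macro averaging over the four classes and one-vs-rest AU-ROC. It also returns per-class recall, which is one plausible reading of the per-lesion "accuracy" reported in Table 4 (an interpretation, not stated in the paper). The function names, label order, and the six toy predictions are hypothetical.

```python
def confusion_matrix(y_true, y_pred, n_classes):
    """cm[t][p] counts images with true class t predicted as class p."""
    cm = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def macro_metrics(cm):
    """Accuracy, per-class recall, and macro-averaged precision, recall, and F1."""
    n = len(cm)
    accuracy = sum(cm[c][c] for c in range(n)) / sum(map(sum, cm))
    precision, recall, f1 = [], [], []
    for c in range(n):
        tp = cm[c][c]
        predicted = sum(row[c] for row in cm)   # column total
        actual = sum(cm[c])                     # row total
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(2 * p * r / (p + r) if p + r else 0.0)
    return {
        "accuracy": accuracy,
        "per_class_recall": recall,
        "macro_precision": sum(precision) / n,
        "macro_recall": sum(recall) / n,
        "macro_f1": sum(f1) / n,
    }


def ovr_auc(scores, y_true, c):
    """One-vs-rest AU-ROC for class c: the probability that a class-c image gets a
    higher class-c score than a non-class-c image (ties count one half)."""
    pos = [s[c] for s, t in zip(scores, y_true) if t == c]
    neg = [s[c] for s, t in zip(scores, y_true) if t != c]
    wins = sum((a > b) + 0.5 * (a == b) for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels: 0 normal, 1 erosions/erythema, 2 ulcers, 3 polyps.
y_true = [0, 0, 1, 1, 2, 3]
y_pred = [0, 0, 1, 0, 2, 3]
scores = [                       # hypothetical softmax outputs, one row per image
    [0.9, 0.05, 0.03, 0.02],
    [0.5, 0.45, 0.03, 0.02],
    [0.2, 0.7, 0.05, 0.05],
    [0.5, 0.4, 0.05, 0.05],
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]
print(macro_metrics(confusion_matrix(y_true, y_pred, 4)))
print([ovr_auc(scores, y_true, c) for c in range(4)])
```

In scikit-learn, the corresponding calls would be `precision_recall_fscore_support(..., average="macro")` and `roc_auc_score(..., multi_class="ovr")`.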

The demographic and baseline clinical characteristics of the patients are shown in Table 3.

| Patient characteristics | n (%) or median (IQR) |
|---|---|
| Gender | |
| Female | 56 (35) |
| Male | 106 (65) |
| Age (year), median (IQR) | 11.00 (9.00, 13.00) |
| Chief complaint | |
| Abdominal pain | 101 (62) |
| Diarrhea | 31 (19) |
| Anemia | 2 (1.2) |
| Hematochezia | 18 (11) |
| Vomiting | 13 (8.0) |
| Fever | 8 (4.9) |
| Oral ulceration | 4 (2.5) |
| Mucocutaneous hyperpigmentation of the mouth and lips | 5 (3.1) |
| Perianal abscess | 10 (6.2) |
| Capsule swallowed by the patient | 137 (85) |
| Capsule placed by endoscopy | 25 (15) |
| Stomach transit time (minute), median (IQR) | 22 (5, 55) |
| Small bowel transit time (minute), median (IQR) | 282 (217, 377) |
| Number of lesions per patient | |
| None | 69 (43) |
| Single | 33 (20) |
| Multiple | 60 (37) |
| Diagnosis | |
| Crohn disease | 36 (22) |
| Ulcerative colitis | 2 (1.2) |
| Suspected inflammatory bowel disease | 30 (19) |
| Behcet disease | 2 (1.2) |
| Disorder of gut-brain interaction | 31 (19) |
| Polyps | 12 (7.4) |
| Gastroenteritis | 35 (22) |
| Others | 14 (8.6) |

This study included 162 patients, 56 females (35%) and 106 males (65%), with a median age of 11 years (IQR: 9-13 years). The most common chief complaint was abdominal pain, reported by 101 patients (62%), followed by diarrhea in 31 patients (19%), hematochezia in 18 (11%), vomiting in 13 (8.0%), and perianal abscess in 10 (6.2%). Other symptoms included fever in 8 patients (4.9%), mucocutaneous hyperpigmentation of the mouth and lips in 5 (3.1%), oral ulceration in 4 (2.5%), and anemia in 2 (1.2%). Most patients (137, 85%) swallowed the capsule themselves, and in 25 patients (15%) the capsule was placed in the duodenum via gastroscopy. The median gastric transit time was 22 (IQR: 5-55) minutes, and the median small bowel transit time was 282 (IQR: 217-377) minutes. Of the 162 patients, 93 (57%) had positive findings on VCE: 33 (20%) had a single lesion, and 60 (37%) had multiple lesions. Regarding final diagnoses, 36 patients (22%) had CD, 2 (1.2%) had ulcerative colitis (UC), 30 (19%) had suspected IBD, 2 (1.2%) had Behcet disease, 31 (19%) had a disorder of gut-brain interaction, 12 (7.4%) had polyps, 35 (22%) had gastroenteritis, and 14 (8.6%) had other conditions.

Table 4 presents the overall accuracy and the per-lesion detection accuracies, with 95% confidence intervals (CI), for the four deep learning models: DenseNet121, VGG16, ResNet50, and ViT. The overall accuracy for DenseNet121, VGG16, ResNet50, and ViT was 90.6% (95%CI: 89.2-92.0), 88.3% (87.9-88.8), 90.5% (89.9-91.1), and 88.1% (86.7-89.6), respectively. The accuracy in detecting normal mucosa for the same four models was 98.6% (96.0-100), 92.2% (89.7-94.6), 98.1% (96.8-99.4), and 93.2% (88.5-97.9), respectively. The accuracy in detecting ulcers was 83.3% (75.6-91.1), 91.6% (89.9-93.3), 87.0% (82.3-91.7), and 87.4% (77.6-97.3), respectively. The accuracy in detecting erosions and erythema was 81.9% (74.2-89.6), 72.1% (63.6-80.6), 77.3% (72.4-82.2), and 73.0% (61.3-84.8), respectively. The accuracy in detecting polyps was 100% (100-100), 75.0% (66.8-83.2), 100% (100-100), and 87.9% (76.3-99.5), respectively.

| Model | Overall accuracy (%) (95%CI) | Normal mucosa (%) (95%CI) | Ulcers (%) (95%CI) | Erosions/erythema (%) (95%CI) | Polyps (%) (95%CI) |
|---|---|---|---|---|---|
| DenseNet121 | 90.6 (89.2-92.0) | 98.6 (96.0-100) | 83.3 (75.6-91.1) | 81.9 (74.2-89.6) | 100 (100-100) |
| VGG16 | 88.3 (87.9-88.8) | 92.2 (89.7-94.6) | 91.6 (89.9-93.3) | 72.1 (63.6-80.6) | 75.0 (66.8-83.2) |
| ResNet50 | 90.5 (89.9-91.1) | 98.1 (96.8-99.4) | 87.0 (82.3-91.7) | 77.3 (72.4-82.2) | 100 (100-100) |
| ViT | 88.1 (86.7-89.6) | 93.2 (88.5-97.9) | 87.4 (77.6-97.3) | 73.0 (61.3-84.8) | 87.9 (76.3-99.5) |

Five-fold cross-validation was performed during training. The performance of the four deep learning models was evaluated using accuracy, precision, recall, F1-score, and AU-ROC with 95%CIs. The DenseNet121 model achieved an accuracy of 90.6% (89.2-92.0), a precision of 91.8% (89.6-94.0), a recall of 91.0% (89.8-92.1), an F1-score of 91.2% (89.4-92.9), and an AU-ROC of 93.7% (92.9-94.5). The ResNet50 model achieved an accuracy of 90.5% (89.9-91.2), a precision of 92.5% (91.6-93.3), a recall of 90.7% (90.0-91.3), an F1-score of 91.3% (90.7-91.9), and an AU-ROC of 93.4% (93.1-93.8). The VGG16 model showed an accuracy of 88.3% (87.9-88.8), a precision of 83.0% (80.7-85.3), a recall of 82.8% (81.0-84.6), an F1-score of 82.6% (81.9-83.3), and an AU-ROC of 89.2% (88.1-90.3). The ViT model yielded an accuracy of 88.1% (86.7-89.6), a precision of 84.4% (78.5-90.3), a recall of 85.4% (81.0-89.7), an F1-score of 84.6% (80.0-89.2), and an AU-ROC of 90.4% (88.1-92.7). Table 5 provides the mean performance metrics for each model along with their 95%CIs. Figure 2 shows the Grad-CAMs of the four models with the corresponding VCE images.

| Model | Accuracy (%) (95%CI) | Precision (%) (95%CI) | Recall (%) (95%CI) | F1-score (%) (95%CI) | AU-ROC (%) (95%CI) |
|---|---|---|---|---|---|
| DenseNet121 | 90.6 (89.2-92.0) | 91.8 (89.6-94.0) | 91.0 (89.8-92.1) | 91.2 (89.4-92.9) | 93.7 (92.9-94.5) |
| VGG16 | 88.3 (87.9-88.8) | 83.0 (80.7-85.3) | 82.8 (81.0-84.6) | 82.6 (81.9-83.3) | 89.2 (88.1-90.3) |
| ResNet50 | 90.5 (89.9-91.2) | 92.5 (91.6-93.3) | 90.7 (90.0-91.3) | 91.3 (90.7-91.9) | 93.4 (93.1-93.8) |
| ViT | 88.1 (86.7-89.6) | 84.4 (78.5-90.3) | 85.4 (81.0-89.7) | 84.6 (80.0-89.2) | 90.4 (88.1-92.7) |
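The paper reports 95%CIs over 5-fold cross-validation but does not state how they were computed. A common choice, assumed here, is a t-based interval over the five per-fold scores: mean ± t × SD / √5, with t ≈ 2.776 for 4 degrees of freedom. The sketch below also shows an unstratified fold split. The helpers and the fold scores in the example are hypothetical and are not the study's results.

```python
import random
import statistics

T_975_DF4 = 2.776445  # two-sided 95% Student t critical value, 4 degrees of freedom


def k_fold_indices(n, k=5, seed=0):
    """Yield (train, validation) index lists for k shuffled, near-equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def mean_ci(fold_scores, t_crit=T_975_DF4):
    """Mean and t-based 95% CI of one metric across folds (t_crit assumes 5 folds)."""
    mean = statistics.mean(fold_scores)
    half = t_crit * statistics.stdev(fold_scores) / len(fold_scores) ** 0.5
    return mean, mean - half, mean + half


sizes = [len(val) for _, val in k_fold_indices(1840)]
print(sizes)  # [368, 368, 368, 368, 368]
hypothetical_scores = [0.90, 0.91, 0.89, 0.92, 0.90]  # illustrative only
print(mean_ci(hypothetical_scores))
```

A stratified variant would build the folds separately within each class so that every fold keeps the class proportions shown in Table 1.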

Furthermore, Table 6 presents the results of pairwise comparisons between models for each metric. These results show that DenseNet121 significantly outperformed VGG16 and ViT across all five evaluation metrics (P < 0.05). Meanwhile, ResNet50 also showed statistically significant superiority over VGG16 and ViT (P < 0.05). However, no statistically significant difference was observed between DenseNet121 and ResNet50 (P > 0.05 across metrics), suggesting their performances are comparable.

| Evaluated metric (P value) | DenseNet121 vs VGG16 | DenseNet121 vs ResNet50 | DenseNet121 vs ViT | VGG16 vs ResNet50 | VGG16 vs ViT | ResNet50 vs ViT |
|---|---|---|---|---|---|---|
| Accuracy | 0.004 | 0.999 | 0.002 | 0.006 | 0.978 | 0.003 |
| Precision | 0.001 | 0.981 | 0.003 | 0.001 | 0.85 | 0.001 |
| Recall | 0.001 | 0.995 | 0.002 | 0.001 | 0.212 | 0.003 |
| F1-score | 0.001 | 0.999 | 0.001 | 0.001 | 0.421 | 0.001 |
| AU-ROC | 0.001 | 0.984 | 0.001 | 0.001 | 0.304 | 0.002 |
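The P values in Table 6 come from analysis of variance with Tukey's HSD post-hoc tests. Assuming the compared observations were per-fold scores for each model (the paper does not say), the test statistics can be computed as sketched below in plain Python. Converting them to P values requires the F and studentized range distributions, which SciPy provides (for example, `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd`). The helper names and the toy groups are hypothetical.

```python
import statistics


def one_way_anova_f(groups):
    """F statistic and degrees of freedom for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - statistics.mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def tukey_q(groups, i, j):
    """Studentized range statistic for groups i and j (equal group sizes assumed)."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    ms_within = sum((x - statistics.mean(g)) ** 2 for g in groups for x in g) / (n_total - k)
    n = len(groups[i])
    return abs(statistics.mean(groups[i]) - statistics.mean(groups[j])) / (ms_within / n) ** 0.5


toy = [[1, 2, 3], [4, 5, 6]]
print(one_way_anova_f(toy))   # (13.5, 1, 4)
print(tukey_q(toy, 0, 1))     # about 5.196, i.e. 3 * sqrt(3)
```

With four models and five folds each, the groups would be four lists of five scores, giving 3 and 16 degrees of freedom for the F test.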

Table 7 shows the accuracy, precision, recall, F1-score, and AU-ROC of the deep learning models on the testing set. The ResNet50 model achieved the highest accuracy (89.7%), along with a precision of 87.8%, a recall of 81.0%, an F1-score of 83.8%, and an AU-ROC of 88.5%, indicating strong overall performance. The DenseNet121 model followed closely, with an accuracy of 88.6%, a precision of 87.5%, a recall of 79.0%, an F1-score of 82.5%, and an AU-ROC of 87.1%. The ViT model also performed well, with an accuracy of 86.6%, a precision of 81.3%, a recall of 80.0%, an F1-score of 80.5%, and an AU-ROC of 87.5%. Finally, the VGG16 model had the lowest accuracy, recall, F1-score, and AU-ROC, with an accuracy of 85.5%, a precision of 87.0%, a recall of 73.3%, an F1-score of 77.3%, and an AU-ROC of 83.6%.

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) | AU-ROC (%) |
|---|---|---|---|---|---|
| DenseNet121 | 88.6 | 87.5 | 79.0 | 82.5 | 87.1 |
| VGG16 | 85.5 | 87.0 | 73.3 | 77.3 | 83.6 |
| ResNet50 | 89.7 | 87.8 | 81.0 | 83.8 | 88.5 |
| ViT | 86.6 | 81.3 | 80.0 | 80.5 | 87.5 |

AI is becoming increasingly prevalent across industries, including medicine, owing to its robust feature extraction and classification capabilities in medical imaging, particularly for VCE[38]. Compared with conventional methods, VCE offers radiation-free imaging, non-invasiveness, patient comfort, and high tolerance, making it an indispensable tool in a pediatrician’s arsenal. When coupled with AI, VCE image and video analysis can be accelerated, leading to faster diagnosis while standardizing diagnostic criteria. Deep learning studies using VCE images have shown promising results in detecting lesions and abnormalities in the small bowel[26]. While various methods have been explored for identifying bleeding, polyps, ulcers, and other conditions in adults[39,40], the full potential of AI in pediatric VCE has yet to be realized. To the best of our knowledge, no previous research has applied deep learning to classify normal mucosa, erosions/erythema, ulcers, and polyps in pediatric VCE images. This study examined VCE images from pediatric patients admitted to our hospital between 2021 and 2023. Our models achieved high overall accuracy in classifying these lesion types, which could support faster yet accurate diagnoses and, ultimately, better care for children.

This study applied four deep learning methods to VCE image classification: the classic CNN architectures DenseNet121, VGG16, and ResNet50, and the more recent ViT model. We used these models to classify VCE images into four categories: Normal, erosions/erythema, ulcers, and polyps. All four models performed well overall: in cross-validation, DenseNet121 and ResNet50 achieved an accuracy of approximately 90%, and VGG16 and ViT approximately 88%. Recent studies have explored transformers for analyzing and interpreting VCE images in adults[41,42], but their application in pediatric settings remains unexplored. Although the models showed excellent overall classification performance, accuracy varied across lesion types, as shown in Table 4. The models identified normal mucosa with exceptionally high accuracy (92% to 99%). Polyp detection accuracy, by contrast, ranged widely from 75% to 100%, with VGG16 performing worst of the four, possibly because its performance is less balanced on smaller datasets. Ulcer detection accuracy was relatively high (83% to 92%), whereas erosions and erythema had the lowest accuracy of all categories (72% to 82%). The lower accuracy for erosions and erythema might be attributed to several factors: (1) These lesions often exhibit subtle visual features that may resemble normal mucosa or early-stage ulcers, making them more difficult to distinguish; (2) Erosions and erythema can vary significantly in size and shape, which might contribute to inconsistent detection; (3) The features surrounding erosions and erythema might be more complex, making the lesions harder to recognize; and (4) Poor image quality and limited training data may also reduce recognition accuracy.

AI can help physicians diagnose and manage diseases accurately and efficiently. The healthcare system is heavily burdened by severe diseases that could have been prevented with early detection. Patients with cancers such as breast, colorectal, and lung cancer have benefited from early detection, which leads to better prognoses and better quality of life because management tends to be less strenuous in the early stages. On the same basis, using AI to process VCE images and differentiate normal mucosa from lesions such as erosions/erythema, ulcers, and polyps could help diagnose diseases such as IBD, including CD and UC, faster, and could lower the cost of the examination because fewer specialists would be needed to review the same number of images, making the examination more accessible. Malnutrition and anemia can result from various GI diseases and have serious repercussions in children[43-45]. They not only decrease a child’s quality of life but can also cause growth disruption and psychological distress[46-48]. Timely management improves outcomes, and studies have shown that early intervention in CD translates into clinical benefit[49,50]. Walters et al[50] found that early treatment with anti-tumor necrosis factor-α therapy was very effective, with 85.3% of the pediatric patients in their study achieving remission by one year. In addition, as with any operator-dependent examination, the accuracy of VCE image interpretation depends on the reader. AI can help standardize diagnosis by applying uniform criteria to the interpretation of medical data, reducing variability and improving consistency.

Currently, there are limitations to using AI to diagnose diseases. The quality of AI models depends heavily on the datasets on which they are trained, and biases can arise when populations are represented inaccurately (incompletely, in a skewed manner, or without diversity). Our dataset of 2298 VCE images used for model training and testing may not suffice to ensure that these algorithms are suitable for practical clinical use. VCE in particular can be expensive, and children generally undergo such examinations only when clinically necessary, which leads to smaller datasets. Small samples also make performance estimates sensitive to minor discrepancies. For example, in training, DenseNet121 had the highest accuracy (90.6%), followed by ResNet50 (90.5%), whereas in testing, ResNet50 had the highest accuracy (89.7%) and DenseNet121 the second highest (88.6%). Pairwise comparisons showed no significant difference between these two models in training (Table 6), so they can be considered to have performed equally well. ResNet50’s better testing performance could reflect the limited size of the testing set as well as innate differences in how each model responds to the dataset; such fluctuations can be more pronounced in smaller datasets. Similarly, ViT had the lowest training accuracy (88.1%), with VGG16 second to last (88.3%), but in testing ViT outperformed VGG16 (86.6% vs 85.5%); pairwise comparisons of ViT and VGG16 in training showed no significant differences (P > 0.05). Despite these discrepancies between training and testing, the overall trend was maintained: in training, DenseNet121 and ResNet50 performed significantly better than ViT and VGG16 (P < 0.05), as shown in Table 6, and they also achieved the highest accuracies in testing.
Beyond population representation, identifying subtle lesions or distinguishing between lesion types will remain challenging without sufficient training data, because some lesions can closely resemble other lesion types, normal mucosa, or even artifacts such as bubbles or food residue. Therefore, further research is needed to produce clinically viable models. Prospective multi-center VCE research, a prerequisite for integrating this technology into routine clinical workflows, is still notably lacking. In addition, studies have mainly focused on specific VCE systems, limiting the generalizability of the resulting models across platforms. Lastly, our study, like many others, used datasets composed of selected still frames rather than full-length videos, potentially introducing selection bias. Moving forward, it is imperative to develop deep learning models capable of directly analyzing VCE videos, including techniques for video-to-image conversion and classification of abnormal small bowel images by lesion type. Future studies should analyze full VCE videos from diverse medical centers to validate the clinical accuracy of the proposed models before clinical application.

This study employed VGG16, ResNet50, DenseNet121, and ViT models along with Grad-CAM to classify video capsule endoscopy images in children. The four deep learning models differentiated normal tissue, erosions/erythema, ulcers, and polyps with high accuracy. This automated detection approach has the potential to substantially reduce the time required to analyze VCE images and to improve diagnostic accuracy, making it a potentially valuable tool for pediatric gastroenterologists.

1. Iddan G, Meron G, Glukhovsky A, Swain P. Wireless capsule endoscopy. Nature. 2000;405:417.

2. Thomson M, Fritscher-Ravens A, Mylonaki M, Swain P, Eltumi M, Heuschkel R, Murch S, McAlindon M, Furman M. Wireless capsule endoscopy in children: a study to assess diagnostic yield in small bowel disease in paediatric patients. J Pediatr Gastroenterol Nutr. 2007;44:192-197.

3. Cohen SA, Ephrath H, Lewis JD, Klevens A, Bergwerk A, Liu S, Patel D, Reed-Knight B, Stallworth A, Wakhisi T, Gold BD. Pediatric capsule endoscopy: review of the small bowel and patency capsules. J Pediatr Gastroenterol Nutr. 2012;54:409-413.

4. Friedlander JA, Liu QY, Sahn B, Kooros K, Walsh CM, Kramer RE, Lightdale JR, Khlevner J, McOmber M, Kurowski J, Giefer MJ, Pall H, Troendle DM, Utterson EC, Brill H, Zacur GM, Lirio RA, Lerner DG, Reynolds C, Gibbons TE, Wilsey M, Liacouras CA, Fishman DS; Endoscopy Committee. NASPGHAN Capsule Endoscopy Clinical Report. J Pediatr Gastroenterol Nutr. 2017;64:485-494.

5. Jensen MK, Tipnis NA, Bajorunaite R, Sheth MK, Sato TT, Noel RJ. Capsule endoscopy performed across the pediatric age range: indications, incomplete studies, and utility in management of inflammatory bowel disease. Gastrointest Endosc. 2010;72:95-102.

6. Nuutinen H, Kolho KL, Salminen P, Rintala R, Koskenpato J, Koivusalo A, Sipponen T, Färkkilä M. Capsule endoscopy in pediatric patients: technique and results in our first 100 consecutive children. Scand J Gastroenterol. 2011;46:1138-1143.

7. Eliakim R. Video capsule endoscopy of the small bowel. Curr Opin Gastroenterol. 2010;26:129-133.

8. Pennazio M, Spada C, Eliakim R, Keuchel M, May A, Mulder CJ, Rondonotti E, Adler SN, Albert J, Baltes P, Barbaro F, Cellier C, Charton JP, Delvaux M, Despott EJ, Domagk D, Klein A, McAlindon M, Rosa B, Rowse G, Sanders DS, Saurin JC, Sidhu R, Dumonceau JM, Hassan C, Gralnek IM. Small-bowel capsule endoscopy and device-assisted enteroscopy for diagnosis and treatment of small-bowel disorders: European Society of Gastrointestinal Endoscopy (ESGE) Clinical Guideline. Endoscopy. 2015;47:352-376.

9. ASGE Standards of Practice Committee; Lightdale JR, Acosta R, Shergill AK, Chandrasekhara V, Chathadi K, Early D, Evans JA, Fanelli RD, Fisher DA, Fonkalsrud L, Hwang JH, Kashab M, Muthusamy VR, Pasha S, Saltzman JR, Cash BD; American Society for Gastrointestinal Endoscopy. Modifications in endoscopic practice for pediatric patients. Gastrointest Endosc. 2014;79:699-710.

10. Cohen SA, Klevens AI. Use of capsule endoscopy in diagnosis and management of pediatric patients, based on meta-analysis. Clin Gastroenterol Hepatol. 2011;9:490-496.

11. ASGE Technology Committee; Wang A, Banerjee S, Barth BA, Bhat YM, Chauhan S, Gottlieb KT, Konda V, Maple JT, Murad F, Pfau PR, Pleskow DK, Siddiqui UD, Tokar JL, Rodriguez SA. Wireless capsule endoscopy. Gastrointest Endosc. 2013;78:805-815.

12. Wang Q, Pan N, Xiong W, Lu H, Li N, Zou X. Reduction of bubble-like frames using a RSS filter in wireless capsule endoscopy video. Opt Laser Technol. 2019;110:152-157.

13. Oliva S, Cohen SA, Di Nardo G, Gualdi G, Cucchiara S, Casciani E. Capsule endoscopy in pediatrics: a 10-years journey. World J Gastroenterol. 2014;20:16603-16608.

14. Jia X, Xing X, Yuan Y, Xing L, Meng MQ. Wireless Capsule Endoscopy: A New Tool for Cancer Screening in the Colon With Deep-Learning-Based Polyp Recognition. Proc IEEE. 2020;108:178-197.

15. Kim SH, Hwang Y, Oh DJ, Nam JH, Kim KB, Park J, Song HJ, Lim YJ. Efficacy of a comprehensive binary classification model using a deep convolutional neural network for wireless capsule endoscopy. Sci Rep. 2021;11:17479.

16. Kim SH, Lim YJ. Artificial Intelligence in Capsule Endoscopy: A Practical Guide to Its Past and Future Challenges. Diagnostics (Basel). 2021;11.

17. Oh DJ, Hwang Y, Lim YJ. A Current and Newly Proposed Artificial Intelligence Algorithm for Reading Small Bowel Capsule Endoscopy. Diagnostics (Basel). 2021;11.

18. Jia X, Meng MQ. A deep convolutional neural network for bleeding detection in Wireless Capsule Endoscopy images. Annu Int Conf IEEE Eng Med Biol Soc. 2016;2016:639-642.

19. Jia X, Meng MQ. Gastrointestinal bleeding detection in wireless capsule endoscopy images using handcrafted and CNN features. Annu Int Conf IEEE Eng Med Biol Soc. 2017;2017:3154-3157.

20. Aoki T, Yamada A, Kato Y, Saito H, Tsuboi A, Nakada A, Niikura R, Fujishiro M, Oka S, Ishihara S, Matsuda T, Nakahori M, Tanaka S, Koike K, Tada T. Automatic detection of blood content in capsule endoscopy images based on a deep convolutional neural network. J Gastroenterol Hepatol. 2020;35:1196-1200.

21. Xing X, Jia X, Meng MQ. Bleeding Detection in Wireless Capsule Endoscopy Image Video Using Superpixel-Color Histogram and a Subspace KNN Classifier. Annu Int Conf IEEE Eng Med Biol Soc. 2018;2018:1-4.

22. Noya F, Alvarez-Gonzalez MA, Benitez R. Automated angiodysplasia detection from wireless capsule endoscopy. Annu Int Conf IEEE Eng Med Biol Soc. 2017;2017:3158-3161.

23. Leenhardt R, Vasseur P, Li C, Saurin JC, Rahmi G, Cholet F, Becq A, Marteau P, Histace A, Dray X; CAD-CAP Database Working Group. A neural network algorithm for detection of GI angiectasia during small-bowel capsule endoscopy. Gastrointest Endosc. 2019;89:189-194.

24. Fan S, Xu L, Fan Y, Wei K, Li L. Computer-aided detection of small intestinal ulcer and erosion in wireless capsule endoscopy images. Phys Med Biol. 2018;63:165001.

25. Tsuboi A, Oka S, Aoyama K, Saito H, Aoki T, Yamada A, Matsuda T, Fujishiro M, Ishihara S, Nakahori M, Koike K, Tanaka S, Tada T. Artificial intelligence using a convolutional neural network for automatic detection of small-bowel angioectasia in capsule endoscopy images. Dig Endosc. 2020;32:382-390.

26. Hwang Y, Lee HH, Park C, Tama BA, Kim JS, Cheung DY, Chung WC, Cho YS, Lee KM, Choi MG, Lee S, Lee BI. Improved classification and localization approach to small bowel capsule endoscopy using convolutional neural network. Dig Endosc. 2021;33:598-607.

27. Saito H, Aoki T, Aoyama K, Kato Y, Tsuboi A, Yamada A, Fujishiro M, Oka S, Ishihara S, Matsuda T, Nakahori M, Tanaka S, Koike K, Tada T. Automatic detection and classification of protruding lesions in wireless capsule endoscopy images based on a deep convolutional neural network. Gastrointest Endosc. 2020;92:144-151.e1.

28. Ding Z, Shi H, Zhang H, Meng L, Fan M, Han C, Zhang K, Ming F, Xie X, Liu H, Liu J, Lin R, Hou X. Gastroenterologist-Level Identification of Small-Bowel Diseases and Normal Variants by Capsule Endoscopy Using a Deep-Learning Model. Gastroenterology. 2019;157:1044-1054.e5.

29. Mishkin DS, Chuttani R, Croffie J, Disario J, Liu J, Shah R, Somogyi L, Tierney W, Song LM, Petersen BT; Technology Assessment Committee, American Society for Gastrointestinal Endoscopy. ASGE Technology Status Evaluation Report: wireless capsule endoscopy. Gastrointest Endosc. 2006;63:539-545.

30. Sharma A, Kumar R, Garg P. Deep learning-based prediction model for diagnosing gastrointestinal diseases using endoscopy images. Int J Med Inform. 2023;177:105142.

31. Sahaai MB, Jothilakshmi GR, Ravikumar D, Prasath R, Singh S. ResNet-50 based deep neural network using transfer learning for brain tumor classification. AIP Conf Proc. 2022.

32. Huang G, Liu Z, Van Der Maaten L, Weinberger KQ. Densely Connected Convolutional Networks. IEEE 2017: Conference on Computer Vision and Pattern Recognition (CVPR); 2017 July 21-26; Honolulu, HI, United States. 2017: 2261-2269.

33. Huang G, Sun Y, Liu Z, Sedra D, Weinberger K. Deep Networks with Stochastic Depth. 2016. Available from: arXiv:1603.09382.

34. Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S, Uszkoreit J, Houlsby N. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. 2021. Available from: arXiv:2010.11929.

35. Khalil M, Khalil A, Ngom A. A Comprehensive Study of Vision Transformers in Image Classification Tasks. 2023. Available from: arXiv:2312.01232.

36. Lee SH, Lee S, Song BC. Vision Transformer for Small-Size Datasets. 2021. Available from: arXiv:2112.13492.

37. Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. Int J Comput Vis. 2020;128:336-359.

38. Cao Q, Deng R, Pan Y, Liu R, Chen Y, Gong G, Zou J, Yang H, Han D. Robotic wireless capsule endoscopy: recent advances and upcoming technologies. Nat Commun. 2024;15:4597.

39. Li B, Meng MQ. Computer-based detection of bleeding and ulcer in wireless capsule endoscopy images by chromaticity moments. Comput Biol Med. 2009;39:141-147.

40. Chen Y, Lee J. Ulcer detection in wireless capsule endoscopy video. MM'12: Proceedings of the 20th ACM international conference on Multimedia; 2012 Oct 29; 2012: 1181-1184.

41. Bai L, Wang L, Chen T, Zhao Y, Ren H. Transformer-Based Disease Identification for Small-Scale Imbalanced Capsule Endoscopy Dataset. Electronics. 2022;11:2747.

42. Subedi A, Regmi S, Regmi N, Bhusal B, Bagci U, Jha D. Classification of Endoscopy and Video Capsule Images using CNN-Transformer Model. 2024. Available from: arXiv:2408.10733.

43. Goyal A, Zheng Y, Albenberg LG, Stoner NL, Hart L, Alkhouri R, Hampson K, Ali S, Cho-Dorado M, Goyal RK, Grossman A. Anemia in Children With Inflammatory Bowel Disease: A Position Paper by the IBD Committee of the North American Society of Pediatric Gastroenterology, Hepatology and Nutrition. J Pediatr Gastroenterol Nutr. 2020;71:563-582.

44. Wiskin AE, Wootton SA, Hunt TM, Cornelius VR, Afzal NA, Jackson AA, Beattie RM. Body composition in childhood inflammatory bowel disease. Clin Nutr. 2011;30:112-115.

45. Pawłowska-Seredyńska K, Akutko K, Umławska W, Śmieszniak B, Seredyński R, Stawarski A, Pytrus T, Iwańczak B. Nutritional status of pediatric patients with inflammatory bowel diseases is related to disease duration and clinical picture at diagnosis. Sci Rep. 2023;13:21300.

46. Mackner LM, Greenley RN, Szigethy E, Herzer M, Deer K, Hommel KA. Psychosocial issues in pediatric inflammatory bowel disease: report of the North American Society for Pediatric Gastroenterology, Hepatology, and Nutrition. J Pediatr Gastroenterol Nutr. 2013;56:449-458.

47. Mikocka-Walus A, Knowles SR, Keefer L, Graff L. Controversies Revisited: A Systematic Review of the Comorbidity of Depression and Anxiety with Inflammatory Bowel Diseases. Inflamm Bowel Dis. 2016;22:752-762.

48. Ishige T. Growth failure in pediatric onset inflammatory bowel disease: mechanisms, epidemiology, and management. Transl Pediatr. 2019;8:16-22.

49. Kugathasan S, Denson LA, Walters TD, Kim MO, Marigorta UM, Schirmer M, Mondal K, Liu C, Griffiths A, Noe JD, Crandall WV, Snapper S, Rabizadeh S, Rosh JR, Shapiro JM, Guthery S, Mack DR, Kellermayer R, Kappelman MD, Steiner S, Moulton DE, Keljo D, Cohen S, Oliva-Hemker M, Heyman MB, Otley AR, Baker SS, Evans JS, Kirschner BS, Patel AS, Ziring D, Trapnell BC, Sylvester FA, Stephens MC, Baldassano RN, Markowitz JF, Cho J, Xavier RJ, Huttenhower C, Aronow BJ, Gibson G, Hyams JS, Dubinsky MC. Prediction of complicated disease course for children newly diagnosed with Crohn's disease: a multicentre inception cohort study. Lancet. 2017;389:1710-1718.

50. Walters TD, Kim MO, Denson LA, Griffiths AM, Dubinsky M, Markowitz J, Baldassano R, Crandall W, Rosh J, Pfefferkorn M, Otley A, Heyman MB, LeLeiko N, Baker S, Guthery SL, Evans J, Ziring D, Kellermayer R, Stephens M, Mack D, Oliva-Hemker M, Patel AS, Kirschner B, Moulton D, Cohen S, Kim S, Liu C, Essers J, Kugathasan S, Hyams JS; PRO-KIIDS Research Group. Increased effectiveness of early therapy with anti-tumor necrosis factor-α vs an immunomodulator in children with Crohn's disease. Gastroenterology. 2014;146:383-391.

Open Access: This article is an open-access article that was selected by an in-house editor and fully peer-reviewed by external reviewers. It is distributed in accordance with the Creative Commons Attribution NonCommercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: https://creativecommons.org/Licenses/by-nc/4.0/