Published online Mar 28, 2025. doi: 10.37126/aige.v6.i1.105674

Revised: March 1, 2025

Accepted: March 17, 2025

Published online: March 28, 2025

Processing time: 52 Days and 19.8 Hours

Recently, it has been suggested that the duodenum may be the pathological locus of functional dyspepsia (FD). Additionally, an image-based artificial intelligence (AI) model was shown to discriminate colonoscopy images of patients with irritable bowel syndrome from those of healthy subjects with an area under the curve (AUC) of 0.95.

To evaluate an AI model to distinguish duodenal images of FD patients from healthy subjects.

Duodenal images were collected from hospital records and labeled as "functional dyspepsia" or non-FD in electronic medical records. Helicobacter pylori (HP) infection status was obtained from the Japan Endoscopy Database. Google Cloud AutoML Vision was used to classify four groups: FD/HP current infection (n = 32), FD/HP uninfected (n = 35), non-FD/HP current infection (n = 39), and non-FD/HP uninfected (n = 33). Patients with organic diseases (e.g., cancer, ulcer, postoperative abdomen, reflux) and narrow-band or dye-spread images were excluded. Sensitivity, specificity, and AUC were calculated.

In total, 484 images were randomly selected for FD/HP current infection, FD/HP uninfected, non-FD/current infection, and non-FD/HP uninfected. The overall AUC for the four groups was 0.47. The individual AUC values were as follows: FD/HP current infection (0.20), FD/HP uninfected (0.35), non-FD/current in

We developed an image-based AI model to distinguish duodenal images of FD from healthy subjects, showing higher accuracy in HP-uninfected patients. These findings suggest AI-assisted endoscopic diagnosis of FD may be feasible.

Core Tip: This study reports a duodenal endoscopic artificial intelligence (AI) image model for detecting functional dyspepsia (FD). Endoscopic images of patients with FD typically lack labeled training data, as their alterations are imperceptible to human observers. In our previous study, we developed an AI model for irritable bowel syndrome and explored whether symptom presence or absence could serve as training labels. Our findings suggest that endoscopic duodenal images of FD patients can be distinguished with high accuracy from those of healthy individuals, stratified by HP infection status. Further investigations are warranted to assess AI's applicability in diagnosing other functional gas

- Citation: Mihara H, Nanjo S, Motoo I, Ando T, Fujinami H, Yasuda I. Artificial intelligence model on images of functional dyspepsia. Artif Intell Gastrointest Endosc 2025; 6(1): 105674

- URL: https://www.wjgnet.com/2689-7164/full/v6/i1/105674.htm

- DOI: https://dx.doi.org/10.37126/aige.v6.i1.105674

The prevalence of functional dyspepsia (FD) is high, ranging from 11.0% to 23.8% in Europe, 15% in the United States, and 7.0% to 17% in Japan[1,2]. It has been reported that 53% of patients presenting with upper abdominal symptoms have FD[3]. Regarding dyspepsia symptoms and Helicobacter pylori (HP) infection, eradication therapy has been reported to improve dyspepsia symptoms in a certain percentage of HP-infected patients, and a new disease entity called HP-associated dyspepsia (HpD) has been defined[4]. Recently, it was reported that Escherichia coli forms endoscopically visible biofilms in the terminal ileum and ascending colon of patients with irritable bowel syndrome (IBS), and that dysbiosis and microinflammation can occur even in areas without biofilm formation[5]. FD is a multifactorial disorder with a wide spectrum of associated or causative factors. Some cross-sectional studies have reported that antral gastritis and certain endoscopic findings, such as linear redness in the antral region, are associated with dyspeptic symptoms, suggesting a potential role of the stomach in FD pathophysiology[6]. On the other hand, recent studies have suggested that the duodenum may also be involved in the disease mechanism, with dysbiosis and microinflammation reported in this region[7]. However, unlike the stomach, no specific endoscopic changes in the duodenum have been consistently documented. Given this gap in evidence, our study aimed to compare findings across these regions to further clarify their respective contributions to FD.

Imaging artificial intelligence (AI) models to detect gastrointestinal endoscopic lesions have been developed, some of which are already in clinical use[8]. A meta-analysis of the diagnostic performance of AI for HP infection demonstrated a sensitivity of 0.87, specificity of 0.86, and an area under the curve (AUC) of 0.92[9], which is a higher diagnostic accuracy than that of medical practitioners. AI model development generally requires structured training datasets. However, for functional gastrointestinal disorders, endoscopic abnormalities are often absent, making labeled datasets scarce. Incorporating clinical variables, such as symptom presence or absence, into training data may facilitate the identification of subtle colonic changes that are otherwise undetectable by human observers. We previously established an IBS AI image model with the Google Cloud Platform (Google Inc., Mountain View, CA; available from: https://cloud.google.com/vision/), using the presence or absence of symptoms as teacher data[10]. AI may likewise be able to detect subtle duodenal alterations that remain imperceptible to the human eye. The ultimate goal is to build an AI model that can diagnose FD and predict treatment efficacy and prognosis based on patient background and endoscopic images. In this study, we aimed to explore whether it is possible to build an AI image model that can identify a diagnosis of FD from duodenal endoscopic images.

The study was reviewed and approved by the Toyama University Hospital Ethics Committee (Approval No. R2021032) on 2021/05/11. An opt-out informed consent protocol was used for the use of participant data for research purposes, and this consent procedure was also reviewed and approved by the same committee under the same approval number.

For use in real-world patients with FD, patients were identified not by ROME criteria, but instead based on disease names recorded for insurance purposes between January 2018 and December 2020. By not using ROME criteria, the model reflects a patient group that is closer to real clinical practice, thereby enhancing its applicability in general clinical settings. We included duodenal images acquired from 2020 to 2022 (Olympus, GIF-H290Z) along with the HP infection status from the Japan Endoscopy Database (JED) of our hospital. We investigated whether the Cloud AI construction service could produce a model to determine the status of HP infection with the same success as that previously reported[9]. Ulcer lesions, food residue, post-gastrectomy images, and narrow-band, magnification images were excluded. The entire process was performed by one physician (Mihara H) and one medical technician (Kuraishi S). Endoscopic images themselves were observed and taken by multiple trainees and supervisors in general clinical practice, and patients were not specifically prepared for this study. Furthermore, mucus flushing and magnification observations were not per

We acquired duodenal endoscopic images of FD patients with the disease name in the medical record and patients with abnormal health examinations whose endoscopic report showed no abnormalities and built an AI model to distinguish the four groups of FD and HP infection status [FD/HP current infection (+), FD/HP uninfected(-), non-FD/HP(+), and non-FD/HP(-)], as well as each of the two groups [FD and non-FD among HP(+) or HP(-)]. Patients with post-eradication of HP infections were excluded. For symptomatic patients, esophagogastroduodenoscopy was performed as part of a workup for the symptoms, and asymptomatic patients had undergone gastric cancer screening with ab

The accuracy of the model was improved by rebuilding it multiple times after excluding narrow-band or dye-spread images. No biofilms were detected. Between 1 and 9 images were used per patient, with approximately 1-3 images from each duodenal segment. The FD/HP (+), FD/HP (-), non-FD/HP (+), and non-FD/HP (-) groups comprised 32, 35, 39, and 33 patients, respectively, from whom 70, 121, 141, and 152 images, respectively, were used. The accuracy of the model increased with the number of patients; however, approximately 100 images yielded a certain degree of accuracy. Although fewer FD/HP (+) images were available than for the other three groups, around 100 images per group are generally sufficient for model construction with Google's platform. The novelty of this study is that a model could be constructed by dividing patients according to the presence or absence of FD and HP infection. The number of patients and images was therefore considered sufficient to construct the model in this study, although further examination, including of the necessary number of images, will be needed in the future.

In this study, we performed annotation and algorithm generation using Google Cloud AutoML Vision from the Google Cloud Platform (Google, Inc.). Four labels were defined in the training dataset (single-label classification): FD/HP (+), FD/HP (-), non-FD/HP (+), and non-FD/HP (-). Three models were produced: one differentiating all four groups, one differentiating FD/HP (+) from non-FD/HP (+), and one differentiating FD/HP (-) from non-FD/HP (-). This procedure was performed by a single physician (Mihara H).

The same method was used as in our previous report[10] and is briefly described as follows. The Google Cloud AutoML Vision platform automatically and randomly selected training set images (80%), validation set images (10%), and test set images (10%) from the dataset for the algorithm training process. Sixteen nodes (2 h) were used to train the algorithm. Because increasing the sample size did not further increase the accuracy, this sample size was determined to be the optimal sample size for this project. Using the platform's metrics, we generated a confusion matrix[8] and a contingency table for specificity at a threshold of 0.5. The probability of a given label for each image was presented as a score between 0 and 1. AUC, accuracy, precision, recall, and the precision-recall curve were used as performance metrics. AUC reflects the model's overall discriminative ability, with higher values indicating better performance; accuracy is the correct prediction rate; precision is the proportion of true positives among predicted positives; recall is the proportion of true positives among actual positives; and the precision-recall curve reflects the model's effectiveness in imbalanced datasets, with higher and more stable curves indicating greater clinical applicability.
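As an illustration of the metric definitions above, the following minimal Python sketch computes precision, recall, and accuracy at a score threshold of 0.5, and the AUC as the probability that a randomly chosen positive image receives a higher score than a randomly chosen negative one. It is not the AutoML implementation; the function names and the toy scores and labels are hypothetical and are not study data.

```python
def binary_metrics(scores, labels, threshold=0.5):
    """Precision, recall and accuracy when score >= threshold is called positive."""
    tp = fp = tn = fn = 0
    for score, label in zip(scores, labels):
        predicted_positive = score >= threshold
        if predicted_positive and label:
            tp += 1
        elif predicted_positive and not label:
            fp += 1
        elif label:
            fn += 1
        else:
            tn += 1
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    accuracy = (tp + tn) / len(labels)
    return {"precision": precision, "recall": recall, "accuracy": accuracy}


def auc(scores, labels):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count 0.5)."""
    positives = [s for s, y in zip(scores, labels) if y]
    negatives = [s for s, y in zip(scores, labels) if not y]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Hypothetical toy example: six images, label 1 = FD, label 0 = non-FD.
toy_scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
toy_labels = [1, 1, 1, 0, 0, 0]
toy_metrics = binary_metrics(toy_scores, toy_labels)
toy_auc = auc(toy_scores, toy_labels)
```

Because the AUC is computed from the ranking of scores rather than from a single cut-off, it can differ markedly from precision and recall reported at the 0.5 threshold.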

In this study, we analyzed the differences between the FD and non-FD groups among HP-positive subjects using Explainable AI (XAI). We constructed an FD classification model using transfer learning based on the EfficientNetV2-S model with Python 3.9 and TensorFlow 2.10. The XRAI algorithm was employed to generate saliency maps, which were combined with the input images to develop a system capable of outputting classification predictions and XAI-enhanced images. The local PC environment was equipped with an NVIDIA GeForce RTX™ 3070 GPU.

The first question we addressed was whether AI could distinguish between the four groups defined by FD and HP infection status. In Japan, acotiamide is used for FD, so FD is typically recorded as the disease name for patients prescribed acotiamide. The dataset included 70 images from 32 FD/HP patients with current infections, 141 images from 39 patients with non-FD/HP current infections, 121 images from 35 FD/HP uninfected patients, and 152 images from 33 non-FD/HP uninfected patients. We used fewer than 10 images per patient and included the region from the duodenal bulb to the descending portion of the duodenum. For the training, validation, and test images, 388, 48, and 48 images were used, respectively. The overall AUC of the four groups was 0.47 (Table 1). Using the developed AI model, the algorithm achieved an average precision (positive predictive value) of 45.9%, precision of 43.14%, and recall of 42.31% through automated training and validation. Precision-recall curves were generated for each label and the overall system performance. A threshold of 0.5 was applied to optimize the balance between precision and recall. Table 1 presents the confusion matrix and the model’s discriminative performance, with a total AUC of 0.47 [FD/HP (-) 0.35, FD/HP (+) 0.20, non-FD/HP (-) 0.74, non-FD/HP (+) 0.46]. Because the AUC of the FD/HP (+) group was the lowest and that of the non-FD/HP (-) group was the highest, it was thought that the presence or absence of HP infection affected the accuracy of FD discrimination. Therefore, the same dataset was reused as input for models that differentiate only the presence or absence of FD, separately for patients with and without HP infection.
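The reported split sizes can be reproduced with a simple random 80/10/10 partition. The sketch below is only an illustration: the rounding rule (10% rounded down for validation and for test, with the remainder used for training) and the function name `split_indices` are assumptions, since the platform's internal allocation procedure is not described in this report.

```python
import random


def split_indices(n_images, val_frac=0.10, test_frac=0.10, seed=0):
    """Randomly partition image indices into training, validation and test lists.

    Assumption: validation and test sizes are rounded down and the remainder
    goes to training; the cloud service may round differently.
    """
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)  # fixed seed only for reproducibility
    n_val = int(n_images * val_frac)
    n_test = int(n_images * test_frac)
    n_train = n_images - n_val - n_test
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]
    return train, val, test


# 70 + 141 + 121 + 152 = 484 images in the four-group dataset.
train, val, test = split_indices(484)
split_sizes = (len(train), len(val), len(test))  # (388, 48, 48)
```

Under the same assumed rounding rule, 211 images give 169, 21, and 21, and 273 images give 219, 27, and 27, matching the counts reported for the two-group models.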

Table 1 AUC values and confusion matrix of the four-group model

| Label | AUC |
| --- | --- |
| All labels | 0.47 |
| FD/HP (-) | 0.35 |
| FD/HP (+) | 0.20 |
| Non-FD/HP (-) | 0.74 |
| Non-FD/HP (+) | 0.46 |

| True label/predicted label | FD/HP (-) | FD/HP (+) | Non-FD/HP (-) | Non-FD/HP (+) |
| --- | --- | --- | --- | --- |
| FD/HP (-) | 50% | 25% | - | 25% |
| FD/HP (+) | 14% | 14% | 14% | 57% |
| Non-FD/HP (-) | 12% | 6% | 59% | 24% |
| Non-FD/HP (+) | 44% | 13% | 6% | 38% |

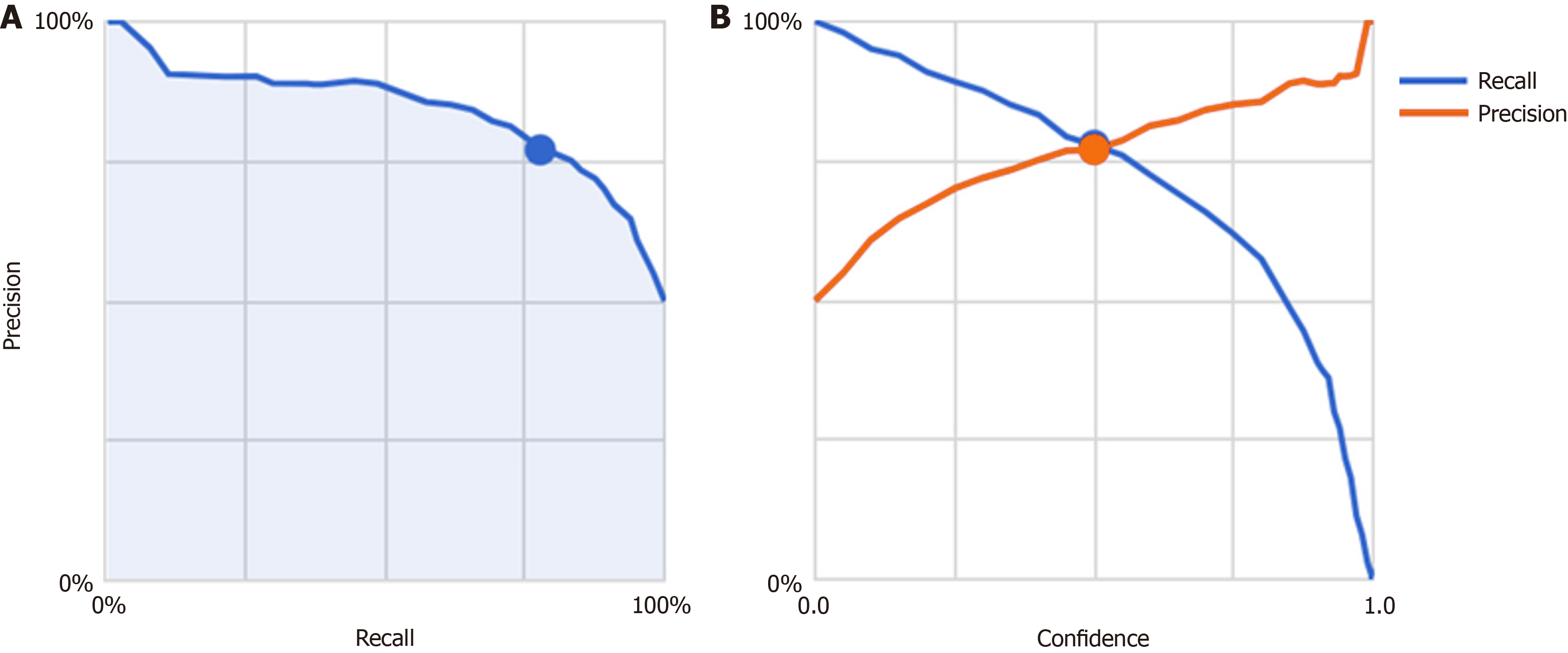

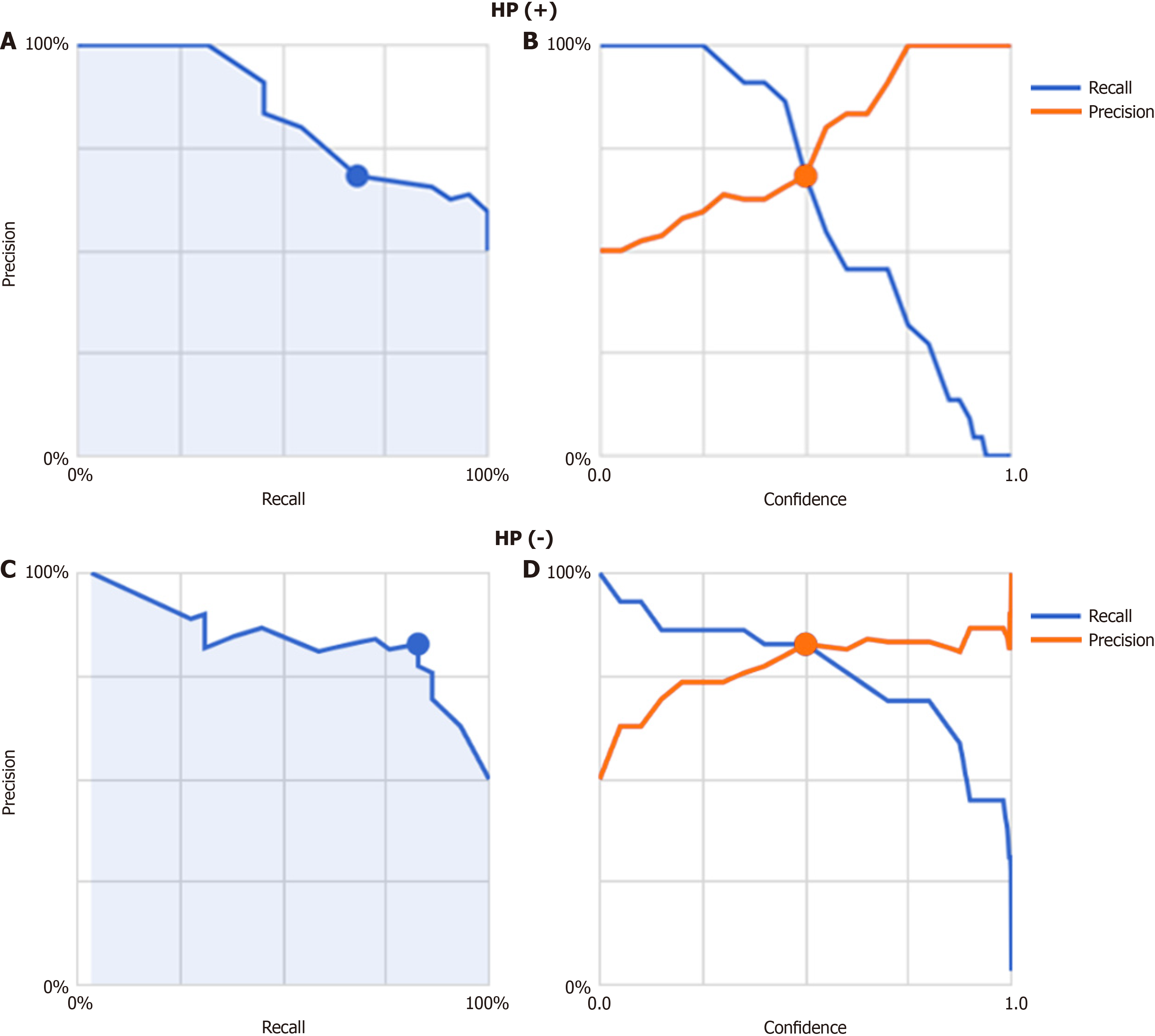

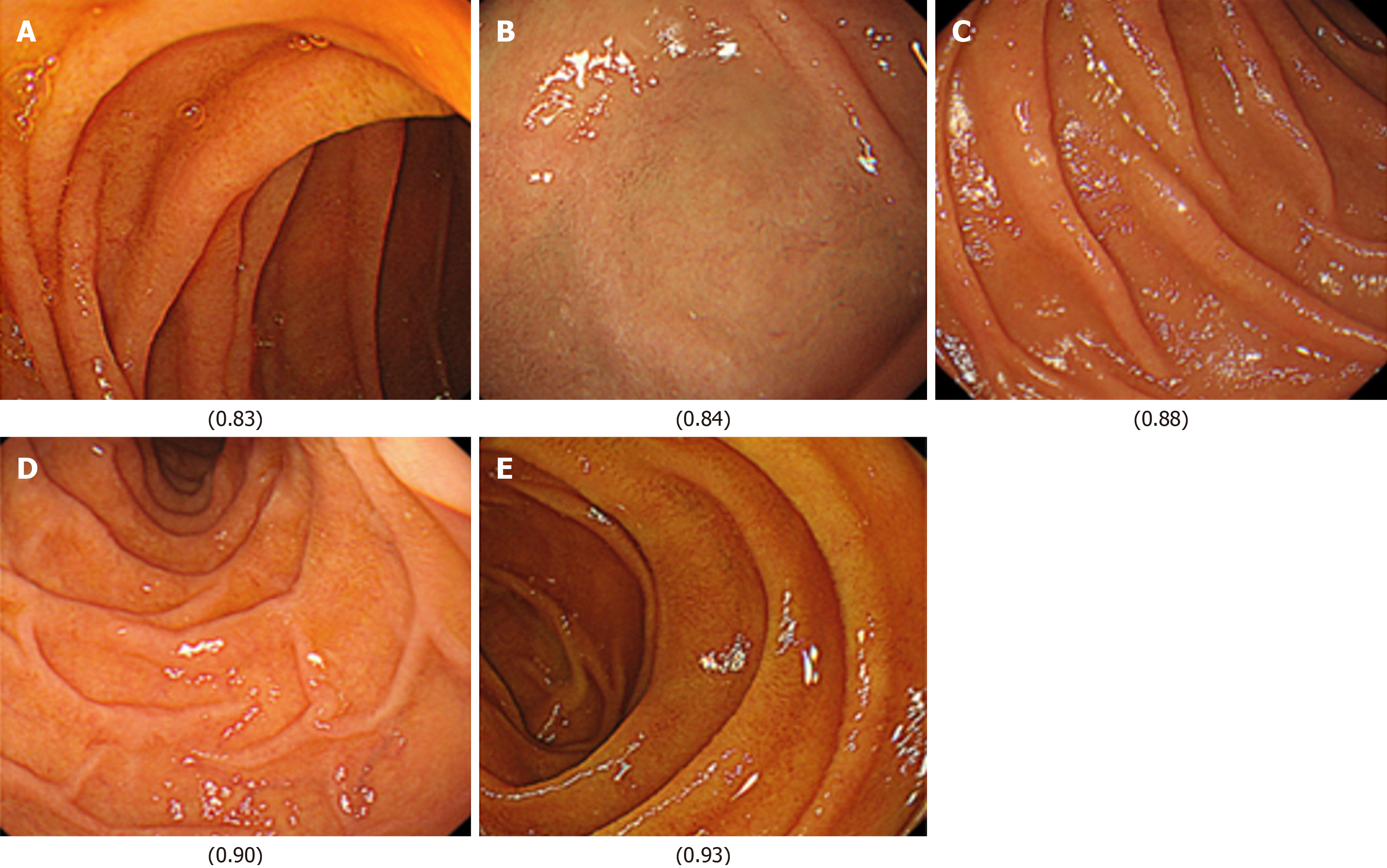

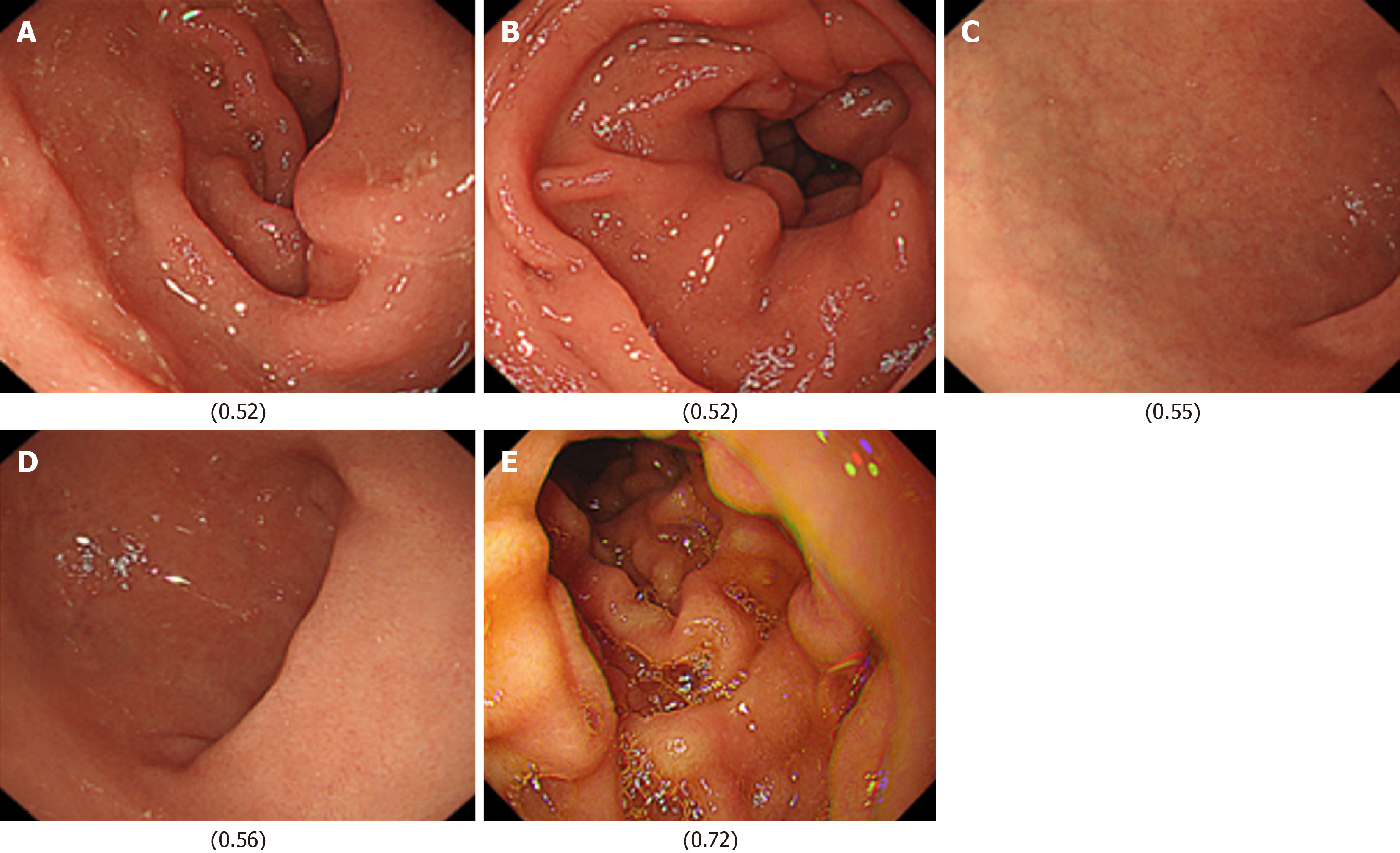

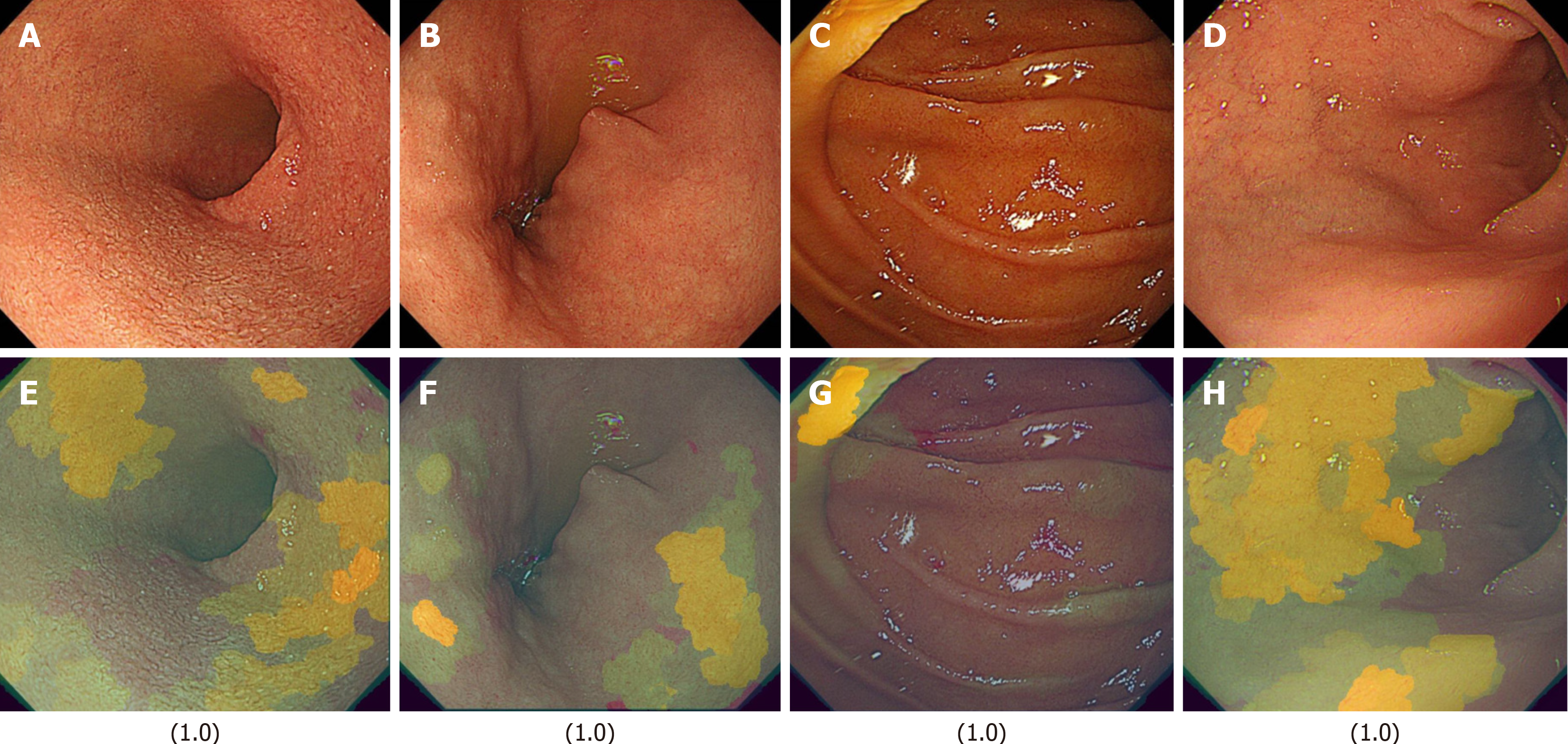

Next, images from FD/HP (+) were compared with images from endoscopy in patients with non-FD/HP (+). For the training, validation, and test images, 169, 21, and 21 images were used, respectively. For these groups, the sensitivity, specificity, positive and negative predictive values, and AUC of the model to determine the presence or absence of FD in currently infected HP patients were 71.4%, 66.7%, 50.0%, 83.3%, and 0.84, respectively (Figure 1, Table 2). High-scoring images in non-FD or FD patients with current HP infection (score 0-1) are presented in Figures 2 and 3.
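The relationship between a 2 × 2 confusion matrix and the four diagnostic measures quoted above can be sketched as follows. The counts used here (5 true positives, 2 false negatives, 5 false positives, 10 true negatives) are hypothetical: they were chosen only because they reproduce the reported percentages, and the actual per-cell test-set counts are not given in the text.

```python
def diagnostic_measures(tp, fn, fp, tn):
    """Sensitivity, specificity, PPV and NPV (in percent) from 2 x 2 counts."""
    return {
        "sensitivity": 100.0 * tp / (tp + fn),  # true positives among FD images
        "specificity": 100.0 * tn / (tn + fp),  # true negatives among non-FD images
        "ppv": 100.0 * tp / (tp + fp),          # FD among images predicted FD
        "npv": 100.0 * tn / (tn + fn),          # non-FD among images predicted non-FD
    }


# Hypothetical counts, not the study's raw data.
measures = diagnostic_measures(tp=5, fn=2, fp=5, tn=10)
rounded = {name: round(value, 1) for name, value in measures.items()}
# rounded == {"sensitivity": 71.4, "specificity": 66.7, "ppv": 50.0, "npv": 83.3}
```

With test sets of about 20 images, a single reclassified image shifts these percentages by several points, so they should be read with that granularity in mind.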

Table 2 AUC values and confusion matrices of the two-group models

| HP (+) | AUC |
| --- | --- |
| All labels | 0.84 |
| FD/HP (+) | 0.59 |
| Non-FD/HP (+) | 0.90 |

| True label/predicted label | FD/HP (+) | Non-FD/HP (+) |
| --- | --- | --- |
| FD/HP (+) | 71% | 29% |
| Non-FD/HP (+) | 33% | 67% |

| HP (-) | AUC |
| --- | --- |
| All labels | 0.85 |
| FD/HP (-) | 0.83 |
| Non-FD/HP (-) | 0.84 |

| True label/predicted label | FD/HP (-) | Non-FD/HP (-) |
| --- | --- | --- |
| FD/HP (-) | 58% | 42% |
| Non-FD/HP (-) | - | 100% |

As detailed above, precision-recall curves and threshold values were configured accordingly. The model’s ability to differentiate between groups is summarized in Table 2, with a total AUC of 0.84 (0.59 for FD/HP-positive cases and 0.90 for non-FD/HP-positive cases). The algorithm achieved the following performance metrics: Average precision (positive predictive value), precision, and recall were 84.0%, 68.2% and 68.2%, respectively.

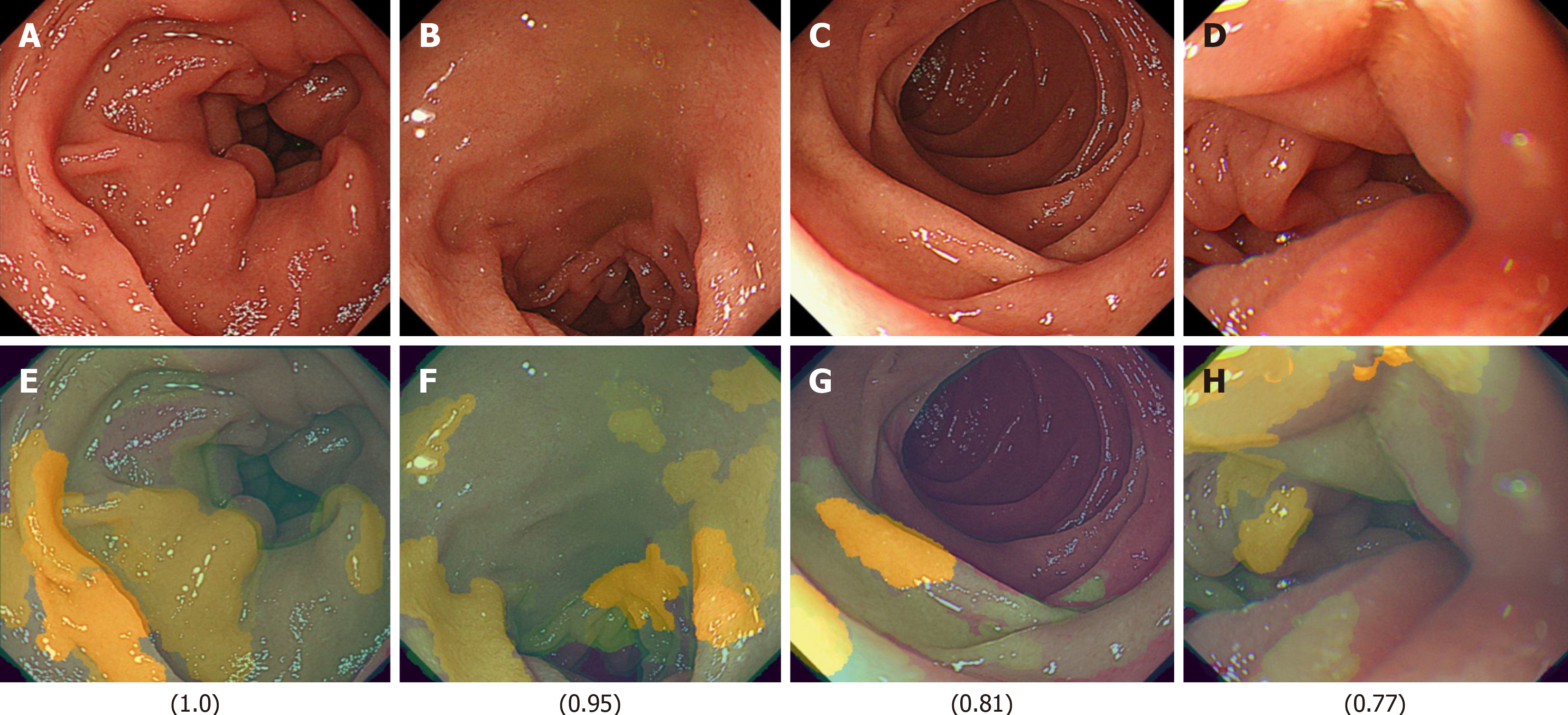

Finally, images from FD/HP (-) patients were compared with endoscopic images from non-FD/HP (-) patients. For the training, validation, and test images, 219, 27, and 27 images were used, respectively. For these groups, the sensitivity, specificity, positive and negative predictive values, and AUC of the model to determine the presence or absence of FD in HP-uninfected patients were 71.4%, 66.7%, 50.0%, 83.3%, and 0.85, respectively (Figure 2, Table 2).

The model’s classification performance, including the confusion matrix, is summarized in Table 2. The overall AUC was 0.85, with subgroup AUC values of 0.83 for FD/HP-negative cases and 0.84 for non-FD/HP-negative cases. The model attained an average precision (positive predictive value), precision, and recall of 0.85, 82.8%, and 82.8%, res

The FD classification model achieved precision, recall, and F1-score values of 0.93, with an overall accuracy of 0.93. In the XAI image analysis, specific duodenal regions (Figures 4 and 5) were identified as potentially discriminative for distinguishing FD from non-FD cases. However, these features were not visually discernible to human observers.

AI has been shown to detect subtle endoscopic changes associated with microenvironmental alterations, such as minor inflammation, that are difficult for human observers to identify on colonoscopic images. Based on this, we investigated whether AI could similarly detect subtle endoscopic changes of FD in duodenal images. In the four-group model, the overall AUC was 0.47, which was poor. However, when the duodenal image model was reconstructed by stratifying patients with and without FD based on the presence or absence of HP infection, the AUC improved considerably to 0.84 and 0.85, respectively. By not using ROME criteria, the model reflects a patient group that is closer to real clinical practice, thereby enhancing its applicability in general clinical settings. These results suggest that the duodenal microscopic changes in patients with HP infection and FD may be similar, and that HP infection in the stomach may lead to detectable changes in duodenal endoscopic images. It is also appropriate to distinguish HpD, which is one of the factors contributing to dyspepsia symptoms. Based on its pathophysiology, it is assumed that AI can detect mild edema caused by microinflammation. To the best of our knowledge, this is the second AI model capable of detecting functional gastrointestinal diseases on endoscopic images[10]. However, as this model was not constructed separately for the bulb and descending segments, it remains unclear whether either region contributes more significantly to the diagnosis. The advantage of this model is that it returns FD scores independent of the specific duodenal segment. Nevertheless, this model has several limitations. First, the diagnosis of FD was determined based on the disease name recorded for insurance purposes rather than the ROME criteria. Because of insurance coverage policies, patients diagnosed with FD are likely to be prescribed acotiamide frequently. 
However, the presence or absence of acotiamide administration was not considered in the analysis, which represents a limitation of this study. It cannot be ruled out that the observed changes in endoscopic images were influenced by administered medications, making this an important subject for future research. Given that the ROME IV criteria are not universally applied in routine clinical settings, this model was designed to optimize applicability in real-world clinical settings. Many patients included in this study were considered to have postprandial distress syndrome. If dyspeptic symptoms resolve following eradication therapy, the condition is classified as HpD, whereas persistent symptoms post-eradication suggest FD. However, since this study analyzed data collected before the response to eradication therapy was confirmed, the dataset contained a mixture of HpD and HP-infected FD cases. While this is a limitation, it also highlights an important research direction: whether endoscopic findings could help distinguish patients whose symptoms improve post-eradication from those with persistent symptoms. Additionally, HP infection status was determined based on data from the JED, and confirmation using tests such as urine antibody or fecal antigen tests was not always conducted. Therefore, further validation is necessary using an independent clinical dataset to assess the model’s reliability and reproducibility. The inclusion of psychosocial factors could provide insights into identifying patients who might benefit from AI-assisted duodenal evaluation. However, no psychometric evaluations were conducted in this study. AI-based classification of IBS patients using psychological factors has been reported[11]; therefore, future analyses incorporating psychosocial factors will also be necessary for FD. Additionally, post-eradication patients were excluded from this study.

Another limitation is that the dataset was constructed without considering factors such as age, gender, or treatment effects. The code-free deep learning methodology implemented in this study offers enhanced accessibility for clinicians lacking machine learning expertise[12]. Additionally, other research groups have documented similar findings for medical image classification and otoscopic diagnosis without requiring coding experience[13-15]. Although the training, validation, and test images were completely distinct, they originated from the same patient population. Therefore, external validation in an independent patient population is required. Regarding model training, we employed Google AutoML, which automatically partitions the dataset into training (80%), validation (10%), and test (10%) sets. While user-defined data partitioning is possible, it introduces potential biases, which is why we adopted a randomized allocation approach to ensure objectivity. The test images were never used in training or validation and thus served as a held-out test set; however, because they came from the same patient group, they do not constitute external validation, and it will be necessary to validate whether similar results can be obtained using images from other institutions. Furthermore, Google AutoML does not allow users to explicitly specify leave-one-out cross-validation or k-fold cross-validation. Instead, AutoML automatically partitions the dataset into training, validation, and test sets, and conducts model training and evaluation. This process is automated, and users are not expected to configure cross-validation settings in detail. 
On the other hand, by using the custom model feature of Vertex AI, users can have greater control over data partitioning and cross-validation strategies, allowing the application of techniques such as k-fold cross-validation for more rigorous model evaluation. However, the primary focus of this study is to explore the extent to which no-code AI models can be utilized. Therefore, future research should aim to develop more sophisticated AI models using coded approaches. The performance of cloud-based AI model construction compared to standalone PC-based model training depends on hardware capabilities. However, given the practical constraints faced by clinicians, cloud-based AI environments offer significant advantages in accessibility and usability. Although multiple training iterations were performed, iterative refinement during the training phase was not feasible, making it difficult to confirm whether the model converged to an optimal pathway. Additionally, model accuracy may vary depending on differences in endoscopic light source settings and scope types. One common criticism of AI models is the difficulty in interpreting their decision-making process due to the lack of direct visual evidence for specific endoscopic findings. However, this is a general limitation inherent to AI-based diagnostic models. We examined the use of XAI models in an edge computing environment to investigate which image features AI prioritizes in the diagnosis of FD (Figures 5 and 6). While the use of XAI allowed us to visualize distinct regions between the FD and non-FD groups in HP-positive subjects, human observers still could not fully comprehend what specific features AI considered characteristic of FD, highlighting the persistent black-box problem. These findings indicate that even with XAI, visual confirmation of characteristic regions remains challenging in FD. 
However, if real-time XAI-based assessment becomes feasible, it may enable targeted biopsies from the identified feature regions, mucosal bacterial sampling, and evaluation of epithelial permeability, ultimately leading to a deeper understanding of disease conditions. Future studies could explore the potential of AI in diagnosing other functional gastrointestinal diseases.
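The k-fold cross-validation mentioned above as an option for custom models could, for example, be organized at the patient level so that all images from one patient fall into the same fold. The following is a minimal pure-Python sketch of that idea; it does not use any Vertex AI interface, and the patient-level grouping, the function names, and the toy patient identifiers are assumptions made for illustration rather than procedures used in this study.

```python
from collections import defaultdict


def patient_level_folds(patient_ids, k=5):
    """Assign image indices to k folds, keeping each patient's images together."""
    images_by_patient = defaultdict(list)
    for index, patient in enumerate(patient_ids):
        images_by_patient[patient].append(index)
    folds = [[] for _ in range(k)]
    # Greedy balancing: place patients with the most images first,
    # each into the fold that currently holds the fewest images.
    ordered = sorted(images_by_patient, key=lambda p: (-len(images_by_patient[p]), p))
    for patient in ordered:
        smallest = min(range(k), key=lambda f: len(folds[f]))
        folds[smallest].extend(images_by_patient[patient])
    return folds


def train_test_pairs(folds):
    """Yield (train_indices, test_indices), using each fold once as the test set."""
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# Hypothetical example: ten images from six patients, three folds.
toy_patients = ["P1", "P1", "P2", "P3", "P3", "P3", "P4", "P5", "P5", "P6"]
toy_folds = patient_level_folds(toy_patients, k=3)
toy_pairs = list(train_test_pairs(toy_folds))
```

Each image is tested exactly once across the folds, and no patient contributes images to both the training and test sides of the same fold.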

By leveraging no-code deep learning, this study demonstrates the potential for distinguishing FD patients from asymptomatic individuals using duodenal images categorized by HP infection status. Our findings highlight the feasibility of AI-assisted diagnosis in this context. However, further external validation is necessary to confirm the model’s diagnostic performance across different facilities, and further work is needed to enhance its interpretability.

We thank Maeda A, Hiraki M, Kuraishi S, and Ogawa K, medical engineering technicians at the Medical Device Management Center, University of Toyama Hospital, for their support in collecting and organizing images.

1. Mahadeva S, Goh KL. Epidemiology of functional dyspepsia: a global perspective. World J Gastroenterol. 2006;12:2661-2666.
2. Oshima T, Miwa H. Epidemiology of Functional Gastrointestinal Disorders in Japan and in the World. J Neurogastroenterol Motil. 2015;21:320-329.
3. Okumura T, Tanno S, Ohhira M, Tanno S. Prevalence of functional dyspepsia in an outpatient clinic with primary care physicians in Japan. J Gastroenterol. 2010;45:187-194.
4. Stanghellini V, Chan FK, Hasler WL, Malagelada JR, Suzuki H, Tack J, Talley NJ. Gastroduodenal Disorders. Gastroenterology. 2016;150:1380-1392.
5. Baumgartner M, Lang M, Holley H, Crepaz D, Hausmann B, Pjevac P, Moser D, Haller F, Hof F, Beer A, Orgler E, Frick A, Khare V, Evstatiev R, Strohmaier S, Primas C, Dolak W, Köcher T, Klavins K, Rath T, Neurath MF, Berry D, Makristathis A, Muttenthaler M, Gasche C. Mucosal Biofilms Are an Endoscopic Feature of Irritable Bowel Syndrome and Ulcerative Colitis. Gastroenterology. 2021;161:1245-1256.e20.
6. Tahara T, Arisawa T, Shibata T, Nakamura M, Okubo M, Yoshioka D, Wang F, Nakano H, Hirata I. Association of endoscopic appearances with dyspeptic symptoms. J Gastroenterol. 2008;43:208-215.
7. Miwa H, Oshima T, Tomita T, Fukui H, Kondo T, Yamasaki T, Watari J. Recent understanding of the pathophysiology of functional dyspepsia: role of the duodenum as the pathogenic center. J Gastroenterol. 2019;54:305-311.
8. Kudo SE, Misawa M, Mori Y, Hotta K, Ohtsuka K, Ikematsu H, Saito Y, Takeda K, Nakamura H, Ichimasa K, Ishigaki T, Toyoshima N, Kudo T, Hayashi T, Wakamura K, Baba T, Ishida F, Inoue H, Itoh H, Oda M, Mori K. Artificial Intelligence-assisted System Improves Endoscopic Identification of Colorectal Neoplasms. Clin Gastroenterol Hepatol. 2020;18:1874-1881.e2.
9. Bang CS, Lee JJ, Baik GH. Artificial Intelligence for the Prediction of Helicobacter Pylori Infection in Endoscopic Images: Systematic Review and Meta-Analysis Of Diagnostic Test Accuracy. J Med Internet Res. 2020;22:e21983.
10. Tabata K, Mihara H, Nanjo S, Motoo I, Ando T, Teramoto A, Fujinami H, Yasuda I. Artificial intelligence model for analyzing colonic endoscopy images to detect changes associated with irritable bowel syndrome. PLOS Digit Health. 2023;2:e0000058.
11. Lundervold AJ, Billing JE, Berentsen B, Lied GA, Steinsvik EK, Hausken T, Lundervold A. Decoding IBS: a machine learning approach to psychological distress and gut-brain interaction. BMC Gastroenterol. 2024;24:267.
12. Faes L, Wagner SK, Fu DJ, Liu X, Korot E, Ledsam JR, Back T, Chopra R, Pontikos N, Kern C, Moraes G, Schmid MK, Sim D, Balaskas K, Bachmann LM, Denniston AK, Keane PA. Automated deep learning design for medical image classification by health-care professionals with no coding experience: a feasibility study. Lancet Digit Health. 2019;1:e232-e242.
13. Korot E, Guan Z, Ferraz D, Wagner SK, Zhang G, Liu X, Faes L, Pontikos N, Finlayson SG, Khalid H, Moraes G, Balaskas K, Denniston AK, Keane PA. Code-free deep learning for multi-modality medical image classification. Nat Mach Intell. 2021;3:288-298.
14. Livingstone D, Chau J. Otoscopic diagnosis using computer vision: An automated machine learning approach. Laryngoscope. 2020;130:1408-1413.
15. Zhang M, Pan J, Lin J, Xu M, Zhang L, Shang R, Yao L, Li Y, Zhou W, Deng Y, Dong Z, Zhu Y, Tao X, Wu L, Yu H. An explainable artificial intelligence system for diagnosing Helicobacter Pylori infection under endoscopy: a case-control study. Therap Adv Gastroenterol. 2023;16:17562848231155023.

Open Access: This article is an open-access article that was selected by an in-house editor and fully peer-reviewed by external reviewers. It is distributed in accordance with the Creative Commons Attribution NonCommercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: https://creativecommons.org/Licenses/by-nc/4.0/