Published online Apr 28, 2021. doi: 10.35711/aimi.v2.i2.37

Peer-review started: March 22, 2021

First decision: March 26, 2021

Revised: April 1, 2021

Accepted: April 20, 2021

Article in press: April 20, 2021

Published online: April 28, 2021

Processing time: 36 Days and 4.6 Hours

Artificial intelligence (AI) has seen tremendous growth over the past decade and stands to disrupt the medical industry. In medicine, AI has been applied to medical imaging and other digitised disciplines, but in more traditional fields such as medical physics, its adoption is still at an early stage. Although AI is anticipated to outperform humans in certain tasks, its rapid growth has raised increasing concerns about its use. This paper focuses on the current landscape and potential future applications of artificial intelligence in medical physics and radiotherapy. AI for image acquisition, image segmentation, treatment delivery, quality assurance and outcome prediction is explored, as well as the interaction between humans and AI. This gives insight into how we should approach and use the technology to enhance the quality of clinical practice.

Core Tip: Artificial intelligence (AI) applications in medical physics and radiotherapy represent an important emerging area of AI in medicine. The most notable improvements across the many aspects of radiotherapy are the ability to provide accurate, consistent results and to eliminate inter- and intra-observer variation. Perspectives from physicians and medical physicists on the use of AI are presented, and suggestions are made about how humans can co-exist with AI, to better equip us for the future.

- Citation: Ip WY, Yeung FK, Yung SPF, Yu HCJ, So TH, Vardhanabhuti V. Current landscape and potential future applications of artificial intelligence in medical physics and radiotherapy. Artif Intell Med Imaging 2021; 2(2): 37-55

- URL: https://www.wjgnet.com/2644-3260/full/v2/i2/37.htm

- DOI: https://dx.doi.org/10.35711/aimi.v2.i2.37

Radiotherapy (RT) is an important component of cancer treatment, and nearly half of all cancer patients receive RT during their treatment pathway[1]. New technologies such as artificial intelligence (AI) tools increasingly play an important role in many aspects of RT, including image acquisition, tumour segmentation, treatment planning, treatment delivery and quality assurance (QA). The list will no doubt continue to grow as the technology matures. Advances in computing power and data collection have increased the use of AI, and more sophisticated modelling approaches that yield more accurate predictions have become widespread. However, datasets in radiation oncology have generally been smaller and more limited than those in other medical disciplines such as medical imaging, so the performance of AI in medical physics is constrained by the available data[2].

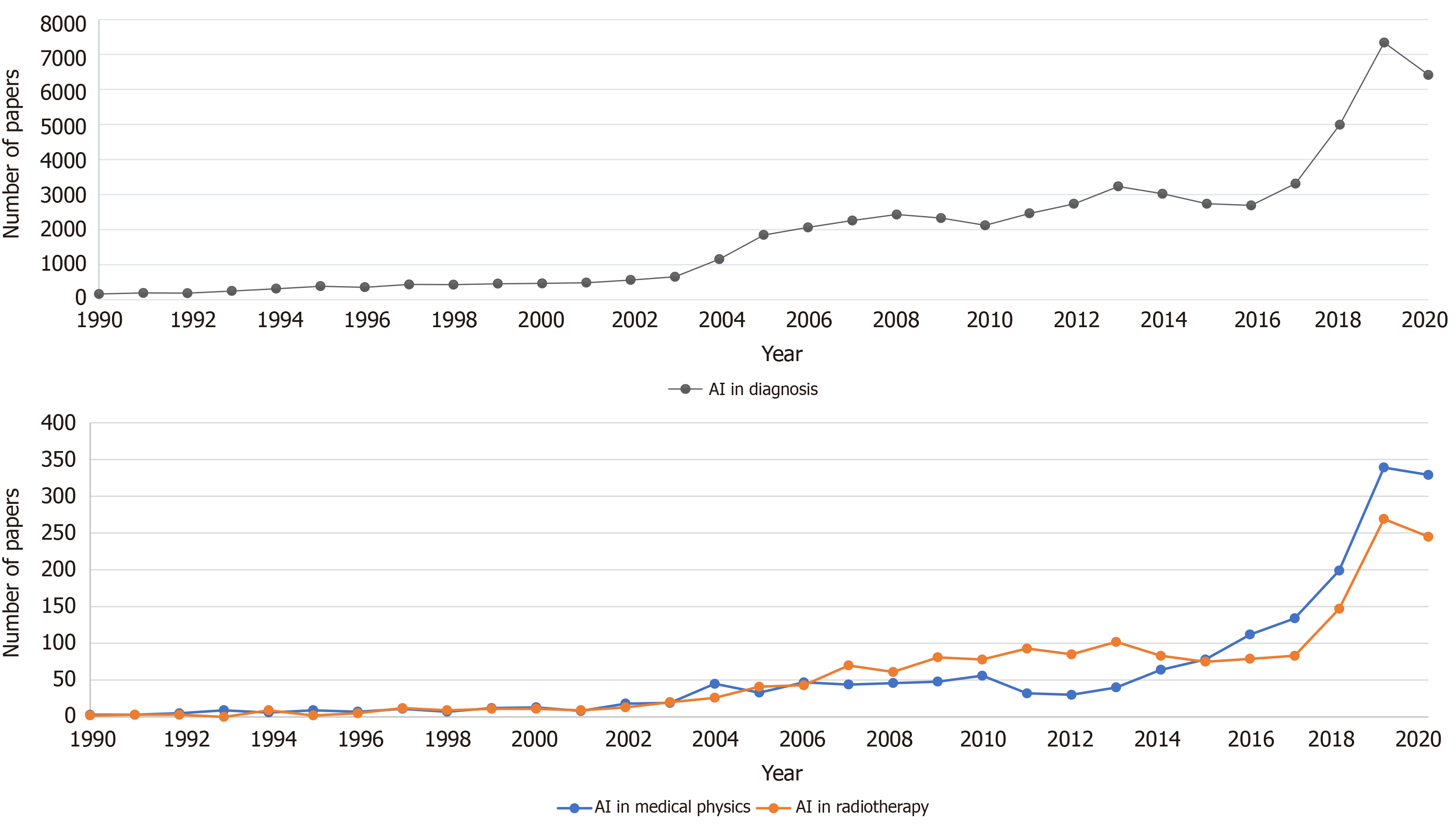

Figure 1 shows the results of a PubMed search performed on March 20, 2021: there is a clear increasing trend in AI publications in the medical literature. Both graphs show an increasing trend, although the numbers in the medical physics and RT disciplines are several orders of magnitude lower than in general medical diagnosis. Nevertheless, the growing interest in AI applications in medical physics and RT is clear.

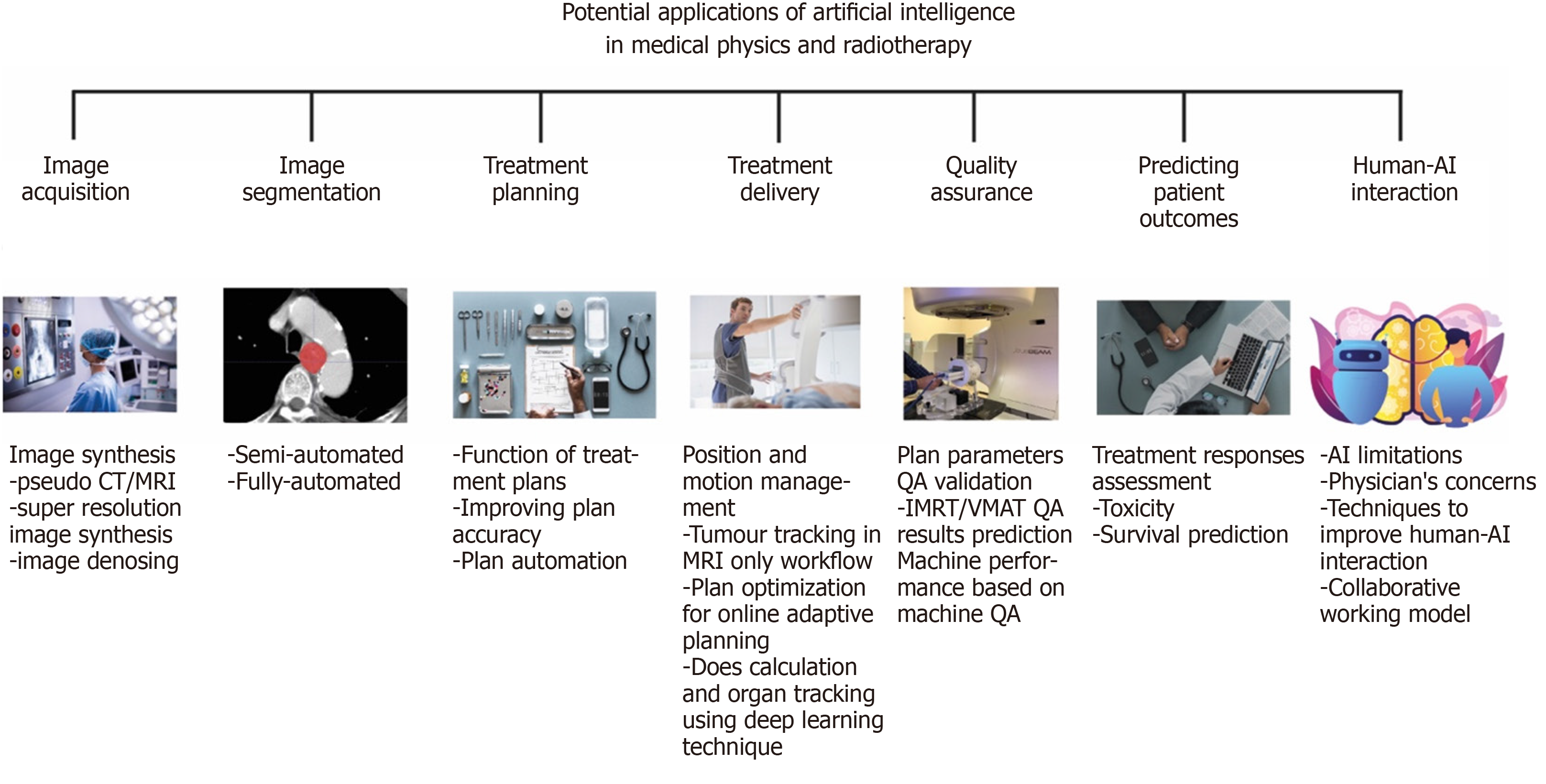

In this review article, we focus on different aspects of medical physics practice and RT and discuss the emerging applications and potential of AI in each area, as summarised in Figure 2. The paper is structured as follows. In section 1, we introduce image synthesis and its benefits for image acquisition. In section 2, we discuss how AI is moving image segmentation from a manual, labour-intensive task to a more automated one. In section 3, we present treatment planning and show how AI techniques can improve plan accuracy. In section 4, we describe the benefits for treatment delivery, such as position and motion management, organ tracking and dose calculation. In section 5, we explain how AI can improve the performance of the QA process and the advantages of using AI in QA. In section 6, we discuss the prediction of patient outcomes and the concerns of patients and clinicians about using AI in the fields mentioned above. In section 7, we discuss aspects of human-AI interaction. Finally, in section 8, we summarise the review and evaluate whether AI involvement in medical decision making is a benefit or a threat.

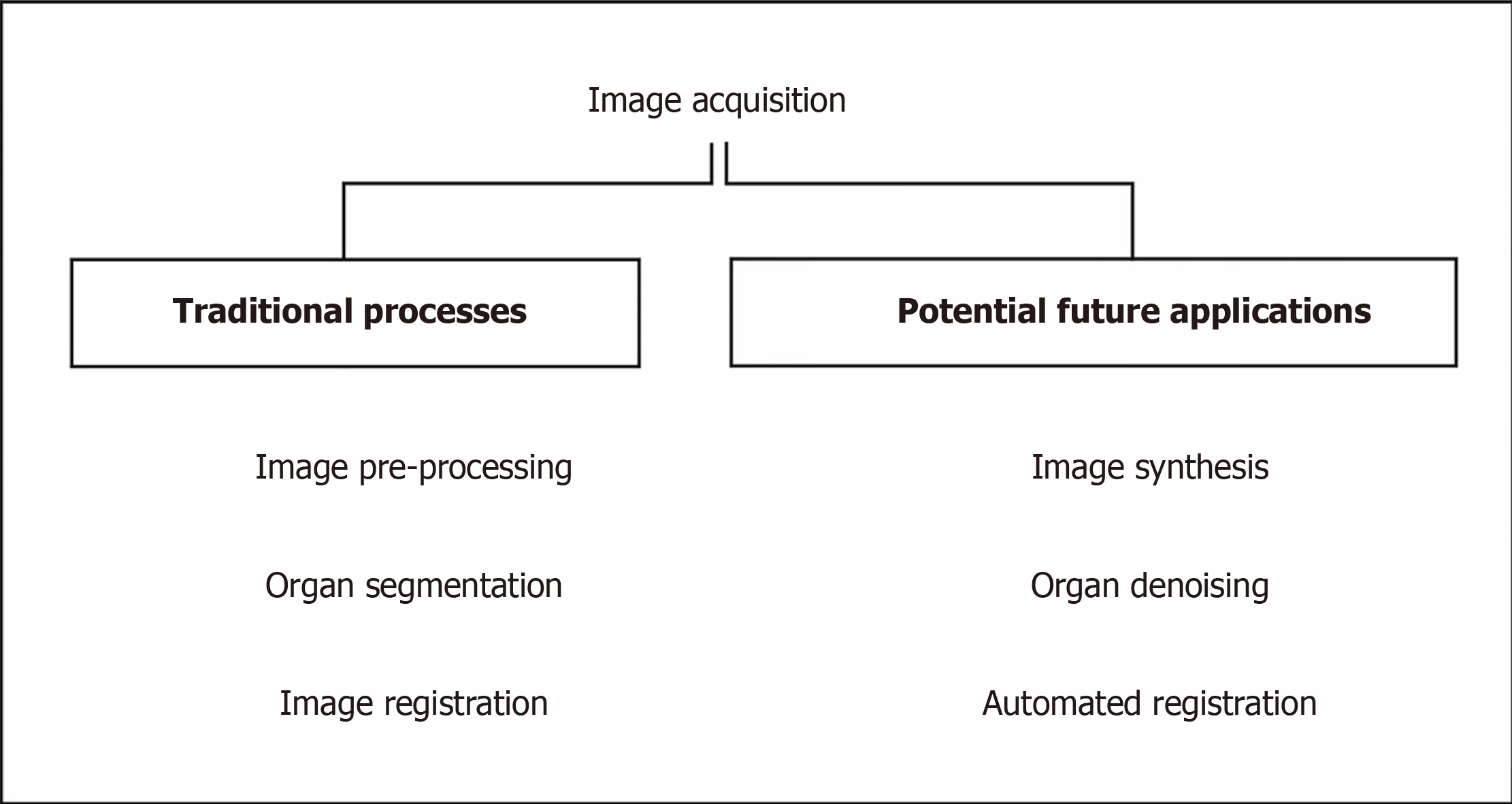

RT planning images are used to segment and contour the organs at risk (OARs) and the target volume (TV), and to plan the treatment. These images require accurate geometric coordinates and excellent image contrast so that the target can be contoured accurately. A summary flow chart of image acquisition is shown in Figure 3. A further prerequisite is a correct electron density for the imaged tissues: treatment planning uses it to calculate how much of the treatment beam is attenuated and absorbed by the tissues, so that an accurate dose can be delivered to the tumour.

Magnetic resonance imaging (MRI) offers superior soft tissue contrast for tissues such as the brain and prostate, allowing more accurate lesion localization, but MRI signal intensities do not correlate with electron density. In current practice, MRI therefore has to be fused with computed tomography (CT), so that when physicians contour on one set of images, the aligned geometric coordinates ensure correct contour registration. However, the patient may then need both MRI and CT scans, and even diagnostic positron emission tomography (PET) images, beforehand. To reduce this workload and increase the efficiency of MRI and CT scanners, a range of image synthesis studies based on deep learning have been carried out[3]. The following are applications of AI-based image synthesis and enhancement in image acquisition; Table 1 summarises the most recent work.

| Ref. | Architecture | Purpose |
| --- | --- | --- |
| Fu et al[5], 2020 | Cycle-consistent generative adversarial network | To enable pseudo-CT-aided CT-MRI image registration |
| Liu et al[6], 2019 | Cycle generative adversarial network | To derive electron density from routine anatomical MRI for MRI-based SBRT treatment planning |
| Liu et al[7], 2019 | 3D cycle-consistent generative adversarial network | To generate pelvic synthetic CT for prostate proton therapy treatment planning |
| Lei et al[8], 2020 | Cycle generative adversarial network for synthesis and fully convolutional neural network for delineation | To help segment and delineate the prostate target via pseudo-MR synthesis from CT |
| Dong et al[9], 2016 | Super-resolution convolutional neural network | To develop a novel CNN for mapping between low- and high-resolution images |
| Bahrami et al[10], 2016 | Convolutional neural network | To reconstruct 7T-like super-resolution MRI from 3T MR images |
| Qu et al[11], 2020 | Wavelet-based affine transformation layers network | To synthesize 7T MRI from 3T MR images with quality superior to existing 7T MR images |
| Yang et al[12], 2018 | Generative adversarial network with Wasserstein distance and perceptual loss function | To denoise low-dose CT images and improve contrast for lesion detection |
| Chen et al[14], 2017 | Deep convolutional neural network | To learn the mapping between low- and normal-dose images so as to efficiently reduce noise in low-dose CT |
| Wang et al[13], 2019 | Cycle-consistent adversarial network with residual blocks and attention gates | To improve the contrast-to-noise ratio of low-dose CT simulation for brain stereotactic radiosurgery |

Several studies have synthesized pseudo-CT images from MRI to help register different image modalities or delineate targets[4]. Fu et al[5] used a cycle-consistent generative adversarial network to synthesize CT images from MRI. The synthetic CT can either assist CT-MRI registration, by being registered directly to the original CT, or enable MR-only treatment planning, with the synthetic CT generated from the scanned MR image. In addition, Liu et al[6,7] generated synthetic CT from routine anatomical MRI to derive electron density, making MRI-only treatment planning possible for liver and prostate cancer.

Conversely, pseudo-MRI can be synthesized from CT: Lei et al[8] synthesized MR images from CT and delineated the prostate target on the synthetic MR images using a fully convolutional network.

To improve image resolution and quality, Dong et al[9] presented a super-resolution convolutional neural network that learns a mapping between low- and high-resolution images, producing higher-resolution images than other approaches. Bahrami et al[10] and Qu et al[11] also used deep learning to synthesize 7T MRI from routine 3T MRI. The higher resolution gives better tissue contrast, which can improve contouring accuracy without adding dose or scanning time to the patient's simulation.

Image denoising is important for improving the signal-to-noise ratio of low-dose CT. Yang et al[12] introduced a CT denoising method using a generative adversarial network (GAN) with Wasserstein distance and perceptual similarity, so that low-dose CT can serve as conventional CT while keeping the patient's radiation dose low. Chen et al[14] trained a deep CNN to map low-dose to normal-dose CT images, and Wang et al[13] used a cycle-consistent adversarial network with residual blocks and attention gates; both produced images with reduced noise and an improved contrast-to-noise ratio.

With the introduction of machine learning and deep learning, images of various modalities can be synthesized artificially: oncologists can refer to them, draw contours on the images with superior tissue contrast, and fuse the results in the treatment planning software. This can greatly reduce the time patients spend being scanned with different modalities. In addition, improved tissue contrast and resolution can reduce uncertainty, allowing smaller target margins and improving the dosimetric accuracy of RT.

Image segmentation, the partitioning of an image into sets of pixels[16], is an important routine step in RT for distinguishing anatomical structures and targets[15]. Before the advent of AI, radiation oncologists segmented the regions of interest on RT simulation scans (i.e., CT and MRI) manually. Early computer-based methods used rigid algorithms that required human intervention, professional judgement and experience; these include thresholding, K-means clustering, histogram-based image segmentation and edge detection[16].
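Two of the classical rules listed above, intensity thresholding and K-means clustering, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a clinical tool: the pixel intensities, the threshold of 50 and the choice of two clusters are arbitrary, and a real image would be a 2D or 3D array rather than a short list.

```python
# Minimal sketch of two classical segmentation rules.
# Assumption: a short list of made-up pixel intensities stands in for an image.


def threshold_segment(pixels, threshold):
    """Label a pixel 1 if its intensity exceeds `threshold`, otherwise 0."""
    return [1 if p > threshold else 0 for p in pixels]


def kmeans_1d(pixels, k=2, iterations=20):
    """Cluster pixel intensities into k groups with Lloyd's algorithm.

    Centroids start evenly spaced between the darkest and brightest pixel,
    so the result is deterministic. Returns (labels, centroids)."""
    if k < 2:
        raise ValueError("k must be at least 2")
    lo, hi = min(pixels), max(pixels)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    labels = [0] * len(pixels)
    for _ in range(iterations):
        # Assignment step: each pixel joins its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(p - centroids[c])) for p in pixels]
        # Update step: each centroid moves to the mean of its assigned pixels.
        for c in range(k):
            members = [p for p, label in zip(pixels, labels) if label == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return labels, centroids


if __name__ == "__main__":
    image = [10, 12, 11, 80, 85, 90, 82, 13, 9]  # dark background, bright structure
    print(threshold_segment(image, threshold=50))  # [0, 0, 0, 1, 1, 1, 1, 0, 0]
    print(kmeans_1d(image))  # ([0, 0, 0, 1, 1, 1, 1, 0, 0], [11.0, 84.25])
```

Both rules look only at intensity, which is why they need a human to pick the threshold or check the clusters when structures of similar intensity touch.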

The long duration of manual segmentation is one of the main reasons for delays in starting RT, especially in clinics with limited resources, and this workflow inefficiency lowers locoregional control and overall survival rates. Manual segmentation also hinders adaptive RT, because new images showing the patient's anatomical changes must be segmented after each treatment cycle for accurate dose accumulation estimation[15].

Accurate segmentation of the TV and OARs is necessary for RT plans, but manual segmentation has been reported to suffer from inconsistency, such as inter- and intra-observer variability. This is because the task is subjective: decisions are based on an individual's knowledge, judgement and experience. Quantitative and dosimetric analyses are therefore affected to varying degrees. An AI tool with less inherent variability would be invaluable for addressing this issue. To keep up with modern developments, automatic segmentation is needed; it must overcome image-related problems and provide accurate, efficient and safe RT planning[15].

There are many types of segmentation, such as atlas-based and image-based segmentation. Deep learning for segmentation is a very broad topic, and several architectures are used in broader medical applications (Table 1).

The limited availability of segmented data and computing power was the main reason segmentation remained manual in earlier years. Most early segmentation techniques used little or no prior knowledge and are regarded as low-level segmentation approaches. Examples include region growing, heuristic edge detection and intensity thresholding algorithms[15].
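Region growing, one of the low-level approaches named above, can be sketched as a breadth-first search from a seed pixel. The sketch assumes 4-connectivity and a fixed intensity tolerance around the seed value; both choices, and the small made-up image, are illustrative assumptions rather than details from the cited work.

```python
from collections import deque


def region_grow(image, seed, tolerance):
    """Grow a region from `seed` (row, col) through 4-connected neighbours
    whose intensity lies within `tolerance` of the seed intensity.

    Returns the set of (row, col) pixels in the region."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and (nr, nc) not in region and abs(image[nr][nc] - seed_value) <= tolerance:
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


if __name__ == "__main__":
    image = [
        [10, 10, 10, 10],
        [10, 80, 82, 10],
        [10, 79, 81, 10],
        [10, 10, 10, 85],
    ]
    # The bright pixel at (3, 3) has a similar intensity but is not connected.
    print(sorted(region_grow(image, seed=(1, 1), tolerance=5)))
    # [(1, 1), (1, 2), (2, 1), (2, 2)]
```

No anatomical prior enters the rule, which illustrates why such methods struggle when an organ and its neighbour have similar CT intensities.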

Over the past twenty years, considerable effort has been put into making use of prior knowledge in medical imaging. Anatomical knowledge, such as shape and appearance characteristics, is used to compensate for the insufficient soft tissue contrast of CT data and to produce an accurate definition of anatomical boundaries[15].

In recent years, deep learning-based auto-segmentation software has been shown to provide a great leap in performance over previous approaches. Deep learning became popular notably after the seminal paper by Krizhevsky et al[17] (2012), which showed much improved performance in image classification and recognition tasks using a deep convolutional neural network (CNN) architecture called AlexNet. Further research applied CNNs to image segmentation, with results that outperformed prior algorithms, leading to rapid adoption of deep learning for auto-segmentation of medical images[15].

A CNN takes an image, or segments of it, as input and labels its pixels. The network scans the image with a small filter, observing one local region at a time, until the entire image has been mapped[18].
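The sliding-filter operation described above is, in a single CNN layer, a 2D cross-correlation. The minimal sketch below assumes stride 1 and no padding, and uses a hand-set edge-detecting kernel purely for illustration; in a trained CNN the kernel weights are learned, and many such filters are stacked with non-linear activations.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding.

    Each output value is the weighted sum of the pixels under the filter,
    i.e. the cross-correlation that a CNN convolution layer computes."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


if __name__ == "__main__":
    image = [[0, 0, 1, 1] for _ in range(4)]  # vertical edge between columns 1 and 2
    kernel = [[-1, 1], [-1, 1]]               # hypothetical hand-set edge filter
    print(conv2d_valid(image, kernel))
    # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The output is large only where the filter straddles the edge, which is how early CNN layers turn raw pixels into local feature maps.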

Automatic segmentation is usually used in conjunction with manual and semiautomatic segmentation. Manual segmentation requires considerable time and expertise and often has poor reproducibility. Semiautomatic segmentation still relies on human involvement, so errors can also be expected. Automatic segmentation can provide more accurate results with minimal errors; however, limitations such as noise, partial volume effects, the complexity of three-dimensional (3D) spatial multiclass features, and spatial and structural variability hinder its effectiveness[19].

DeepLab[20], U-Net[21], fully convolutional networks (FCNs)[22], dense FCNs and residual dense FCNs are some of the state-of-the-art neural networks that have been used to tackle these issues. Qayyum et al[23] proposed volumetric convolutions that process 3D input slices as a volume, with no post-processing steps required. This provided accurate and robust segmentation of a patient's complete volume at once.

The proposed model was compared with current state-of-the-art methods on the SegTHOR 2019 dataset. This dataset is challenging because the organs have low contrast in CT images and vary greatly in shape and position from slice to slice. The dataset poses a multiclass problem, and performance metrics were used to evaluate both existing deep learning methods and the method proposed by Qayyum et al[23]. The proposed model improved segmentation performance and produced superior results compared with existing methods.
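The paragraph above does not name the performance metrics used. One widely used overlap measure for comparing an automatic contour with a reference contour (or the contours of two observers) is the Dice similarity coefficient; the sketch below computes it for two small made-up binary masks and is not taken from the cited study.

```python
def dice_coefficient(mask_a, mask_b):
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) of two binary masks.

    Masks are equally shaped nested lists of 0/1; two empty masks score 1.0."""
    flat_a = [v for row in mask_a for v in row]
    flat_b = [v for row in mask_b for v in row]
    if len(flat_a) != len(flat_b):
        raise ValueError("masks must have the same shape")
    overlap = sum(1 for a, b in zip(flat_a, flat_b) if a and b)
    size_sum = sum(flat_a) + sum(flat_b)
    return 1.0 if size_sum == 0 else 2.0 * overlap / size_sum


if __name__ == "__main__":
    manual = [[0, 1, 1], [0, 1, 1], [0, 0, 0]]  # hypothetical reference contour
    auto = [[0, 1, 1], [0, 1, 0], [0, 1, 0]]    # hypothetical automatic contour
    print(dice_coefficient(manual, auto))  # 0.75
```

A score of 1.0 means identical masks and 0.0 means no overlap, so the same function can also quantify the inter-observer variability discussed earlier.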

Training deep neural networks (DNNs) on 3D data is challenging, as most deep learning segmentation architectures are based on FCNs. An FCN has a fixed receptive field, so objects of varying size can cause segmentation to fail. Increasing the field of view and using a sliding window over the complete images can address the fixed-field issue[23].

Several other issues have been reported, such as overfitting, prolonged training time and vanishing gradients. Target organs with heterogeneous appearance and ill-defined borders pose a great challenge to automatic segmentation. Heterogeneity of appearance even within a single disease entity is a further challenge: the appearance of the target can change from patient to patient, and also within a patient between treatment cycles (as is often caused by tumour necrosis). These issues can degrade the performance of 3D deep learning models on 3D volumetric datasets. An atrous spatial pyramid pooling module, which captures multiscale contextual feature information, can help handle changes in the size, location and appearance of the target organs and nearby tissues[23].
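The fixed receptive field and the role of atrous (dilated) convolution can be made concrete with a small calculation. The sketch below uses the standard receptive-field recurrence for stacked convolutions; the layer stacks and the dilation rates 6, 12 and 18 are illustrative assumptions, not the configuration used by Qayyum et al[23].

```python
def receptive_field(layers):
    """Receptive field, in input pixels along one axis, of stacked conv layers.

    Each layer is (kernel_size, stride, dilation). A dilated kernel spans
    dilation * (kernel_size - 1) + 1 input positions, and each stride multiplies
    the spacing ("jump") between neighbouring outputs in input coordinates."""
    field, jump = 1, 1
    for kernel, stride, dilation in layers:
        span = dilation * (kernel - 1) + 1
        field += (span - 1) * jump
        jump *= stride
    return field


if __name__ == "__main__":
    plain = [(3, 1, 1)] * 3
    dilated = [(3, 1, 1), (3, 1, 2), (3, 1, 4)]
    print(receptive_field(plain), receptive_field(dilated))  # 7 15
    # ASPP-style parallel branches: one 3x3 atrous conv per rate (rates illustrative).
    print([receptive_field([(3, 1, rate)]) for rate in (6, 12, 18)])  # [13, 25, 37]
```

With the same number of weights, dilation enlarges the context each output sees, and running several rates in parallel, as a pyramid pooling module does, lets one layer respond to structures of different sizes.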

Data paucity is another issue: training an accurate deep learning segmentation model requires a large amount of annotated data. An increasing number of open-source labelled datasets are available in medical imaging[24-26], but larger and more diverse datasets are needed to drive innovation in this field.

In modern RT, it is crucial to maximise the radiation dose to the tumour while minimising radiation and potential damage to the surrounding healthy tissues. Intensity-modulated RT (IMRT) and volumetric-modulated arc therapy (VMAT) are two standard external beam RT techniques that achieve this tissue-sparing effect while delivering a suitable dose to the planning TV. Treatment planning involves dose calculations and dose-volume histograms (DVHs), which are used to evaluate the dose to various organs and help staff judge plan quality. Plans require considerable time and effort to produce because of the dose constraints and inter-operator variation[27-29].
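A cumulative DVH, the plan-evaluation tool mentioned above, reports for each dose level the fraction of a structure's volume receiving at least that dose. The minimal sketch below assumes equal-volume voxels and uses made-up rectum voxel doses; clinical systems compute DVHs from 3D dose grids and contoured structures.

```python
def cumulative_dvh(voxel_doses, dose_levels):
    """Fraction of a structure's volume receiving at least each dose level.

    Assumes every voxel has the same volume, so volume fractions are simply
    fractions of the voxel count."""
    n = len(voxel_doses)
    return [sum(1 for d in voxel_doses if d >= level) / n for level in dose_levels]


if __name__ == "__main__":
    rectum_doses_gy = [10, 20, 30, 40, 50, 60, 65, 70, 20, 35]  # made-up voxel doses
    levels_gy = [0, 20, 40, 60]
    for level, fraction in zip(levels_gy, cumulative_dvh(rectum_doses_gy, levels_gy)):
        print(f"V{level}Gy = {fraction:.0%}")
    # V0Gy = 100%, V20Gy = 90%, V40Gy = 50%, V60Gy = 30%
```

Points on this curve (such as the V60Gy value) are what dose constraints for OARs are usually written against.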

Accurate DVH predictions are essential for automated treatment planning, and these predictions have improved steadily over the past decade. Proposed concepts include the overlap volume histogram, which describes the geometry of OARs relative to the target, and methods that search a clinical database for similar plans to guide planning for new patients. More recently, deep learning methods have been used to predict the 3D dose distribution. Because DNNs are data-driven, their prediction accuracy relies heavily on the amount and quality of training samples. Performance can also be affected by parameters such as the beam arrangement and voxel spacing of the treatment plan. The robustness of the prediction model can be enhanced with additional pre-processing layers and data augmentation. Pre-training a DNN with a de-noising auto-encoder allows more robust features to be learnt, so that even a less complex network can achieve excellent feature-fitting capability[27].
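The overlap volume histogram mentioned above summarises how close an OAR lies to the target. The sketch below is a deliberately simplified version under stated assumptions: it uses the unsigned distance from each OAR voxel to the nearest target voxel (zero for voxels inside the target), whereas published OVH definitions use a signed distance to the target surface. The voxel coordinates are made up.

```python
import math


def overlap_volume_histogram(oar_voxels, target_voxels, distances_mm):
    """Simplified OVH: for each distance r, the fraction of OAR voxels lying
    within r of the nearest target voxel (unsigned distance; OAR voxels that
    coincide with target voxels have distance 0)."""
    nearest = [min(math.dist(v, t) for t in target_voxels) for v in oar_voxels]
    return [sum(1 for d in nearest if d <= r) / len(nearest) for r in distances_mm]


if __name__ == "__main__":
    target = [(0, 0), (1, 0)]                  # hypothetical target voxel centres (mm)
    oar = [(1, 0), (3, 0), (5, 0), (1, 4)]     # hypothetical OAR voxel centres (mm)
    print(overlap_volume_histogram(oar, target, [0, 2, 4]))  # [0.25, 0.5, 1.0]
```

An OAR whose curve rises steeply at small distances sits close to the target and is expected to be harder to spare, which is the geometric intuition OVH-based DVH prediction relies on.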

Treatment planning is time-consuming, and the approach each planner takes to optimisation can affect the quality of the outcome[30-32]. Automating the treatment planning process could reduce the manual labour required and the inter-observer variation in dose planning. Overall plan quality is generally expected to improve with the use of AI[32].

The dosimetrist defines the dose objectives, usually according to institution-specific guidelines, and these determine the dose distribution. However, guidelines cannot provide an optimal dose distribution for a specific patient, because the lowest achievable dose to that patient's surrounding healthy tissues is not known in advance. Each treatment plan is therefore patient-specific and produced by trained dosimetrists. Plan optimisation remains labour-intensive, which makes it difficult to ensure that each clinical plan is properly optimised. These concerns motivate automation as a way to reduce the time spent on plans and the variation between dosimetrists[32].
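How dose objectives drive the dose distribution can be illustrated with a generic textbook-style fluence optimisation. This is a hypothetical sketch, not the method of any cited study or commercial system: dose is assumed linear in beamlet weights (d = A w), target voxels are penalised quadratically for deviating from the prescription, OAR voxels are penalised only above a limit, and weights are kept non-negative by projected gradient descent. All matrices and parameter values are made up, in relative dose units.

```python
def objective_and_gradient(weights, a_target, a_oar, prescription, oar_limit, oar_penalty):
    """Quadratic planning objective and its gradient for dose d = A w.

    Target voxels are penalised for any deviation from the prescription;
    OAR voxels only for dose above oar_limit (a one-sided penalty)."""
    value, grad = 0.0, [0.0] * len(weights)
    for row in a_target:
        diff = sum(a * w for a, w in zip(row, weights)) - prescription
        value += diff ** 2
        for k, a in enumerate(row):
            grad[k] += 2.0 * diff * a
    for row in a_oar:
        excess = max(0.0, sum(a * w for a, w in zip(row, weights)) - oar_limit)
        value += oar_penalty * excess ** 2
        for k, a in enumerate(row):
            grad[k] += 2.0 * oar_penalty * excess * a
    return value, grad


def optimise_weights(a_target, a_oar, prescription=1.0, oar_limit=0.3,
                     oar_penalty=5.0, step=0.01, iterations=2000):
    """Projected gradient descent: beamlet weights are clipped at zero."""
    weights = [0.0] * len(a_target[0])
    for _ in range(iterations):
        _, grad = objective_and_gradient(weights, a_target, a_oar,
                                         prescription, oar_limit, oar_penalty)
        weights = [max(0.0, w - step * g) for w, g in zip(weights, grad)]
    return weights


if __name__ == "__main__":
    # Hypothetical dose-influence matrices: rows are voxels, columns are beamlets.
    A_TARGET = [[1.0, 0.2], [0.8, 0.5], [0.5, 0.9]]
    A_OAR = [[0.6, 0.1], [0.1, 0.6]]
    w = optimise_weights(A_TARGET, A_OAR)
    print(w, objective_and_gradient(w, A_TARGET, A_OAR, 1.0, 0.3, 5.0)[0])
```

Changing `oar_limit` or `oar_penalty` changes the optimum, which is the trial-and-error tuning that makes manual planning labour-intensive.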

According to Nawa et al[28] (2017) and Hazell et al[32] (2016), auto-planning software produces comparable or better plans for prostate cancer. For most OARs, the DVH dose levels were significantly better, and auto-planning produced clinically acceptable plans in all cases. Similar results were obtained for head and neck cancer. Dosimetrists could therefore spend more time on difficult planning goals and fine-tuning specific areas, and less time on the mundane tasks of the planning process[32].

In the future, AI is expected to accurately identify both normal tissue and the TV during treatment and to estimate the best modality and beam arrangement from various clinical options. This would increase local tumour control and reduce the risk of toxicity to surrounding normal tissue. Integrating clinically relevant data from other sources will also allow AI systems to tailor the treatment approach beyond current state-of-the-art methods. The time burden of human intervention and the overall processing time could be reduced substantially[2].

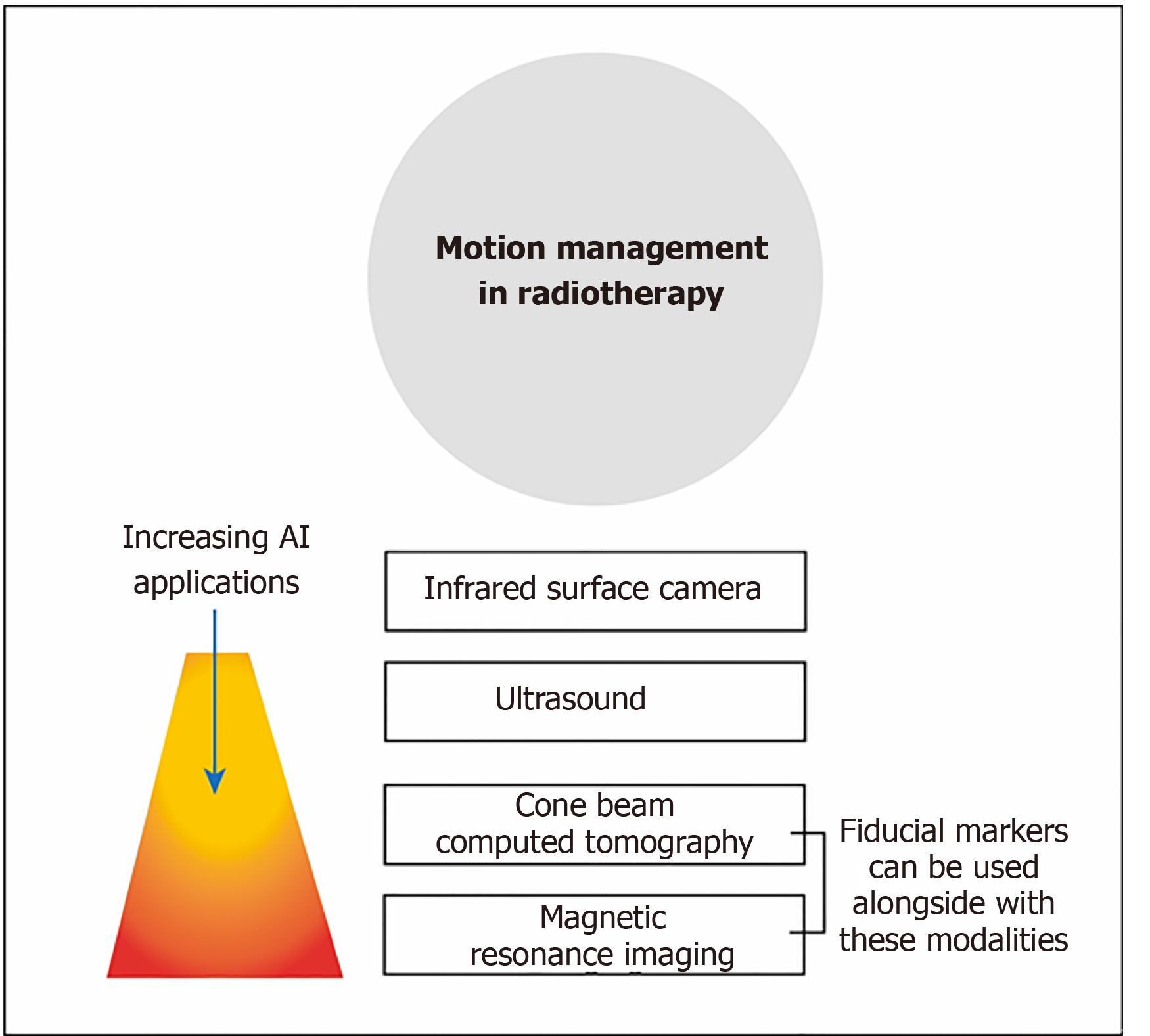

Integrated cone-beam CT (CBCT) is commonly used to image the patient's position. Because CBCT image quality is much lower than that of planning CT, AI can be used to improve CBCT image quality and enable more accurate positioning for treatment[33]. Other imaging techniques, such as onboard MRI, ultrasound and infrared surface cameras, are used to monitor patient motion, as shown in Figure 4. These provide opportunities for AI to refine and enhance monitoring during treatment[34].

Patient or organ motion during treatment causes inaccuracies in treatment delivery and inevitably increases the radiation dose to surrounding healthy tissues. Motion management techniques are used to monitor the extent of motion due to respiration or digestion[35]. AI could be used to model these diverse variables by creating patient-specific dynamic motion management models[36]. The major task for predictive algorithms is to follow complex breathing patterns in real time so that tumour motion can be tracked accurately[37].
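A predictive motion algorithm forecasts where the target will be a short time ahead, which can compensate for system latency. As a minimal, hypothetical baseline (not a method from the cited works), the sketch fits a second-order autoregressive model to a synthetic, perfectly regular breathing trace by least squares and extrapolates it; the 10 mm amplitude, 4 s period and 0.1 s sampling interval are assumptions, and real breathing is irregular, which is why more flexible AI models are studied.

```python
import math


def fit_ar2(signal):
    """Least-squares fit of x[t] ~ a1*x[t-1] + a2*x[t-2]; returns (a1, a2)."""
    s11 = s12 = s22 = b1 = b2 = 0.0
    for t in range(2, len(signal)):
        x1, x2, y = signal[t - 1], signal[t - 2], signal[t]
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        b1 += y * x1
        b2 += y * x2
    det = s11 * s22 - s12 * s12
    # Solve the 2x2 normal equations by Cramer's rule.
    a1 = (b1 * s22 - b2 * s12) / det
    a2 = (s11 * b2 - s12 * b1) / det
    return a1, a2


def predict_ahead(history, coeffs, steps):
    """Iterate the fitted AR(2) model `steps` samples beyond `history`."""
    a1, a2 = coeffs
    values = list(history[-2:])
    predictions = []
    for _ in range(steps):
        nxt = a1 * values[-1] + a2 * values[-2]
        predictions.append(nxt)
        values.append(nxt)
    return predictions


if __name__ == "__main__":
    dt, period, amplitude = 0.1, 4.0, 10.0  # hypothetical: seconds, seconds, mm
    trace = [amplitude * math.sin(2 * math.pi * t * dt / period) for t in range(200)]
    coeffs = fit_ar2(trace[:150])
    print(coeffs, predict_ahead(trace[:150], coeffs, 5), trace[150:155])
```

For a pure sinusoid the fitted coefficients approach 2cos(ω) and -1, so the forecast is essentially exact; on real traces the residual error is what better predictors try to reduce.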

The patient's anatomy can change between the planning appointment and treatment delivery, and throughout the course of treatment. Re-planning is necessary when the tumour shrinks or grows, and sometimes with anatomical variations such as movement of internal organs or gas or liquid filling of the bowels and stomach. Adaptive treatment requires a new plan based on up-to-date images of the patient's anatomy. AI tools can help predict the patient's geometric changes throughout treatment and thus identify the ideal time point for adaptation[34].

Apart from conventional CBCT images, AI is involved in motion tracking in RT using MR images. MRI provides superior soft-tissue contrast compared with conventional CT, and target delineation on MRI has therefore become more widespread in prostate cancer RT[38]. However, when MRI and CT are combined in RT planning, registering the prostate between MRI and CT introduces a spatial uncertainty of < 2 mm[39]. A systematic registration error could lead to a treatment error in which the dose distribution does not conform to the intended target, compromising tumour control[40].

As briefly mentioned in section 1, one way to minimise this error is to implement an MRI-only workflow, so that the plan does not rely on CT images. Gold fiducial markers are commonly used for target positioning in prostate cancer; in MRI they are detected using the difference in magnetic susceptibility between the gold markers and the nearby tissue. Gustafsson et al[40] proposed a multi-echo gradient echo sequence for identifying the fiducial markers. Automatic detection of gold fiducial markers can save time and resources and remove inter-observer differences. In the experiments of Gustafsson et al[40] and Persson et al[41], true positive detection rates of 97.4% and 99.6%, respectively, were achieved. These results were comparable to those of manual observers and better than most automatic detection methods not based on deep learning. A quality control method was also introduced to alert clinical staff when detection failed, a step towards AI automation of MRI-only RT, especially for the prostate[40].
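A true positive detection rate such as those reported above is the fraction of real markers that the detector finds. The sketch shows one plausible way to compute it, assuming greedy one-to-one matching of detections to ground-truth markers within a distance tolerance; the 2 mm tolerance and the coordinates are hypothetical, and the cited studies may define a hit differently.

```python
import math


def true_positive_rate(true_markers, detections, tolerance_mm):
    """Fraction of true markers matched one-to-one by a detection within tolerance."""
    unmatched = list(detections)
    hits = 0
    for marker in true_markers:
        best_index, best_distance = None, tolerance_mm
        for i, candidate in enumerate(unmatched):
            distance = math.dist(marker, candidate)
            if distance <= best_distance:
                best_index, best_distance = i, distance
        if best_index is not None:
            hits += 1
            unmatched.pop(best_index)  # each detection can match only one marker
    return hits / len(true_markers)


if __name__ == "__main__":
    truth = [(0, 0, 0), (10, 0, 0), (0, 10, 0)]            # hypothetical marker centres (mm)
    found = [(0.5, 0, 0), (10, 1, 0), (20, 20, 0)]         # hypothetical detections (mm)
    print(true_positive_rate(truth, found, tolerance_mm=2.0))  # 0.666...
```

The unmatched detection at (20, 20, 0) would count as a false positive, which a separate metric would report.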

Besides monitoring anatomical changes and motion during treatment, AI is heavily involved in delivering the treatment beam. VMAT is one of the current standard RT techniques, but VMAT treatment planning is currently time-consuming[42]. Machine parameter optimization (MPO) determines the sequence of linac parameters, such as multileaf collimator (MLC) movements. Planning usually involves manual trial and error to find optimizer inputs that produce an acceptable plan, and because the optimizer is run multiple times, the execution time escalates further. A fast VMAT MPO algorithm is therefore needed, so that MPO can be executed multiple times for online adaptive planning while the patient is in the treatment position[43-45].

Reinforcement learning (RL) is a machine learning approach in which a model learns, through trial and error in a simulated environment, the sequence of actions that reduces a cost as far as possible. Once trained, it can be applied to new cases to quickly optimise treatment plans, machine parameters and the corresponding dose distributions. In the cited study, the RL VMAT approach produced rapid and consistent results in both the training and test cohorts, indicating a generalisable machine control policy without notable overfitting despite the small number of training patients. The total execution time for plan optimisation was 30 s, with the potential for further reduction because the algorithm can be parallelised across different slices of the plan[45].
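The trial-and-error learning described above can be seen in miniature with tabular Q-learning. This is a toy example and not the cited VMAT method: the hypothetical environment is a single MLC leaf on 11 discrete positions that should settle at position 6, the cost is the distance to that position, and all learning parameters are illustrative.

```python
import random

# Hypothetical toy environment: one MLC leaf on 11 discrete positions that
# should settle at position 6. Positions, target and costs are illustrative only.
POSITIONS = range(11)
TARGET = 6
ACTIONS = (-1, 0, 1)  # move left, stay, move right


def step(position, action):
    """Apply an action; the reward is the negative distance to the target."""
    new_position = min(max(position + action, 0), 10)
    return new_position, -abs(new_position - TARGET)


def train_q_table(episodes=2000, steps=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in POSITIONS for a in ACTIONS}
    for _ in range(episodes):
        state = rng.choice(POSITIONS)
        for _ in range(steps):
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            next_state, reward = step(state, action)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            # Move the estimate towards reward + discounted best future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q):
    """Best learned action for every leaf position."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in POSITIONS}


if __name__ == "__main__":
    print(greedy_policy(train_q_table()))
```

Once trained, applying the policy is just a table lookup, which mirrors why a trained RL agent can produce machine parameters quickly for a new case.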

Dose calculation for RT using Monte Carlo (MC) simulation is very time-consuming[1]. A kernel-based algorithm using a DNN, proposed by Debus et al[46], calculates the peak and valley doses within a few minutes, with little difference from MC simulation.
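Why MC is slow can be seen from its statistics: the uncertainty of an MC estimate falls only with the square root of the number of simulated particle histories. The toy sketch below estimates the fraction of photons crossing a uniform slab without interacting, by sampling exponential free path lengths, and compares it with the analytic value exp(-μx). The attenuation coefficient and thickness are illustrative assumptions; a real MC dose engine tracks full particle transport in 3D.

```python
import math
import random


def mc_transmission(mu_per_cm, thickness_cm, histories, seed=0):
    """Estimate the fraction of photons that cross a slab without interacting.

    Each history samples a free path length from an exponential distribution
    with rate mu; the photon is transmitted if that path exceeds the slab."""
    rng = random.Random(seed)
    transmitted = sum(1 for _ in range(histories) if rng.expovariate(mu_per_cm) > thickness_cm)
    return transmitted / histories


def standard_error(p, histories):
    """Binomial standard error of an estimated fraction p."""
    return math.sqrt(p * (1.0 - p) / histories)


if __name__ == "__main__":
    mu, x = 0.2, 5.0  # illustrative attenuation coefficient (1/cm) and thickness (cm)
    exact = math.exp(-mu * x)
    for n in (1_000, 100_000):
        estimate = mc_transmission(mu, x, n)
        print(n, round(estimate, 4), round(exact, 4), round(standard_error(exact, n), 4))
```

Halving the statistical error requires four times as many histories, so a low-noise 3D dose calculation needs very large numbers of histories, which is the cost a learned model aims to avoid.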

Beyond fast dose calculation, kernel-based algorithms have been used to identify optimal beam angles for IMRT plans; the optimised beam angles spared the OARs better in pancreatic and intracranial cancers[47]. Kernel-based algorithms also offer a low-cost computational solution for markerless tracking of tumour motion, for example in kilovoltage fluoroscopic image sequences in image-guided RT (IGRT). The kernel-based algorithm tracked better than the conventional template matching method, with performance comparable to the fluoroscopic image sequence[48]. DNNs have also been used to interpret projection X-ray images for markerless prostate localization; the experimental results show high accuracy, suitable for real-time tracking of the prostate and patient position.
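The conventional template matching that the kernel-based tracker was compared against can be sketched as an exhaustive search that scores every candidate position by normalised cross-correlation (NCC) with a reference patch. NCC is one common form of template matching; whether the baseline in [48] used exactly this variant is an assumption, and the image and template values are made up.

```python
import math


def ncc(patch, template):
    """Normalised cross-correlation of two equally sized 2D patches (-1 to 1)."""
    p = [v for row in patch for v in row]
    t = [v for row in template for v in row]
    mean_p, mean_t = sum(p) / len(p), sum(t) / len(t)
    num = sum((a - mean_p) * (b - mean_t) for a, b in zip(p, t))
    den = math.sqrt(sum((a - mean_p) ** 2 for a in p) * sum((b - mean_t) ** 2 for b in t))
    return 0.0 if den == 0 else num / den


def match_template(image, template):
    """Return the top-left (row, col) with the highest NCC, and that score."""
    th, tw = len(template), len(template[0])
    best_score, best_pos = -2.0, None
    for r in range(len(image) - th + 1):
        for c in range(len(image[0]) - tw + 1):
            patch = [row[c:c + tw] for row in image[r:r + th]]
            score = ncc(patch, template)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score


if __name__ == "__main__":
    template = [[1, 2], [3, 4]]                 # hypothetical tumour appearance
    frame = [[0] * 6 for _ in range(6)]         # hypothetical fluoroscopic frame
    frame[3][2], frame[3][3], frame[4][2], frame[4][3] = 1, 2, 3, 4
    print(match_template(frame, template))  # ((3, 2), 1.0)
```

Because the score only measures resemblance to a fixed template, this approach degrades when the tumour's appearance changes or is obscured, which is where learned trackers can help.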

QA is a way to identify and eliminate errors in radiation planning and delivery and, more importantly, to ensure consistent quality of treatment plans. It is an important tool for evaluating the dosimetric and geometric accuracy of the machine and treatment plans. Many QA studies have used deep learning and machine learning techniques[50,51] to improve the accuracy and efficiency of QA procedures, and most adopt a 'human creates, machine verifies' approach. The following are applications of AI to QA in RT, summarised in Table 2.

| Ref. | Architecture | Purpose |
| --- | --- | --- |
| Chang et al[52], 2017 | Bayesian network model | To verify prescriptions and detect physician prescription errors in external beam radiotherapy |
| Kalet et al[53], 2015 | Bayesian network model | To detect unusual outliers in treatment plan parameters |
| Tomori et al[54], 2018 | Convolutional neural network | To predict gamma evaluation results of patient-specific QA in prostate treatment planning |
| Nyflot et al[55], 2019 | Convolutional neural network | To detect introduced RT delivery errors from patient-specific IMRT QA gamma images |
| Granville et al[56], 2019 | Support vector classifier | To predict VMAT patient-specific QA results |
| Li et al[57], 2017 | Artificial neural networks (ANNs) and autoregressive moving average (ARMA) time-series prediction modelling | To evaluate how well the two methods (ANNs and ARMA) predict Linac dosimetry trends from routine machine data |

Machine learning can be applied to automated RT plan verification, in which the human-created treatment plan is checked for outliers in plan parameters and for error-containing contours. Chang et al[52] developed a Bayesian network model to detect errors in external beam RT physician orders, covering total prescription dose, modality and patient setup options, so that these errors can be identified and rectified as early as possible without re-simulation and re-planning. Kalet et al[53] further analysed around 5000 treatment plans prescribed over 5 years and constructed a Bayesian learning model that estimates the probability of different RT parameters from the given clinical information. The model can be cross-referenced against physicians' prescriptions to safeguard against human error, for example by less experienced doctors. Such QA checking is not meant to override physicians' decisions in exceptional cases; it acts as supporting information and a safety net.
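The core idea of a probabilistic prescription check can be shown with a drastically simplified two-variable model (treatment site, then prescribed dose): estimate P(dose | site) from historical plans and flag orders whose probability is low. The cited Bayesian networks model many more variables; the sites, doses, smoothing constant and 0.05 threshold below are made-up illustrations, not clinical guidance.

```python
from collections import Counter, defaultdict


def fit_counts(history):
    """Count historical prescriptions: counts[site][dose_gy] = number of plans."""
    counts = defaultdict(Counter)
    for site, dose in history:
        counts[site][dose] += 1
    return counts


def dose_probability(counts, site, dose, alpha=1.0):
    """P(dose | site) with add-alpha (Laplace) smoothing over all known doses."""
    vocabulary = {d for per_site in counts.values() for d in per_site} | {dose}
    site_counts = counts.get(site, Counter())
    total = sum(site_counts.values())
    return (site_counts[dose] + alpha) / (total + alpha * len(vocabulary))


def flag_order(counts, site, dose, threshold=0.05):
    """Flag an order whose dose is improbable for the site (threshold is arbitrary)."""
    return dose_probability(counts, site, dose) < threshold


if __name__ == "__main__":
    history = ([("prostate", 78)] * 40 + [("prostate", 70)] * 10
               + [("breast", 50)] * 30 + [("breast", 40)] * 20)  # made-up plan history
    counts = fit_counts(history)
    print(flag_order(counts, "prostate", 78), flag_order(counts, "prostate", 50))  # False True
```

A flagged order is only a prompt for review, consistent with the safety-net role described above.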

Patient-specific QA is time-consuming, but it is the most direct and comprehensive way to validate an IMRT or VMAT plan that uses sophisticated MLC patterns. Tomori et al[54] used a CNN to predict the gamma passing rate of prostate cancer treatment plans from input training data (the volumes of the planning TV and rectum, and the monitor unit values of the individual fields). In the future, patient-specific QA may become fully automated. Nyflot et al[55] used a CNN with triplet learning to extract features from IMRT QA gamma comparison results and trained the model to detect introduced treatment delivery errors, such as MLC mispositioning, from the QA gamma results alone.
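The gamma passing rate that these models predict combines a dose-difference criterion and a distance-to-agreement (DTA) criterion: a reference point passes if some evaluated point is close enough in the combined dose-distance sense (gamma ≤ 1). The sketch below is a simplified 1D version, assuming 3%/3 mm criteria with global normalisation to the maximum reference dose and no interpolation between grid points; clinical software works in 2D or 3D and interpolates. The dose profile is made up.

```python
import math


def gamma_pass_rate(reference, evaluated, spacing_mm, dose_diff=0.03, dta_mm=3.0):
    """1D global gamma: fraction of reference points with gamma <= 1.

    Both profiles are sampled on the same grid with spacing `spacing_mm`;
    `dose_diff` is a fraction of the maximum reference dose. No interpolation
    between grid points is performed (a simplification)."""
    norm = dose_diff * max(reference)
    passed = 0
    for i, d_ref in enumerate(reference):
        gamma = min(
            math.sqrt(((i - j) * spacing_mm / dta_mm) ** 2 + ((d_eval - d_ref) / norm) ** 2)
            for j, d_eval in enumerate(evaluated)
        )
        if gamma <= 1.0:
            passed += 1
    return passed / len(reference)


if __name__ == "__main__":
    planned = [0, 0, 10, 50, 90, 100, 90, 50, 10, 0, 0]  # made-up dose profile (cGy)
    shifted = [0] + planned[:-1]                           # 1 mm delivery shift
    scaled = [round(d * 1.1) for d in planned]             # 10% output error
    for measured in (planned, shifted, scaled):
        print(gamma_pass_rate(planned, measured, spacing_mm=1.0))
```

A 1 mm shift passes within the 3 mm DTA, whereas a 10% output error fails at several points, which is the kind of signal that gamma-based error detection relies on.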

Granville et al[56] trained a linear support vector classifier to predict VMAT QA measurement results, using dose distributions measured with biplanar diode arrays for training.
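A linear support vector classifier separates two classes (here, hypothetically, QA pass and fail) with the hyperplane that minimises the regularised hinge loss. The sketch below trains one with a full-batch variant of the Pegasos subgradient update on a synthetic, symmetric two-feature dataset standing in for standardised plan-complexity features. The features, labels, regularisation weight and epoch count are all assumptions, not the inputs or settings of Granville et al[56].

```python
def train_linear_svm(features, labels, lam=0.1, epochs=200):
    """Full-batch subgradient descent on lam/2*||w||^2 + mean hinge loss.

    Uses the Pegasos step size 1/(lam*t); the bias b is not regularised.
    Labels must be +1 or -1."""
    n, dim = len(features), len(features[0])
    w, b = [0.0] * dim, 0.0
    for t in range(1, epochs + 1):
        step = 1.0 / (lam * t)
        # Points inside the margin (or misclassified) contribute to the subgradient.
        active = [(x, y) for x, y in zip(features, labels)
                  if y * (sum(wi * xi for wi, xi in zip(w, x)) + b) < 1.0]
        w = [(1.0 - step * lam) * wi for wi in w]
        for x, y in active:
            for k in range(dim):
                w[k] += step * y * x[k] / n
            b += step * y / n
    return w, b


def predict(w, b, x):
    """+1 (hypothetically 'QA pass') if the point lies on the positive side."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


if __name__ == "__main__":
    grid = [i / 2 for i in range(-4, 5)]
    X = [(u, v) for u in grid for v in grid if u + v != 0]  # synthetic features
    y = [1 if u + v > 0 else -1 for u, v in X]              # synthetic labels
    w, b = train_linear_svm(X, y)
    accuracy = sum(predict(w, b, x) == label for x, label in zip(X, y)) / len(X)
    print(w, b, accuracy)
```

A linear model keeps the decision interpretable: each weight shows how strongly a feature pushes a plan towards the pass or fail side.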

To ensure the accuracy and stability of the treatment machine and plans, sufficient QA tests should be performed. Kalet et al[50] highlighted that, by using machine performance data and routine QA measurement logs as input, a model can be trained to predict machine performance, so as to trigger preventive maintenance by service engineers or save the time spent on additional routine machine QA.

Li et al[57] used 5 years of longitudinal daily linear accelerator (Linac) QA results to build and train models using artificial neural networks or autoregressive moving average time-series prediction modelling, to help understand the Linac's behaviour over time and predict trends in its output. In the future, timely preventive maintenance could be scheduled on the basis of such predictions.
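The value of trend prediction is that maintenance can be scheduled before output drifts out of tolerance. As a deliberately simpler stand-in for the ANN and ARMA models of Li et al[57], the sketch fits an ordinary least-squares line to daily output deviations and estimates the day on which the trend reaches an action level. The steady 0.01%/day drift and the 1.0% action level are hypothetical numbers chosen for illustration.

```python
def fit_trend(days, outputs):
    """Ordinary least-squares line: outputs ~ slope * day + intercept."""
    n = len(days)
    mean_x = sum(days) / n
    mean_y = sum(outputs) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, outputs))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def day_limit_reached(slope, intercept, limit, today):
    """First day (>= today) on which the fitted trend reaches `limit`, or None."""
    if slope == 0:
        return None
    day = (limit - intercept) / slope
    return day if day >= today else None


if __name__ == "__main__":
    days = list(range(30))
    deviation_pct = [d / 100 for d in days]  # hypothetical +0.01%/day output drift
    slope, intercept = fit_trend(days, deviation_pct)
    print(day_limit_reached(slope, intercept, limit=1.0, today=30))  # about day 100
```

A straight line cannot capture periodic or noisy behaviour, which is one reason time-series models such as ARMA and ANNs were compared in the cited work.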

Chan et al[51] highlighted that much current research on AI in RT QA focuses on predicting machine performance and patient-specific QA passing rates. These deep and machine learning prediction tools could be incorporated into the treatment planning optimiser to predict the QA gamma passing rate before the plan is finalised, minimising the time spent on repeated measurements or replanning after failed QA tests. Monitoring machine output performance could also provide feedback to the treatment planning system to improve planning accuracy.

AI also has a role in predicting the outcomes of patients treated with RT. Many prediction models have been developed, and they can be organised by the outcomes predicted and the methods used. For RT, the main outcomes investigated are treatment response (e.g., local tumour control and survival) and toxicity. However, the methods used to make these predictions vary widely depending on the available data. Studies often acquire these data retrospectively, so the availability of 'ground truth' data may vary with the clinical setting. As an example of this heterogeneity, Xu et al[58] predicted the chemoRT response of NSCLC patients using two datasets. Patients in the first dataset did not undergo surgery. Patients in the second did, which provided data on pathologic response.

Studies also differ in the types and quantity of data they require. Various combinations of clinical, imaging, dosimetry, pathological and genomic data have been used to build models. Longitudinal data are also important: Shi et al[59] used both pre-treatment and mid-radiation MRI to predict chemoradiation therapy response in rectal cancer. Acquiring large amounts of medical data is difficult, so techniques such as transfer learning have been used to let algorithms first learn from separate large datasets[60].

Tumor control occurs when an appropriate dose is delivered to the tumor, reducing its growth. It can be assessed grossly by the change in tumor size. Increasingly, changes have been assessed at a finer level using imaging characteristics (e.g., functional imaging and quantitative analysis such as radiomics). Tumor control can also be considered over multiple time points, ranging from the initial treatment response through recurrence to overall survival.

For example, Mizutani et al[61] used an SVM with clinical variables and dosimetry to predict the overall survival of malignant glioma patients after RT. Oikonomou et al[62] analyzed PET/CT radiomic features to predict recurrence and survival after SBRT for lung cancer. Regarding treatment failure, Aneja et al[63] used a DNN to predict local failure within 2 years after SBRT for NSCLC. Zhou et al[64] used an SVM to predict distant failure after SBRT for NSCLC. Over shorter time frames, Wang et al[65] used a CNN to predict the anatomic evolution of lung tumors halfway through a 6-wk course of RT. Furthermore, Tseng et al[66] used reinforcement learning (RL) to allow 'adaptation' of RT to the tumor response. Several studies have also predicted pathological response following neoadjuvant chemotherapy by applying radiomics and machine learning classification to pre-treatment CT scans[67,68].

Several studies have applied machine learning and AI to prognostication. For example, a multi-centre study used a radiomics approach to predict recurrence-free survival in nasopharyngeal carcinoma from MRI data[69]. The study also attempted to explain the model using SHAP (SHapley Additive exPlanations) analysis, which helps derive the importance of the features used by the predictive model.
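SHAP builds on Shapley values from cooperative game theory. A feature's value is its average marginal contribution to the prediction over all coalitions of the other features. As a conceptual sketch, the code below computes exact Shapley values for one prediction by brute-force enumeration of coalitions. Features absent from a coalition are replaced by an assumed baseline value, which is a simplification. The SHAP library uses specialised algorithms (such as TreeSHAP for tree ensembles) rather than enumeration. The example models below are hypothetical, not the radiomics model of the cited study.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley value of each feature of x for a single prediction.

    Features outside a coalition are set to their baseline value (an assumption).
    Cost grows as 2^n, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[k] if k in coalition else baseline[k] for k in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition s
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi
```

For a linear model, each Shapley value reduces to the coefficient times the feature's deviation from baseline. The values always sum to the difference between the prediction and the baseline prediction, which is what makes them useful as additive explanations.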

Radiation toxicity is the other outcome that has been predicted. Tumor control is the desired outcome of irradiating tumorous tissue. Toxicity is the unwanted effect of radiation that inevitably reaches the surrounding normal tissue. Toxicity models have been applied to various cancer sites. For example, Zhen et al[60] used a CNN to predict rectal toxicity in cervical cancer, and Ibragimov et al[70] used a CNN to predict hepatobiliary toxicity after liver SBRT. Valdes et al[71] predicted radiation pneumonitis after SBRT for stage I NSCLC using the RUSBoost algorithm with regularization.
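Toxicity datasets are often imbalanced, because relatively few patients develop the complication. RUSBoost addresses this imbalance by randomly undersampling the majority class before each AdaBoost round. The sketch below shows only that undersampling step and omits the boosting loop and the regularization used in the cited study. The function name, seed and toy labels are hypothetical.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple


def random_undersample(
    X: Sequence, y: Sequence[int], seed: int = 0
) -> Tuple[List, List[int]]:
    """Randomly drop samples so every class is reduced to the size of the rarest class."""
    rng = random.Random(seed)  # seeded for reproducibility
    counts = Counter(y)
    n_min = min(counts.values())
    keep: List[int] = []
    for label in counts:
        idx = [i for i, yi in enumerate(y) if yi == label]
        keep.extend(rng.sample(idx, n_min))  # sample without replacement
    keep.sort()  # preserve the original ordering of retained samples
    return [X[i] for i in keep], [y[i] for i in keep]
```

With 90 patients without toxicity and 10 with it, each boosting round would train on all 10 positive cases plus a different random subset of 10 negative cases.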

Some work has combined both toxicity and tumor response outcomes. For example, Qi et al[72] applied a DNN to predict patient-reported quality of life relating to urinary and bowel symptoms after SBRT for prostate cancer. The model was trained on dosimetry data alone. Urinary symptoms were predicted by tumor volume, whereas bowel symptoms reflected toxicity to the rectum.

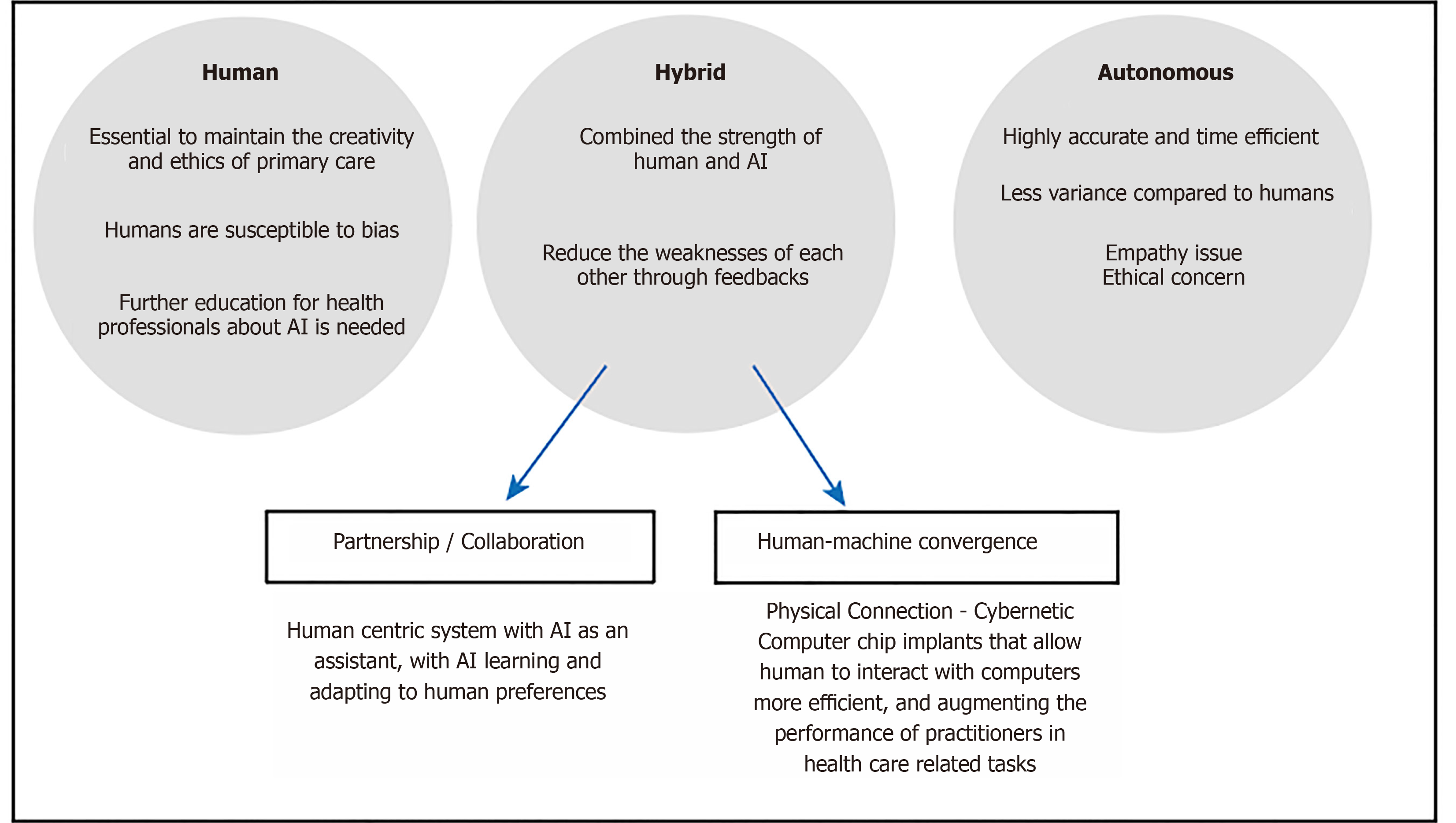

As AI technology progresses, we need to examine the role of AI in conjunction with humans. In the short term, this is likely to take a collaborative or hybrid form rather than AI operating autonomously, although this will depend on the task at hand. The impact of AI in radiation oncology is increasing rapidly, but so is concern surrounding its use. One of the main concerns is that AI will replace many jobs in medical physics and radiation oncology, which could change how patients are treated. It is important to understand how radiation oncology staff perceive the progression of AI, and to raise awareness of AI as a cooperative tool rather than a replacement for jobs. With the integration of AI into the profession, there is huge potential to improve radiation oncology treatments and decision-making processes[73].

AI will revolutionise efficiency and accuracy, but its future role is less clear. The responsibilities of the AI algorithm and of the clinicians using it need to be addressed. In RT treatment planning, most plans are generated from ground truth with low variability, but optimization requires clinical insight to provide a creative solution for each patient. Heavy reliance on technology may reduce innovation because of a lack of human input. Safety risks are also possible, such as an AI system failing to highlight its own limitations and allowing suboptimal plans to pass into treatment[74]. There is likely to be an ongoing need for human clinical oversight, not least because of regulatory requirements.

Concerns about using AI stem mainly from its lack of empathy and intuition compared with human practitioners. Empathy leads clinicians to focus on patients' well-being, and it could play a subconscious role in delivering creative, innovative and safe RT. This philosophical issue relates to human consciousness, and it bears on how health practitioners should approach, use and interact with AI. Preconceptual understanding can be thought of as what humans generally call common sense. Since AI is perceived not to possess human common sense, its ability to perform tasks that require this kind of thinking may be limited[74].

Human cognition has two main attributes: concept and intuition. Humans rely on these attributes to relate to the world and the people around them. Being affected by other people influences how we behave towards them. Affectivity between individuals makes us take responsibility for other people, altering our behaviour consciously or unconsciously. The intended consequence is generally thought to be ethical behaviour. In RT practice, clinical guidance exists for clinicians to follow, and failure to act ethically would have serious consequences[74].

Lacking intuition, AI may not behave as humans do. AI focuses on preprogrammed objectives rather than on patient outcomes, and it may show a lack of creative input. Patient care and communication should remain with human professionals, because human involvement in RT routines helps maintain safety, creativity and innovation. In the short term, AI may assist treatment planning, potentially saving time. Clinicians will need to integrate the technology into their practice while remaining aware of its limitations and of how it can assist decision-making. An unintended consequence may be fewer training opportunities and less hands-on experience. The training of future generations of medical staff to provide competent oversight may therefore need to be addressed[74].

Karches[75] proposed that AI should not replace physician judgement. Technology can help us extract things from their context. However, when technological advancement leads us to reduce qualitative information to quantitative information, interactions between people could eventually become mere data, to the point where only quantifiable entities matter. Karches[75] used two examples, the stethoscope and the electronic health record (EHR), to illustrate how technologies can either help or hinder primary care. A stethoscope allows physicians to attend to the sounds of the patient's body functions. The physician merely uses a tool to extend their ability to gather information. The tool acts as an extension of the physician and still allows them to conform their judgement to the patient's reality. The EHR, by contrast, tends to distance the physician from the patient. Presenting physicians with a collection of facts before they meet the patient can certainly make the examination more efficient. However, the resulting lack of interaction can leave the physician less able to handle aspects of patient care that technology cannot quantify[75]. Limiting patient interaction also reduces empathy and rapport, potentially leading to less trust in medical professionals.

Physicians are also unlikely to devote more time to uncompensated activities such as educating students[75]. These examples are an important reminder of how clinicians should interact with AI. AI should be a tool that helps clinicians better understand the patient and the situation, not a distraction that compromises primary care. A more optimistic model of AI usage is that AI frees physicians and other medical practitioners from repetitive or mundane tasks, enabling them to spend more time with patients.

Wong et al[73] surveyed Canadian radiation oncology staff in 2020 about their views on the impact of AI. More than 90% of respondents were interested in learning more about AI, yet only 12% felt knowledgeable about it. Regarding the outlook for AI, most respondents were optimistic and felt that it would save time and benefit patients. Common concerns among staff were the economic implications and the lack of patient interaction. The precision of AI in identifying organs at risk was the top priority. Most respondents agreed that AI systems could perform better than average, but that human oversight is still necessary to provide the best quality of patient care. Many respondents, especially trainees, were concerned that AI could replace their professional responsibilities[73].

Medical practitioners have expressed frustration with these technologies because they undermine the relationship between patients and medical staff. AI produces a medical judgement that often disregards the particular circumstances of each patient, because extra consideration for the patient may increase cost and lower efficiency. Many experienced clinicians do not rely solely on a patient's verbal description. Patients may be untruthful about the purpose of their visit, or they may understate the burden of their symptoms. AI tends to take a patient's history at face value and, depending on the technology used, may never be able to interpret subtle non-verbal cues. Its ability to understand patients' needs remains questionable, as the best patient outcome is not always the kind of binary result that computers are good at producing[75].

Reducing time-consuming tasks through AI integration may reduce job opportunities. On the other hand, freeing time from such tasks could enable better inter-professional collaboration and more interaction time with patients. In the survey by Wong et al[73], the cost benefits of AI were unclear to respondents, which may be one factor limiting AI advancement. AI knowledge may need to be incorporated in the early stages of education, because trainees, who will be the future generation of practitioners, were the least positive about AI. Fear of the unknown is part of human nature. Investing in educating professionals, to raise their knowledge of AI and their awareness of its importance, is therefore essential[73].

Although AI has the potential to extend beyond human cognitive abilities, in its current form it still lacks qualities that only humans demonstrate, such as generalisability and empathy. These limitations are especially pronounced in fields where data are limited and social context is paramount, such as medicine and RT. One proposal is to create systems that combine humans and AI in symbiosis, with the intention that the whole is greater than the sum of its parts[76]. The ideal hybrid system would allow the two parties to combine their strengths while compensating for each other's weaknesses. The key to optimizing such systems, however, is an efficient human-AI interaction process. This process has been the subject of recent research, and design principles have been set forth, though applications within RT may still be in their infancy.

To conceptualise the process of human-AI interaction, some groups have written guidelines and taxonomies for designing such processes. Amershi et al[77] created design guidelines for human-AI interaction based on the feedback and experience of design practitioners. The focus is on a human-centric system with AI as an assistant. Key features can be divided across different time points of the interaction: (1) Before interaction (initiation): How does the AI set expectations about its strengths and limitations? (2) During interaction: How does the AI present information to the human? How does the human provide feedback to the AI? and (3) After interaction (over time): How does the AI learn and adapt to human preferences?

The initiation phase takes place before any interaction, when each party's expectations are set out. Cai et al[78] investigated what medical practitioners wanted to know about an AI system before using it. Their requirements were akin to what users want to know about human colleagues when consulting or cooperating with them. The properties of the AI can be described along these lines. They include its known strengths and limitations (e.g., bias in the training data), its functionality (e.g., the task it was trained to perform), its objective (e.g., whether it was designed to be sensitive or specific) and its socioeconomic implications. With appropriate expectations set, the user may be motivated to adopt the system in various modes of collaboration. For example, the human and the AI can divide labour according to their respective strengths, or each can perform the same task as a second opinion for the other.

During the interaction, the AI and the human communicate to share information. The first consideration is what information to share. Current AI systems based on deep learning make a decision or prediction from given inputs. However, there is a common concern about the interpretability of such decisions, because there are no explicit reasoning steps between input and output. To build trust in AI decisions, interpretability or explainability has become a growing area of AI research in general. To this end, Luo et al[79] reviewed AI algorithms with improved interpretability for RT outcome prediction, for example those using handcrafted features or activation maps. However, there is a trade-off between an algorithm's interpretability and its accuracy. Other methods include SHAP analysis, which has been used to explain feature importance in tree-based models[80]. The second consideration is how to present the information within the workflow so that it integrates well into clinical practice. Ramkumar et al[81] explored user interaction in the semi-automatic segmentation of organs at risk. They showed that physicians' subjective preferences for different workflows play an important role, suggesting that flexibility in system design needs to be borne in mind. The experience and/or personal preference of an individual practitioner may also play a role. A recent study demonstrated that humans are susceptible to bias when given advice, and that this is more pronounced among doctors with less experience in chest radiograph interpretation[82]. Figure 5 shows the likely future direction of the development of AI and human-AI interaction. AI operating under human supervision will likely become mainstream in clinical practice, until AI has sufficient or near-human consciousness to perform tasks autonomously.

In between, there may also be a hybrid mode of operation in which humans interface directly with the AI. For example, chips are being developed for implantation in the human brain so that people can interface directly with a computer system. This mode of operation could be used, for example, for real-time adjustment of the treatment plan during treatment delivery.

These examples of AI applications and their potential show how and why health care professionals such as medical physicists and radiation oncologists should use AI. The pros and cons of AI usage need to be fully understood, both to strengthen our ability to provide primary care and to reduce the weaknesses that humans and AI each possess.

The role of medical physicists will likely shift away from QA of equipment and towards QA of patient treatments and of the overall treatment environment and processes. Decision-making capacity is expected to improve, and knowledge gaps between experts and non-experts in a specific domain may narrow. Clinicians will interact with computers more often, and an efficient human-computer interface will play a larger role in reducing duplicative and manual effort. As AI advances in the near future, its performance may surpass that of humans in specific tasks, if it has not done so already. It is crucial to rethink ethical clinical practice. When should we let a human make a "correction" to the output of an AI[2], and when can we allow an AI system to operate autonomously?

The growth of AI also poses security challenges, as data are shared more often across governance structures and stakeholders. Unintended third-party reuse of data may become more common. Consequently, efforts are already under way to reduce concerns about privacy breaches, such as the increased requirements of the European Union's General Data Protection Regulation. Early clinically adopted AI might have flaws that result in patient harm, as did some early IMRT systems. Nevertheless, AI will one day become a widespread and effective technology[2].

Despite the potential drawbacks, the enormous benefits of AI will allow medical practitioners to provide better healthcare to patients. Many of the techniques described in the previous sections of this review are still at the research stage. Clinical practice will increasingly adopt AI, and the examples described above will likely become available and be applied within the next decade.

We are still a long way from fully autonomous AI that determines the best treatment options, but steps are being taken in this direction, such as improving AI algorithms through training and feedback. In the short term, there are likely to be some changes in the working environment. It would be foolhardy to expect the status quo to be maintained. Although medical practitioners are unlikely to be replaced any time soon, we expect the profession to evolve. In the foreseeable future, the impact may be a displacement of practitioners' roles rather than their replacement.

| 1. | Siddique S, Chow JCL. Artificial intelligence in radiotherapy. Rep Pract Oncol Radiother. 2020;25:656-666. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 63] [Cited by in RCA: 60] [Article Influence: 10.0] [Reference Citation Analysis (0)] |

| 2. | Thompson RF, Valdes G, Fuller CD, Carpenter CM, Morin O, Aneja S, Lindsay WD, Aerts HJWL, Agrimson B, Deville C Jr, Rosenthal SA, Yu JB, Thomas CR Jr. Artificial intelligence in radiation oncology: A specialty-wide disruptive transformation? Radiother Oncol. 2018;129:421-426. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 124] [Cited by in RCA: 158] [Article Influence: 19.8] [Reference Citation Analysis (0)] |

| 3. | Meyer P, Noblet V, Mazzara C, Lallement A. Survey on deep learning for radiotherapy. Comput Biol Med. 2018;98:126-146. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 153] [Cited by in RCA: 174] [Article Influence: 21.8] [Reference Citation Analysis (0)] |

| 4. | Wang T, Lei Y, Fu Y, Wynne JF, Curran WJ, Liu T, Yang X. A review on medical imaging synthesis using deep learning and its clinical applications. J Appl Clin Med Phys. 2021;22:11-36. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 170] [Cited by in RCA: 143] [Article Influence: 28.6] [Reference Citation Analysis (0)] |

| 5. | Fu Y, Lei Y, Zhou J, Wang T, Yu DS, Beitler JJ, Curran WJ; Liu T; Yang, X. Synthetic CT-aided MRI-CT image registration for head and neck radiotherapy. In: Gimi BS, Krol A. Medical Imaging 2020: Biomedical Applications in Molecular, Structural, and Functional Imaging. SPIE, 2020: 77. [DOI] [Full Text] |

| 6. | Liu Y, Lei Y, Wang T, Kayode O, Tian S, Liu T, Patel P, Curran WJ, Ren L, Yang X. MRI-based treatment planning for liver stereotactic body radiotherapy: validation of a deep learning-based synthetic CT generation method. Br J Radiol. 2019;92:20190067. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 34] [Cited by in RCA: 53] [Article Influence: 7.6] [Reference Citation Analysis (0)] |

| 7. | Liu Y, Lei Y, Wang Y, Shafai-Erfani G, Wang T, Tian S, Patel P, Jani AB, McDonald M, Curran WJ, Liu T, Zhou J, Yang X. Evaluation of a deep learning-based pelvic synthetic CT generation technique for MRI-based prostate proton treatment planning. Phys Med Biol. 2019;64:205022. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 55] [Cited by in RCA: 51] [Article Influence: 7.3] [Reference Citation Analysis (0)] |

| 8. | Lei Y, Dong X, Tian Z, Liu Y, Tian S, Wang T, Jiang X, Patel P, Jani AB, Mao H, Curran WJ, Liu T, Yang X. CT prostate segmentation based on synthetic MRI-aided deep attention fully convolution network. Med Phys. 2020;47:530-540. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 63] [Cited by in RCA: 59] [Article Influence: 9.8] [Reference Citation Analysis (0)] |

| 9. | Dong C, Loy CC, He K, Tang X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans Pattern Anal Mach Intell. 2016;38:295-307. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 5002] [Cited by in RCA: 1667] [Article Influence: 166.7] [Reference Citation Analysis (0)] |

| 10. | Bahrami K, Shi F, Rekik I, Shen D. Convolutional neural network for reconstruction of 7T-like images from 3T MRI using appearance and anatomical features. In: Carneiro G, Mateus D, Peter L, Bradley A, Tavares JMRS, Belagiannis V, Papa JP, Nascimento JC, Loog M, Lu Z, Cardoso JS, Cornebise J. Deep Learning and Data Labeling for Medical Applications. DLMIA 2016, LABELS 2016. Lecture Notes in Computer Science, vol 10008. Cham: Springer, 2016: 39-47. [RCA] [DOI] [Full Text] [Cited by in Crossref: 62] [Cited by in RCA: 39] [Article Influence: 3.9] [Reference Citation Analysis (0)] |

| 11. | Qu L, Zhang Y, Wang S, Yap PT, Shen D. Synthesized 7T MRI from 3T MRI via deep learning in spatial and wavelet domains. Med Image Anal. 2020;62:101663. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 41] [Cited by in RCA: 46] [Article Influence: 7.7] [Reference Citation Analysis (0)] |

| 12. | Yang Q, Yan P, Zhang Y, Yu H, Shi Y, Mou X, Kalra MK, Sun L, Wang G. Low-Dose CT Image Denoising Using a Generative Adversarial Network With Wasserstein Distance and Perceptual Loss. IEEE Trans Med Imaging. 2018;37:1348-1357. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 951] [Cited by in RCA: 653] [Article Influence: 81.6] [Reference Citation Analysis (10)] |

| 13. | Wang T, Lei Y, Tian Z, Dong X, Liu Y, Jiang X, Curran WJ, Liu T, Shu HK, Yang X. Deep learning-based image quality improvement for low-dose computed tomography simulation in radiation therapy. J Med Imaging (Bellingham). 2019;6:043504. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 24] [Cited by in RCA: 19] [Article Influence: 2.7] [Reference Citation Analysis (0)] |

| 14. | Chen H, Zhang Y, Zhang W, Liao P, Li K, Zhou J, Wang G. Low-dose CT via convolutional neural network. Biomed Opt Express. 2017;8:679-694. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 533] [Cited by in RCA: 374] [Article Influence: 41.6] [Reference Citation Analysis (0)] |

| 15. | Cardenas CE, Yang J, Anderson BM, Court LE, Brock KB. Advances in Auto-Segmentation. Semin Radiat Oncol. 2019;29:185-197. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 140] [Cited by in RCA: 289] [Article Influence: 41.3] [Reference Citation Analysis (0)] |

| 16. | Zhou SK, Greenspan H, Shen D. Deep Learning for Medical Image Analysis. 1st ed. San Diego: Elsevier, 2017. |

| 17. | Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. In: Bartlett P, Pereira PCN, Burges CJC, Bottou L, Weinberger KQ. Advances in Neural Information Processing Systems 25. Curran Associates, 2012. |

| 18. | Ibragimov B, Toesca D, Chang D, Koong A, Xing L. Combining deep learning with anatomical analysis for segmentation of the portal vein for liver SBRT planning. Phys Med Biol. 2017;62:8943-8958. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 47] [Cited by in RCA: 62] [Article Influence: 6.9] [Reference Citation Analysis (0)] |

| 19. | Li W, Jia F, Hu Q. Automatic Segmentation of Liver Tumor in CT Images with Deep Convolutional Neural Networks. J Comput Commun. 2015;3:146-151. [RCA] [DOI] [Full Text] [Cited by in Crossref: 151] [Cited by in RCA: 155] [Article Influence: 14.1] [Reference Citation Analysis (0)] |

| 20. | Chen LC, Papandreou G, Schroff F, Adam H. Rethinking atrous convolution for semantic image segmentation. 2017 Preprint. Available from: arXiv:1706.05587. |

| 21. | Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation. In: Navab N, Hornegger J, Wells W, Frangi A. Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015. MICCAI 2015. Lecture Notes in Computer Science, vol 9351. Cham: Springer, 2015: 234-241. [RCA] [DOI] [Full Text] [Cited by in Crossref: 13000] [Cited by in RCA: 11303] [Article Influence: 1027.5] [Reference Citation Analysis (1)] |

| 22. | Shelhamer E, Long J, Darrell T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans Pattern Anal Mach Intell. 2017;39:640-651. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 4004] [Cited by in RCA: 1825] [Article Influence: 202.8] [Reference Citation Analysis (0)] |

| 23. | Qayyum A, Ahmad I, Mumtaz W, Alassafi MO, Alghamdi R, Mazher M. Automatic Segmentation Using a Hybrid Dense Network Integrated With an 3D-Atrous Spatial Pyramid Pooling Module for Computed Tomography (CT) Imaging. IEEE Access. 2020;8:169794-169803. [DOI] [Full Text] |

| 24. | Clark K, Vendt B, Smith K, Freymann J, Kirby J, Koppel P, Moore S, Phillips S, Maffitt D, Pringle M, Tarbox L, Prior F. Browse Data Collections. The Cancer Imaging Archive (TCIA), 2021. |

| 25. | Freimuth M. NIH Clinical Center releases dataset of 32,000 CT images. National Institutes of Health, US. Available from: https://www.nih.gov/news-events/news-releases/nih-clinical-center-releases-dataset-32000-ct-images. |

| 26. | University of Southern California. Medical imaging data archived in the IDA. Available from: https://ida.loni.usc.edu/services/Menu/IdaData.jsp?project=. |

| 27. | Jiang D, Yan H, Chang N, Li T, Mao R, Du C, Guo B, Liu J. Convolutional neural network-based dosimetry evaluation of esophageal radiation treatment planning. Med Phys. 2020;47:4735-4742. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 7] [Cited by in RCA: 17] [Article Influence: 2.8] [Reference Citation Analysis (0)] |

| 28. | Nawa K, Haga A, Nomoto A, Sarmiento RA, Shiraishi K, Yamashita H, Nakagawa K. Evaluation of a commercial automatic treatment planning system for prostate cancers. Med Dosim. 2017;42:203-209. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 48] [Cited by in RCA: 56] [Article Influence: 6.2] [Reference Citation Analysis (0)] |

| 29. | Krayenbuehl J, Zamburlini M, Ghandour S, Pachoud M, Tanadini-Lang S, Tol J, Guckenberger M, Verbakel WFAR. Planning comparison of five automated treatment planning solutions for locally advanced head and neck cancer. Radiat Oncol. 2018;13:170. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 22] [Cited by in RCA: 36] [Article Influence: 4.5] [Reference Citation Analysis (0)] |

| 30. | Nelms BE, Robinson G, Markham J, Velasco K, Boyd S, Narayan S, Wheeler J, Sobczak ML. Variation in external beam treatment plan quality: An inter-institutional study of planners and planning systems. Pract Radiat Oncol. 2012;2:296-305. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 262] [Cited by in RCA: 378] [Article Influence: 27.0] [Reference Citation Analysis (0)] |

| 31. | Batumalai V, Jameson MG, Forstner DF, Vial P, Holloway LC. How important is dosimetrist experience for intensity modulated radiation therapy? Pract Radiat Oncol. 2013;3:e99-e106. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 49] [Cited by in RCA: 79] [Article Influence: 5.6] [Reference Citation Analysis (0)] |

| 32. | Hazell I, Bzdusek K, Kumar P, Hansen CR, Bertelsen A, Eriksen JG, Johansen J, Brink C. Automatic planning of head and neck treatment plans. J Appl Clin Med Phys. 2016;17:272-282. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 104] [Cited by in RCA: 122] [Article Influence: 12.2] [Reference Citation Analysis (0)] |

| 33. | Kida S, Nakamoto T, Nakano M, Nawa K, Haga A, Kotoku J, Yamashita H, Nakagawa K. Cone Beam Computed Tomography Image Quality Improvement Using a Deep Convolutional Neural Network. Cureus. 2018;10:e2548. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 29] [Cited by in RCA: 65] [Article Influence: 8.1] [Reference Citation Analysis (0)] |

| 34. | Huynh E, Hosny A, Guthier C, Bitterman DS, Petit SF, Haas-Kogan DA, Kann B, Aerts HJWL, Mak RH. Artificial intelligence in radiation oncology. Nat Rev Clin Oncol. 2020;17:771-781. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 64] [Cited by in RCA: 231] [Article Influence: 38.5] [Reference Citation Analysis (0)] |

| 35. | Langen KM, Jones DT. Organ motion and its management. Int J Radiat Oncol Biol Phys. 2001;50:265-278. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 738] [Cited by in RCA: 705] [Article Influence: 28.2] [Reference Citation Analysis (4)] |

| 36. | Murphy MJ, Pokhrel D. Optimization of an adaptive neural network to predict breathing. Med Phys. 2009;36:40-47. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 67] [Cited by in RCA: 56] [Article Influence: 3.3] [Reference Citation Analysis (0)] |

| 37. | Isaksson M, Jalden J, Murphy MJ. On using an adaptive neural network to predict lung tumor motion during respiration for radiotherapy applications. Med Phys. 2005;32:3801-3809. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 92] [Cited by in RCA: 76] [Article Influence: 3.8] [Reference Citation Analysis (0)] |

| 38. | Ménard C, Paulson E, Nyholm T, McLaughlin P, Liney G, Dirix P, van der Heide UA. Role of Prostate MR Imaging in Radiation Oncology. Radiol Clin North Am. 2018;56:319-325. |

| 39. | Wegener D, Zips D, Thorwarth D, Weiß J, Othman AE, Grosse U, Notohamiprodjo M, Nikolaou K, Müller AC. Precision of T2 TSE MRI-CT-image fusions based on gold fiducials and repetitive T2 TSE MRI-MRI-fusions for adaptive IGRT of prostate cancer by using phantom and patient data. Acta Oncol. 2019;58:88-94. |

| 40. | Gustafsson CJ, Swärd J, Adalbjörnsson SI, Jakobsson A, Olsson LE. Development and evaluation of a deep learning based artificial intelligence for automatic identification of gold fiducial markers in an MRI-only prostate radiotherapy workflow. Phys Med Biol. 2020;65:225011. |

| 41. | Persson E, Jamtheim Gustafsson C, Ambolt P, Engelholm S, Ceberg S, Bäck S, Olsson LE, Gunnlaugsson A. MR-PROTECT: Clinical feasibility of a prostate MRI-only radiotherapy treatment workflow and investigation of acceptance criteria. Radiat Oncol. 2020;15:77. |

| 42. | Unkelbach J, Bortfeld T, Craft D, Alber M, Bangert M, Bokrantz R, Chen D, Li R, Xing L, Men C, Nill S, Papp D, Romeijn E, Salari E. Optimization approaches to volumetric modulated arc therapy planning. Med Phys. 2015;42:1367-1377. |

| 43. | Bohoudi O, Bruynzeel AME, Senan S, Cuijpers JP, Slotman BJ, Lagerwaard FJ, Palacios MA. Fast and robust online adaptive planning in stereotactic MR-guided adaptive radiation therapy (SMART) for pancreatic cancer. Radiother Oncol. 2017;125:439-444. |

| 44. | Lamb J, Cao M, Kishan A, Agazaryan N, Thomas DH, Shaverdian N, Yang Y, Ray S, Low DA, Raldow A, Steinberg ML, Lee P. Online Adaptive Radiation Therapy: Implementation of a New Process of Care. Cureus. 2017;9:e1618. |

| 45. | Hrinivich WT, Lee J. Artificial intelligence-based radiotherapy machine parameter optimization using reinforcement learning. Med Phys. 2020;47:6140-6150. |

| 46. | Debus C, Oelfke U, Bartzsch S. A point kernel algorithm for microbeam radiation therapy. Phys Med Biol. 2017;62:8341-8359. |

| 47. | Bangert M, Oelfke U. Spherical cluster analysis for beam angle optimization in intensity-modulated radiation therapy treatment planning. Phys Med Biol. 2010;55:6023-6037. |

| 48. | Zhang X, Homma N, Ichiji K, Abe M, Sugita N, Takai Y, Narita Y, Yoshizawa M. A kernel-based method for markerless tumor tracking in kV fluoroscopic images. Phys Med Biol. 2014;59:4897-4911. |

| 49. | Zhao W, Han B, Yang Y, Buyyounouski M, Hancock SL, Bagshaw H, Xing L. Incorporating imaging information from deep neural network layers into image guided radiation therapy (IGRT). Radiother Oncol. 2019;140:167-174. |

| 50. | Kalet AM, Luk SMH, Phillips MH. Radiation Therapy Quality Assurance Tasks and Tools: The Many Roles of Machine Learning. Med Phys. 2020;47:e168-e177. |

| 51. | Vandewinckele L, Claessens M, Dinkla A, Brouwer C, Crijns W, Verellen D, van Elmpt W. Overview of artificial intelligence-based applications in radiotherapy: Recommendations for implementation and quality assurance. Radiother Oncol. 2020;153:55-66. |

| 52. | Chang X, Li H, Kalet A, Yang D. Detecting External Beam Radiation Therapy Physician Order Errors Using Machine Learning. Int J Radiat Oncol Biol Phys. 2017;99:S71. |

| 53. | Kalet AM, Gennari JH, Ford EC, Phillips MH. Bayesian network models for error detection in radiotherapy plans. Phys Med Biol. 2015;60:2735-2749. |

| 54. | Tomori S, Kadoya N, Takayama Y, Kajikawa T, Shima K, Narazaki K, Jingu K. A deep learning-based prediction model for gamma evaluation in patient-specific quality assurance. Med Phys. 2018;. |

| 55. | Nyflot MJ, Thammasorn P, Wootton LS, Ford EC, Chaovalitwongse WA. Deep learning for patient-specific quality assurance: Identifying errors in radiotherapy delivery by radiomic analysis of gamma images with convolutional neural networks. Med Phys. 2019;46:456-464. |

| 56. | Granville DA, Sutherland JG, Belec JG, La Russa DJ. Predicting VMAT patient-specific QA results using a support vector classifier trained on treatment plan characteristics and linac QC metrics. Phys Med Biol. 2019;64:095017. |

| 57. | Li Q, Chan MF. Predictive time-series modeling using artificial neural networks for Linac beam symmetry: an empirical study. Ann N Y Acad Sci. 2017;1387:84-94. |

| 58. | Xu Y, Hosny A, Zeleznik R, Parmar C, Coroller T, Franco I, Mak RH, Aerts HJWL. Deep Learning Predicts Lung Cancer Treatment Response from Serial Medical Imaging. Clin Cancer Res. 2019;25:3266-3275. |

| 59. | Shi L, Zhang Y, Nie K, Sun X, Niu T, Yue N, Kwong T, Chang P, Chow D, Chen JH, Su MY. Machine learning for prediction of chemoradiation therapy response in rectal cancer using pre-treatment and mid-radiation multi-parametric MRI. Magn Reson Imaging. 2019;61:33-40. |

| 60. | Zhen X, Chen J, Zhong Z, Hrycushko B, Zhou L, Jiang S, Albuquerque K, Gu X. Deep convolutional neural network with transfer learning for rectum toxicity prediction in cervical cancer radiotherapy: a feasibility study. Phys Med Biol. 2017;62:8246-8263. |

| 61. | Mizutani T, Magome T, Igaki H, Haga A, Nawa K, Sekiya N, Nakagawa K. Optimization of treatment strategy by using a machine learning model to predict survival time of patients with malignant glioma after radiotherapy. J Radiat Res. 2019;60:818-824. |

| 62. | Oikonomou A, Khalvati F, Tyrrell PN, Haider MA, Tarique U, Jimenez-Juan L, Tjong MC, Poon I, Eilaghi A, Ehrlich L, Cheung P. Radiomics analysis at PET/CT contributes to prognosis of recurrence and survival in lung cancer treated with stereotactic body radiotherapy. Sci Rep. 2018;8:4003. |

| 63. | Aneja S, Shaham U, Kumar RJ, Pirakitikulr N, Nath SK, Yu JB, Carlson DJ, Decker RH. Deep Neural Network to Predict Local Failure Following Stereotactic Body Radiation Therapy: Integrating Imaging and Clinical Data to Predict Outcomes. Int J Radiat Oncol Biol Phys. 2017;99:S47. |

| 64. | Zhou Z, Folkert M, Cannon N, Iyengar P, Westover K, Zhang Y, Choy H, Timmerman R, Yan J, Xie XJ, Jiang S, Wang J. Predicting distant failure in early stage NSCLC treated with SBRT using clinical parameters. Radiother Oncol. 2016;119:501-504. |

| 65. | Wang C, Rimner A, Hu YC, Tyagi N, Jiang J, Yorke E, Riyahi S, Mageras G, Deasy JO, Zhang P. Toward predicting the evolution of lung tumors during radiotherapy observed on a longitudinal MR imaging study via a deep learning algorithm. Med Phys. 2019;46:4699-4707. |

| 66. | Tseng HH, Luo Y, Cui S, Chien JT, Ten Haken RK, Naqa IE. Deep reinforcement learning for automated radiation adaptation in lung cancer. Med Phys. 2017;44:6690-6705. |

| 67. | Hu Y, Xie C, Yang H, Ho JWK, Wen J, Han L, Lam KO, Wong IYH, Law SYK, Chiu KWH, Vardhanabhuti V, Fu J. Computed tomography-based deep-learning prediction of neoadjuvant chemoradiotherapy treatment response in esophageal squamous cell carcinoma. Radiother Oncol. 2021;154:6-13. |

| 68. | Hu Y, Xie C, Yang H, Ho JWK, Wen J, Han L, Chiu KWH, Fu J, Vardhanabhuti V. Assessment of Intratumoral and Peritumoral Computed Tomography Radiomics for Predicting Pathological Complete Response to Neoadjuvant Chemoradiation in Patients With Esophageal Squamous Cell Carcinoma. JAMA Netw Open. 2020;3:e2015927. |

| 69. | Du R, Lee VH, Yuan H, Lam KO, Pang HH, Chen Y, Lam EY, Khong PL, Lee AW, Kwong DL, Vardhanabhuti V. Radiomics Model to Predict Early Progression of Nonmetastatic Nasopharyngeal Carcinoma after Intensity Modulation Radiation Therapy: A Multicenter Study. Radiol Artif Intell. 2019;1:e180075. |

| 70. | Ibragimov B, Toesca D, Chang D, Yuan Y, Koong A, Xing L. Development of deep neural network for individualized hepatobiliary toxicity prediction after liver SBRT. Med Phys. 2018;45:4763-4774. |

| 71. | Valdes G, Solberg TD, Heskel M, Ungar L, Simone CB 2nd. Using machine learning to predict radiation pneumonitis in patients with stage I non-small cell lung cancer treated with stereotactic body radiation therapy. Phys Med Biol. 2016;61:6105-6120. |

| 72. | Qi X, Neylon J, Santhanam A. Dosimetric Predictors for Quality of Life After Prostate Stereotactic Body Radiation Therapy via Deep Learning Network. Int J Radiat Oncol Biol Phys. 2017;99:S167. |

| 73. | Wong K, Gallant F, Szumacher E. Perceptions of Canadian radiation oncologists, radiation physicists, radiation therapists and radiation trainees about the impact of artificial intelligence in radiation oncology - national survey. J Med Imaging Radiat Sci. 2021;52:44-48. |

| 74. | Bridge P, Bridge R. Artificial Intelligence in Radiotherapy: A Philosophical Perspective. J Med Imaging Radiat Sci. 2019;50:S27-S31. |

| 75. | Karches KE. Against the iDoctor: why artificial intelligence should not replace physician judgment. Theor Med Bioeth. 2018;39:91-110. |

| 76. | Jarrahi MH. Artificial intelligence and the future of work: Human-AI symbiosis in organizational decision making. Bus Horiz. 2018;61:577-586. |

| 77. | Amershi S, Weld D, Vorvoreanu M, Fourney A, Nushi B, Collisson P, Suh J, Iqbal S, Bennett PN, Inkpen K, Teevan J, Kikin-Gil R, Horvitz E. Guidelines for human-AI interaction. In: CHI '19: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems; 2019 May 4-9; Glasgow, Scotland, UK. New York: Association for Computing Machinery, 2019: 1-13. |