Published online May 20, 2022. doi: 10.5662/wjm.v12.i3.92

Peer-review started: October 19, 2021

First decision: January 18, 2022

Revised: February 5, 2022

Accepted: March 26, 2022

Article in press: March 26, 2022

Published online: May 20, 2022

Processing time: 211 Days and 10.5 Hours

It is an undeniable fact that systematic reviews play a crucial role in informing clinical practice; however, conventional head-to-head meta-analyses have limitations. In particular, interventions can only be compared in a pair-wise fashion, and conclusions can only be drawn from direct evidence. In contrast, network meta-analyses can not only compare multiple interventions but also use indirect evidence, which increases their precision. Moreover, they can rank competing interventions. In this mini-review, we have aimed to elaborate on the principles and techniques governing network meta-analyses so that a methodologically sound synthesis is achieved and safe conclusions can be drawn for clinical practice. We have emphasized the prerequisites of a well-conducted Network Meta-Analysis (NMA), the value of selecting appropriate outcomes and following guidelines for transparent reporting, and the clarity achieved with sophisticated graphical tools. We have also addressed the importance of incorporating the level of evidence into the results and interpreting the findings according to validated appraisal systems (i.e., the Grading of Recommendations, Assessment, Development and Evaluation system, GRADE). Lastly, we have addressed the possibility of planning future research via NMAs. We conclude that NMAs could be of great value to clinical practice.

Core Tip: Systematic reviews with or without meta-analyses provide the highest quality of evidence and thus lie at the top of the evidence-based medicine hierarchy. However, pair-wise meta-analyses have the inherent limitation of comparing only direct evidence. By contrast, Network Meta-Analyses (NMAs) also consider indirect evidence, thereby offering additional useful information. Conducting an NMA, however, has certain requirements, such as the assumption that transitivity holds across the included studies. Moreover, maintaining sufficient statistical power in the analyses is crucial. In addition, performing head-to-head (pair-wise) meta-analyses before setting up networks of interventions is a prerequisite for a methodologically sound NMA, and both positive and negative outcomes should be selected. Lastly, implementing quality appraisal systems to grade the level of evidence is highly recommended. If all the above criteria are fulfilled, accurate clinical conclusions can be drawn from an NMA.

- Citation: Christofilos SI, Tsikopoulos K, Tsikopoulos A, Kitridis D, Sidiropoulos K, Stoikos PN, Kavarthapu V. Network meta-analyses: Methodological prerequisites and clinical usefulness. World J Methodol 2022; 12(3): 92-98

- URL: https://www.wjgnet.com/2222-0682/full/v12/i3/92.htm

- DOI: https://dx.doi.org/10.5662/wjm.v12.i3.92

Given the plethora of different interventions for various clinical entities[1], identifying the most effective and safe treatment is among the prime interests of researchers[2-4]. In conventional meta-analyses, only two interventions can be compared at a time, and only those evaluated in head-to-head trials[5-7]. Moreover, intervention effect estimates can only be calculated from direct evidence[2]. In contrast to pair-wise meta-analyses, network meta-analyses (NMAs) enable not only simultaneous direct comparisons of multiple interventions but also indirect comparisons, provided the interventions share a common comparator[2]. This is possible even for two interventions that have never been directly compared[2]. In addition, interventions may be ranked using the surface under the cumulative ranking curve (SUCRA), which allows judgments such as which treatment has the highest probability of being the most effective[2]. Notably, an NMA commonly identifies more than one highly efficacious treatment, because the differences in ranking among the modalities at the top of the ranking probability tables are often subtle. Overall, incorporating the results of network meta-analyses into clinical practice guidelines could help clinicians select the best available intervention and thereby improve healthcare.
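
The SUCRA value can be computed from the ranking probabilities that an NMA produces. The Python sketch below assumes a hypothetical input format, a dictionary mapping each treatment to its probabilities of occupying ranks 1 (best) to a, and follows the usual definition: the cumulative ranking probabilities for ranks 1 to a − 1 are summed and divided by a − 1, so that a treatment certain to be best scores 1 and one certain to be worst scores 0. The rank probabilities in the example are illustrative, not taken from any real NMA.

```python
from typing import Dict, List


def sucra(rank_probs: Dict[str, List[float]]) -> Dict[str, float]:
    """Surface under the cumulative ranking curve for each treatment.

    rank_probs[t][k] is the (hypothetical) probability that treatment t
    occupies rank k + 1, where rank 1 is the best.
    """
    a = len(rank_probs)  # number of competing treatments
    if a < 2:
        raise ValueError("SUCRA needs at least two treatments")
    scores = {}
    for treatment, probs in rank_probs.items():
        if len(probs) != a:
            raise ValueError(f"{treatment}: expected {a} rank probabilities")
        cumulative = 0.0  # P(rank <= k)
        area = 0.0        # running sum of cumulative probabilities
        # Ranks 1 .. a-1 only: the cumulative probability at rank a is always 1.
        for p in probs[:-1]:
            cumulative += p
            area += cumulative
        scores[treatment] = area / (a - 1)
    return scores


if __name__ == "__main__":
    # Illustrative rank probabilities, not data from any real review.
    example = {
        "A": [0.7, 0.2, 0.1],
        "B": [0.2, 0.5, 0.3],
        "C": [0.1, 0.3, 0.6],
    }
    for name, value in sorted(sucra(example).items(), key=lambda kv: -kv[1]):
        print(f"{name}: SUCRA = {value:.2f}")
```

With these illustrative numbers, A, B and C score about 0.80, 0.45 and 0.25. As noted above, close SUCRA values at the top of a ranking should not be over-interpreted.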

For a systematic review of randomized evidence to qualify as a network meta-analysis, the assumption of transitivity must be fulfilled. Transitivity implies that a comparison between two interventions that have not been compared directly can be inferred through a common comparator[6]; for instance, if B and C have each been compared with A, B can be compared with C indirectly via A. However, this is only valid in the absence of systematic differences between studies[8], although some degree of heterogeneity is permitted[6]. Heterogeneity is defined as variability in the observed effects across studies beyond that expected from sampling error alone[9]. When the discrepancy between studies exceeds that explained by clinical diversity, effect sizes cannot be safely estimated from direct and indirect evidence, and the distribution of effect modifiers needs to be examined[6].
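
An indirect comparison through a common comparator can be illustrated with the adjusted indirect comparison method of Bucher and colleagues, which this article does not describe and which is used here only as the simplest example. The Python sketch below assumes that effects are on an additive scale (e.g., log odds ratios) and that the two direct estimates come from independent sets of trials; the function name and the numbers are hypothetical.

```python
import math
from typing import Tuple


def indirect_comparison(d_ab: float, se_ab: float,
                        d_ac: float, se_ac: float,
                        z: float = 1.96) -> Tuple[float, float, float, float]:
    """Adjusted indirect estimate of C versus B via the common comparator A.

    d_ab: effect of B relative to A; d_ac: effect of C relative to A,
    both on an additive scale such as the log odds ratio.
    Returns (estimate, standard error, lower 95% limit, upper 95% limit).
    """
    d_bc = d_ac - d_ab                           # the comparator A cancels out
    se_bc = math.sqrt(se_ab ** 2 + se_ac ** 2)   # variances add for independent estimates
    return d_bc, se_bc, d_bc - z * se_bc, d_bc + z * se_bc


if __name__ == "__main__":
    # Illustrative log odds ratios, not data from any real review.
    est, se, lo, hi = indirect_comparison(d_ab=-0.5, se_ab=0.2, d_ac=-0.2, se_ac=0.25)
    print(f"C vs B: logOR {est:.2f} (SE {se:.3f}, 95% CI {lo:.2f} to {hi:.2f})")
```

The indirect estimate is less precise than either direct estimate because the two variances add, and it is only meaningful if the A-versus-B and A-versus-C trials are similar in their effect modifiers, which is exactly what transitivity requires.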

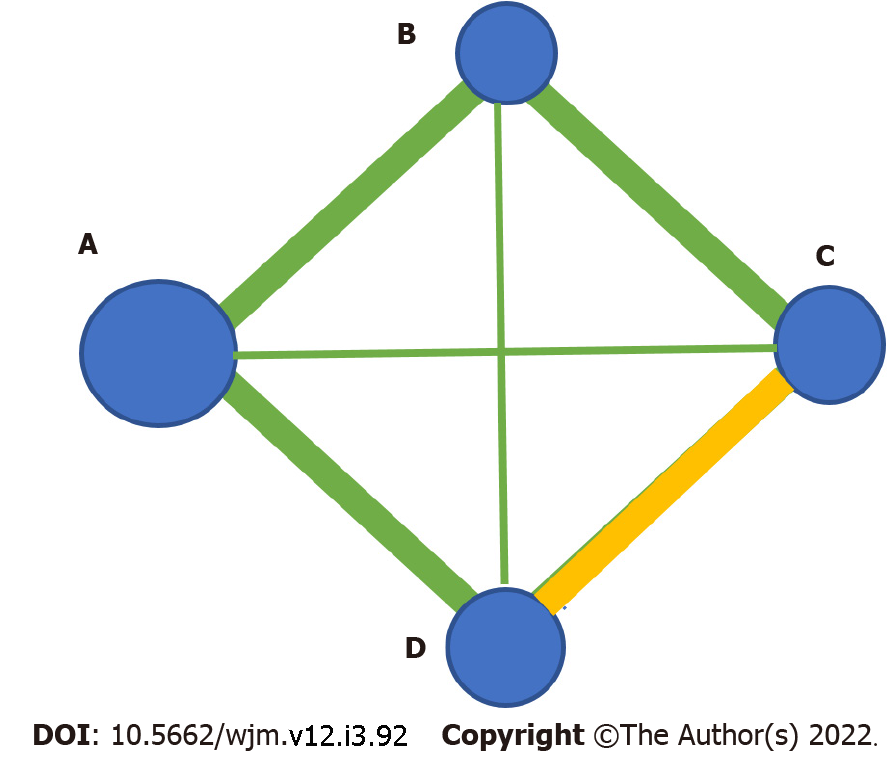

It is worthy of note that a network of interventions should have sufficient statistical power to enable safe clinical conclusions to be drawn. More specifically, the ratio of the number of included studies to the number of competing interventions should be adequate. In addition, the sample size per intervention, as depicted by the size of the nodes in a network plot, should be large enough (Figure 1). Lastly, applying appraisal systems such as the Grading of Recommendations, Assessment, Development and Evaluation (GRADE) system is valuable in assessing heterogeneity as well as additional characteristics such as publication bias, indirectness, imprecision, study limitations, and inconsistency[5].
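
The two quantities mentioned above, the size of each node and the ratio of studies to competing interventions, are simple to tabulate. The Python sketch below assumes a hypothetical input in which each study is a dictionary mapping intervention names to the number of randomized participants per arm; it deliberately applies no threshold, since what counts as adequate is a judgment for the review team.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def network_size_summary(studies: List[Dict[str, int]]) -> Tuple[Dict[str, int], float]:
    """Return (participants per intervention node, studies per intervention)."""
    node_sizes: Dict[str, int] = defaultdict(int)
    for arms in studies:
        for intervention, n in arms.items():
            node_sizes[intervention] += n  # node size in a network plot
    if not node_sizes:
        raise ValueError("no studies supplied")
    ratio = len(studies) / len(node_sizes)
    return dict(node_sizes), ratio


if __name__ == "__main__":
    # Hypothetical network: three two-arm trials and one three-arm trial.
    studies = [
        {"A": 50, "B": 48},
        {"A": 30, "C": 32},
        {"B": 40, "C": 41},
        {"A": 60, "B": 62, "C": 58},
    ]
    sizes, ratio = network_size_summary(studies)
    print(sizes)
    print(f"{ratio:.2f} studies per intervention")
```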

Of additional note, for a given dataset, researchers should conduct traditional pair-wise meta-analyses in addition to the NMA. More precisely, the results of conventional pair-wise meta-analyses can be explored early, before networks of interventions are set up. Authors should then proceed with the network meta-analysis, using indirect evidence synthesis to supplement their study results.
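
A conventional pair-wise random-effects meta-analysis for a single comparison can be sketched as follows. This Python example uses the DerSimonian-Laird estimator of the between-study variance (τ²) and reports Cochran's Q and I² as measures of heterogeneity; the article does not prescribe a particular estimator, and other estimators (e.g., restricted maximum likelihood) are often preferred in practice. The function name and the trial data are hypothetical.

```python
import math
from typing import List, NamedTuple


class PooledResult(NamedTuple):
    estimate: float  # random-effects pooled effect
    se: float        # its standard error
    tau2: float      # between-study variance (DerSimonian-Laird)
    q: float         # Cochran's Q
    i2: float        # I^2 as a proportion (0 to 1)


def dersimonian_laird(effects: List[float], ses: List[float]) -> PooledResult:
    """Random-effects pooling of study effects on an additive scale."""
    k = len(effects)
    if k < 2 or k != len(ses):
        raise ValueError("need at least two studies with matching standard errors")
    w = [1.0 / s ** 2 for s in ses]                    # inverse-variance weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)                      # truncated at zero
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    w_re = [1.0 / (s ** 2 + tau2) for s in ses]        # random-effects weights
    estimate = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return PooledResult(estimate, math.sqrt(1.0 / sum(w_re)), tau2, q, i2)


if __name__ == "__main__":
    # Hypothetical log odds ratios from four trials of the same comparison.
    res = dersimonian_laird([-0.4, -0.1, -0.6, 0.1], [0.20, 0.25, 0.30, 0.22])
    print(f"pooled {res.estimate:.3f} (SE {res.se:.3f}), "
          f"tau2 {res.tau2:.3f}, I2 {res.i2:.0%}")
```

Inspecting τ² and I² for each direct comparison before building the network gives an early indication of whether heterogeneity might threaten the transitivity assumption.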

In determining primary and secondary outcomes, both positive and negative results should be considered. Outcomes of primary interest should be prioritized over outcomes of secondary clinical importance to ensure that the findings are clinically relevant. For instance, laboratory tests are not routinely used as primary endpoints because they tend not to inform decisions directly. However, they may play an explanatory and/or adjuvant role in interpreting the intervention outcome[10].

The PRISMA guidelines comprise a checklist of 27 items that may be used when reporting a systematic review of health interventions with or without meta-analysis[11]. Hutton et al[12] (2015) expanded the original list with 5 additional items that apply to network meta-analyses. Firstly, reporting the geometry of the intervention network and a summary of it, including a diagrammatic representation and a brief description, has been incorporated. Moreover, the findings of the inconsistency assessment can be reported in addition to the presentation of the network structure.
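
The network geometry that PRISMA-NMA asks authors to describe can be summarized programmatically before the network diagram is drawn. The Python sketch below (hypothetical function and input format: each study is given as the list of interventions in its arms) counts the studies contributing to each direct comparison, with multi-arm trials contributing every pair of their arms, and checks whether the network is connected, since interventions outside the main network cannot be compared indirectly with the rest.

```python
from collections import defaultdict, deque
from itertools import combinations
from typing import Dict, List, Set, Tuple


def network_geometry(studies: List[List[str]]) -> Tuple[Dict[Tuple[str, str], int], bool]:
    """Return (studies per direct comparison, whether the network is connected)."""
    edges: Dict[Tuple[str, str], int] = defaultdict(int)
    neighbours: Dict[str, Set[str]] = defaultdict(set)
    nodes: Set[str] = set()
    for arms in studies:
        unique_arms = sorted(set(arms))
        nodes.update(unique_arms)
        for a, b in combinations(unique_arms, 2):  # every pair of arms in the study
            edges[(a, b)] += 1
            neighbours[a].add(b)
            neighbours[b].add(a)
    if not nodes:
        return {}, False
    # Breadth-first search from an arbitrary node.
    start = next(iter(nodes))
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in neighbours[current]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return dict(edges), seen == nodes


if __name__ == "__main__":
    # Hypothetical network containing one three-arm trial.
    edges, connected = network_geometry([["A", "B"], ["A", "C"], ["B", "C", "D"]])
    print(edges)
    print("connected:", connected)
```

The edge counts correspond to the line thicknesses usually shown in a network diagram, and a disconnected result signals that separate analyses are needed for each sub-network.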

It should be noted that prospective registration of all NMAs (e.g., with the PROSPERO database) is encouraged. Registration promotes transparency and reduces bias by avoiding unintended duplicate reviews[13]. Adherence to a pre-existing protocol also plays a crucial role in preventing selective outcome reporting[10,14,15]. In other words, registering a systematic review before the study begins helps guard against data manipulation and/or unethical reporting. Last but not least, prospective registration enables researchers to check whether the topic they intend to investigate has already been addressed, thus avoiding unnecessary duplication of research.

Despite their growing popularity, NMAs have attracted considerable criticism, as their complex methodology may discourage clinicians from getting involved in this type of research[16]. This is due to the higher level of statistical and computational knowledge required. To tackle this issue, introducing graphical tools into the manuscript can considerably improve clarity and reproducibility[16].

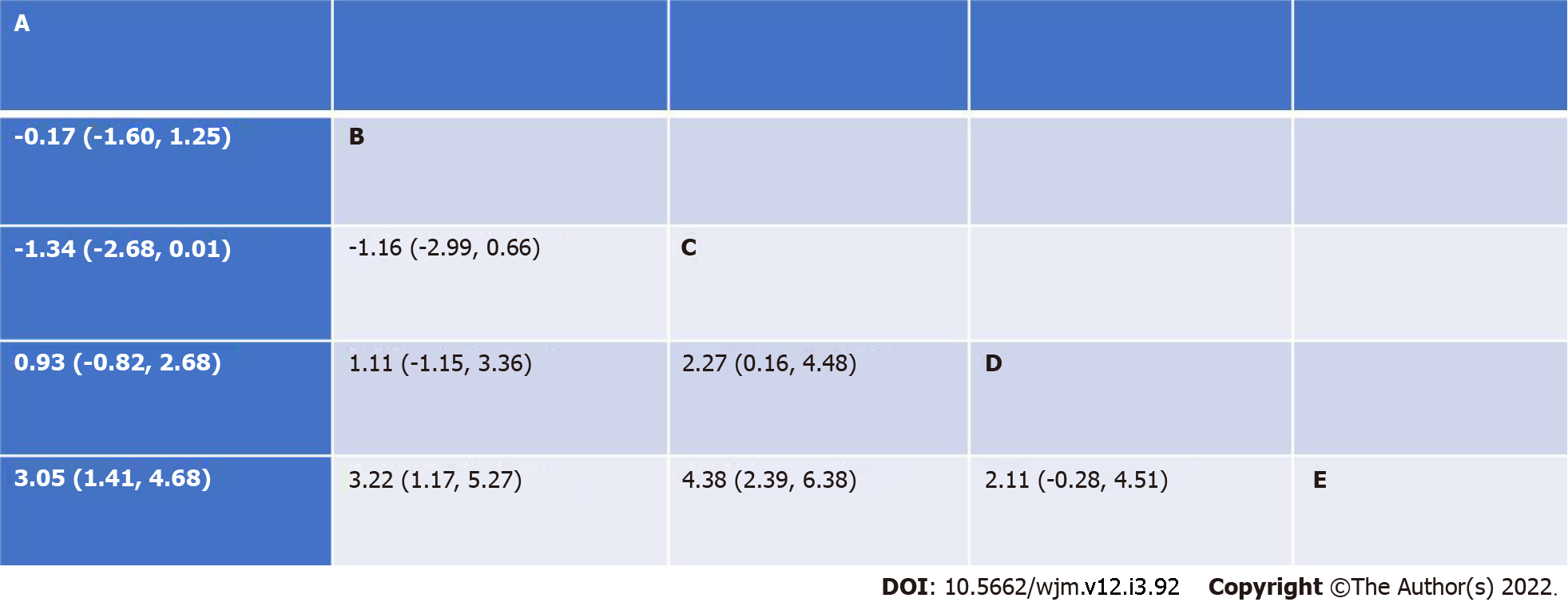

What is more, competing interventions can be ranked from the most to the least effective using SUCRA curves[2]. League tables, in turn, present the result of each pairwise comparison together with its 95% confidence interval in a structured way (Figure 2).
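
A league table can be filled from the NMA output once the effects of all interventions relative to a reference, and their covariance matrix, are available. In the Python sketch below these inputs are assumed (hypothetical names and illustrative numbers); each cell is the difference between two basic parameters, and its variance includes the covariance term because both parameters are estimated from the same network. A normal-approximation 95% confidence interval is used.

```python
import math
from typing import Dict, List, Tuple


def league_table(treatments: List[str], d: List[float], cov: List[List[float]],
                 z: float = 1.96) -> Dict[Tuple[str, str], Tuple[float, float, float]]:
    """Pairwise NMA estimates with 95% CIs.

    treatments[0] is the reference; d[i] and cov[i][j] refer to treatments[i + 1]
    versus the reference. Key (x, y) holds the effect of x relative to y as
    (estimate, lower, upper).
    """
    m = len(treatments)
    if len(d) != m - 1 or len(cov) != m - 1:
        raise ValueError("d and cov must cover every non-reference treatment")
    full_d = [0.0] + list(d)  # the reference has effect 0 relative to itself

    def c(i: int, j: int) -> float:
        # Covariance of basic parameters; the reference contributes nothing.
        return 0.0 if i == 0 or j == 0 else cov[i - 1][j - 1]

    table = {}
    for x in range(m):
        for y in range(m):
            if x == y:
                continue
            est = full_d[x] - full_d[y]
            se = math.sqrt(c(x, x) + c(y, y) - 2 * c(x, y))
            table[(treatments[x], treatments[y])] = (est, est - z * se, est + z * se)
    return table


if __name__ == "__main__":
    # Illustrative log odds ratios versus reference A and their covariance matrix.
    lt = league_table(["A", "B", "C"], [-0.5, -0.2], [[0.04, 0.01], [0.01, 0.0625]])
    for (x, y), (est, lo, hi) in sorted(lt.items()):
        print(f"{x} vs {y}: {est:.2f} ({lo:.2f} to {hi:.2f})")
```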

Publication bias (typically, positive results being published more often than negative ones) is not uncommonly detected in NMAs. Introducing this kind of bias into a meta-analysis inevitably threatens the validity of its results, as an “overly rosy picture” may be painted. The evaluation of small-study effects serves as a proxy for the assessment of publication bias. For this assessment, a dedicated graphical tool, the comparison-adjusted funnel plot, can be used. Apart from funnel plots, researchers can also employ Egger’s test to evaluate the presence of small-study effects statistically[16-18].
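
Egger’s regression test can be sketched in a few lines. The Python example below regresses each study's standardized effect (effect divided by its standard error) on its precision (one divided by the standard error) by ordinary least squares; an intercept far from zero suggests funnel-plot asymmetry. It is written for a single pair-wise comparison, returns the t statistic with k − 2 degrees of freedom rather than a p-value (to avoid depending on a statistics library), and, like the test itself, has low power when few studies are available. The function name and data are hypothetical.

```python
import math
from typing import List, NamedTuple


class EggerResult(NamedTuple):
    intercept: float     # estimate of funnel-plot asymmetry
    se_intercept: float
    t_stat: float        # compare with a t distribution on df degrees of freedom
    df: int


def egger_test(effects: List[float], ses: List[float]) -> EggerResult:
    k = len(effects)
    if k < 3 or k != len(ses):
        raise ValueError("need at least three studies with matching standard errors")
    x = [1.0 / s for s in ses]                      # precision
    z = [y / s for y, s in zip(effects, ses)]       # standardized effect
    x_bar, z_bar = sum(x) / k, sum(z) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all studies have the same precision")
    sxz = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z))
    slope = sxz / sxx
    intercept = z_bar - slope * x_bar
    residuals = [zi - (intercept + slope * xi) for xi, zi in zip(x, z)]
    df = k - 2
    sigma2 = sum(r ** 2 for r in residuals) / df    # residual variance
    se_int = math.sqrt(sigma2 * (1.0 / k + x_bar ** 2 / sxx))
    t_stat = intercept / se_int if se_int > 0 else float("nan")
    return EggerResult(intercept, se_int, t_stat, df)


if __name__ == "__main__":
    # Hypothetical effects and standard errors from four trials.
    print(egger_test([0.3, 0.4, 0.625, 0.75], [0.1, 0.2, 0.25, 0.5]))
```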

The GRADE system features 6 components[5], namely study limitations, heterogeneity, inconsistency, indirectness, imprecision, and publication bias[5,19]. The quality of evidence may be rated as high, moderate, low, or very low. As a rule of thumb, evidence from randomized trials starts as high quality, whereas evidence from observational studies starts as low quality, and the risk of bias may lower the rating further and thereby affect clinical judgment[20].
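
The rating logic described above can be expressed as a small function. The Python sketch below is a deliberate simplification under stated assumptions: evidence starts at high certainty for randomized trials and at low certainty for observational studies, each component listed in this article may lower the rating by zero, one or two levels, and the criteria for upgrading observational evidence are ignored. The function and its inputs are hypothetical and do not replace the structured judgments that GRADE requires.

```python
from typing import Dict

LEVELS = ["very low", "low", "moderate", "high"]
# The six components listed in the text above.
DOMAINS = {"study limitations", "heterogeneity", "inconsistency",
           "indirectness", "imprecision", "publication bias"}


def grade_certainty(randomized: bool, downgrades: Dict[str, int]) -> str:
    """Simplified GRADE rating: start level minus the downgrades per component."""
    level = LEVELS.index("high") if randomized else LEVELS.index("low")
    for domain, steps in downgrades.items():
        if domain not in DOMAINS:
            raise ValueError(f"unknown domain: {domain}")
        if steps not in (0, 1, 2):
            raise ValueError("each domain may downgrade by 0, 1 or 2 levels")
        level -= steps
    return LEVELS[max(level, 0)]  # cannot fall below "very low"


if __name__ == "__main__":
    print(grade_certainty(True, {"imprecision": 1}))
    print(grade_certainty(True, {"study limitations": 1, "indirectness": 1}))
```

For example, randomized evidence downgraded once for imprecision is rated moderate, and once each for study limitations and indirectness it is rated low.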

Potential limitations of randomized trials include failure to conceal allocation, failure to blind, loss to follow-up, and failure to adhere to the intention-to-treat principle[20]. Guyatt et al[20] (2011) also mentioned stopping a trial early for apparent benefit and selectively reporting outcomes according to the results. Indirectness may arise when the patients studied differ from those of interest, when the treatments have not been compared in head-to-head trials, and when the outcomes measured differ from those of interest[21]. Furthermore, differences in biological and social factors that modify the magnitude of the effect can also introduce indirectness[21]. Inconsistency, on the other hand, is defined as disagreement between direct and indirect evidence in an NMA[19]. In addition, Salanti et al[19] have suggested adopting a quantitative approach to assess the risk of bias.
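
Disagreement between direct and indirect evidence for a single comparison can be checked with a z-test on their difference (often called the inconsistency factor); this particular test is an illustration chosen here, not a method the article prescribes. The Python sketch assumes the direct and indirect estimates are independent and on an additive scale; the two-sided p-value uses the standard normal distribution via `math.erfc`. Names and numbers are hypothetical.

```python
import math
from typing import NamedTuple


class InconsistencyResult(NamedTuple):
    difference: float  # direct minus indirect ("inconsistency factor")
    se: float
    z: float
    p_value: float     # two-sided, standard normal approximation


def direct_vs_indirect(d_direct: float, se_direct: float,
                       d_indirect: float, se_indirect: float) -> InconsistencyResult:
    diff = d_direct - d_indirect
    se = math.sqrt(se_direct ** 2 + se_indirect ** 2)  # independent sources of evidence
    z = diff / se
    p = math.erfc(abs(z) / math.sqrt(2))               # equals 2 * (1 - Phi(|z|))
    return InconsistencyResult(diff, se, z, p)


if __name__ == "__main__":
    # Hypothetical direct and indirect log odds ratios for the same comparison.
    print(direct_vs_indirect(0.5, 0.15, 0.0, 0.2))
```

A large p-value does not prove consistency, because such tests often have low power, particularly when the indirect estimate is imprecise.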

A great many confounding factors can be encountered in a broad systematic review of randomized trials. Thus, conducting sensitivity analyses to delineate the impact of clinical heterogeneity factors is strongly recommended. For instance, the effect of low-quality trials, variation in intervention characteristics, and differences due to variable outcome measurement tools need to be considered in these secondary analyses. From a technical point of view, the researcher needs to remove the trial(s) with the above characteristics from the analysis, repeat the statistical tests, and subsequently compare the new results with the findings of the primary analysis[22].
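
The leave-out procedure can be sketched as follows. To keep the example short, the Python code below pools with a fixed-effect inverse-variance model (a simplification; a random-effects model could be substituted) and marks each trial with a hypothetical boolean flag, for example "high risk of bias"; the function and field names are illustrative assumptions.

```python
from typing import List, NamedTuple, Tuple


class Trial(NamedTuple):
    effect: float    # e.g. log odds ratio
    se: float
    flagged: bool    # hypothetical flag, e.g. high risk of bias


def pooled_fixed(trials: List[Trial]) -> float:
    """Fixed-effect inverse-variance pooled estimate."""
    if not trials:
        raise ValueError("no trials left to pool")
    weights = [1.0 / t.se ** 2 for t in trials]
    return sum(w * t.effect for w, t in zip(weights, trials)) / sum(weights)


def sensitivity_analysis(trials: List[Trial]) -> Tuple[float, float]:
    """Return (primary estimate, estimate after removing flagged trials)."""
    primary = pooled_fixed(trials)
    restricted = pooled_fixed([t for t in trials if not t.flagged])
    return primary, restricted


if __name__ == "__main__":
    # Hypothetical trials; the third is flagged as high risk of bias.
    trials = [Trial(0.2, 0.1, False), Trial(0.4, 0.2, False), Trial(1.0, 0.2, True)]
    primary, restricted = sensitivity_analysis(trials)
    print(f"primary {primary:.3f}, without flagged trials {restricted:.3f}")
```

A marked shift between the two estimates, as in this illustrative case, would suggest that the flagged trials drive part of the primary result.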

Using NMA results to direct the design of future studies appears to be of significant importance, as it can help avoid the mismanagement of research resources[23-25]. To estimate whether the results of a subsequent trial are likely to change the current conclusions, researchers should consider an interval plot, which displays the confidence and predictive intervals of the estimated effects. By visually inspecting an interval plot, an investigator can make predictions about the efficacy of a particular intervention in a future trial[16,26,27].
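
The predictive intervals shown in an interval plot can be computed from a random-effects summary. Following Higgins et al[26], a 95% prediction interval for the effect in a new study is μ̂ ± t × √(τ² + SE(μ̂)²), where t is the 97.5th percentile of a t distribution with k − 2 degrees of freedom and k is the number of studies. The Python sketch below takes that percentile as an input instead of computing it, so it needs only the standard library; the function names and numbers are hypothetical.

```python
import math
from typing import Tuple


def prediction_interval(mu: float, se_mu: float, tau2: float,
                        t_crit: float) -> Tuple[float, float]:
    """Approximate 95% prediction interval for the effect in a new study.

    t_crit should be the 97.5th percentile of t with k - 2 degrees of freedom.
    """
    half_width = t_crit * math.sqrt(tau2 + se_mu ** 2)
    return mu - half_width, mu + half_width


def confidence_interval(mu: float, se_mu: float, z: float = 1.96) -> Tuple[float, float]:
    """Normal-approximation 95% confidence interval for the mean effect."""
    return mu - z * se_mu, mu + z * se_mu


if __name__ == "__main__":
    # Hypothetical summary from k = 5 studies; t(0.975, 3) is about 3.182.
    mu, se_mu, tau2 = 0.5, 0.1, 0.03
    print("95% CI:", confidence_interval(mu, se_mu))
    print("95% PI:", prediction_interval(mu, se_mu, tau2, t_crit=3.182))
```

If the prediction interval crosses the line of no effect while the confidence interval does not, a future trial could plausibly fail to show a benefit even though the average effect is favourable.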

To improve the interpretability and clarity of NMA results, researchers are encouraged to back-transform their data. For instance, for Patient-Reported Outcome Measures, investigators can back-transform Standardized Mean Differences to Mean Differences on the scale of a particular questionnaire and subsequently assess their findings against the established minimal clinically important difference for that questionnaire[28].
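
The back-transformation multiplies a standardized mean difference (and its confidence limits) by a representative standard deviation of the questionnaire, for example the pooled baseline SD from a representative trial. The Python sketch below assumes such an SD and a minimal clinically important difference (MCID) are available; the function names, the classification wording and the numbers are hypothetical and do not refer to any real instrument.

```python
from typing import NamedTuple


class MeanDifference(NamedTuple):
    estimate: float
    lower: float
    upper: float


def smd_to_md(smd: float, lower: float, upper: float, sd: float) -> MeanDifference:
    """Back-transform an SMD and its CI to the questionnaire's points scale."""
    if sd <= 0:
        raise ValueError("the representative SD must be positive")
    return MeanDifference(smd * sd, lower * sd, upper * sd)


def compare_with_mcid(md: MeanDifference, mcid: float) -> str:
    """Crude classification of the back-transformed effect against the MCID."""
    same_side = md.lower * md.upper > 0  # CI excludes zero
    if same_side and min(abs(md.lower), abs(md.upper)) >= mcid:
        return "whole CI beyond the MCID"
    if abs(md.estimate) >= mcid:
        return "point estimate beyond the MCID, CI includes smaller effects"
    return "point estimate below the MCID"


if __name__ == "__main__":
    # Illustrative SMD of -0.5 (-0.8 to -0.2), SD of 20 points, MCID of 8 points.
    md = smd_to_md(-0.5, -0.8, -0.2, sd=20.0)
    print(md, compare_with_mcid(md, mcid=8.0))
```

The choice of SD matters: a different representative trial can change the back-transformed values, so the source of the SD should be reported.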

Overall, NMAs play a crucial role in the decision-making process. As long as common methodological mistakes are avoided, researchers can produce reliable and accurate clinical conclusions.

| 1. | Tsikopoulos K, Vasiliadis HS, Mavridis D. Injection therapies for plantar fasciopathy ('plantar fasciitis'): a systematic review and network meta-analysis of 22 randomised controlled trials. Br J Sports Med. 2016;50:1367-1375. |

| 2. | Antoniou SA, Koelemay M, Antoniou GA, Mavridis D. A Practical Guide for Application of Network Meta-Analysis in Evidence Synthesis. Eur J Vasc Endovasc Surg. 2019;58:141-144. |

| 3. | Tsikopoulos K, Sidiropoulos K, Kitridis D, Cain Atc SM, Metaxiotis D, Ali A. Do External Supports Improve Dynamic Balance in Patients with Chronic Ankle Instability? Clin Orthop Relat Res. 2020;478:359-377. |

| 4. | Kitridis D, Tsikopoulos K, Bisbinas I, Papaioannidou P, Givissis P. Efficacy of Pharmacological Therapies for Adhesive Capsulitis of the Shoulder: A Systematic Review and Network Meta-analysis. Am J Sports Med. 2019;47:3552-3560. |

| 5. | Rouse B, Chaimani A, Li T. Network meta-analysis: an introduction for clinicians. Intern Emerg Med. 2017;12:103-111. |

| 6. | Salanti G. Indirect and mixed-treatment comparison, network, or multiple-treatments meta-analysis: many names, many benefits, many concerns for the next generation evidence synthesis tool. Res Synth Methods. 2012;3:80-97. |

| 7. | Cipriani A, Higgins JP, Geddes JR, Salanti G. Conceptual and technical challenges in network meta-analysis. Ann Intern Med. 2013;159:130-137. |

| 8. | Salanti G, Marinho V, Higgins JP. A case study of multiple-treatments meta-analysis demonstrates that covariates should be considered. J Clin Epidemiol. 2009;62:857-864. |

| 9. | Lu G, Ades AE. Assessing evidence inconsistency in mixed treatment comparisons. J Am Stat Assoc. 2006;101:447-459. |

| 10. | O’Connor D, Green S, Higgins JPT (editors). Chapter 5: Defining the review question and developing criteria for including studies. In: Higgins JPT, Green S (editors). Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 (updated March 2011). The Cochrane Collaboration, 2011. Available from: http://www.handbook.cochrane.org. |

| 11. | Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, Chou R, Glanville J, Grimshaw JM, Hróbjartsson A, Lalu MM, Li T, Loder EW, Mayo-Wilson E, McDonald S, McGuinness LA, Stewart LA, Thomas J, Tricco AC, Welch VA, Whiting P, Moher D. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. |

| 12. | Hutton B, Salanti G, Caldwell DM, Chaimani A, Schmid CH, Cameron C, Ioannidis JP, Straus S, Thorlund K, Jansen JP, Mulrow C, Catalá-López F, Gøtzsche PC, Dickersin K, Boutron I, Altman DG, Moher D. The PRISMA extension statement for reporting of systematic reviews incorporating network meta-analyses of health care interventions: checklist and explanations. Ann Intern Med. 2015;162:777-784. |

| 13. | Stewart L, Moher D, Shekelle P. Why prospective registration of systematic reviews makes sense. Syst Rev. 2012;1:7. |

| 14. | Centre for Reviews and Dissemination (CRD), University of York. Systematic Reviews: CRD’s Guidance for Undertaking Reviews in Health Care. York, UK: Centre for Reviews and Dissemination, University of York; 2009. Available from: http://www.york.ac.uk/inst/crd/pdf/Systematic_Reviews.pdf. |

| 15. | Finding What Works in Health Care: Standards for Systematic Reviews. Washington (DC): National Academies Press (US); 2011. |

| 16. | Chaimani A, Higgins JP, Mavridis D, Spyridonos P, Salanti G. Graphical tools for network meta-analysis in STATA. PLoS One. 2013;8:e76654. |

| 17. | Irwig L, Macaskill P, Berry G, Glasziou P. Bias in meta-analysis detected by a simple, graphical test. Graphical test is itself biased. BMJ. 1998;316:470; author reply 470-471. |

| 18. | Mavridis D, Salanti G. Exploring and accounting for publication bias in mental health: a brief overview of methods. Evid Based Ment Health. 2014;17:11-15. |

| 19. | Salanti G, Del Giovane C, Chaimani A, Caldwell DM, Higgins JP. Evaluating the quality of evidence from a network meta-analysis. PLoS One. 2014;9:e99682. |

| 20. | Guyatt GH, Oxman AD, Vist G, Kunz R, Brozek J, Alonso-Coello P, Montori V, Akl EA, Djulbegovic B, Falck-Ytter Y, Norris SL, Williams JW Jr, Atkins D, Meerpohl J, Schünemann HJ. GRADE guidelines: 4. Rating the quality of evidence--study limitations (risk of bias). J Clin Epidemiol. 2011;64:407-415. |

| 21. | Guyatt GH, Oxman AD, Kunz R, Woodcock J, Brozek J, Helfand M, Alonso-Coello P, Falck-Ytter Y, Jaeschke R, Vist G, Akl EA, Post PN, Norris S, Meerpohl J, Shukla VK, Nasser M, Schünemann HJ; GRADE Working Group. GRADE guidelines: 8. Rating the quality of evidence--indirectness. J Clin Epidemiol. 2011;64:1303-1310. |

| 22. | Deeks JJ, Higgins JPT, Altman DG (editors). Chapter 9: Analysing data and undertaking meta-analyses. In: Higgins JPT, Green S (editors). Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 (updated March 2011). The Cochrane Collaboration, 2011. Available from: http://www.handbook.cochrane.org. |

| 23. | Nikolakopoulou A, Mavridis D, Salanti G. Planning future studies based on the precision of network meta-analysis results. Stat Med. 2016;35:978-1000. |

| 24. | Roloff V, Higgins JP, Sutton AJ. Planning future studies based on the conditional power of a meta-analysis. Stat Med. 2013;32:11-24. |

| 25. | Fergusson D, Glass KC, Hutton B, Shapiro S. Randomized controlled trials of aprotinin in cardiac surgery: could clinical equipoise have stopped the bleeding? Clin Trials. 2005;2:218-229; discussion 229. |

| 26. | Higgins JP, Thompson SG, Spiegelhalter DJ. A re-evaluation of random-effects meta-analysis. J R Stat Soc Ser A Stat Soc. 2009;172:137-159. |

| 27. | Riley RD, Higgins JP, Deeks JJ. Interpretation of random effects meta-analyses. BMJ. 2011;342:d549. |

| 28. | Schünemann HJ, Oxman AD, Vist GE, Higgins JPT, Deeks JJ, Glasziou P, Guyatt GH. Chapter 12: Interpreting results and drawing conclusions. In: Higgins JPT, Green S (editors). Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 (updated March 2011). The Cochrane Collaboration, 2011. Available from: http://www.handbook.cochrane.org. |

Open-Access: This article is an open-access article that was selected by an in-house editor and fully peer-reviewed by external reviewers. It is distributed in accordance with the Creative Commons Attribution NonCommercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: https://creativecommons.org/Licenses/by-nc/4.0/

Provenance and peer review: Invited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Methodology

Country/Territory of origin: United Kingdom

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): B, B, B

Grade C (Good): 0

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Elfayoumy KN, Egypt; Hasabo EA, Sudan; Yahaya TO, Nigeria S-Editor: Liu JH L-Editor: A P-Editor: Liu JH