INTRODUCTION

Since its release in 2005, next-generation sequencing (NGS) has been responsible for a drastic reduction in the price of genome sequencing and for a tidal wave of genetic information[1]. NGS technologies have made high-throughput sequencing available to medium- and small-size laboratories. The new possibility of generating a large number of sequenced bacterial genomes not only brought excitement to the field of genomics but also heightened expectations that the development of vaccines and the search for new antibacterial targets would be boosted. Nevertheless, these expectations proved to be naïve: the complexity of host-bacteria interactions and the large diversity of bacterial genetic products have been shown to play greater roles than anticipated in vaccine development and antibacterial discovery[2-4].

Additionally, as with any methodology, NGS presents its own drawbacks. Among the new sequencing technologies, the most established on the market are the 454 GS FLX platform (Roche), Illumina (Genome Analyzer) and SOLiD (Life Technologies)[5,6]. These devices are capable of generating millions of reads, providing high genomic coverage, but with a drawback: the reads are considerably shorter than those produced by Sanger methodology[7,8]. While Sanger methodology produces reads ranging from 800 to 1000 bases, NGS platforms produce reads ranging from 50 (SOLiD V3) to 2 × 150 bases (Illumina)[9]. The small amount of information contained in each read makes it difficult to completely assemble a genome using exclusively computational tools[10,11]. Short reads have therefore made genome assembly a considerably more laborious task.
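The trade-off between read length and read number can be made concrete with the usual mean-coverage relation, C = N × L / G (number of reads times read length, divided by genome size). The sketch below is only illustrative: the genome size and target coverage are hypothetical values, not figures taken from the cited studies, and the function names are our own.

```python
import math

def mean_coverage(num_reads, read_length, genome_size):
    """Average number of times each base is sequenced: C = N * L / G."""
    return num_reads * read_length / genome_size

def reads_needed(target_coverage, read_length, genome_size):
    """Smallest number of reads whose total length reaches the target coverage."""
    return math.ceil(target_coverage * genome_size / read_length)

GENOME_SIZE = 2_000_000  # hypothetical bacterial genome, in bases
TARGET = 50              # hypothetical target coverage

short_reads = reads_needed(TARGET, 50, GENOME_SIZE)   # 50-base reads (short-read platform)
long_reads = reads_needed(TARGET, 900, GENOME_SIZE)   # 900-base reads (Sanger-like length)
print(short_reads, long_reads)
```

Under these assumptions, the same coverage requires roughly eighteen times more 50-base reads than 900-base reads, which means many more fragments for the assembler to order and each fragment carries less positional information.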

In recent years, approaches that use hybrid assemblies were developed to facilitate the assembly process. They take advantage of the high read quality of second-generation sequencers, e.g., Illumina (Genome Analyzer), and the longer read lengths of third-generation sequencers, e.g., SMRT sequencers (Pacific Biosciences) and Ion Torrent PGM[12,13]. Although logical in principle, this kind of approach has been hindered by the lack of integration between sequencers.

To improve and verify genome quality, it is essential to know which combination of sequencing data, computer algorithms, and parameters can produce the highest-quality assembly[14,15]. It is also necessary to know the type of error that a sequencing platform is most likely to present. For instance, Illumina and SOLiD are more likely to present nucleotide substitutions, while 454 GS FLX and Ion Torrent are more likely to present indels[16]. Almost no bioinformatics systems have been developed to integrate reads from different sequencers into a single assembly[12,17]. These newly developed approaches aim to reduce the manual intervention needed to finish genomes, since repetitive regions may be resolved using a hybrid approach.

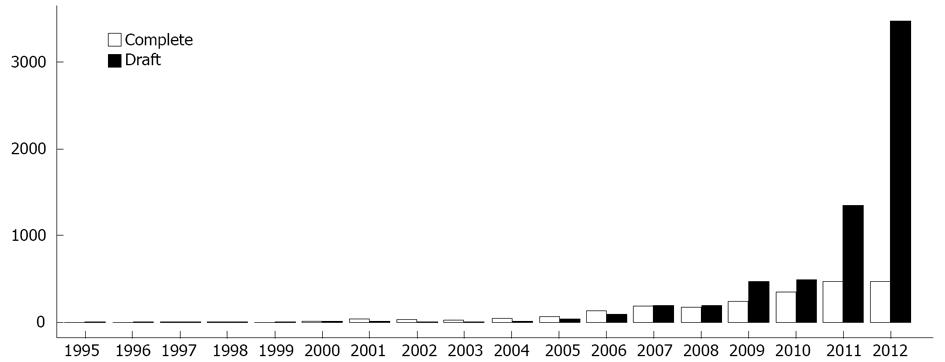

Although NGS is directly responsible for considerable growth in the size of genomic databases, it has also been indirectly responsible for a decrease in genome quality[1,10]. The number of draft genome (partial data) deposits in public databases has grown exponentially since 2005 (Figure 1). In general, if no further studies are planned for a particular organism’s genome, it is more likely to be deposited as a draft genome. Otherwise, the painstaking tasks of improving and finishing the genome (complete data) must be undertaken[18].

Figure 1 Number of complete genome and draft genome (partial data) deposits in public databases.

This review will address the “scientific value” of a newly sequenced genome and the amount of insight it can provide. We will address the factors that could be leading to the increase in the number of draft deposits and the consequent loss of relevant biological information. Additionally, we will summarize the expectations created by NGS technologies regarding vaccine development and antibacterial discovery.

OVERVIEW OF SEQUENCING AND ASSEMBLY

For 30 years, sequencing technologies based on Sanger chemistry dominated the market. Although sequencing had undergone numerous improvements over the years, gene cloning techniques were still necessary to obtain genomic DNA sequences. Therefore, the time and cost required to obtain a complete genome sequence remained high. Moreover, the capacity of parallel sequencing was quite limited[19-21]. NGS platforms made it possible to sequence complete prokaryotic genomes using massively parallel sequencing more rapidly and at a lower cost[20,22].

Although NGS has facilitated sequencing processes, its relatively smaller reads make the assembly process a computational challenge[10,11]. The main limitation of short-read assembly methods is their inability to resolve repetitive regions of the genome without paired libraries[11]. The assembly of repetitive regions was an important issue even before the introduction of NGS platforms; shorter reads only made the problem worse.
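The effect of read length on repeats can be illustrated with a toy sequence. The sketch below is a simplification under stated assumptions: reads are error-free, come from the forward strand only, and start at every position of an invented 39-base "genome" that contains two copies of a 12-base repeat. A read is counted as ambiguous when its sequence occurs at more than one position.

```python
from collections import Counter

def ambiguous_read_fraction(genome, read_length):
    """Fraction of error-free reads whose sequence occurs at more than one position."""
    reads = [genome[i:i + read_length]
             for i in range(len(genome) - read_length + 1)]
    counts = Counter(reads)
    ambiguous = sum(1 for read in reads if counts[read] > 1)
    return ambiguous / len(reads)

# Invented toy genome: unique flanks around two copies of a 12-base repeat.
REPEAT = "ACGTACCGGTAA"
genome = "TTAGC" + REPEAT + "GGATC" + REPEAT + "CATGA"

short_fraction = ambiguous_read_fraction(genome, 8)    # reads shorter than the repeat
long_fraction = ambiguous_read_fraction(genome, 14)    # reads longer than the repeat
print(short_fraction, long_fraction)
```

In this toy case, reads shorter than the repeat cannot all be placed uniquely, whereas reads long enough to reach into the unique flanking sequence can. Real data add sequencing errors and both strands, which makes the problem harder, not easier.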

In 2001, Kececioglu et al[23] argued that it is impossible to correctly assemble regions of the genome that contain identical copies of a sequence. Usually, long DNA repeats are not exact copies. They contain small differences that could, in principle, permit their correct assembly. Nevertheless, a major difficulty arises from sequencing errors. Assembly software must accept imperfect sequence alignments to avoid missing genuine connections between sequences[22]. With the small amount of information within each read adding to the inherent sequencing error, it is difficult to separate true differences within repeated sequences from sequencing errors.

A study by Phillippy et al[24] revealed that the majority of contig ends in draft genomes were associated with repeated regions. They concluded that it was possible to categorize the majority of mis-assembly events into two general classes: (1) repeat collapse or expansion; and (2) sequence rearrangement and inversion. Each of these classes exhibits specific mis-assembly signatures: the first class is the result of incorrect assembly in repetitive regions, including fewer or additional copies; the second class is the result of the rearrangement of multiple repeated copies, which is caused by the insertion of a read between them. The second class may be considered more influential because, if not fixed, it might be interpreted as a real biological rearrangement event[25,26]. If the assembler cannot resolve the region between two genomic fragments, a gap is formed. Gaps may occur due to: (1) an intrinsic characteristic of the sequencing platform that leads to incomplete or incorrect information; or (2) the inability of an assembly algorithm to handle regions of low complexity or repeated DNA[18,27,28]. The process of identifying and closing these gaps is quite laborious and requires additional manual intervention.

Gap closure processes usually involve the design of primers flanking the gap region to perform semi-automated sequencing of the unrepresented parts of the genome[28]. Several bioinformatics methodologies have been developed to facilitate gap closure. IMAGE is a tool that uses de Bruijn methodology to fill gaps with short reads that are aligned with flanking regions of the gap and were not used in the assembly[28]. In 2011, Cerdeira et al[29] developed a similar strategy by using CLC Genomics Workbench for the recursive alignment of unused short reads from the SOLiD platform. GapFiller is another tool that uses local alignment; its main advantage is the use of paired reads to estimate gap size, and it allows the user to define the type of paired library: reverse-reverse, forward-forward, reverse-forward or forward-reverse[30].
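The idea of estimating gap size from paired reads can be sketched in a few lines. This is not the algorithm implemented in GapFiller or any other cited tool; it is a minimal illustration that assumes a single known insert size for the library and read pairs in which one mate maps to the contig on each side of the gap. The function name and the input values are hypothetical.

```python
from statistics import mean

def estimate_gap_size(insert_size, spanning_pairs):
    """Estimate a gap length from read pairs that span it.

    spanning_pairs holds one (left, right) tuple per pair:
      left  = bases from the outer end of the mate on the left contig
              to the right edge of that contig
      right = bases from the left edge of the right contig
              to the outer end of the other mate
    Each pair implies gap = insert_size - left - right; the estimates are averaged.
    """
    return mean(insert_size - left - right for left, right in spanning_pairs)

# Hypothetical library with a 500-base insert size and three spanning pairs.
pairs = [(200, 150), (250, 110), (180, 160)]
gap = estimate_gap_size(500, pairs)
print(gap)
```

In practice the insert size is a distribution rather than a single value, so an estimate of this kind carries an uncertainty that grows with the spread of the library.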

From a purely practical standpoint, assembly tools are not required to produce a perfectly finished genome as an output. Their main function is to reduce the sequencing reads to a manageable number of contigs[26]. The process of finishing a genome, ensuring that gaps are closed and the gene order is correct, requires human decision-making. Therefore, the lack of fully automated processes constitutes a bottleneck in generating complete genomes.

“SCIENTIFIC VALUE” OF A NEWLY SEQUENCED GENOME

The value of a newly sequenced genome can be assessed using many different metrics. If publications are considered the main “currency” within the scientific community, there has been a considerable decrease in the value of new sequences over the last four decades.

The introduction of Sanger methodology in 1977 was one of the main landmarks in the early stages of the genomic era[31]. During the first years of using Sanger sequencing, a sequence of no more than 1000 nucleotides was sufficient for a work to be accepted in a journal such as Cell (current impact factor: 32.40) or Nature (current impact factor: 36.28)[32-34]. In 1980, the shotgun DNA sequencing methodology was introduced, enabling the sequencing of longer DNA fragments[35]. Complete bacterial operons were sequenced and published in journals such as Molecular Microbiology (current impact factor: 5.01) and Proceedings of the National Academy of Sciences (PNAS - current impact factor: 9.68)[36-38].

A combination of DNA sequencing improvements and the newly developed TIGR Assembler[39] culminated in the publication of the first complete bacterial genomes in 1995. Papers containing the complete nucleotide sequences of Haemophilus influenzae Rd (1830137 base pairs) and Mycoplasma genitalium (580070 base pairs) were both published in Science (current impact factor: 31.20)[40,41]. Almost 20 years later, a paper containing the sequence of a prokaryotic genome alone may be published in the Genome Announcement section of the Journal of Bacteriology (current impact factor: 3.82) or in Standards in Genomic Sciences (SIGS - has not been published sufficiently long to receive an impact factor). A recent article by Smith even refers to the not-so-distant “death” of the “genome paper”, noting that the space for genome publication may come to an end soon[42].

The publication impact of newly sequenced genomes decreased following DNA sequencing improvements, and the reason is no mystery. High-impact journals only publish groundbreaking original scientific research or results of outstanding scientific importance. To produce a higher-impact publication, more information must be extracted from genomes. For instance, several genomes may be examined in a comparative genomic analysis or pangenomic study[43,44], or an analysis may focus on the presence or absence of specific markers or on small differences between DNA sequences[26,45]. In this context, the genome becomes a stepping stone to the main goal, the comparative analysis. As the basis of the analysis, the genome sequence remains important. Nevertheless, it may not be of sufficient importance for one to undertake the painstaking task of completing the genome sequence.

WHAT IS LOST WHEN WE OPT FOR A DRAFT GENOME?

Over the years, arguments have been presented in favor both of complete genomes[41,46] and of the superior “tradeoff” that a draft genome represents[47]. The discussion has been centered around two main points: (1) to provide the greatest amount of useful data, sequences must be as complete as possible; and (2) draft genomes (partial data) are sufficient for most scientific contexts. The issue at stake is the extra money and manpower necessary to finish a genome. Is the additional information contained in a finished genome worth the investment? To answer this question, one must identify the information that is lost from a draft and analyze the quality of data that is generated using drafts. Furthermore, it is necessary to understand the limits of draft genome use.

The first issue to consider is whether it is possible to properly identify all of an organism’s genes in a draft genome. Gene characterization consists of the following: (1) gene prediction with the identification of an open reading frame (ORF); and (2) the functional annotation of the gene product. The main gene identification problems in drafts are associated with the partial or complete loss of ORFs[10]. Such errors may lead either to over-annotation, due to the annotation of multiple fragments originating from the same ORF, or to under-annotation, possibly due to the absence of partial or entire domains from the ORF[10]. These problems affect genomic analyses, causing errors due to missing ORFs that are not annotated or due to multiple fragments that belong to the same ORF but are annotated separately. In other words, the mere absence of a gene from a draft cannot be considered definitive proof of its absence from the organism’s genome[10,41].
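How a gap can remove a gene from an annotation may be illustrated with a deliberately naive ORF finder. The sketch assumes forward-strand ORFs only, ATG as the sole start codon and a minimum length of five codons; the 30-base sequence and the position of the gap are invented for the example and do not correspond to any real gene or gene-prediction tool.

```python
STOP_CODONS = {"TAA", "TAG", "TGA"}

def find_orfs(sequence, min_codons=5):
    """Return (start, end) pairs for forward-strand ORFs: ATG up to an in-frame stop."""
    orfs = []
    for frame in range(3):
        start = None
        for pos in range(frame, len(sequence) - 2, 3):
            codon = sequence[pos:pos + 3]
            if start is None and codon == "ATG":
                start = pos
            elif start is not None and codon in STOP_CODONS:
                # Count codons including the stop codon.
                if (pos + 3 - start) // 3 >= min_codons:
                    orfs.append((start, pos + 3))
                start = None
    return orfs

# Invented toy region containing one eight-codon ORF.
complete = "CCC" + "ATGGCTGAACGTCTGAAAGGTTAA" + "CCC"

# The same region as a draft: bases 12 to 17 fall in a gap between two contigs.
contig_a = complete[:12]
contig_b = complete[18:]

orfs_complete = find_orfs(complete)
orfs_draft = find_orfs(contig_a) + find_orfs(contig_b)
print(orfs_complete, orfs_draft)
```

The ORF is found in the complete sequence but in neither contig, because the first contig lacks the stop codon and the second lacks the start codon. The gene exists in the organism, yet it is absent from the draft annotation.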

The pangenomic approach is one type of analysis that may be impaired by reliance on draft genomes, because many genes in a draft may be misidentified due to fragmentation. Pangenomic projects attempt to characterize the gene pool of a bacterial species as the genes that are present in all strains (the “core genome”) and the genes that are present in only a few strains (the “dispensable genome”)[43]. Horizontal gene transfer (HGT) analysis is another approach that cannot be performed using drafts. HGT is one of the main sources of variability among bacteria because it allows the acquisition of several new genes[36,37]. There is evidence that most gaps in genomic sequences are associated with transposases, insertion sequences and integrases, structures that usually flank a genomic island[48]; the regions acquired by HGT are therefore the ones most likely to be interrupted or missing in a draft. Another approach that may be impaired by reliance on drafts is phylogenomics, which aims to reconstruct both the vertical and lateral gene transfer processes of a bacterial species using a whole-genome analysis[49].
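The sensitivity of core and dispensable genome estimates to missing genes can be shown with simple set operations. The sketch assumes that each strain is reduced to a set of gene identifiers; the strain names and gene sets are invented, and real pangenome pipelines cluster genes by sequence similarity rather than by name.

```python
def pangenome(strain_genes):
    """Split the gene pool of a species into core and dispensable genes.

    strain_genes maps a strain name to the set of genes annotated in it
    (at least one strain is required).
    """
    gene_sets = [set(genes) for genes in strain_genes.values()]
    pan = set().union(*gene_sets)
    core = set.intersection(*gene_sets)
    return core, pan - core

# Invented example: three strains with complete genomes.
complete = {
    "strain_A": {"dnaA", "gyrB", "recA", "toxX"},
    "strain_B": {"dnaA", "gyrB", "recA"},
    "strain_C": {"dnaA", "gyrB", "recA", "islY"},
}

# Same strains, but strain_B is a draft in which recA fell into a gap.
draft = {**complete, "strain_B": {"dnaA", "gyrB"}}

core_complete, disp_complete = pangenome(complete)
core_draft, disp_draft = pangenome(draft)
print(sorted(core_complete), sorted(core_draft))
```

A single gene lost from one draft is enough to move it from the core genome to the dispensable genome, even though it is present in every strain.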

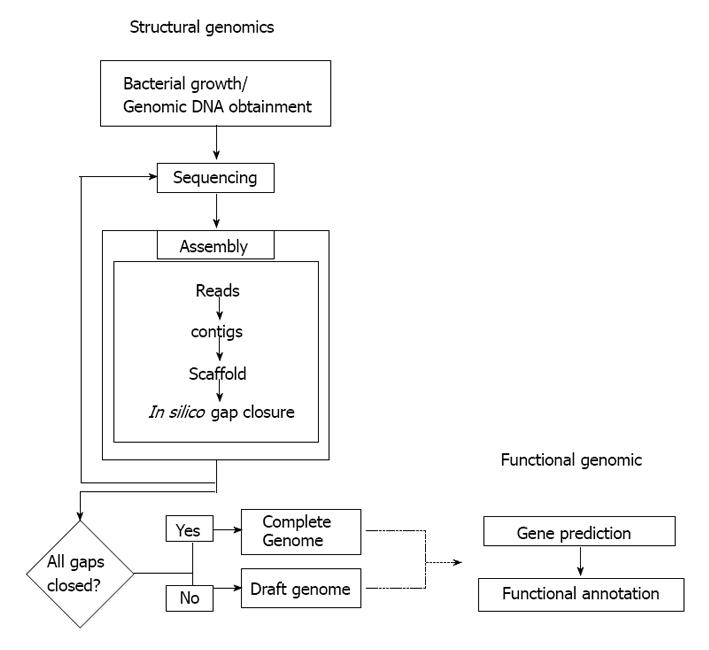

Although not strictly related to drafts, the functional annotation of genes is another feature that is usually neglected when we opt for a draft genome (Figure 2). Complete genomes may also present this problem because the quality of functional annotation is related to the amount of effort dedicated to a genome. DNA sequence is being generated much more rapidly than it can be analyzed; thus, a large proportion of the sequence information in databases has been annotated solely by automatic algorithms[50]. It is disturbing that although automatic annotation algorithms have improved over the years, misannotation has increased over time[50]. The misannotation of a reference strain is particularly harmful because the error will likely be propagated to other genomes. In our attempts to exploit the full potential of NGS, we risk having databases filled with incomplete and/or incorrect genomic data.

Figure 2 General workflow of the sequencing process for a bacterial genome.

Because the purpose of many sequencing projects is to identify a small number of differences between a newly sequenced genome and the sequence of a closely related species, a large number of genomes are left as drafts[26]. Considering the constant evolution of organisms, a sequenced genome represents a snapshot in the biological history of a species. Therefore, a single finished genome might be useful for decades of future studies. By opting for draft genomes, we may be closing off the full gamut of future scientific analysis.

VACCINE DEVELOPMENT

Genomic information was expected to boost vaccine discovery. In an attempt to measure the impact of genomic information on this field, Prachi et al[2] analyzed all the patent applications that contained genomic information. They observed that there was an enormous increase in such applications shortly after the first complete genomes were released, but since 2002, there has been a continuous decrease. The authors attributed this decrease to more stringent legal requirements, which call for empirical evidence to complement in silico data.

The initial increase in patent applications containing genomic information was related to the development of a new paradigm in vaccine development. In 2000, Rappuoli[51] described the “reverse vaccinology” (RV) concept, in which he proposed inverting the traditional process of antigen identification. Instead of identifying the antigenic components of a pathogenic organism using serological or biochemical methods, RV uses the organism’s genome to predict all of its protein antigens. RV approaches mainly focus on secreted proteins because they are more likely to induce immune responses. Secreted proteins are involved in several processes that modulate the host-pathogen relationship, such as cell adhesion and invasion, as well as resistance to stress conditions[52-54]. Over the years, several methodologies have been developed to predict secreted proteins and to evaluate their potential immunological properties.

In 2010, Vaxign was released as the first vaccine design tool with a web interface (http://www.violinet.org/vaxign/). Vaxign allows users to submit their own sequences to perform vaccine target predictions. The Vaxign predictions have been consistent with existing reports for organisms such as Mycobacterium tuberculosis and Neisseria meningitidis[55]. Another vaccine design tool is MED (Mature Epitope Density - http://med.mmci.uni-saarland.de/). MED attempts to select the most promising vaccine targets by identifying proteins with higher concentrations of epitopes[56]. There are also tools exclusively for protein epitope prediction, such as Immune Epitope Analysis (http://tools.immuneepitope.org/main/) and Vaxitop (http://www.violinet.org/vaxign/vaxitop/index.php).

Because a large number of bacterial genomes are already available, reverse vaccinology is quite accessible and inexpensive. Nevertheless, as has been previously discussed[57,58], the expectations for reverse vaccinology techniques do not correspond to reality, given the small number of vaccines that have been developed using the bacterial genome sequences available[59]. This occurs because several other factors are involved in the host response during infection, for example, the production of antibodies by the immune system.

ANTIBACTERIAL DISCOVERY

The period between the 1930s and the 1960s is known as the “golden age” of antibiotic discovery[11,60]. During this period, most of the known classes of antibiotics were discovered. These discoveries involved screening natural products regardless of their mechanisms of action. After most of the low-hanging fruit had been harvested, the rate of antibacterial discovery decreased, culminating in a slowdown beginning in the 1990s[61].

Hopes for turning this void into a rapid acceleration accompanied the completion of the first bacterial genome sequences. The goal was to use comparative genomic analysis to identify potential targets present in a desirable spectrum (e.g., the bacteria responsible for upper respiratory tract infections)[3,4,62]. It was naive to assume that having the genome sequences would be sufficient for this level of discovery; a possible drug target must undergo numerous stages, from discovery through human clinical tests, and it is not possible to develop drugs for all potential targets[3,62]. Nevertheless, the prospect of exploring hundreds of potential targets revived the interest of pharmaceutical companies.

After some years of trials, several companies ended their target-based programs because of a lack of productivity. Despite reports of multi-resistant bacterial strains, the efforts to discover new antibacterial targets were again reduced[63,64]. Although genomics has not been able to reverse the lack of new antibiotic development, it has significantly improved screening methodologies. Genomics has facilitated high-throughput drug campaigns, which are being used to determine the mechanisms of action of antibacterial compounds and bacterial resistance mechanisms[4].

CONCLUSION

Several next-generation platforms have been developed in recent decades, together with bioinformatics programs designed to enhance the performance and optimization of omics techniques. It is not yet possible to integrate reads from different sequencers into a single assembly[17,23]. Hybrid approaches now under development aim to reduce the amount of manual intervention needed to complete a genome sequence by resolving repetitive regions.

Improvements are expected not only in sequencing platforms but also in assemblers. Recently, two groups assessed the quality of the currently available assemblers. The 2011 Assemblathon was the first competition among assemblers[65]. For this competition, simulated data were generated and groups of assemblers were asked to blindly assemble them. The use of simulated data poses a problem in determining the applicability of the results to other data sets. The 2012 GAGE (Genome Assembly Gold-Standard Evaluations) competition for assembling real data resulted in the following conclusions: (1) the data quality has a greater influence on the final outcome than the assembler itself; and (2) the results do not support the current measures of correctness (related to contiguity)[26].
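Why contiguity is a weak proxy for correctness can be illustrated with N50, a widely used contiguity statistic. The choice of N50 is our assumption for the purpose of illustration; the text above does not name the specific measures that were evaluated. N50 is the length of the contig at which the running total of contig lengths, taken from longest to shortest, first reaches half of the assembly size. The contig lengths below are invented.

```python
def n50(contig_lengths):
    """Length of the contig at which the cumulative length reaches half the assembly."""
    total = sum(contig_lengths)
    running = 0
    for length in sorted(contig_lengths, reverse=True):
        running += length
        if running * 2 >= total:
            return length
    raise ValueError("n50 requires at least one contig")

# Invented assembly, contig lengths in kilobases.
correct_assembly = [80, 70, 50, 40, 30, 20]

# Same data after an assembler wrongly joins the two longest contigs.
misjoined_assembly = [150, 50, 40, 30, 20]

n50_correct = n50(correct_assembly)
n50_misjoined = n50(misjoined_assembly)
print(n50_correct, n50_misjoined)
```

In this example the erroneous join more than doubles N50, so an assembly with a mis-assembly scores better on contiguity than the correct one.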

There is a large gap between the availability of genomic sequences in databases and the commercial production of vaccines and antibiotics in recent years, especially in terms of investment and success (“expected return”). Efforts to develop drugs against all potential targets and to produce effective vaccines have had limited success. In contrast, there has been an acceleration in the discovery of new targets due to the refinement of bioinformatics tools for this purpose, such as epitope mapping and searching for secreted proteins. However, the major problems facing vaccine and antibiotic development, such as resistance mechanisms and host immune responses, remain unsolved.

Genome analysis constitutes a strategy for the expansion and diversification of the pharmacology and vaccinology sectors. This methodology can be used to explore a large number of targets and to reduce the costs of molecular and immunological tests. Finally, to improve the production of antibiotics and vaccines, it is necessary to know more about bacterial regulatory pathways. New interactome and microbiome studies must be implemented to assist this search.

ACKNOWLEDGEMENTS

This work involved the collaboration of various institutions, including the Genomics and Proteomics Network of the State of Pará of the Federal University of Pará (Rede Paraense de Genômica e Proteômica da Universidade Federal do Pará), the Amazon Research Foundation (Fundação Amazônia Paraense - FAPESPA), the National Council for Scientific and Technological Development (Conselho Nacional de Desenvolvimento Científico e Tecnológico - CNPq), the Brazilian Federal Agency for the Support and Evaluation of Graduate Education (Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - CAPES) and the Minas Gerais Research Foundation (Fundação de Amparo à Pesquisa do estado de Minas Gerais).

P- Reviewers: Bhattacharya SK, Faik A S- Editor: Ma YJ L- Editor: A E- Editor: Lu YJ