Published online Mar 16, 2024. doi: 10.4253/wjge.v16.i3.126

Peer-review started: December 31, 2023

First decision: January 16, 2024

Revised: January 18, 2024

Accepted: February 23, 2024

Article in press: February 23, 2024

Published online: March 16, 2024

Processing time: 73 Days and 19.5 Hours

The number and variety of applications of artificial intelligence (AI) in gastr

Core Tip: As an endoscopist you should familiarise yourself with the capabilities, strengths and weaknesses of any artificial intelligence (AI) technology you intend to use. It is important to be cognisant of the human factors and psychological biases that influence how you as an individual user treat advice from AI platforms. Those using AI technologies in healthcare should be involved in the development of those technologies and should advocate for a human-centred approach to their design and implementation.

- Citation: Campion JR, O'Connor DB, Lahiff C. Human-artificial intelligence interaction in gastrointestinal endoscopy. World J Gastrointest Endosc 2024; 16(3): 126-135

- URL: https://www.wjgnet.com/1948-5190/full/v16/i3/126.htm

- DOI: https://dx.doi.org/10.4253/wjge.v16.i3.126

Artificial intelligence (AI) encompasses a wide variety of applications for sophisticated computer algorithms that use large volumes of data to perform tasks traditionally thought to require human intelligence[1]. There is a growing list of current and proposed applications for AI in medicine, including direct patient interaction with AI chatbots to answer patient queries, analysis of large amounts of disparate data to predict disease diagnosis and course, and interpretation of images from radiological investigations[2-4]. In gastroenterology, potential clinical applications range from use of domain-specific large language models (LLMs) in the triage of specialist referrals to prediction of early-stage pancreatic cancer before it becomes overtly visible on imaging[5,6].

Following the development of convolutional neural networks (CNNs) for computer-aided detection and diagnosis of pathology in the fields of radiology and dermatology, gastrointestinal (GI) endoscopy became an area of early research into applications of CNNs in medicine[7-10]. Among the most promising initial applications of AI in GI endoscopy were computer-aided detection (CADe) and computer-aided diagnosis (CADx) of premalignant polyps during colonoscopy using machine learning (ML) systems[11,12]. These applications were prioritised in an effort to improve adenoma detection rate (ADR) and to differentiate premalignant polyps from those without malignant potential, with the attendant possibility of reducing incidence of colorectal cancer (CRC) and reducing costs and complications associated with unnecessary polypectomy[13,14]. Additional applications have developed rapidly to include detection and diagnosis of other pathology in upper and lower GI endoscopy, capsule endoscopy and biliary endoscopy. There has also been initial exploratory use of LLMs to aid decision-making on management of early CRCs and patient-facing applications to determine adequacy of bowel preparation prior to colonoscopy[15-19].

While initial results on colorectal polyp CADe showed impressive improvements in key metrics of colonoscopy quality[20], some subsequent real-world studies showed more modest effects or even no effect, and noted an increased rate of unnecessary resection of non-neoplastic polyps[21-23]. It is possible that factors involved in real-world human-AI interaction (HAII) are a driver of such differences between experimental and real-world results[24].

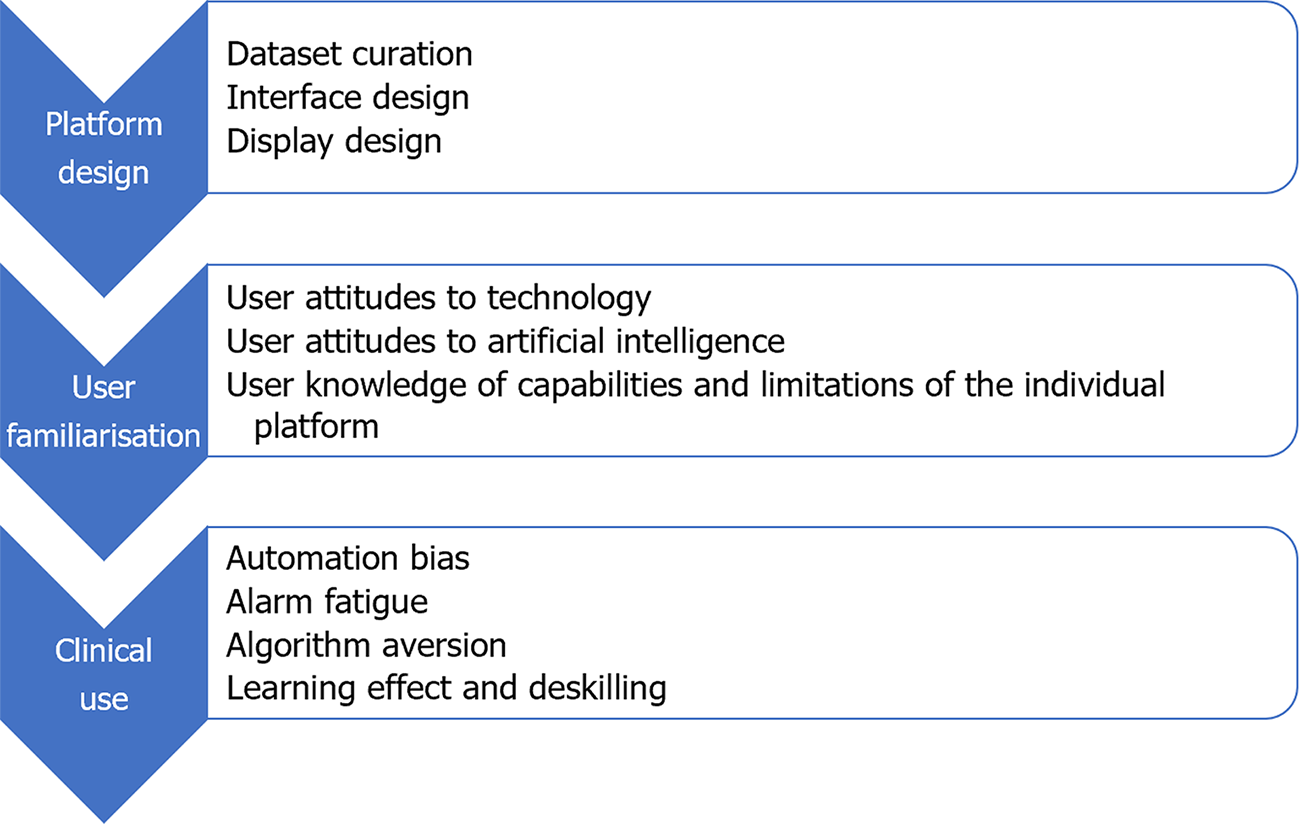

More than most other advances in medical science, successful implementation of AI platforms will depend not solely on the technical success and efficacy of the platform, but equally on the ability of the technology to interact with its human operators[25]. There was early adoption of CADe technology in the field of breast radiology, based on experimental evidence of benefit[26]. Analysis of real-world data from those systems later showed that early iterations contributed to greater resource utilisation due to false positives and increased additional radiological investigations[27]. An important lesson for application of AI in GI endoscopy is that the effectiveness of AI platforms and their impact on patient outcomes can only be properly assessed in real-world settings. Despite the rapid progress in development and roll-out of new applications for AI in GI endoscopy, the real-world effects of AI on clinician decision-making remain underexplored[28]. Multiple factors can affect HAII at each phase of the development and deployment of an AI platform (Figure 1). Areas of interest in the interaction between humans and AI in GI endoscopy, which will be explored in this review, include: (1) Human design choices in creation of AI platforms and their user interfaces; (2) Regulatory processes and interventions for new AI platforms; (3) Human factors influencing user interaction with AI platforms; and (4) Clinician and patient attitudes toward individual platforms and AI broadly.

Growth in use of information and computer technologies in the 1980s led to recognition of the importance of studying the relationship between humans and these new technologies[29]. The field of human–computer interaction (HCI) sought to investigate social and psychological aspects of interactions that would influence the acceptability and utility of these new technologies[30]. Humans interacted with early computers by inputting code via keyboard, but as humans’ methods of interacting with technology have become more sophisticated, the influences on and impact of HCI have also become more complex[31]. Ease of use is recognised as an important driver of uptake of new technological products or platforms[32].

Psychological aspects of HCI were extensively explored in the pre-AI era. The ‘computers are social actors’ (CASA) theory held that, because psychological mechanisms evolve over centuries rather than decades, the human brain reflexively treats any entity with human-like abilities as human[29,33]. Recent work has queried the durability of the CASA effect, and suggested that the human brain’s treatment of interactive technology as human may relate more to a technology’s relative novelty than to its existence[34]. Whether human operators innately regard new technologies as tools or as other humans has significant ramifications for how the presence of AI may affect human performance.

A key question with regard to technological development in any sector is that of function allocation, i.e. deciding which roles should be performed by the human and which by the technology. AI has led to a rapidly burgeoning cadre of tasks that can be performed by technology, with an ever-diminishing number of tasks remaining the sole preserve of humans[29]. Since the conception of AI, there has been disagreement between researchers on what the aim of AI should be: to replace human labour or to augment human performance. The prevailing view has shifted frequently between these two positions in the intervening period[35]. At the current juncture, it appears that the decision will be made by the speed at which the technology can be developed, rather than by specific ethical considerations. A contrast between HCI and HAII is seen in the view of the former that computers and new technologies should be assistive, whereas the latter field recognises that AI has the potential to replace human efforts entirely in some instances, so-called agential AI[36].

CADe and CADx platforms based on CNNs are created by training the programme on large volumes of data, e.g. images and videos with a defined diagnosis, allowing the programme to learn patterns in the images that are suggestive of the presence of pathology or of the specific diagnosis of interest[37]. Design of CADe and CADx systems requires the selection, curation and annotation of a large number of images of relevant pathology, to use as ‘ground truth’ for training and testing of the algorithm, while design of LLMs requires large volumes of text data. Selection and curation of such image or text data represents the first point of contact between humans and the AI platform. There are several ways in which human decisions on training and design can influence the long-term operation of the AI platform. The functioning of the AI platform after its creation and the mechanism by which it arrives at its decisions are both opaque, with the processes being described as a ‘black box’[38]. The possibility of building biases into the platform’s functioning makes selection of the best possible training database imperative, as biased training data have been shown in other applications to have unintended negative consequences on health outcomes for patients from minority groups[39].

Difficulties can arise due to a number of problems with the training dataset, giving rise to different types of selection bias. When a CADe algorithm is trained using images from prior colonoscopies, those images are typically compressed and altered in the process of saving them to a database. The compression may introduce artefact and alter the value of the image for the CNN’s learning. It may also cause changes to the image that are imperceptible to the human but integrated into the algorithm’s processing. Choosing images that are too idealised may lead the algorithm to be poor at detecting pathology that deviates from archetypal descriptions[40]. There is also concern that if a CNN is trained on data that comes from homogeneous Western populations in the most developed countries, this may weaken the algorithm’s ability to give appropriate advice in racially diverse groups[41]. An unbalanced dataset with too many instances of pathology and not enough images without pathology may skew the algorithm, causing decreased specificity. The larger the number of images used to train the algorithm, the better the system can be expected to perform[42]. When the algorithm encounters, in real-world use, images outside what it encountered in the training set, it is more likely to flag those images as pathology[40]. A novel methodology that trains a CADe algorithm by teaching it to read images in a similar fashion to an expert clinician has recently been described[43].

Design of the user interface is an important factor in optimising CADe/CADx performance. Design features that minimise additional cognitive burden and make alarms and advice coherent can result in synergistic effects. Conversely, poorly-designed platforms may increase the risk of automation bias, discussed later[44]. The effect of presenting, alongside a CADx bounding box, additional data regarding the algorithm’s confidence in the given diagnosis, may alter the endoscopist’s trust in the AI advice and influence their likelihood to endorse the same diagnosis[45,46].

Precise definitions and classifications for medical device software and AI systems differ between jurisdictions. In general, however, AI or ML-based tools or algorithms used for diagnostic or therapeutic purposes, including applications for GI endoscopy, will meet the definition of a medical device and should be appropriately developed and evaluated before they are approved for clinical use, in accordance with the relevant regional regulation[47]. Similarly, clinical research, including pilot studies to generate the clinical data required to validate and appraise novel and uncertified AI tools in endoscopy, should be performed in accordance with applicable regulatory and ethical requirements.

To facilitate new and potentially beneficial advancements while protecting patients, regulation and scrutiny should be proportionate to the risk of the software. It is recognised that regulation of AI systems as medical devices is challenging, and that this challenge is not unique to GI applications[48]. The intended use of the AI, and not simply the technology, is a critical determinant of risk; for example, CADx for malignancy diagnosis would generally fall into a higher risk category and require sufficient evidence and evaluation to support its use. Other important principles influencing risk evaluation include transparency, explainability, interpretability and control of bias. In CADe in GI endoscopy this includes the ability of the clinician user to detect erroneous output information, in contrast to so-called ‘black box’ algorithm-based interpretations. While many AI and ML applications have been approved, some experts have questioned the ability of currently emerging LLM products to meet these principles, and GI clinicians must consider the evidence base and reliability of such devices for use in clinical practice[49]. Outside of basic regulation and licensing, clinicians and health systems trialling or implementing AI in GI endoscopy practice have a responsibility to ensure that the applications (whether diagnostic or therapeutic) have a sufficient evidence base and that, for example, the clinical data supporting the algorithms are reliable and representative of the intended patient population.

Analysis of the interaction between humans and AI platforms in GI endoscopy can be informed by a human factors approach, examining how human work interacts with work systems[50]. Human factors theories help to study and optimise components of work systems to allow human workers to get the most from the system[25]. Human factors research also recognises that there are several cognitive biases that can affect human interaction with AI[51]. Some of the cognitive biases that are most relevant to applications of AI in GI endoscopy are discussed below.

Automation bias refers to the human propensity to disengage cognitively from tasks that are assigned for execution or support by an external technology, usually resulting in decreased situational awareness[52]. The potential for negative outcomes due to automation bias has been explored through a human factors paradigm in healthcare and other settings requiring high levels of accuracy[50,52]. In the example of AI in GI endoscopy, automation bias may manifest as an over-reliance on a CADe or CADx platform to rapidly detect and diagnose all pathology encountered during the endoscopic procedure[46]. The use of automated decision support systems that are presumed to be highly accurate can lead to an over-reliance on the part of users, which may manifest as bias or as complacency.

Automation complacency may manifest with the endoscopist paying less attention to the presence of on-screen pathology during endoscopy, due to an assumption that the software will detect any pathology that appears[53]. This reduced vigilance, whereby the user becomes dependent on the software to shoulder the detection burden, can result in reduced human detection of pathology[54,55]. Over-reliance may also manifest as bias: the user can become progressively more confident in the AI platform’s performance, to the point where they follow its advice against their own correct judgement[56,57]. Studies of mammography and histology showed concerning over-reliance of clinicians on incorrect AI advice labelling cancers as benign[57]. The complexity of verifying that the AI platform is performing appropriately affects the degree of automation bias that arises in a given task[44]. In endoscopy, the ease of that verification task may vary depending on the endoscopist’s experience, with the complexity of verification being higher for non-expert endoscopists than for experts.

It is important that users of AI platforms are educated on the limitations of the individual platform. The latency of the system i.e. the time difference between pathology appearing on-screen and recognition of the pathology by the computer system is typically as short as 0.2 s in the current generation of CADe platforms[37]. The cumulative time taken for the platform to identify the pathology, activate the alarm and for the user to register the alarm may be significantly longer, however.

A related cognitive bias is anchoring bias, which posits that when presented with external advice, humans tend to adjust insufficiently from that advice toward their own opinion, in reaching their decision[58]. It has been suggested that this insufficient adjustment is due to a trade-off between the accuracy required in the decision and the time required to fully consider the difference between the external advice and one’s own opinion[59]. Taking longer to consider a decision may be an effective mitigation against anchoring bias[51]. In real-time CADe-assisted endoscopy, however, the rapidity of decisions is an important factor in the efficiency of the procedure.

In CADx applications, the effect of AI may be synergistic for both expert and non-expert endoscopists[46]. A study that reached this conclusion advised endoscopists to treat advice from CADx as that from a colleague, weighing it against how accurate it usually is compared to the endoscopist. A simple adjustment to reduce automation bias may involve decreasing the prominence of alarms on screen[60]. More comprehensive strategies to mitigate automation bias could aim to decrease cognitive load on the endoscopist, instigate thorough training on use of the specific AI platform, address explainability and transparency of decision making and design adaptive user interfaces[44,52,61].

False positives are of significant interest in CADe, as they may negatively affect the efficiency and economy of endoscopic procedures[62]. A false positive may prolong the procedure as the endoscopist reviews the highlighted area[63]. It may also add to the cost of the procedure by increasing use of implements e.g. forceps/snares and raising the number of normal tissue samples submitted for processing and pathologic analysis[64]. In colonoscopy, a CADe false positive may be caused by a normal colonic fold, other normal anatomy (e.g. the ileocaecal valve), a non-polypoid abnormality e.g. a diverticulum, or luminal contents[63]. In commercially-available CADe systems, false positives in colonoscopy may occur in a ratio to true positives as high as 25:1[63].

The incidence of false positives during colonoscopy has been reported to range from 0.071 to 27 alarms per colonoscopy, depending heavily on the definition used[11]. Whereas some studies defined a false positive as any activation of a bounding box, others defined it as an activation that resulted in resection of normal tissue. Most studies examined the incidence of false positives only during withdrawal, as the CADe system was typically only active during withdrawal. In real world practice, however, the CADe platform is often active during both insertion and withdrawal, likely leading to more false positives than in the reported experimental studies. False positive alarms may be categorised according to the amount of time the endoscopist spends examining the area involved in the false positive alarm: mild (< 1 s), moderate (1-3 s) or severe (> 3 s)[63]. While most false positives in the published studies did not result in additional examination time, the endoscopists involved in those studies were experts, so may have been more easily able to dismiss false alarms than non-expert endoscopists.
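The time-based grading of false positive alarms described above can be expressed as a short classification routine. The following is a minimal sketch only: the function names and the example examination times are hypothetical, and the handling of the boundary values (exactly 1 s and exactly 3 s are treated as moderate) is an assumption, since the categories are given only as < 1 s, 1-3 s and > 3 s.

```python
from collections import Counter


def grade_false_positive(exam_seconds: float) -> str:
    """Grade one false alarm by the time spent examining the flagged area.

    Categories follow the text: mild (< 1 s), moderate (1-3 s), severe (> 3 s).
    Boundary handling (1 s and 3 s count as moderate) is an assumption.
    """
    if exam_seconds < 0:
        raise ValueError("examination time cannot be negative")
    if exam_seconds < 1.0:
        return "mild"
    if exam_seconds <= 3.0:
        return "moderate"
    return "severe"


def summarise_false_positives(exam_times):
    """Count false alarms in each grade for a single procedure."""
    counts = Counter(grade_false_positive(t) for t in exam_times)
    return {grade: counts.get(grade, 0) for grade in ("mild", "moderate", "severe")}


# Hypothetical examination times (seconds) for six false alarms.
example_times = [0.2, 0.5, 1.0, 2.4, 3.0, 4.5]
print(summarise_false_positives(example_times))
```

Reporting counts per grade, rather than a single total, would make studies that use different definitions of a false positive easier to compare.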

Alarm fatigue is a well-described phenomenon whereby the repetitive activation of visual or audio alarms causes diminished, delayed or absent response in the user over time[65]. Alarms have the potential to add to cognitive burden on the endoscopist, increasing fatigue and negatively impacting performance[66]. While the amount of time taken to examine the site of each false alarm is low in published studies, the effect of repeated activations (at a rate of 2.4 ± 1.2 false positive alarms per minute of withdrawal time) on endoscopist fatigue and possibly on algorithm aversion remains to be elucidated[63].

The frequency of alarms may be addressed by raising the confidence threshold of the CADe, i.e. decreasing the sensitivity of the platform, though any reduction in false alarms would need to be balanced against the risk of missed pathology that comes with decreased sensitivity. AI may also provide part of the solution for this problem, through development of CADe platforms with better accuracy and through filtering technology that uses generative learning to suppress false positives in real-world use[67]. Another approach may be to increase the latency of output, so that activations of the bounding box lasting less than one second, which are almost always spurious, are suppressed and do not trigger an alarm. Alarm fatigue may also be reduced by minimising the alarm stimulus, e.g. a visual alarm without an audio alarm, or by altering the prominence or display of the bounding box.
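The idea of suppressing bounding-box activations that last less than one second can be illustrated with a simple persistence filter over per-frame detections. This is a hedged sketch rather than a description of any commercial system: the function name, the per-frame boolean input, the frame rate and the example sequence are all assumptions made for illustration.

```python
import math


def suppress_brief_activations(frame_flags, fps, min_duration_s=1.0):
    """Return per-frame alarm flags, raising an alarm only once a detection
    has persisted for at least `min_duration_s` of consecutive frames.

    frame_flags: iterable of booleans, True where the (hypothetical) CADe
    model drew a bounding box on that frame.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    min_frames = max(1, math.ceil(min_duration_s * fps))
    alarms = []
    run_length = 0  # number of consecutive frames with a detection
    for detected in frame_flags:
        run_length = run_length + 1 if detected else 0
        alarms.append(run_length >= min_frames)
    return alarms


# Hypothetical sequence at 4 frames per second: a 2-frame flicker (0.5 s)
# followed by a detection that persists for 5 frames (1.25 s).
flags = [True, True, False, True, True, True, True, True, False]
print(suppress_brief_activations(flags, fps=4))
```

The design trade-off is visible in the output: the brief flicker never triggers an alarm, but the persistent detection is only announced after the minimum duration has elapsed, so every true alarm is delayed by that amount.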

The ‘CASA’ phenomenon, discussed earlier, was a cornerstone of early HCI research. More recent research has shown that the way humans interact with technology is more nuanced than simply treating a new technology as they would another human. There are multiple influences on how humans interact with technology and how they use or discard advice given by technology, though the interaction of these factors is poorly understood[68,69].

Several studies have shown that a human user is likely to judge an AI algorithm more harshly for a mistake in advice than they would judge another human. This results in the user being substantially more likely to disregard the algorithm’s future advice, a phenomenon known as algorithm aversion[70]. In contrast to automation bias, algorithm aversion suggests that once a human user notices the imperfect nature of the algorithm advising them, their adherence to the algorithm’s future suggestions decreases, causing under-reliance on the AI system[71]. More recent work suggests that there are many factors affecting whether a user develops algorithm aversion, and durability of the phenomenon; these may include the individual endoscopist’s expertise, attitudes to AI and expectations of the system. Experts may be more likely to reduce their adherence to AI advice after a false alarm than non-experts, even when later advice is correct[72,73].

In the CADe setting, where the platform alerts for many more false positives than true positives, the impact of algorithm aversion may be important. A systematic review of the factors influencing algorithm aversion identified incorrect expectations of the algorithm, control of decision making and external incentives as significant contributors[55]. With respect to AI in GI endoscopy, these factors may provide a framework for research on the human and AI platform variables that affect the propensity of a user to develop algorithm aversion.

The effect of CADe and CADx platforms on an endoscopist’s learning and on their development of skills essential to performance of endoscopy is uncertain. Several studies have shown that CADe may improve a trainee’s ADR to close to that of an expert, providing additional safety and reducing the adenoma miss rate[74]. It is not clear, however, whether this improved performance produces a persistent learning effect or whether it may bring about dependence of trainees on the CADe platform. There is some evidence that the ability of such platforms to draw a trainee endoscopist’s eye to a polyp and to give advice on the likely histologic type of the polyp may improve the trainee’s recognition and diagnosis of such lesions[46].

Evidence from non-endoscopic applications of AI shows that the potential for non-expert clinicians and female clinicians to over-estimate the reliability of an AI platform raises concerns for poor training outcomes and for inequitable distribution of performance benefits[68]. Interestingly, providing an explanation for its decision does not appear to improve the application of AI for training. With explainable AI platforms that showed the user how they had arrived at their advice, trainees were more likely to accept the advice, even when it was incorrect, than if no explanation was given, the so-called ‘white box paradox’[75].

Visual gaze pattern (VGP) is an important metric in vigilance tasks including detection of pathology during endoscopy, with substantial differences between VGPs of experts and those of non-experts[66,76-78]. Analysis of the VGP of endoscopists with high ADRs showed a positive correlation with VGPs that tracked the periphery of the bowel wall and the periphery of the screen in a ‘bottom U’ configuration during colonoscopy[76,79]. The repeated attraction of the endoscopist’s attention to a bounding box on screen may serve to embed alterations in the endoscopist’s VGP, which have been posited to have a negative effect on an endoscopist’s attainment of expertise in polyp detection in colonoscopy[80]. In that eye-tracking experiment, endoscopists’ gaze patterns focused more on the centre of the screen when using CADe, reducing their likelihood of detecting pathology peripherally.

The quality of HAII depends to a significant degree on the attitudes of users and patients toward the technology. Levels of trust in technology generally, and in AI technologies specifically, are heterogeneous across groups of clinicians and groups of patients[81]. They depend on many factors including personal, professional, organisational and broader cultural considerations[82]. Research and speculation on the role of AI platforms have occupied increasing amounts of space in the endoscopy literature. The promise of AI in revolutionising patient care and administrative burden has been much vaunted in academic literature and in popular media, leading to high levels of awareness of AI among the general population, but low levels of knowledge on specific applications[83].

Knowledge of AI is seen to correlate with a positive perception of the benefits of AI, and perhaps an underestimation of its risks[84]. Surveys of the attitudes of gastroenterologists and other endoscopists in the United States and the United Kingdom show a high degree of optimism on the potential role of AI to improve quality of care for patients[85]. They also support development of guidelines for use of AI devices[86] and endorse concerns that CADe technology will create operator dependence on the technology[87].

Patient attitudes toward AI algorithms making decisions or offering advice appear more cautious[88]. When used as a tool by their clinician, patients perceive benefit in AI in specific settings including cancer diagnosis and treatment planning[89]. Patient attitudes to use of AI in GI endoscopy require further research.

In many of its current applications, AI marks a fundamental transition from technology as a tool to technology as a team member. Research is required to define what skills clinicians will need to optimally leverage AI technologies and to apply AI advice with adequate discrimination. It will then be necessary to decide how best to teach these skills from undergraduate to expert endoscopist level.

While there are regulatory pathways for appropriate trialling and development of AI software applications, guidance for clinical evidence requirements is lacking for medical AI software in general and not limited to software devices in GI endoscopy. Frameworks for design and reporting of trials involving AI are therefore to be welcomed[90]. Uniform definitions of variables (e.g. false positive) are needed for research and reporting of real-world performance of AI platforms. Several professional societies have published opinions on priority areas for future research on AI in GI endoscopy[91,92]. These opinions place a notable emphasis on technical outcomes, rather than on outcomes related to human interaction or patient-centred endpoints. It can be expected that priorities will need to be updated and redefined by professional societies frequently, as new technologies emerge. The medical community and professional societies should lead the way in defining the research agendas for AI platforms including the clinical evidence base required for their validation, adoption into clinical practice and continuous appraisal, while ensuring that patient priorities, human factors and real-world evidence are prioritised[93].

Priorities for research on HAII in GI endoscopy should include factors predicting individual clinician variations in utility of AI and the effect of AI use on trainees’ development of core competencies for endoscopy[94]. A HAII focus in platform development may give rise to AI that learns how best to interact with each clinician based on their performance and use style, and adapts accordingly. Complementarity may be enhanced by prioritising study of human interaction with novel AI platforms that can perform tasks at which human clinicians are poor, e.g. measurement of polyps or other pathology, or measurement/estimation of the percentage of the stomach/colon visualised during endoscopy[95].

HAII will be a key determinant of the success or failure of individual applications of AI. It is therefore essential to optimise interface elements, as clinician frustration with poorly-designed platforms now may have a negative impact on later engagement and uptake[96]. The rapid development and implementation of AI platforms in GI endoscopy and elsewhere in medicine has been performed while studying mainly technical outcomes in idealised settings. This trend of adopting a technology-first approach expects clinicians and patients to adapt to the AI platforms, and risks taking insufficient account of human preferences and cognitive biases[50]. Reorienting the focus toward development of human-centred AI and incorporating the study of human interaction at each stage of a new platform’s development, while aligning to appropriate regulation and governance, may allow creation of AI that is more user-friendly, more effective, safer and better value[90].

| 1. | Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism. 2017;69S:S36-S40. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 728] [Cited by in RCA: 926] [Article Influence: 102.9] [Reference Citation Analysis (1)] |

| 2. | Lee P, Bubeck S, Petro J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N Engl J Med. 2023;388:1233-1239. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 973] [Cited by in RCA: 840] [Article Influence: 280.0] [Reference Citation Analysis (0)] |

| 3. | Thirunavukarasu AJ, Ting DSJ, Elangovan K, Gutierrez L, Tan TF, Ting DSW. Large language models in medicine. Nat Med. 2023;29:1930-1940. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 7] [Cited by in RCA: 1282] [Article Influence: 427.3] [Reference Citation Analysis (0)] |

| 4. | Chan HP, Hadjiiski LM, Samala RK. Computer-aided diagnosis in the era of deep learning. Med Phys. 2020;47:e218-e227. [RCA] [PubMed] [DOI] [Full Text] [Cited by in Crossref: 49] [Cited by in RCA: 141] [Article Influence: 28.2] [Reference Citation Analysis (0)] |

| 5. | Wee CK, Zhou X, Sun R, Gururajan R, Tao X, Li Y, Wee N. Triaging Medical Referrals Based on Clinical Prioritisation Criteria Using Machine Learning Techniques. Int J Environ Res Public Health. 2022;19. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 10] [Cited by in RCA: 8] [Article Influence: 2.0] [Reference Citation Analysis (0)] |

| 6. | Huang B, Huang H, Zhang S, Zhang D, Shi Q, Liu J, Guo J. Artificial intelligence in pancreatic cancer. Theranostics. 2022;12:6931-6954. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 46] [Cited by in RCA: 67] [Article Influence: 16.8] [Reference Citation Analysis (0)] |

| 7. | Yamashita R, Nishio M, Do RKG, Togashi K. Convolutional neural networks: an overview and application in radiology. Insights Imaging. 2018;9:611-629. [RCA] [PubMed] [DOI] [Full Text] [Full Text (PDF)] [Cited by in Crossref: 1202] [Cited by in RCA: 1287] [Article Influence: 160.9] [Reference Citation Analysis (0)] |

| 8. | Chang WY, Huang A, Yang CY, Lee CH, Chen YC, Wu TY, Chen GS. Computer-aided diagnosis of skin lesions using conventional digital photography: a reliability and feasibility study. PLoS One. 2013;8:e76212. |

| 9. | Vasilakos AV, Tang Y, Yao Y. Neural networks for computer-aided diagnosis in medicine: a review. Neurocomputing. 2016;216:700-708. |

| 10. | Sinonquel P, Eelbode T, Bossuyt P, Maes F, Bisschops R. Artificial intelligence and its impact on quality improvement in upper and lower gastrointestinal endoscopy. Dig Endosc. 2021;33:242-253. |

| 11. | Repici A, Badalamenti M, Maselli R, Correale L, Radaelli F, Rondonotti E, Ferrara E, Spadaccini M, Alkandari A, Fugazza A, Anderloni A, Galtieri PA, Pellegatta G, Carrara S, Di Leo M, Craviotto V, Lamonaca L, Lorenzetti R, Andrealli A, Antonelli G, Wallace M, Sharma P, Rosch T, Hassan C. Efficacy of Real-Time Computer-Aided Detection of Colorectal Neoplasia in a Randomized Trial. Gastroenterology. 2020;159:512-520.e7. |

| 12. | Mori Y, Kudo SE, Berzin TM, Misawa M, Takeda K. Computer-aided diagnosis for colonoscopy. Endoscopy. 2017;49:813-819. |

| 13. | Corley DA, Jensen CD, Marks AR, Zhao WK, Lee JK, Doubeni CA, Zauber AG, de Boer J, Fireman BH, Schottinger JE, Quinn VP, Ghai NR, Levin TR, Quesenberry CP. Adenoma detection rate and risk of colorectal cancer and death. N Engl J Med. 2014;370:1298-1306. |

| 14. | Rex DK, Kahi C, O'Brien M, Levin TR, Pohl H, Rastogi A, Burgart L, Imperiale T, Ladabaum U, Cohen J, Lieberman DA. The American Society for Gastrointestinal Endoscopy PIVI (Preservation and Incorporation of Valuable Endoscopic Innovations) on real-time endoscopic assessment of the histology of diminutive colorectal polyps. Gastrointest Endosc. 2011;73:419-422. |

| 15. | Tokat M, van Tilburg L, Koch AD, Spaander MCW. Artificial Intelligence in Upper Gastrointestinal Endoscopy. Dig Dis. 2022;40:395-408. |

| 16. | Marya NB, Powers PD, Petersen BT, Law R, Storm A, Abusaleh RR, Rau P, Stead C, Levy MJ, Martin J, Vargas EJ, Abu Dayyeh BK, Chandrasekhara V. Identification of patients with malignant biliary strictures using a cholangioscopy-based deep learning artificial intelligence (with video). Gastrointest Endosc. 2023;97:268-278.e1. |

| 17. | Dray X, Iakovidis D, Houdeville C, Jover R, Diamantis D, Histace A, Koulaouzidis A. Artificial intelligence in small bowel capsule endoscopy - current status, challenges and future promise. J Gastroenterol Hepatol. 2021;36:12-19. |

| 18. | Kudo SE, Ichimasa K, Villard B, Mori Y, Misawa M, Saito S, Hotta K, Saito Y, Matsuda T, Yamada K, Mitani T, Ohtsuka K, Chino A, Ide D, Imai K, Kishida Y, Nakamura K, Saiki Y, Tanaka M, Hoteya S, Yamashita S, Kinugasa Y, Fukuda M, Kudo T, Miyachi H, Ishida F, Itoh H, Oda M, Mori K. Artificial Intelligence System to Determine Risk of T1 Colorectal Cancer Metastasis to Lymph Node. Gastroenterology. 2021;160:1075-1084.e2. |

| 19. | Zhu Y, Zhang DF, Wu HL, Fu PY, Feng L, Zhuang K, Geng ZH, Li KK, Zhang XH, Zhu BQ, Qin WZ, Lin SL, Zhang Z, Chen TY, Huang Y, Xu XY, Liu JZ, Wang S, Zhang W, Li QL, Zhou PH. Improving bowel preparation for colonoscopy with a smartphone application driven by artificial intelligence. NPJ Digit Med. 2023;6:41. |

| 20. | Hassan C, Spadaccini M, Iannone A, Maselli R, Jovani M, Chandrasekar VT, Antonelli G, Yu H, Areia M, Dinis-Ribeiro M, Bhandari P, Sharma P, Rex DK, Rösch T, Wallace M, Repici A. Performance of artificial intelligence in colonoscopy for adenoma and polyp detection: a systematic review and meta-analysis. Gastrointest Endosc. 2021;93:77-85.e6. |

| 21. | Hassan C, Spadaccini M, Mori Y, Foroutan F, Facciorusso A, Gkolfakis P, Tziatzios G, Triantafyllou K, Antonelli G, Khalaf K, Rizkala T, Vandvik PO, Fugazza A, Rondonotti E, Glissen-Brown JR, Kamba S, Maida M, Correale L, Bhandari P, Jover R, Sharma P, Rex DK, Repici A. Real-Time Computer-Aided Detection of Colorectal Neoplasia During Colonoscopy: A Systematic Review and Meta-analysis. Ann Intern Med. 2023;176:1209-1220. |

| 22. | Ahmad A, Wilson A, Haycock A, Humphries A, Monahan K, Suzuki N, Thomas-Gibson S, Vance M, Bassett P, Thiruvilangam K, Dhillon A, Saunders BP. Evaluation of a real-time computer-aided polyp detection system during screening colonoscopy: AI-DETECT study. Endoscopy. 2023;55:313-319. |

| 23. | Ladabaum U, Shepard J, Weng Y, Desai M, Singer SJ, Mannalithara A. Computer-aided Detection of Polyps Does Not Improve Colonoscopist Performance in a Pragmatic Implementation Trial. Gastroenterology. 2023;164:481-483.e6. |

| 24. | Sutton RA, Sharma P. Overcoming barriers to implementation of artificial intelligence in gastroenterology. Best Pract Res Clin Gastroenterol. 2021;52-53:101732. |

| 25. | Sujan M, Furniss D, Grundy K, Grundy H, Nelson D, Elliott M, White S, Habli I, Reynolds N. Human factors challenges for the safe use of artificial intelligence in patient care. BMJ Health Care Inform. 2019;26. |

| 26. | Nishikawa RM, Bae KT. Importance of Better Human-Computer Interaction in the Era of Deep Learning: Mammography Computer-Aided Diagnosis as a Use Case. J Am Coll Radiol. 2018;15:49-52. |

| 27. | Elmore JG, Lee CI. Artificial Intelligence in Medical Imaging-Learning From Past Mistakes in Mammography. JAMA Health Forum. 2022;3:e215207. |

| 28. | Gaube S, Suresh H, Raue M, Merritt A, Berkowitz SJ, Lermer E, Coughlin JF, Guttag JV, Colak E, Ghassemi M. Do as AI say: susceptibility in deployment of clinical decision-aids. NPJ Digit Med. 2021;4:31. |

| 29. | Dix A. Human–computer interaction, foundations and new paradigms. J Vis Lang Comput. 2017;42:122-134. |

| 30. | Karray F, Alemzadeh M, Saleh JA, Arab MN. Human-Computer Interaction: Overview on State of the Art. Int J Smart Sen Intell Systems. 2008;1:137-159. |

| 31. | Carroll JM, Olson JR. Chapter 2 - Mental Models in Human-Computer Interaction. In: Helander M, editor. Handbook of Human-Computer Interaction. Amsterdam: North-Holland, 1988: 45-65. |

| 32. | Cutillo CM, Sharma KR, Foschini L, Kundu S, Mackintosh M, Mandl KD; MI in Healthcare Workshop Working Group. Machine intelligence in healthcare-perspectives on trustworthiness, explainability, usability, and transparency. NPJ Digit Med. 2020;3:47. |

| 33. | Nass C, Steuer J, Tauber ER. Computers are social actors. Proceedings of the SIGCHI conference on Human factors in computing systems; 1994 April 24-28; Boston, USA. Boston: Human factors in computing systems, 1994: 72-78. |

| 34. | Heyselaar E. The CASA theory no longer applies to desktop computers. Sci Rep. 2023;13:19693. |

| 35. | Winograd T. Shifting viewpoints: Artificial intelligence and human–computer interaction. Artif Intell. 2006;170:1256-1258. |

| 36. | Sundar SS. Rise of machine agency: A framework for studying the psychology of human–AI interaction (HAII). J Comput-Mediat Commun. 2020;25:74-88. |

| 37. | Cherubini A, Dinh NN. A Review of the Technology, Training, and Assessment Methods for the First Real-Time AI-Enhanced Medical Device for Endoscopy. Bioengineering. 2023;10:404. |

| 38. | Xu H, Shuttleworth KMJ. Medical artificial intelligence and the black box problem – a view based on the ethical principle of “Do No Harm”. Intell Med. 2023;. |

| 39. | Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science. 2019;366:447-453. |

| 40. | Hendrycks D, Gimpel K. A baseline for detecting misclassified and out-of-distribution examples in neural networks. 2016 Preprint. Available from: arXiv:1610.02136. |

| 41. | Uche-Anya E, Anyane-Yeboa A, Berzin TM, Ghassemi M, May FP. Artificial intelligence in gastroenterology and hepatology: how to advance clinical practice while ensuring health equity. Gut. 2022;71:1909-1915. |

| 42. | van der Sommen F. Gastroenterology needs its own ImageNet. J Med Artif Intell. 2019;2:23. |

| 43. | Wang S, Ouyang X, Liu T, Wang Q, Shen D. Follow My Eye: Using Gaze to Supervise Computer-Aided Diagnosis. IEEE Trans Med Imaging. 2022;41:1688-1698. |

| 44. | Lyell D, Coiera E. Automation bias and verification complexity: a systematic review. J Am Med Inform Assoc. 2017;24:423-431. |

| 45. | Roumans R. Appropriate Trust in AI: The Influence of Presenting Different Computer-Aided Diagnosis Variations on the Advice Utilization, Decision Certainty, and Diagnostic Accuracy of Medical Doctors. Department of Industrial Engineering. Eindhoven: Eindhoven University of Technology, 2022: 112. Available from: https://research.tue.nl/en/studentTheses/appropriate-trust-in-ai. |

| 46. | Reverberi C, Rigon T, Solari A, Hassan C, Cherubini P; GI Genius CADx Study Group, Cherubini A. Experimental evidence of effective human-AI collaboration in medical decision-making. Sci Rep. 2022;12:14952. |

| 47. | Fraser AG, Biasin E, Bijnens B, Bruining N, Caiani EG, Cobbaert K, Davies RH, Gilbert SH, Hovestadt L, Kamenjasevic E, Kwade Z, McGauran G, O'Connor G, Vasey B, Rademakers FE. Artificial intelligence in medical device software and high-risk medical devices - a review of definitions, expert recommendations and regulatory initiatives. Expert Rev Med Devices. 2023;20:467-491. |

| 48. | Gilbert S, Anderson S, Daumer M, Li P, Melvin T, Williams R. Learning From Experience and Finding the Right Balance in the Governance of Artificial Intelligence and Digital Health Technologies. J Med Internet Res. 2023;25:e43682. |

| 49. | Gilbert S, Harvey H, Melvin T, Vollebregt E, Wicks P. Large language model AI chatbots require approval as medical devices. Nat Med. 2023;29:2396-2398. |

| 50. | Sujan M, Pool R, Salmon P. Eight human factors and ergonomics principles for healthcare artificial intelligence. BMJ Health Care Inform. 2022;29. |

| 51. | Rastogi C, Zhang Y, Wei D, Varshney KR, Dhurandhar A, Tomsett R. Deciding Fast and Slow: The Role of Cognitive Biases in AI-assisted Decision-making. Proc ACM Hum-Comput Interact. 2022;6:Article 83. |

| 52. | Goddard K, Roudsari A, Wyatt JC. Automation bias: a systematic review of frequency, effect mediators, and mitigators. J Am Med Inform Assoc. 2012;19:121-127. |

| 53. | Bahner JE, Hüper A-D, Manzey D. Misuse of automated decision aids: Complacency, automation bias and the impact of training experience. Int J Hum-Comput Stud. 2008;66:688-699. |

| 54. | Wickens CD, Clegg BA, Vieane AZ, Sebok AL. Complacency and Automation Bias in the Use of Imperfect Automation. Hum Factors. 2015;57:728-739. |

| 55. | Burton JW, Stein MK, Jensen TB. A systematic review of algorithm aversion in augmented decision making. J Behav Decis Mak. 2020;33:220-239. |

| 56. | Dratsch T, Chen X, Rezazade Mehrizi M, Kloeckner R, Mähringer-Kunz A, Püsken M, Baeßler B, Sauer S, Maintz D, Pinto Dos Santos D. Automation Bias in Mammography: The Impact of Artificial Intelligence BI-RADS Suggestions on Reader Performance. Radiology. 2023;307:e222176. |

| 57. | Alberdi E, Povykalo A, Strigini L, Ayton P. Effects of incorrect computer-aided detection (CAD) output on human decision-making in mammography. Acad Radiol. 2004;11:909-918. |

| 58. | Tversky A, Kahneman D. Judgment under Uncertainty: Heuristics and Biases. Science. 1974;185:1124-1131. |

| 59. | Lieder F, Griffiths TL, M Huys QJ, Goodman ND. The anchoring bias reflects rational use of cognitive resources. Psychon Bull Rev. 2018;25:322-349. |

| 60. | Berner ES, Maisiak RS, Heudebert GR, Young KR Jr. Clinician performance and prominence of diagnoses displayed by a clinical diagnostic decision support system. AMIA Annu Symp Proc. 2003;2003:76-80. |

| 61. | Sujan M, Furniss D, Hawkins RD, Habli I. Human factors of using artificial intelligence in healthcare: challenges that stretch across industries. Proc Saf-Crit Systems Symp 2020. Available from: https://eprints.whiterose.ac.uk/159105. |

| 62. | Hsieh YH, Tang CP, Tseng CW, Lin TL, Leung FW. Computer-Aided Detection False Positives in Colonoscopy. Diagnostics (Basel). 2021;11. |

| 63. | Hassan C, Badalamenti M, Maselli R, Correale L, Iannone A, Radaelli F, Rondonotti E, Ferrara E, Spadaccini M, Alkandari A, Fugazza A, Anderloni A, Galtieri PA, Pellegatta G, Carrara S, Di Leo M, Craviotto V, Lamonaca L, Lorenzetti R, Andrealli A, Antonelli G, Wallace M, Sharma P, Rösch T, Repici A. Computer-aided detection-assisted colonoscopy: classification and relevance of false positives. Gastrointest Endosc. 2020;92:900-904.e4. |

| 64. | Wang P, Berzin TM, Glissen Brown JR, Bharadwaj S, Becq A, Xiao X, Liu P, Li L, Song Y, Zhang D, Li Y, Xu G, Tu M, Liu X. Real-time automatic detection system increases colonoscopic polyp and adenoma detection rates: a prospective randomised controlled study. Gut. 2019;68:1813-1819. |

| 65. | Ruskin KJ, Hueske-Kraus D. Alarm fatigue: impacts on patient safety. Curr Opin Anaesthesiol. 2015;28:685-690. |

| 66. | Lavine RA, Sibert JL, Gokturk M, Dickens B. Eye-tracking measures and human performance in a vigilance task. Aviat Space Environ Med. 2002;73:367-372. |

| 67. | Wang H, Zhu Y, Qin W, Zhang Y, Zhou P, Li Q, Wang S, Song Z. EndoBoost: a plug-and-play module for false positive suppression during computer-aided polyp detection in real-world colonoscopy (with dataset). 2022 Preprint. Available from: arXiv:2212.12204. |

| 68. | Cabitza F, Campagner A, Simone C. The need to move away from agential-AI: Empirical investigations, useful concepts and open issues. Int J Hum-Comput Stud. 2021;155:102696. |

| 69. | Chong L, Zhang G, Goucher-Lambert K, Kotovsky K, Cagan J. Human confidence in artificial intelligence and in themselves: The evolution and impact of confidence on adoption of AI advice. Comput Hum Behav. 2022;127:107018. |

| 70. | Dietvorst BJ, Simmons JP, Massey C. Algorithm aversion: people erroneously avoid algorithms after seeing them err. J Exp Psychol Gen. 2015;144:114-126. |

| 71. | Castelo N, Bos MW, Lehmann DR. Task-dependent algorithm aversion. J Mark Res. 2019;56:809-825. |

| 72. | Jussupow E, Benbasat I, Heinzl A. Why are we averse towards algorithms? A comprehensive literature review on algorithm aversion. In Proceedings of the 28th European Conference on Information Systems (ECIS), An Online AIS Conference; 2020 Jun 15-17; Marrakesh, Morocco. Available from: https://aisel.aisnet.org/ecis2020_rp/168/. |

| 73. | Htet H, Siggens K, Saiko M, Maeda N, Longcroft-Wheaton G, Subramaniam S, Alkandari A, Basford P, Chedgy F, Kandiah K. The importance of human-machine interaction in detecting Barrett's neoplasia using a novel deep neural network in the evolving era of artificial intelligence. Gastrointest Endosc. 2023;97:AB771. |

| 74. | Biscaglia G, Cocomazzi F, Gentile M, Loconte I, Mileti A, Paolillo R, Marra A, Castellana S, Mazza T, Di Leo A, Perri F. Real-time, computer-aided, detection-assisted colonoscopy eliminates differences in adenoma detection rate between trainee and experienced endoscopists. Endosc Int Open. 2022;10:E616-E621. |

| 75. | Cabitza F, Campagner A, Ronzio L, Cameli M, Mandoli GE, Pastore MC, Sconfienza LM, Folgado D, Barandas M, Gamboa H. Rams, hounds and white boxes: Investigating human-AI collaboration protocols in medical diagnosis. Artif Intell Med. 2023;138:102506. |

| 76. | Lami M, Singh H, Dilley JH, Ashraf H, Edmondon M, Orihuela-Espina F, Hoare J, Darzi A, Sodergren MH. Gaze patterns hold key to unlocking successful search strategies and increasing polyp detection rate in colonoscopy. Endoscopy. 2018;50:701-707. |

| 77. | Almansa C, Shahid MW, Heckman MG, Preissler S, Wallace MB. Association between visual gaze patterns and adenoma detection rate during colonoscopy: a preliminary investigation. Am J Gastroenterol. 2011;106:1070-1074. |

| 78. | Karamchandani U, Erridge S, Evans-Harvey K, Darzi A, Hoare J, Sodergren MH. Visual gaze patterns in trainee endoscopists - a novel assessment tool. Scand J Gastroenterol. 2022;57:1138-1146. |

| 79. | Sivananthan A, Ahmed J, Kogkas A, Mylonas G, Darzi A, Patel N. Eye tracking technology in endoscopy: Looking to the future. Dig Endosc. 2023;35:314-322. |

| 80. | Troya J, Fitting D, Brand M, Sudarevic B, Kather JN, Meining A, Hann A. The influence of computer-aided polyp detection systems on reaction time for polyp detection and eye gaze. Endoscopy. 2022;54:1009-1014. |

| 81. | Holden RJ, Karsh BT. The technology acceptance model: its past and its future in health care. J Biomed Inform. 2010;43:159-172. |

| 82. | Stevens AF, Stetson P. Theory of trust and acceptance of artificial intelligence technology (TrAAIT): An instrument to assess clinician trust and acceptance of artificial intelligence. J Biomed Inform. 2023;148:104550. |

| 83. | Fritsch SJ, Blankenheim A, Wahl A, Hetfeld P, Maassen O, Deffge S, Kunze J, Rossaint R, Riedel M, Marx G. Attitudes and perception of artificial intelligence in healthcare: A cross-sectional survey among patients. Digit Health. 2022;8:20552076221116772. |

| 84. | Said N, Potinteu AE, Brich I, Buder J, Schumm H, Huff M. An artificial intelligence perspective: How knowledge and confidence shape risk and benefit perception. Comput Hum Behav. 2023;149:107855. |

| 85. | Kader R, Baggaley RF, Hussein M, Ahmad OF, Patel N, Corbett G, Dolwani S, Stoyanov D, Lovat LB. Survey on the perceptions of UK gastroenterologists and endoscopists to artificial intelligence. Frontline Gastroenterol. 2022;13:423-429. |

| 86. | Kochhar GS, Carleton NM, Thakkar S. Assessing perspectives on artificial intelligence applications to gastroenterology. Gastrointest Endosc. 2021;93:971-975.e2. |

| 87. | Wadhwa V, Alagappan M, Gonzalez A, Gupta K, Brown JRG, Cohen J, Sawhney M, Pleskow D, Berzin TM. Physician sentiment toward artificial intelligence (AI) in colonoscopic practice: a survey of US gastroenterologists. Endosc Int Open. 2020;8:E1379-E1384. |

| 88. | Yokoi R, Eguchi Y, Fujita T, Nakayachi K. Artificial intelligence is trusted less than a doctor in medical treatment decisions: Influence of perceived care and value similarity. Int J Hum–Comput Interact. 2021;37:981-990. |

| 89. | Rodler S, Kopliku R, Ulrich D, Kaltenhauser A, Casuscelli J, Eismann L, Waidelich R, Buchner A, Butz A, Cacciamani GE, Stief CG, Westhofen T. Patients' Trust in Artificial Intelligence-based Decision-making for Localized Prostate Cancer: Results from a Prospective Trial. Eur Urol Focus. 2023;. |

| 90. | Decide-AI Steering Group. DECIDE-AI: new reporting guidelines to bridge the development-to-implementation gap in clinical artificial intelligence. Nat Med. 2021;27:186-187. |

| 91. | Ahmad OF, Mori Y, Misawa M, Kudo SE, Anderson JT, Bernal J, Berzin TM, Bisschops R, Byrne MF, Chen PJ, East JE, Eelbode T, Elson DS, Gurudu SR, Histace A, Karnes WE, Repici A, Singh R, Valdastri P, Wallace MB, Wang P, Stoyanov D, Lovat LB. Establishing key research questions for the implementation of artificial intelligence in colonoscopy: a modified Delphi method. Endoscopy. 2021;53:893-901. |

| 92. | Berzin TM, Parasa S, Wallace MB, Gross SA, Repici A, Sharma P. Position statement on priorities for artificial intelligence in GI endoscopy: a report by the ASGE Task Force. Gastrointest Endosc. 2020;92:951-959. |

| 93. | Schmitz R, Werner R, Repici A, Bisschops R, Meining A, Zornow M, Messmann H, Hassan C, Sharma P, Rösch T. Artificial intelligence in GI endoscopy: stumbling blocks, gold standards and the role of endoscopy societies. Gut. 2022;71:451-454. |

| 94. | Ekkelenkamp VE, Koch AD, de Man RA, Kuipers EJ. Training and competence assessment in GI endoscopy: a systematic review. Gut. 2016;65:607-615. |

| 95. | Sudarevic B, Sodmann P, Kafetzis I, Troya J, Lux TJ, Saßmannshausen Z, Herlod K, Schmidt SA, Brand M, Schöttker K, Zoller WG, Meining A, Hann A. Artificial intelligence-based polyp size measurement in gastrointestinal endoscopy using the auxiliary waterjet as a reference. Endoscopy. 2023;55:871-876. |

| 96. | van de Sande D, Van Genderen ME, Smit JM, Huiskens J, Visser JJ, Veen RER, van Unen E, Ba OH, Gommers D, Bommel JV. Developing, implementing and governing artificial intelligence in medicine: a step-by-step approach to prevent an artificial intelligence winter. BMJ Health Care Inform. 2022;29. |

Open-Access: This article is an open-access article that was selected by an in-house editor and fully peer-reviewed by external reviewers. It is distributed in accordance with the Creative Commons Attribution NonCommercial (CC BY-NC 4.0) license, which permits others to distribute, remix, adapt, build upon this work non-commercially, and license their derivative works on different terms, provided the original work is properly cited and the use is non-commercial. See: https://creativecommons.org/Licenses/by-nc/4.0/

Provenance and peer review: Invited article; Externally peer reviewed.

Peer-review model: Single blind

Specialty type: Gastroenterology and hepatology

Country/Territory of origin: Ireland

Peer-review report’s scientific quality classification

Grade A (Excellent): 0

Grade B (Very good): B

Grade C (Good): 0

Grade D (Fair): 0

Grade E (Poor): 0

P-Reviewer: Soreq L, United Kingdom; S-Editor: Liu H; L-Editor: A; P-Editor: Cai YX